Back in July, we introduced Agents for Amazon Bedrock in preview. Today, Agents for Amazon Bedrock is generally available.

Agents for Amazon Bedrock helps you accelerate generative artificial intelligence (AI) application development by orchestrating multistep tasks. Agents uses the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. They use the developer-provided instruction to create an orchestration plan and then carry out the plan by invoking company APIs and accessing knowledge bases using Retrieval Augmented Generation (RAG) to provide a final response to the end user. If you’re curious how this works, check out my previous posts on agents that include a primer on advanced reasoning and a primer on RAG.

Starting today, Agents for Amazon Bedrock also comes with enhanced capabilities that include improved control of the orchestration and better visibility into the chain of thought reasoning.

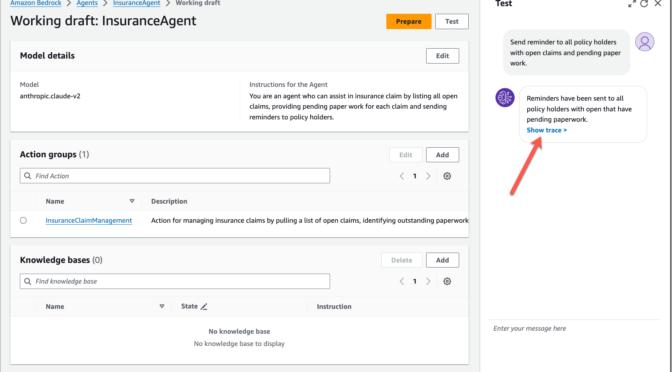

Behind the scenes, Agents for Amazon Bedrock automates the prompt engineering and orchestration of user-requested tasks, such as managing retail orders or processing insurance claims. An agent automatically builds the orchestration prompt and, if connected to knowledge bases, augments it with your company-specific information and invokes APIs to provide responses to the user in natural language.

As a developer, you can use the new trace capability to follow the reasoning that’s used as the plan is carried out. You can view the intermediate steps in the orchestration process and use this information to troubleshoot issues.

You can also access and modify the prompt that the agent automatically creates so you can further enhance the end-user experience. You can update this automatically created prompt (or prompt template) to help the FM enhance the orchestration and responses, giving you more control over the orchestration.

Let me show you how to view the reasoning steps and how to modify the prompt.

View reasoning steps

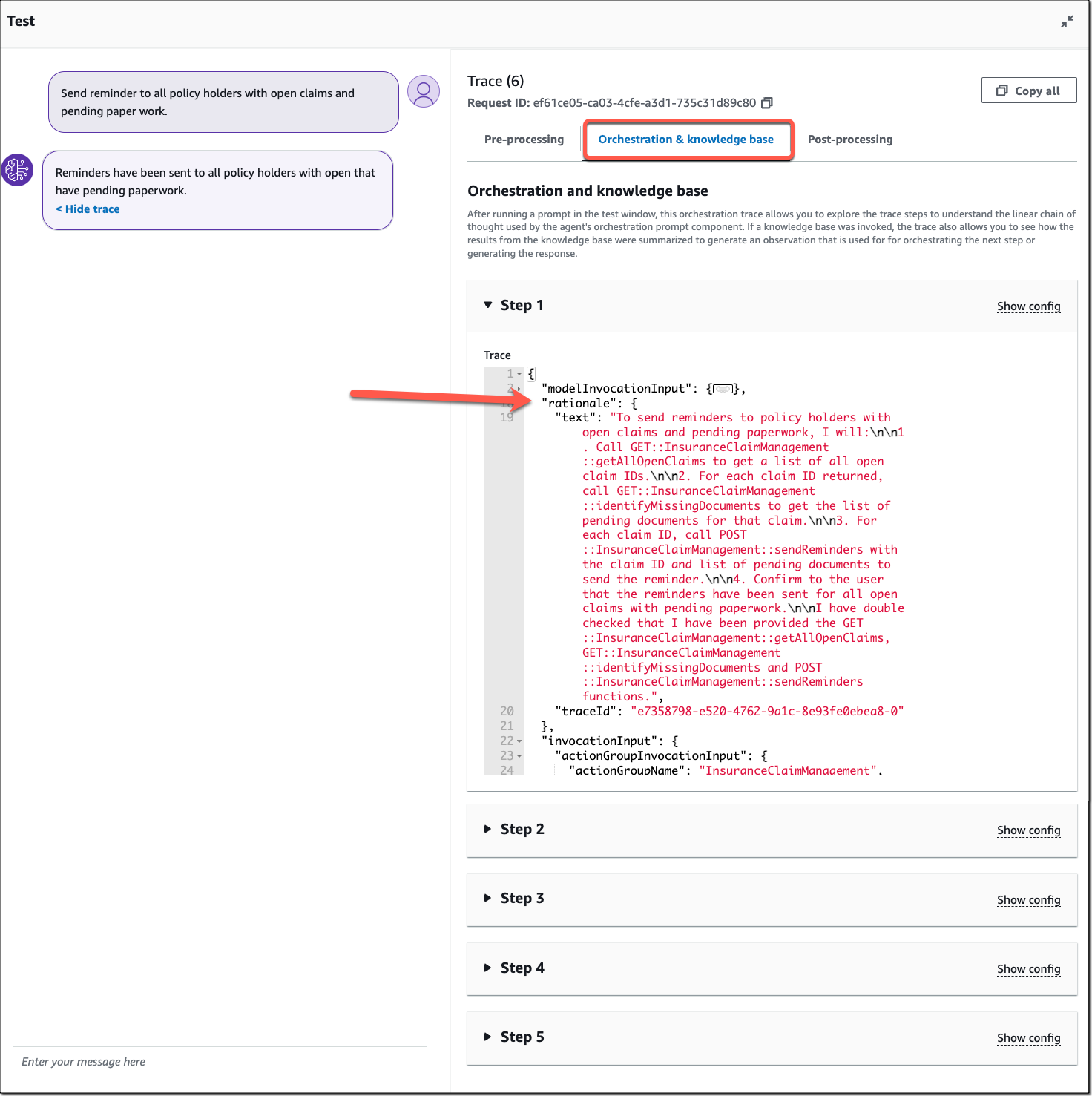

Traces gives you visibility into the agent’s reasoning, known as the chain of thought (CoT). You can use the CoT trace to see how the agent performs tasks step by step. The CoT prompt is based on a reasoning technique called ReAct (synergizing reasoning and acting). Check out the primer on advanced reasoning in my previous blog post to learn more about ReAct and the specific prompt structure.

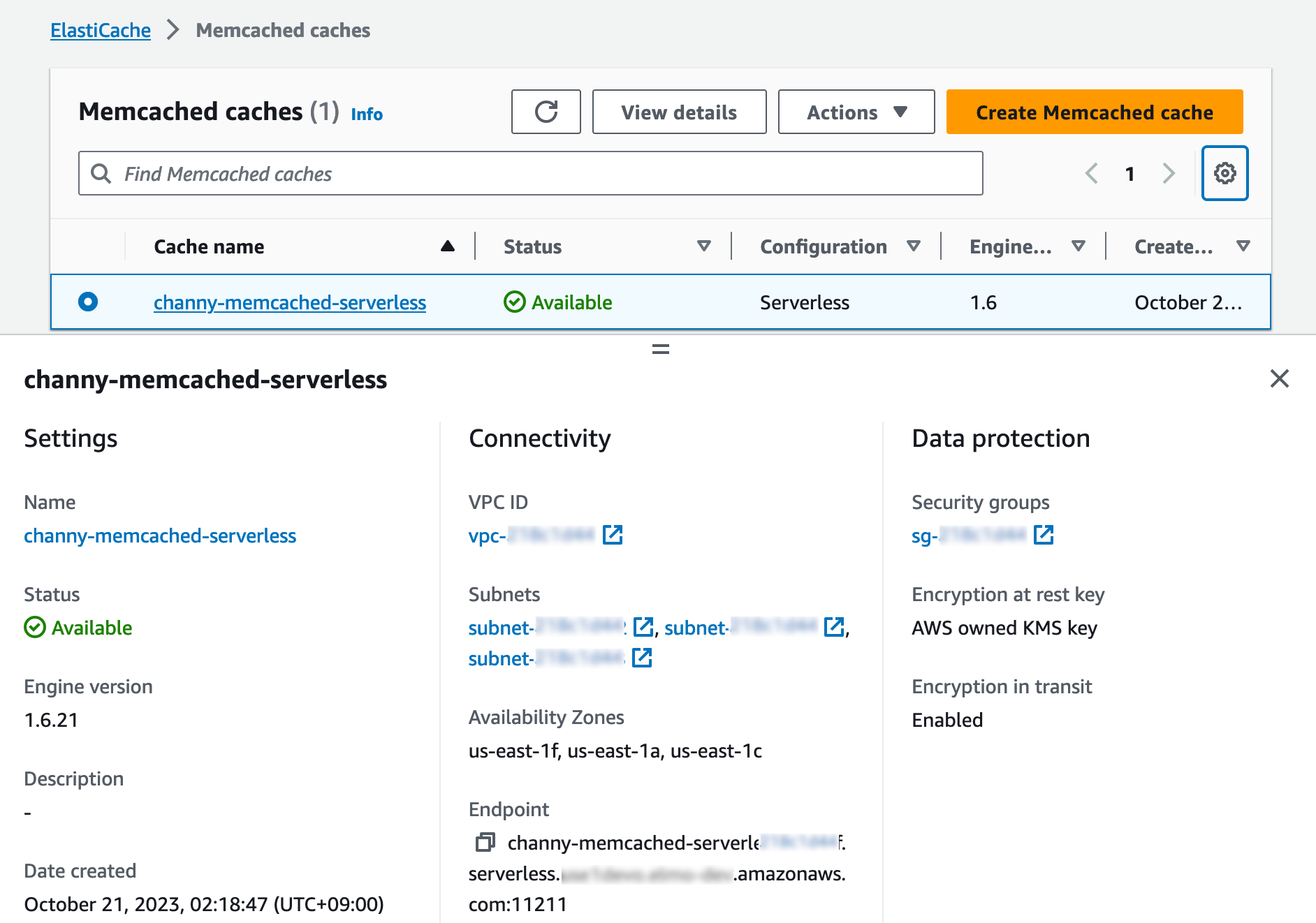

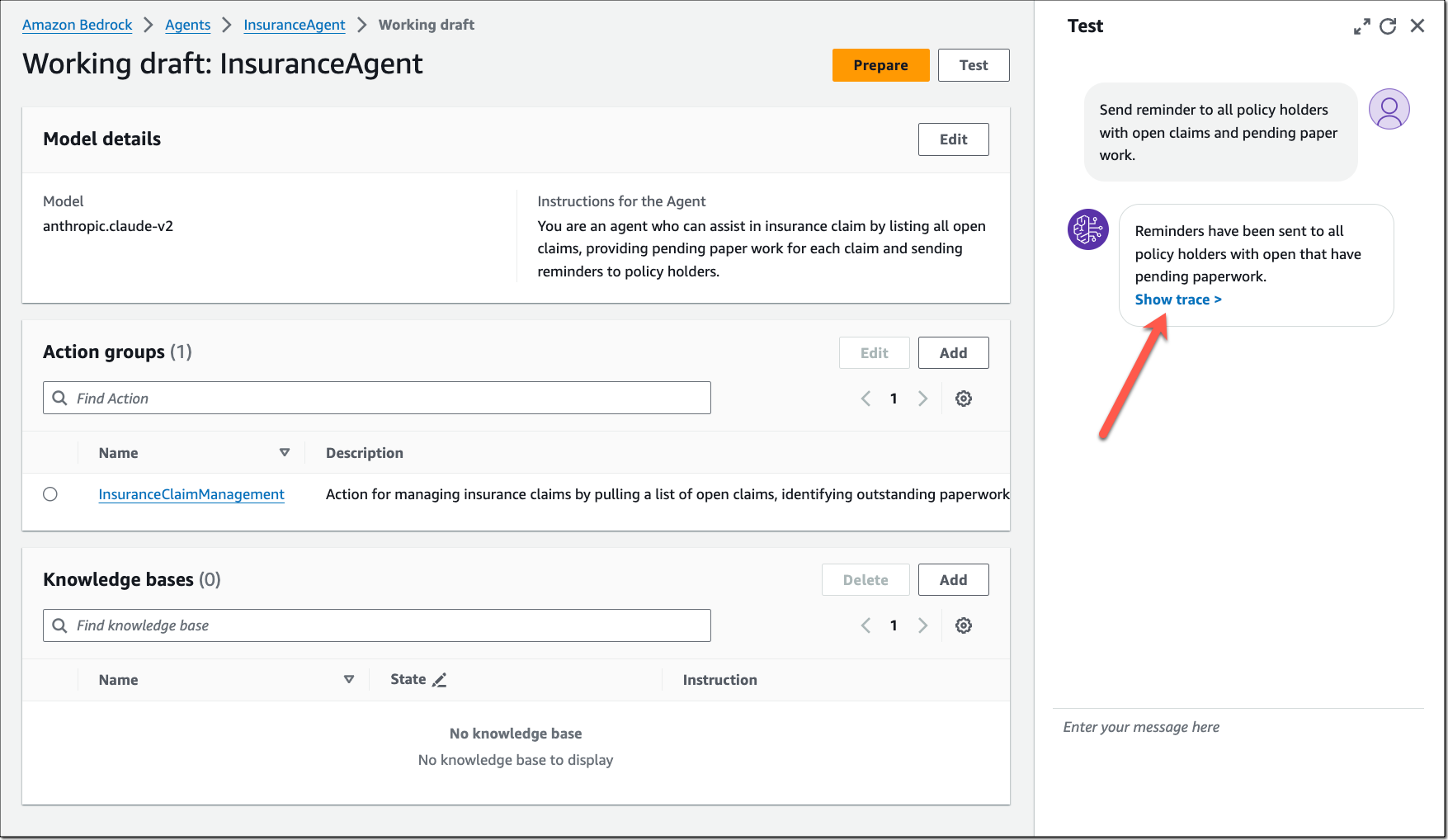

To get started, navigate to the Amazon Bedrock console and select the working draft of an existing agent. Then, select the Test button and enter a sample user request. In the agent’s response, select Show trace.

The CoT trace shows the agent’s reasoning step-by-step. Open each step to see the CoT details.

The enhanced visibility helps you understand the rationale used by the agent to complete the task. As a developer, you can use this information to refine the prompts, instructions, and action descriptions to adjust the agent’s actions and responses when iteratively testing and improving the user experience.

Modify agent-created prompts

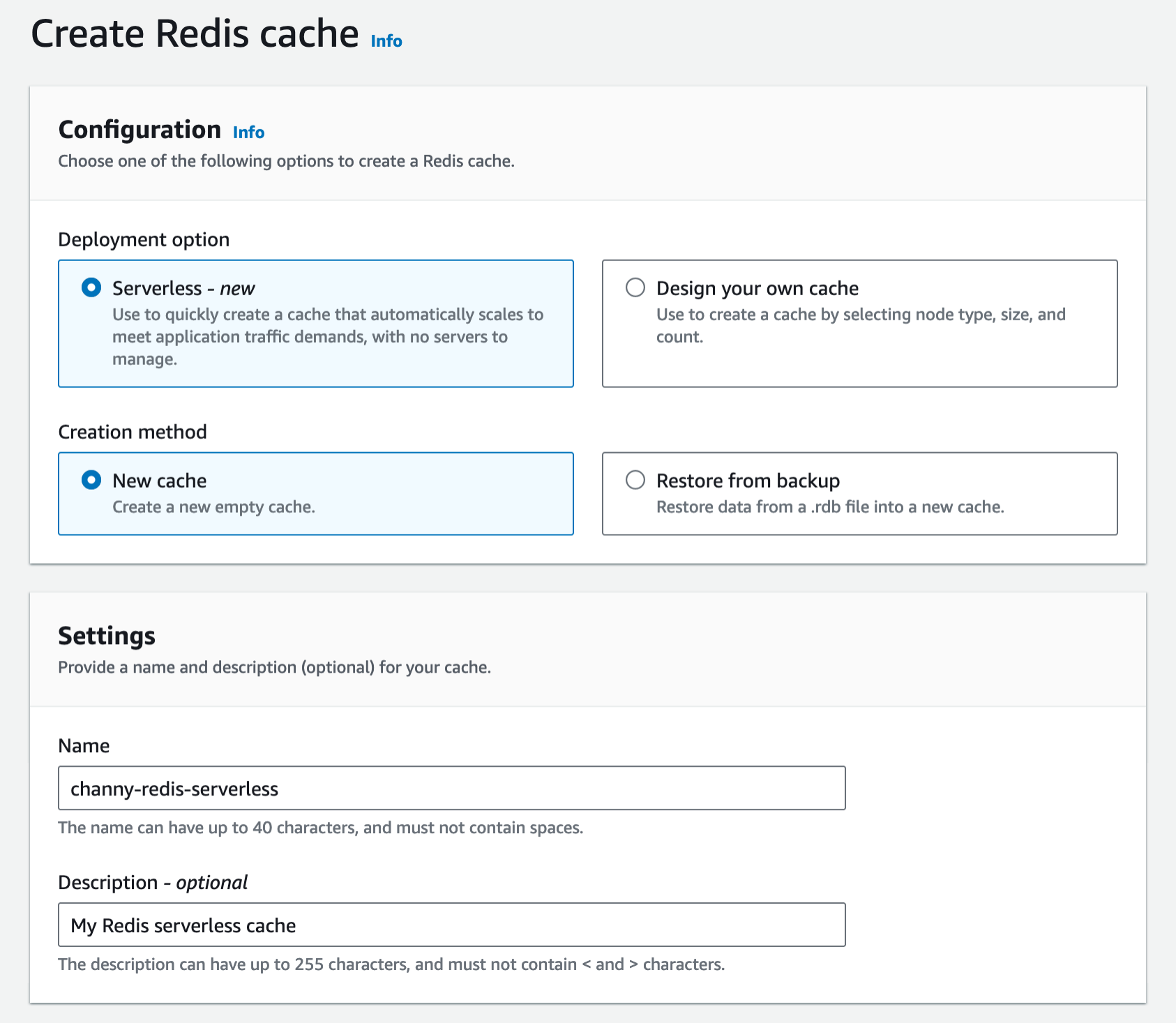

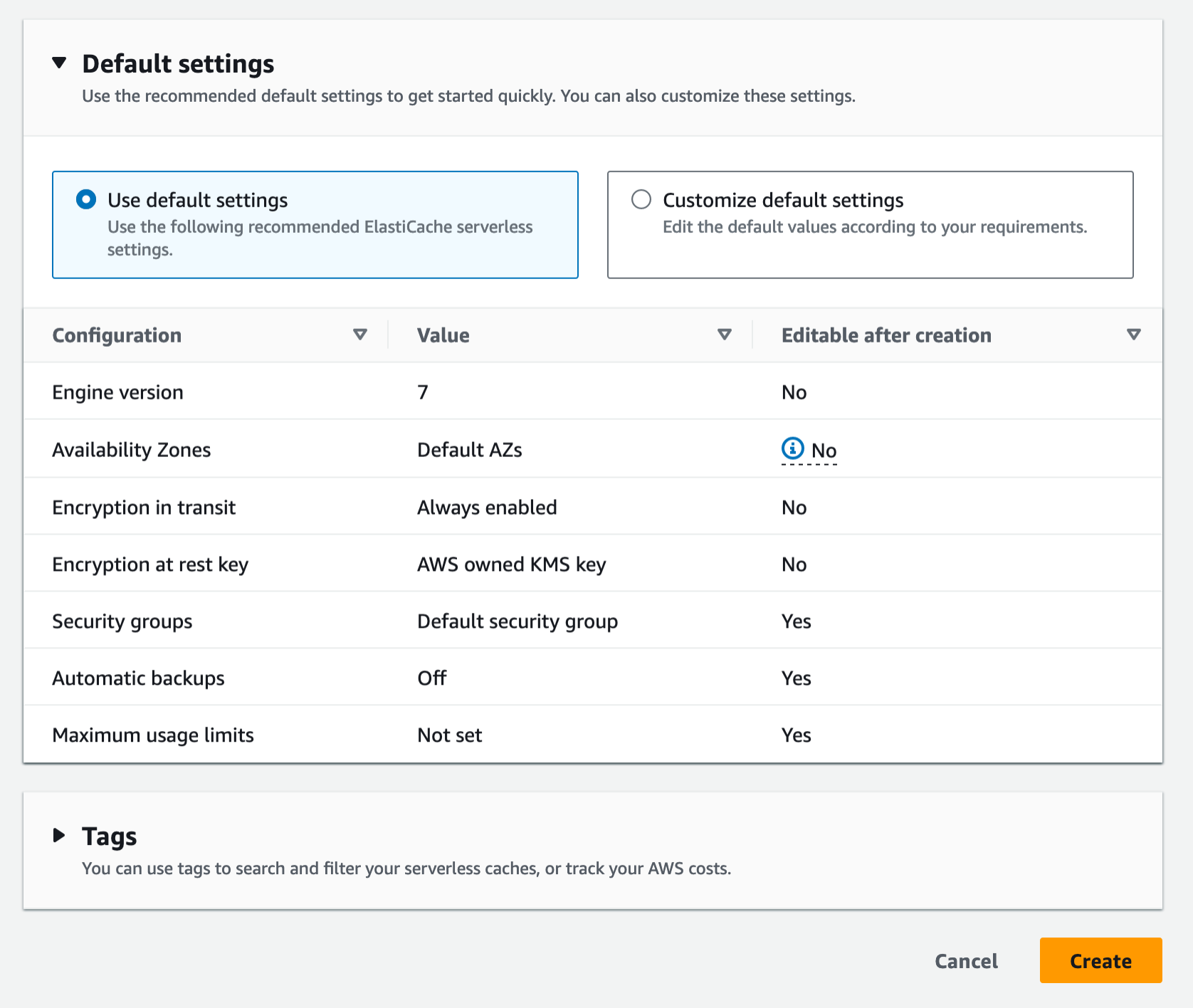

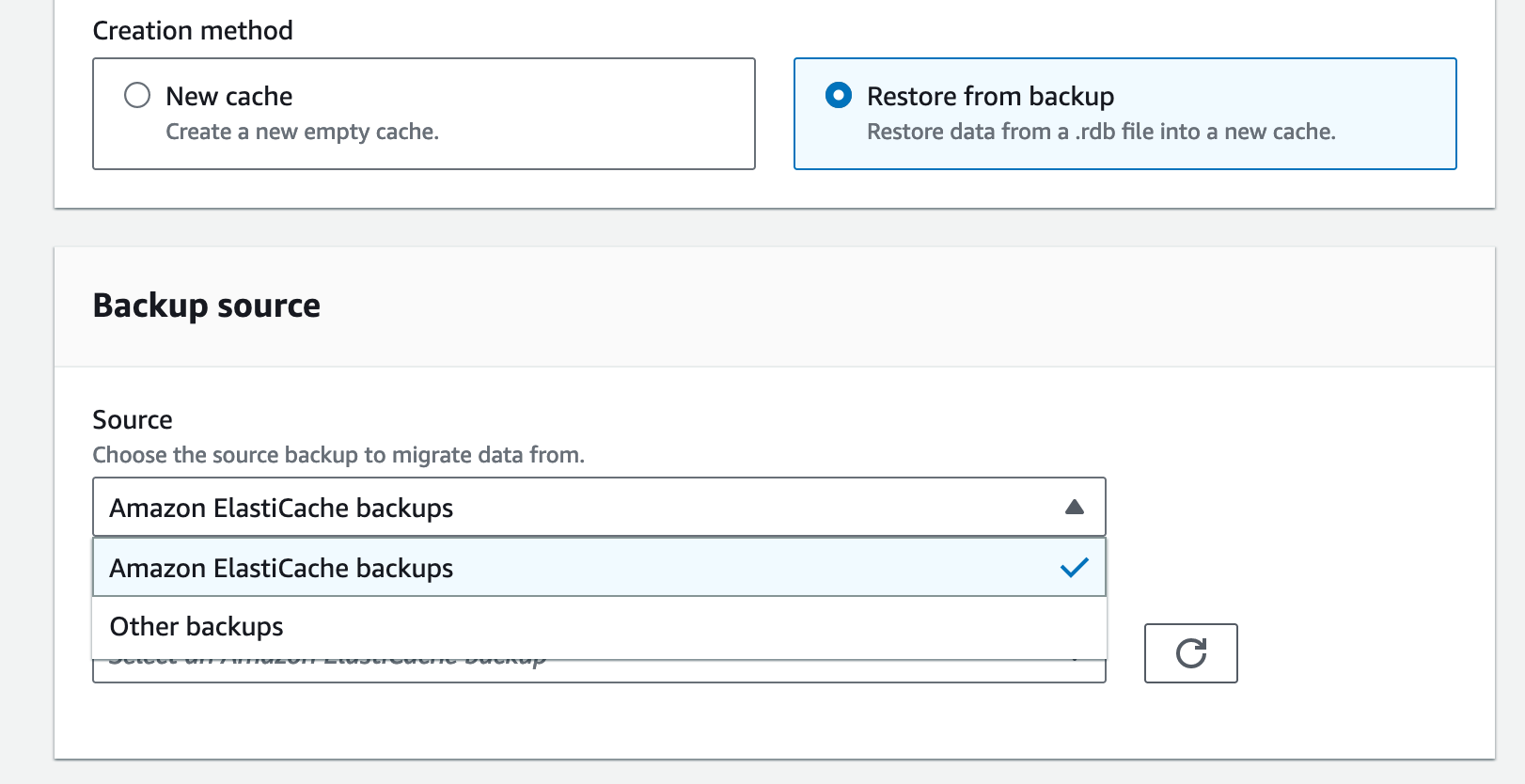

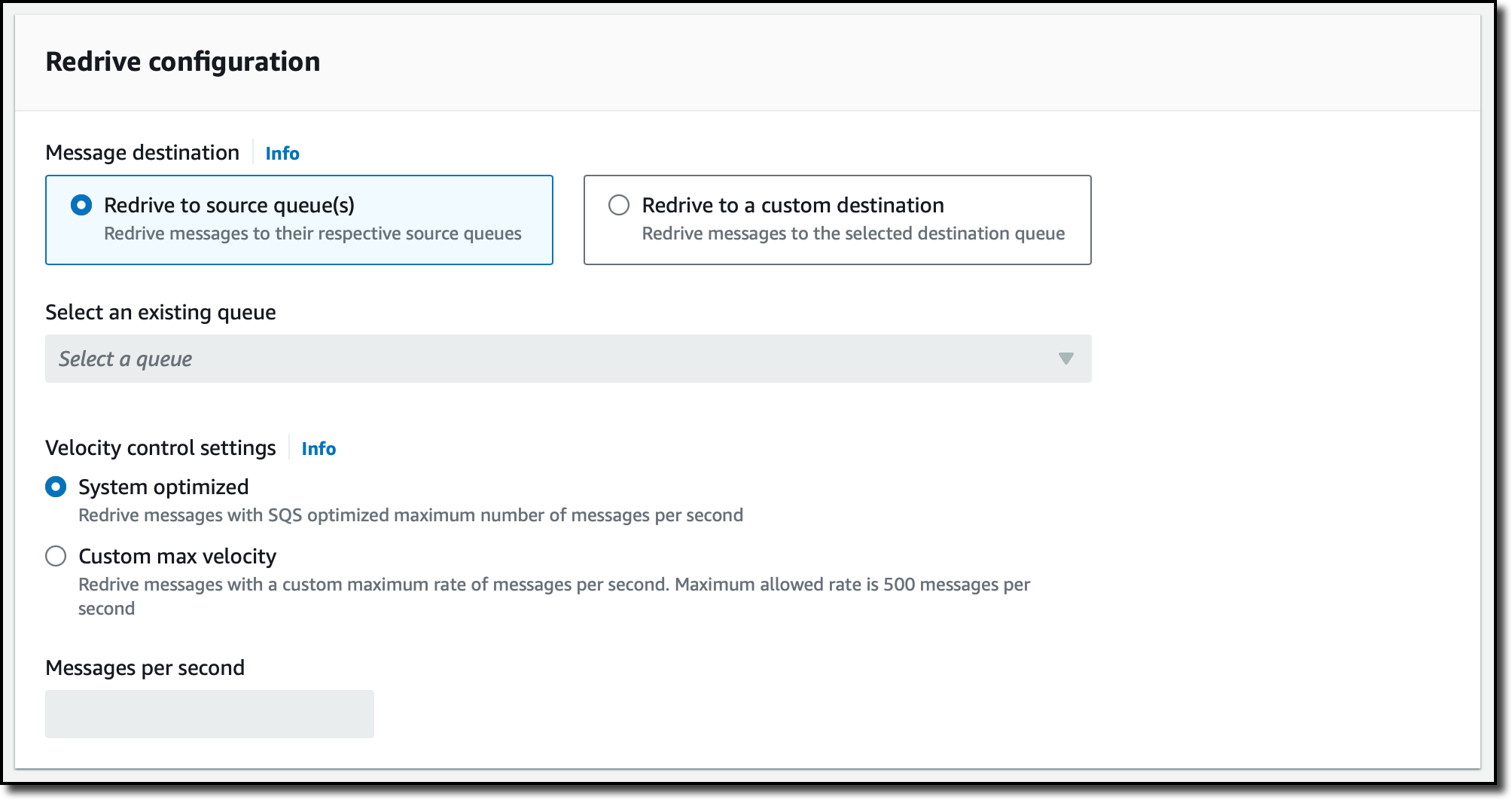

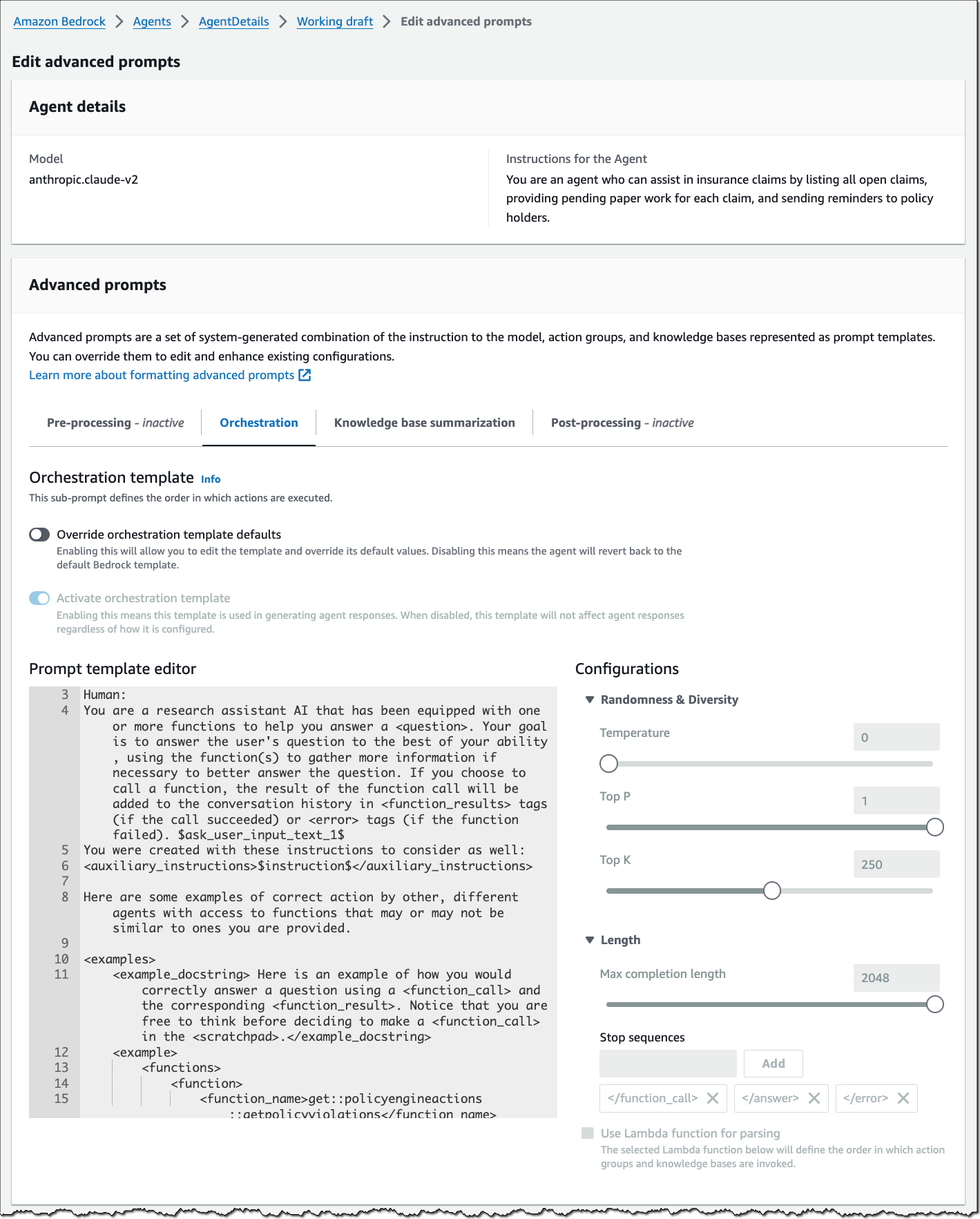

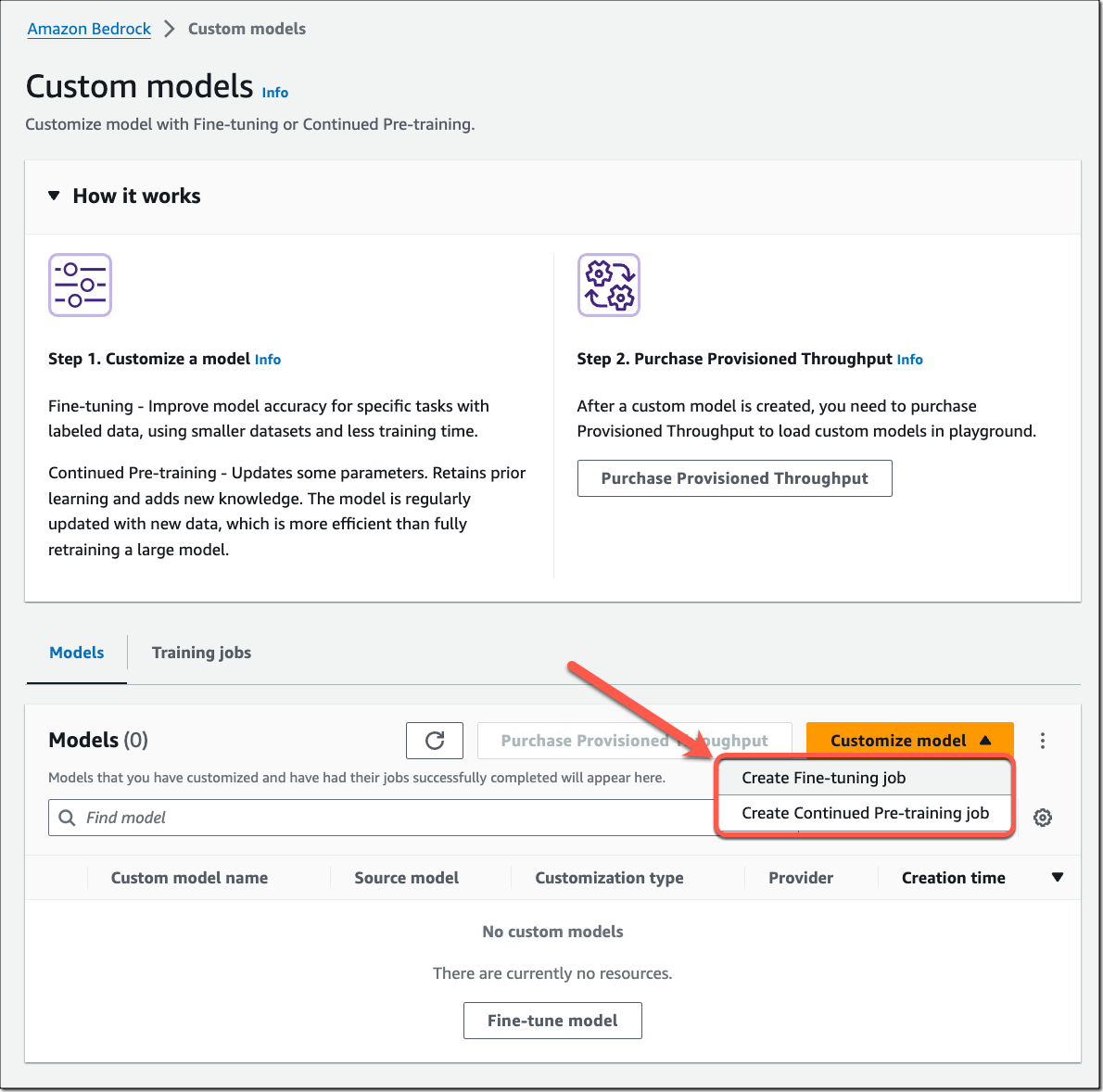

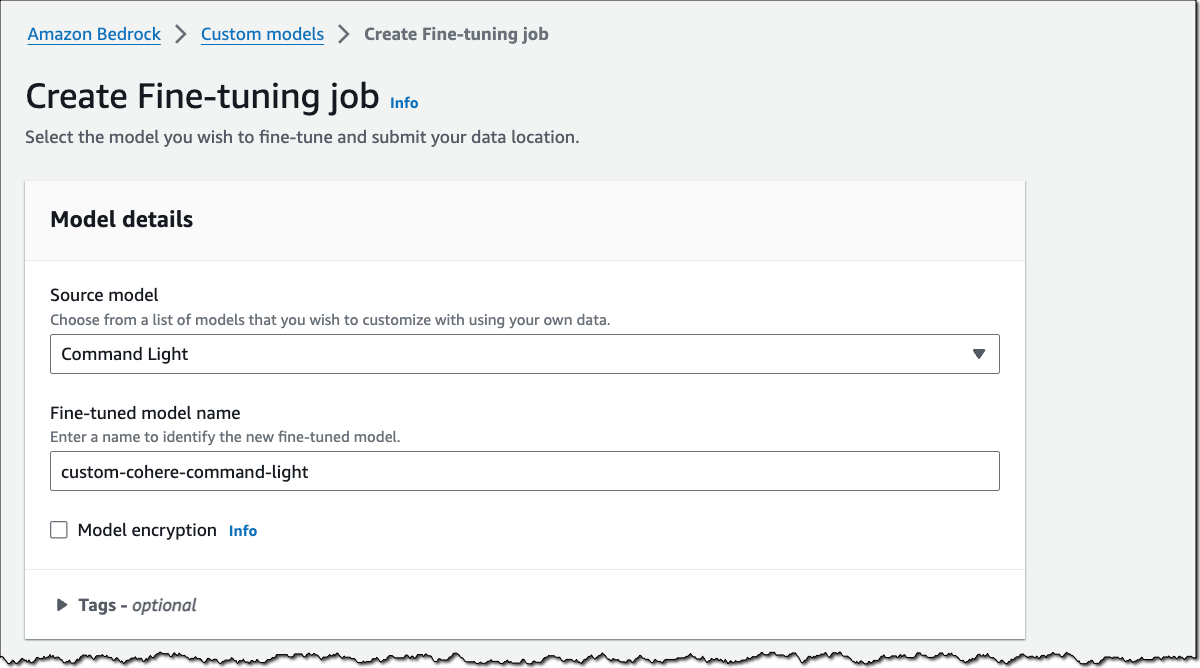

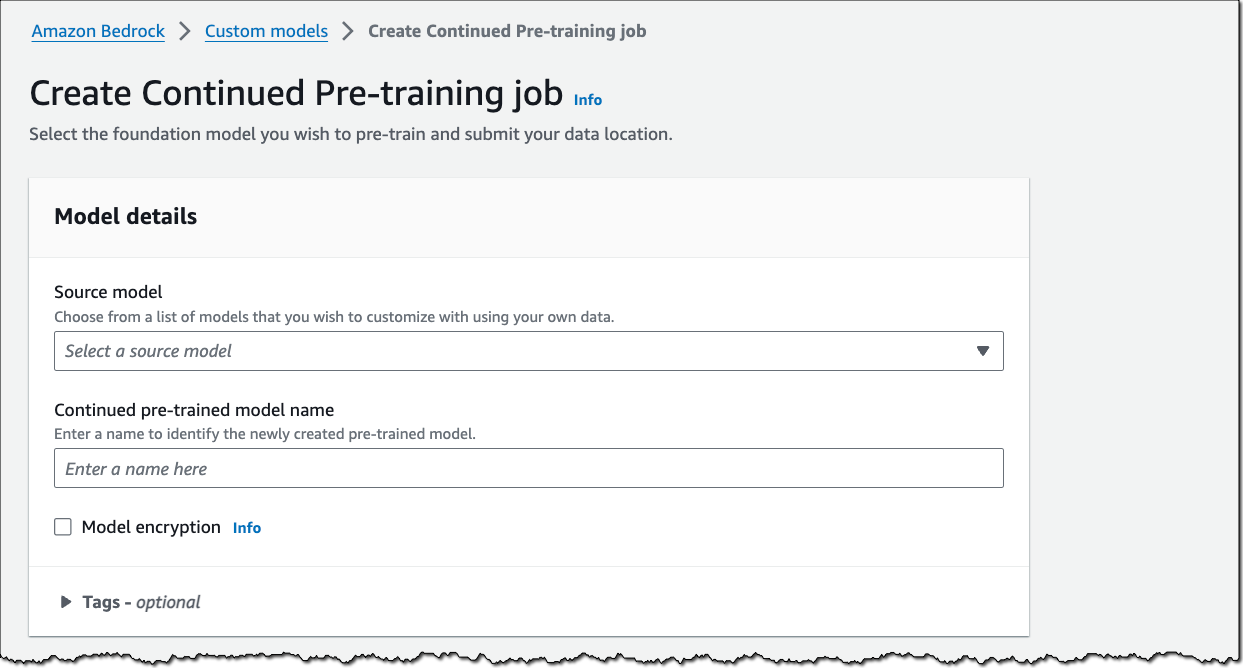

The agent automatically creates a prompt template from the provided instructions. You can update the preprocessing of user inputs, the orchestration plan, and the postprocessing of the FM response.

To get started, navigate to the Amazon Bedrock console and select the working draft of an existing agent. Then, select the Edit button next to Advanced prompts.

Here, you have access to four different types of templates. Preprocessing templates define how an agent

contextualizes and categorizes user inputs. The orchestration template equips an agent with short-term memory, a list of available actions and knowledge bases along with their descriptions, as well as few-shot examples of how to break down the problem and use these actions and knowledge in different sequences or combinations. Knowledge base response generation templates define how knowledge bases will be used and summarized in the response. Postprocessing templates define how an agent will format and present a final response to the end user. You can either keep using the template defaults or edit and override the template defaults.

Things to know

Here are a few best practices and important things to know when you’re working with Agents for Amazon Bedrock.

Agents perform best when you allow them to focus on a specific task. The clearer the objective (instructions) and the more focused the available set of actions (APIs), the easier it will be for the FM to reason and identify the right steps. If you need agents to cover various tasks, consider creating separate, individual agents.

Here are a few additional guidelines:

- Number of APIs – Use three to five APIs with a couple of input parameters in your agents.

- API design – Follow general best practices for designing APIs, such as ensuring idempotency.

- API call validations – Follow best practices of API design by employing exhaustive validation for all API calls. This is particularly important because large language models (LLMs) may generate hallucinated inputs and outputs, and these validations prove helpful during such occurrences.

Availability and pricing

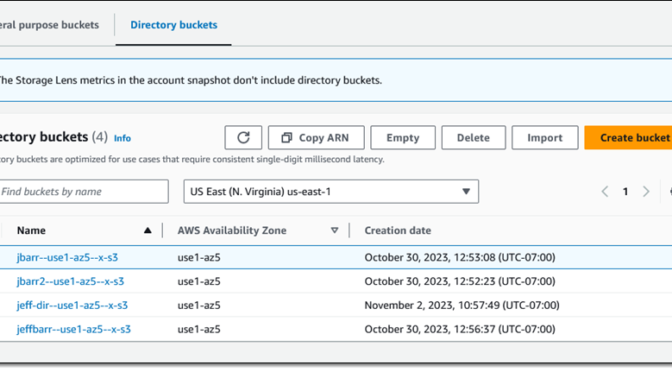

Agents for Amazon Bedrock are available today in AWS Regions US East (N. Virginia) and US West (Oregon). You will be charged for the inference calls (InvokeModel API) made by agents. The InvokeAgent API is not charged separately. Amazon Bedrock Pricing has all the details.

Learn more

- Agents for Amazon Bedrock product page

- Agents for Amazon Bedrock User Guide

- Agents for Bedrock in the console

— Antje

I’m happy to be able to tell you about the latest in our series of innovative

I’m happy to be able to tell you about the latest in our series of innovative

3. Access to cutting-edge capabilities

3. Access to cutting-edge capabilities