PowerShell 7.5

We continue to follow our yearly release schedule for PowerShell 7 and the next version will align with .NET 9.

Pseudo-terminal support

PowerShell currently has a design limitation that prevents full capture of output from native commands by PowerShell itself.

Native commands (meaning executables you run directly) will write output to STDERR or STDOUT pipes.

However, if the output is not redirected, PowerShell will simply have the native command write directly to the console.

PowerShell can’t just always redirect the output to capture it because:

- The order of output from STDERR and STDOUT can be non-deterministic because they are on different pipes, but the order written to the console has meaning to the user.

- Native commands can use detection of redirection to determine whether the command is being run interactively or non-interactively and behave differently, such as prompting for input or defaulting to adding text decoration to the output.
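The second point can be illustrated with a small sketch. It is written in Python rather than PowerShell purely for illustration, and the child program and its "build succeeded" message are hypothetical. The child decorates its output only when its STDOUT is a terminal, so capturing it through a pipe changes what it prints:

```python
import subprocess
import sys

# Hypothetical child program: it adds color only when STDOUT is a terminal.
CHILD_SOURCE = """
import sys
text = "build succeeded"
if sys.stdout.isatty():
    text = "\\033[32m" + text + "\\033[0m"  # green when run interactively
print(text)
"""


def run_redirected() -> str:
    """Run the child with STDOUT redirected to a pipe and return what it printed."""
    done = subprocess.run(
        [sys.executable, "-c", CHILD_SOURCE],
        capture_output=True,  # both STDOUT and STDERR become pipes
        text=True,
        check=True,
    )
    return done.stdout.strip()


if __name__ == "__main__":
    # The captured text carries no color codes because the child saw a pipe.
    print(repr(run_redirected()))
```

Running the same child directly in a terminal would print the colored variant, which is the behavior difference described above.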

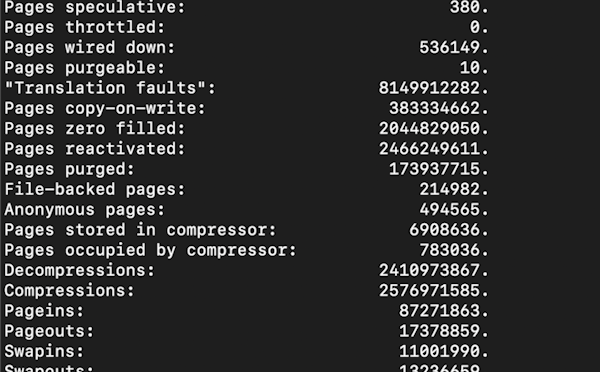

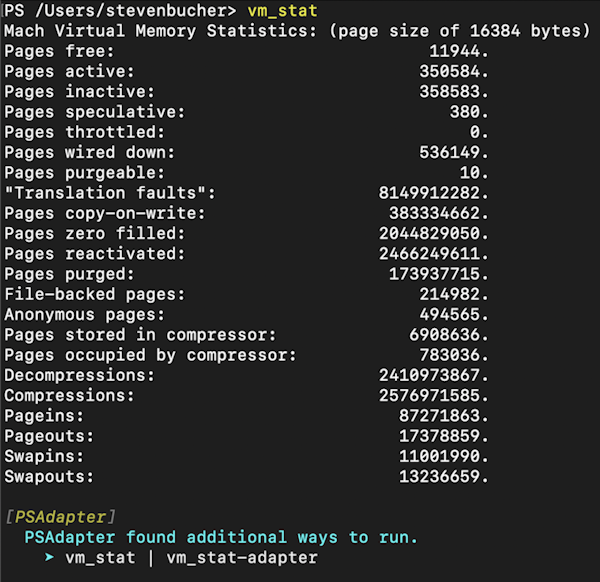

To address this, we are working on an experimental feature to leverage pseudo-terminals to enable PowerShell to capture the output of native commands while still allowing the native command to seemingly write directly to the console.
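As a rough sketch of the general idea, and not of how PowerShell implements this feature, the Python example below runs a child process once with a pipe and once with a pseudo-terminal. It assumes a Unix-like system, since it relies on Python's `pty` module, and the child program is hypothetical. With the pseudo-terminal the parent still captures the output, but the child believes it is writing to a terminal:

```python
import os
import pty
import subprocess
import sys

# Hypothetical child: reports whether its STDOUT looks like a terminal.
CHILD = "import sys; print('tty' if sys.stdout.isatty() else 'pipe')"


def run_with_pipe() -> str:
    """Capture the child's output through an ordinary pipe."""
    done = subprocess.run(
        [sys.executable, "-c", CHILD], capture_output=True, text=True, check=True
    )
    return done.stdout.strip()


def run_with_pty() -> str:
    """Capture the child's output through a pseudo-terminal (Unix-like systems only)."""
    master_fd, slave_fd = pty.openpty()
    try:
        proc = subprocess.Popen(
            [sys.executable, "-c", CHILD],
            stdin=subprocess.DEVNULL,
            stdout=slave_fd,  # STDOUT and STDERR share one terminal,
            stderr=slave_fd,  # so their relative order is preserved
        )
    finally:
        os.close(slave_fd)  # the parent only needs the master side
    chunks = []
    while True:
        try:
            data = os.read(master_fd, 1024)
        except OSError:  # Linux reports EIO once the child side is closed
            break
        if not data:  # other systems signal end of output with an empty read
            break
        chunks.append(data)
    os.close(master_fd)
    proc.wait()
    return b"".join(chunks).decode().strip()


if __name__ == "__main__":
    print(run_with_pipe())  # the child detects redirection
    print(run_with_pty())   # the child sees a terminal, yet output is captured
```

Because STDOUT and STDERR are attached to the same pseudo-terminal here, the ordering problem of two separate pipes does not arise in this sketch.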

This feature can then further be leveraged to:

- Ensure complete transcription of native commands

- Render PowerShell progress bars properly in scripts that call native commands

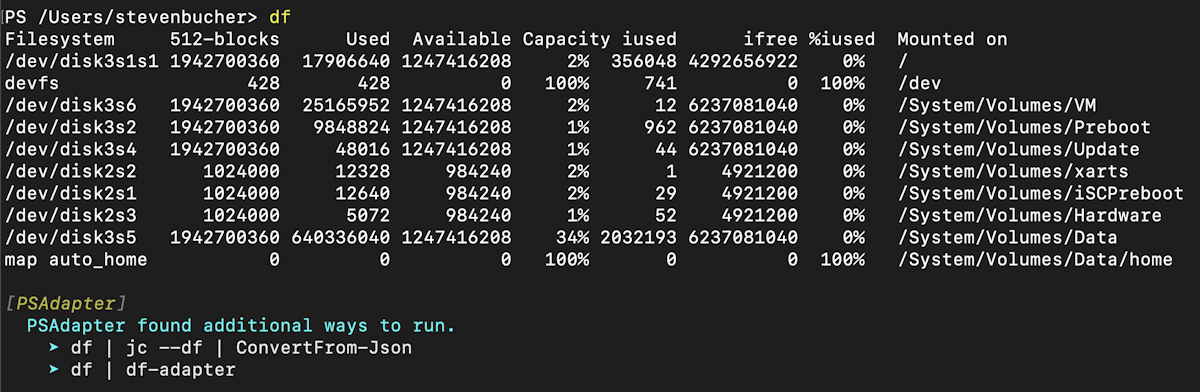

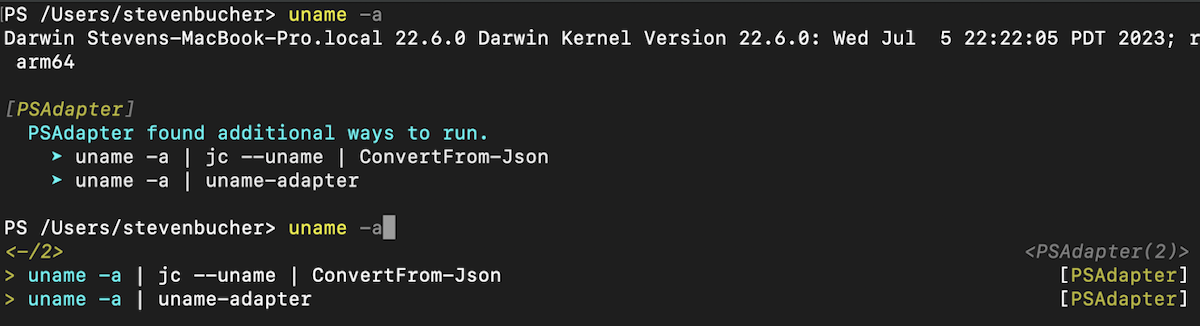

- Enable feedback providers to act upon native command output

  - For example, it would be possible to write a feedback provider that looked at the output of git commands and provided suggestions for what to do next based on the output.
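To make the feedback provider idea concrete, here is a minimal sketch in Python, used only for illustration. It is not the PowerShell feedback provider API, and the rule table and function name are hypothetical. It maps fragments of captured git output to a suggested next command:

```python
from typing import Optional

# Hypothetical rules: (fragment of git output, suggested next step).
RULES = [
    ("untracked files present", "git add <file>"),
    ("Your branch is ahead of", "git push"),
]


def suggest_next_step(git_output: str) -> Optional[str]:
    """Return a suggestion for the first rule whose fragment appears in the output."""
    for fragment, suggestion in RULES:
        if fragment in git_output:
            return suggestion
    return None  # no rule matched, so offer no feedback


if __name__ == "__main__":
    sample = 'nothing added to commit but untracked files present (use "git add" to track)'
    print(suggest_next_step(sample))
```

A provider like this only becomes possible once the shell can see what the native command printed, which is what the capture feature would enable.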

Once this feature is part of PowerShell 7, there are other interesting scenarios that can be enabled in the future.

Platform support

Operating system versions and distributions are constantly evolving.

We want to ensure that a supported platform is a platform that is tested and validated by the team.

During 2024, the engineering team will focus on:

- Making our tests reliable so we only spend manual effort investigating real issues when a test fails

- Simplifying how we add new platforms to our test matrix so new distro requests can be fulfilled more quickly

- Tracking the lifecycle of the platforms we support more actively

- Automating publication of the supported platforms list so that our docs are always up to date

Bug fixes and community PRs

The community has been great at opening issues and pull requests to help improve PowerShell.

For this release, we will focus on addressing issues and PRs that have been opened by the community.

This means fewer new features from the team, but we hope to make up for that with the community contributions getting merged into the product.

We will also be investing in the Working Group application process to expand the reach of those groups.

Please use reactions in GitHub issues and PRs to help us prioritize what to focus our limited time on.

Artifact management

Fundamentals work

Ensure PowerShell Gallery addresses the latest compliance requirements for security, accessibility, and reliability.

Include new types of repositories for PSResourceGet

We plan to introduce integration with container registries, both public and private, which will help enterprise customers differentiate between trusted and untrusted content.

This change will allow for a Microsoft trusted repository while the PowerShell Gallery continues as untrusted by default.

More options for private galleries, in addition to a Microsoft trusted repository and the PowerShell Gallery, give customers control over package availability suitable for their environments.

Concurrent installs

To improve performance during long-running installations, we plan to enable parallel operations

so multiple module installations can happen at the same time.

This change will be particularly impactful in modules with many dependencies, such as the Az module,

which currently can take significant time to install.
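The benefit of parallel operations can be sketched as follows. This is an illustration in Python, not PSResourceGet code. `install_module` is a hypothetical stand-in that only simulates latency, and the sketch assumes the listed modules do not need to be installed in a particular order:

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def install_module(name: str) -> str:
    """Hypothetical stand-in for downloading and unpacking one module."""
    time.sleep(0.05)  # simulate network and disk latency
    return f"{name} installed"


def install_all(names: List[str], max_workers: int = 4) -> List[str]:
    """Install independent modules concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(install_module, names))


if __name__ == "__main__":
    for line in install_all(["Az.Accounts", "Az.Compute", "Az.Storage"]):
        print(line)
```

With a sequential loop the total time grows with the number of modules, while here it is bounded by the slowest install in each batch of workers. A real installer would also have to respect dependency order, which this sketch leaves out.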

Local caching of artifact details

Currently the Find-PSResource cmdlet pulls information about available artifacts from service endpoints

and outputs the list locally. We believe there is opportunity to locally cache the metadata about available

artifacts to reduce network dependency and improve performance when resolving dependency relationships.

This would also make it possible to implement a feedback provider that suggests how to install a module that is not currently installed.

So if a user tries to run a cmdlet that is not installed, the feedback provider will suggest what module to install to get the cmdlet to work.
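A minimal sketch of both ideas, written in Python for illustration only: the cache file layout, the one-hour freshness window, and the function names are all assumptions and do not describe PSResourceGet. Metadata is fetched once, reused from a local file while it is fresh, and then used to map a missing command to the module that provides it:

```python
import json
import time
from pathlib import Path
from typing import Callable, Dict, List, Optional

Metadata = Dict[str, List[str]]  # module name -> commands it exports


def load_metadata(
    cache_file: Path,
    fetch: Callable[[], Metadata],
    max_age_seconds: float = 3600.0,  # assumed freshness window
) -> Metadata:
    """Return cached metadata if it is fresh; otherwise fetch it and refresh the cache."""
    if cache_file.exists():
        age = time.time() - cache_file.stat().st_mtime
        if age < max_age_seconds:
            return json.loads(cache_file.read_text(encoding="utf-8"))
    metadata = fetch()  # the only step that needs the network
    cache_file.write_text(json.dumps(metadata), encoding="utf-8")
    return metadata


def suggest_module(command: str, metadata: Metadata) -> Optional[str]:
    """Return the module that exports the command, or None if it is unknown."""
    for module, commands in metadata.items():
        if command in commands:
            return module
    return None
```

A feedback provider built on this could answer "which module do I need?" without a network round trip whenever the cache is fresh.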

Intelligence in the shell

We are observing and being thoughtful about what it will mean to integrate the experiences

provided by large language models into the shell experience.

Our current outlook is to think beyond natural language chat to deep integration of learning opportunities.

We also believe there are many improvements to the interactivity of PowerShell that do not require a large language model.

This includes some more subtle improvements to the interactive experience of PowerShell that would help increase productivity

and efficiency at the command line.

Configuration

Desired State Configuration (DSC) helped to enable configuration as code for Windows.

With v3, we are focusing on enabling cross-platform use, simplifying resource development, improving integration with higher-level configuration management tools, and improving the experience for end users.

Our goal is to be code complete by the end of March and work towards a release candidate by the middle of the year.

This is a complete rewrite of DSC and we welcome feedback during the design and development process.

Remoting

Win32_OpenSSH

We hope to continue bringing new versions of OpenSSH to the Windows Server platform. Another goal

is to reduce the complex steps required to install and manage SSH at scale, to enable

partners that create automation tools to use the same mechanism when connecting to Windows servers

as they use for Linux.

SSHDConfig

Monitoring and management of the sshd_config file at scale across platforms can be challenging.

We are working on a DSC v3 resource to enable management of sshd_config using a syntax that is

more closely aligned to the command line tools used by modern cloud platforms.

Initially, we’ll be targeting auditing scenarios but we hope to enable full management of the file in the future.
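An auditing scenario can be sketched like this. It is a Python illustration, not the DSC v3 resource or its syntax, and it makes simplifying assumptions: `Match` blocks and `Include` directives are ignored, and each line is treated as a keyword followed by its value. It relies on two documented sshd_config rules: keywords are case-insensitive, and the first value obtained for a keyword wins.

```python
from typing import Dict, Optional, Tuple


def parse_sshd_config(text: str) -> Dict[str, str]:
    """Parse keyword/value lines, skipping blank lines and comments."""
    settings: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        keyword = parts[0].lower()  # keywords are case-insensitive
        value = parts[1].strip() if len(parts) > 1 else ""
        settings.setdefault(keyword, value)  # the first value obtained wins
    return settings


def audit(
    settings: Dict[str, str], expected: Dict[str, str]
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {keyword: (expected, actual)} for every setting that differs."""
    findings: Dict[str, Tuple[str, Optional[str]]] = {}
    for keyword, want in expected.items():
        actual = settings.get(keyword.lower())
        if actual != want:
            findings[keyword] = (want, actual)
    return findings
```

An audit run reports drift without changing the file, which matches the initial read-only scope described above.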

Help system

platyPS is a module that enables you to write PowerShell help

documentation in Markdown and convert it to PowerShell help format.

This tool is used by Microsoft teams and the community of module authors to more easily write and maintain help documentation.

We hope to continue work in this area to address partner feedback.

Other projects

The projects above will already keep the team very busy, but we will continue to maintain other existing projects.

We appreciate the community contributions to these projects and will continue to review issues and PRs:

- VSCode extension

- PSScriptAnalyzer module

- ConsoleGuiTools module

- TextUtility module

- PSReadLine module

- SecretManagement module

Our other projects will continue to be serviced on an as-needed basis.

Thanks to the community from Steve Lee and Michael Greene on behalf of our team!

The post PowerShell and OpenSSH team investments for 2024 appeared first on PowerShell Team.