With Amazon Simple Queue Service (Amazon SQS), you can send, store, and receive messages between software components at any volume. Today, Amazon SQS has introduced two new capabilities for first-in, first-out (FIFO) queues:

- Maximum throughput has been increased up to 70,000 transactions per second (TPS) per API action in selected AWS Regions, supporting sending or receiving up to 700,000 messages per second with batching.

- Dead letter queue (DLQ) redrive support to handle messages that are not consumed after a specific number of retries in a way similar to what was already available for standard queues.

Let’s take a more in-depth look at how these work in practice.

FIFO queues throughput increase up to 70K TPS

FIFO queues are designed for applications that require messages to be processed exactly once and in the order in which they are sent. While standard queues have nearly unlimited throughput, FIFO queues have a quota on the number of TPS per API action.

Standard and FIFO queues support batch actions that can send and receive up to 10 messages with a single API call (up to a maximum total payload of 256 KB). Because the quota counts API calls rather than messages, a FIFO queue can process up to 10 times as many messages per second as its TPS quota.
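As a rough illustration of the two batch limits above, here is a minimal Python sketch that groups message bodies into batches of at most 10 messages and 256 KB. The function names are hypothetical, and the size check counts only message bodies, which is a simplification. The entry shape assumes the queue has content-based deduplication enabled, so no deduplication ID is supplied.

```python
from typing import Iterable, Iterator, List

MAX_BATCH_ENTRIES = 10          # messages per batch call, per the text above
MAX_BATCH_BYTES = 256 * 1024    # total payload per batch call, per the text above


def chunk_into_batches(bodies: Iterable[str]) -> Iterator[List[str]]:
    """Yield lists of message bodies that respect both batch limits."""
    batch: List[str] = []
    batch_bytes = 0
    for body in bodies:
        size = len(body.encode("utf-8"))
        if size > MAX_BATCH_BYTES:
            raise ValueError("a single message exceeds the 256 KB payload limit")
        # Start a new batch when adding this body would break either limit.
        if batch and (len(batch) == MAX_BATCH_ENTRIES
                      or batch_bytes + size > MAX_BATCH_BYTES):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(body)
        batch_bytes += size
    if batch:
        yield batch


def to_fifo_entries(batch: List[str], group_id: str) -> List[dict]:
    """Shape one batch as Entries for a SendMessageBatch call on a FIFO queue."""
    # Assumption: content-based deduplication is enabled on the queue,
    # so MessageDeduplicationId is left out.
    return [
        {"Id": str(i), "MessageBody": body, "MessageGroupId": group_id}
        for i, body in enumerate(batch)
    ]


batches = list(chunk_into_batches(f"message {i}" for i in range(25)))
print([len(b) for b in batches])
```

With 25 small messages, three API calls are enough instead of 25, which is where the extra messages per second come from.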

At launch in 2016, FIFO queues supported up to 300 TPS per API action (3,000 messages per second with batching). This was enough for many use cases, but some customers asked for more throughput.

With high throughput mode launched in 2021, FIFO queues introduced a tenfold increase of the maximum throughput and could process up to 3,000 TPS per API action, depending on the Region. One year later, that quota was doubled to up to 6,000 TPS per API action.

This year, Amazon SQS has already increased the FIFO queue throughput quota twice: to up to 9,000 TPS per API action in August and to up to 18,000 TPS per API action in October (depending on the Region).

Today, the Amazon SQS team has been able to increase the FIFO queue throughput quota again, allowing you to process up to 70,000 TPS per API action (up to 700,000 messages per second with batching) in the US East (N. Virginia), US West (Oregon), and Europe (Ireland) Regions. This is more than two hundred times the maximum throughput at launch.
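The arithmetic behind these figures can be checked with a short Python sketch. The quota values are the ones quoted in this post, and the batch size of 10 comes from the batching limit described earlier. The variable names are only illustrative.

```python
BATCH_SIZE = 10  # messages per batched API call

# Per-API-action TPS quotas quoted in this post (upper bounds, Region dependent).
quota_history = [
    ("launch (2016)", 300),
    ("high throughput mode (2021)", 3_000),
    ("one year later", 6_000),
    ("August", 9_000),
    ("October", 18_000),
    ("today", 70_000),
]

launch_tps = quota_history[0][1]
for label, tps in quota_history:
    messages_per_second = tps * BATCH_SIZE
    multiple_of_launch = tps / launch_tps
    print(f"{label:30s} {tps:>7,} TPS  {messages_per_second:>9,} msg/s  "
          f"{multiple_of_launch:6.1f}x launch")
```

The last row works out to 700,000 messages per second and roughly 233 times the launch quota.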

DLQ redrive support for FIFO queues

With Amazon SQS, messages that are not consumed after a specific number of retries can automatically be moved to a DLQ. There, messages can be analyzed to understand the reason why they have not been processed correctly. Sometimes there is a bug or a misconfiguration in the consumer application. Other times the messages contain invalid data from the source applications that needs to be fixed to allow the messages to be processed again.

Either way, you can define a plan to reprocess these messages. For example, you can fix the consumer application and redrive all messages to the source queue. Or you can create a dedicated queue where a custom application receives the messages, fixes their content, and then sends them to the source queue.

To simplify moving the messages back to the source queue or to a different queue, Amazon SQS allows you to create a redrive task. Redrive tasks are already available for standard queues. Starting today, you can also start a redrive task for FIFO queues.

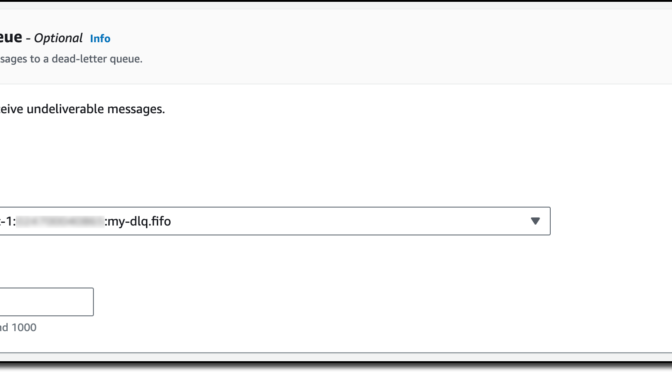

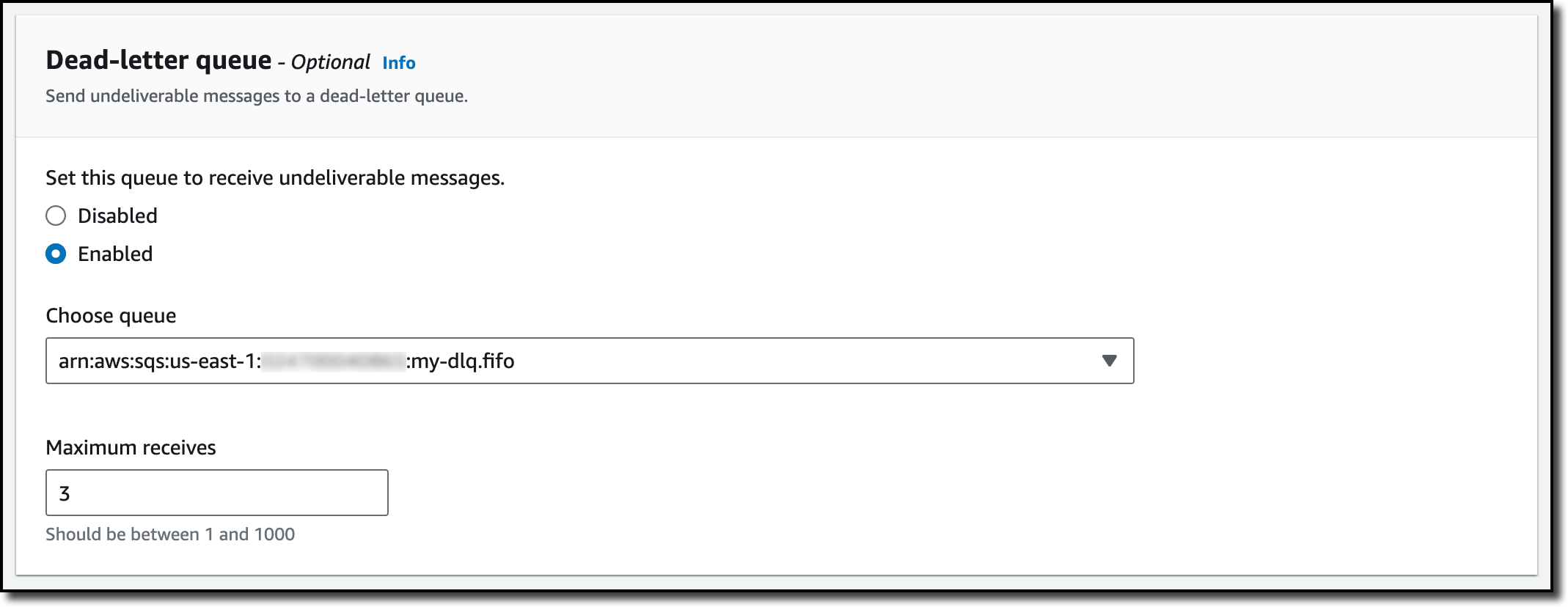

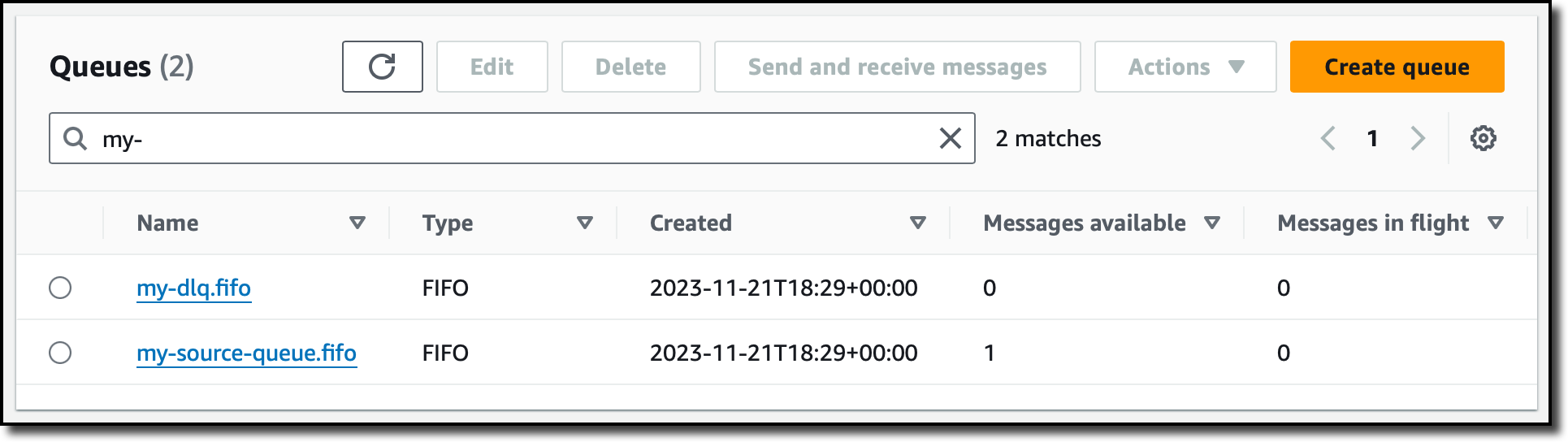

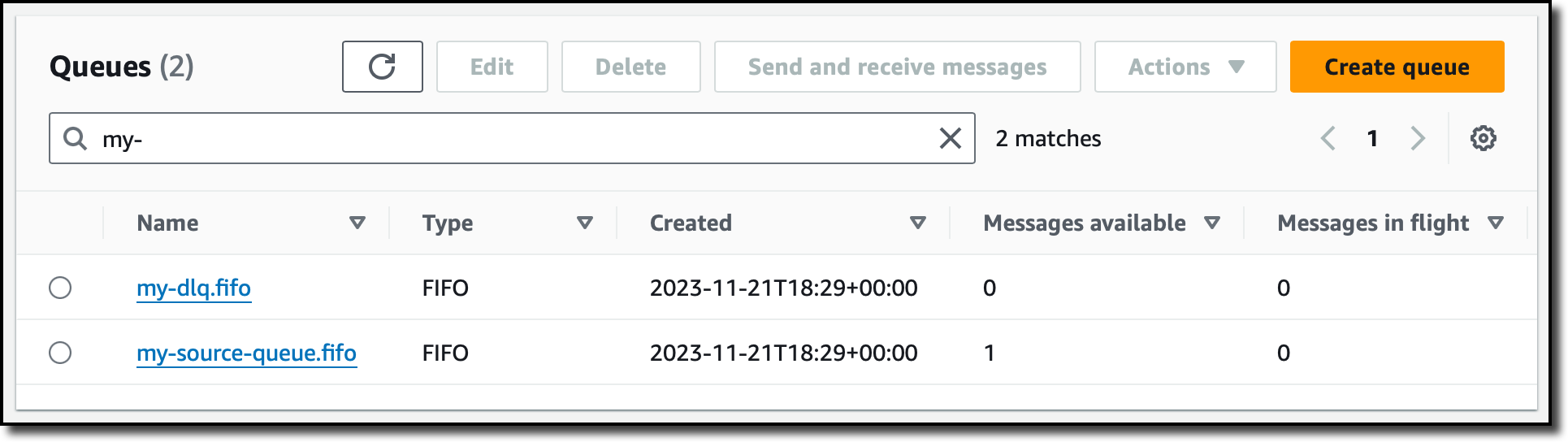

Using the Amazon SQS console, I create a first queue (my-dlq.fifo) to be used as a DLQ. To redrive messages back to the source FIFO queue, the queue type must match, so this is also a FIFO queue.

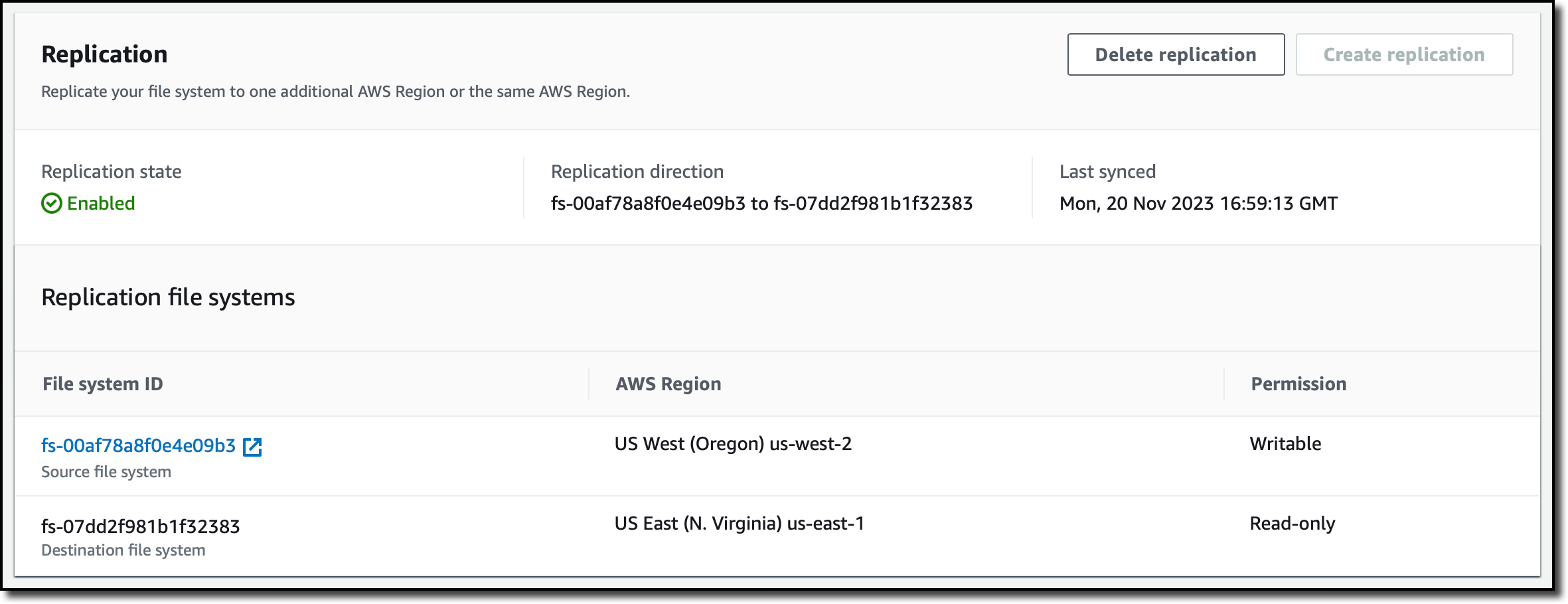

Then, I create a source FIFO queue (my-source-queue.fifo) to handle messages as usual. When I create the source queue, I configure the first queue (my-dlq.fifo) as the DLQ and specify 3 as the Maximum receives condition under which messages are moved from the source queue to the DLQ.

When a message has been received by a consumer for more than the number of times specified by this condition, Amazon SQS moves the message to the DLQ. The original message ID is retained and can be used to uniquely track the message.
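Outside the console, the same DLQ configuration is expressed as a RedrivePolicy queue attribute. The following Python sketch only builds the attribute dictionary. The helper name is hypothetical, and the ARN is an assumed value built from the account ID and Region that appear in the commands of this walkthrough.

```python
import json


def source_queue_attributes(dlq_arn: str, max_receive_count: int = 3) -> dict:
    """Build queue attributes for a FIFO source queue that points at a DLQ."""
    if not 1 <= max_receive_count <= 1000:
        raise ValueError("maxReceiveCount must be between 1 and 1,000")
    if not dlq_arn.endswith(".fifo"):
        # The DLQ of a FIFO queue must itself be a FIFO queue.
        raise ValueError("the DLQ for a FIFO source queue must be a FIFO queue")
    return {
        "FifoQueue": "true",
        # RedrivePolicy is passed as a JSON string, not as a nested object.
        "RedrivePolicy": json.dumps(
            {"deadLetterTargetArn": dlq_arn, "maxReceiveCount": max_receive_count}
        ),
    }


# Assumed ARN for my-dlq.fifo, matching the account and Region used in this post.
attrs = source_queue_attributes("arn:aws:sqs:eu-west-1:123412341234:my-dlq.fifo")
print(attrs["RedrivePolicy"])
```

Assuming boto3 is installed and credentials are configured, the resulting dictionary could be passed as the Attributes argument of the SQS client's create_queue call for my-source-queue.fifo.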

To test this setup, I use the console to send a message to the source queue. Then, I use the AWS Command Line Interface (AWS CLI) to receive the message multiple times without deleting it.

aws sqs receive-message --queue-url https://sqs.eu-west-1.amazonaws.com/123412341234/my-source-queue.fifo

{
    "Messages": [
        {
            "MessageId": "ef2f1c72-4bfe-4093-a451-03fe2dbd4d0f",
            "ReceiptHandle": "...",
            "MD5OfBody": "0f445a578fbcb0c06ca8aeb90a36fcfb",
            "Body": "My important message."
        }
    ]
}

To receive the same message more than once, I wait for the time specified in the queue visibility timeout to pass (30 seconds by default).
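The repeat-receive step can also be scripted. This is a minimal Python sketch, assuming `sqs` is an object with a boto3-style `receive_message` method. The function name and the injectable `sleep` parameter are hypothetical and exist only to keep the example easy to try without waiting.

```python
import time
from typing import Callable, List


def receive_repeatedly(
    sqs,
    queue_url: str,
    attempts: int,
    visibility_timeout: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> List[List[str]]:
    """Receive from a queue several times without deleting anything.

    Returns the message IDs seen on each attempt.
    """
    seen: List[List[str]] = []
    for attempt in range(attempts):
        response = sqs.receive_message(QueueUrl=queue_url, MaxNumberOfMessages=1)
        # Treat a missing "Messages" key the same as an empty list.
        messages = response.get("Messages", [])
        seen.append([m["MessageId"] for m in messages])
        if messages and attempt < attempts - 1:
            # The message is not deleted, so wait until it becomes visible again.
            sleep(visibility_timeout + 1)
    return seen
```

Called with four attempts against the source queue configured above, the expectation is three attempts that return the same message ID followed by an empty one.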

After the third time, the message is not in the source queue because it has been moved to the DLQ. When I try to receive messages from the source queue, the list is empty.

aws sqs receive-message --queue-url https://sqs.eu-west-1.amazonaws.com/123412341234/my-source-queue.fifo

{
    "Messages": []
}

To confirm that the message has been moved, I poll the DLQ to see if the message is there.

aws sqs receive-message --queue-url https://sqs.eu-west-1.amazonaws.com/123412341234/my-dlq.fifo

{
    "Messages": [
        {
            "MessageId": "ef2f1c72-4bfe-4093-a451-03fe2dbd4d0f",
            "ReceiptHandle": "...",
            "MD5OfBody": "0f445a578fbcb0c06ca8aeb90a36fcfb",
            "Body": "My important message."
        }
    ]
}
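Because the message ID is retained, the check that the message reached the DLQ can be automated. Here is a small Python sketch that parses the JSON printed by the receive-message command and looks for the tracked ID. The helper name is hypothetical, and the sample output is the one shown above.

```python
import json


def message_ids(receive_output: str) -> set:
    """Extract MessageId values from `aws sqs receive-message` JSON output."""
    if not receive_output.strip():
        return set()  # treat empty output as "no messages"
    return {m["MessageId"] for m in json.loads(receive_output).get("Messages", [])}


dlq_output = """
{
    "Messages": [
        {
            "MessageId": "ef2f1c72-4bfe-4093-a451-03fe2dbd4d0f",
            "ReceiptHandle": "...",
            "MD5OfBody": "0f445a578fbcb0c06ca8aeb90a36fcfb",
            "Body": "My important message."
        }
    ]
}
"""
tracked_id = "ef2f1c72-4bfe-4093-a451-03fe2dbd4d0f"
print(tracked_id in message_ids(dlq_output))
```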

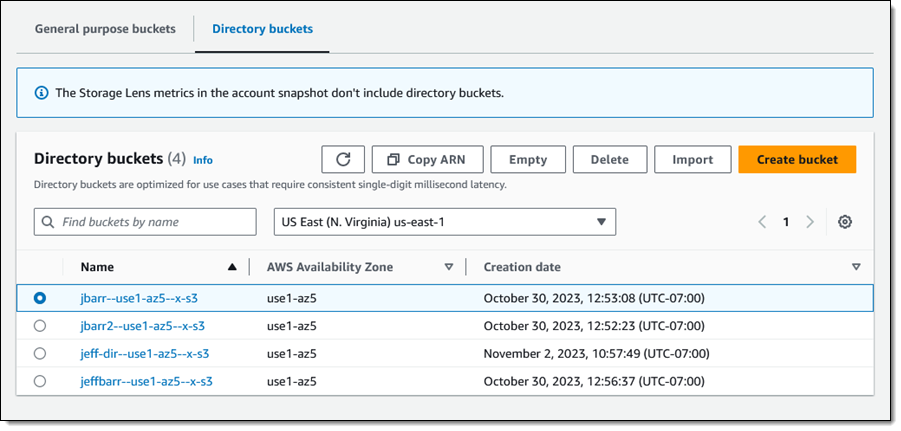

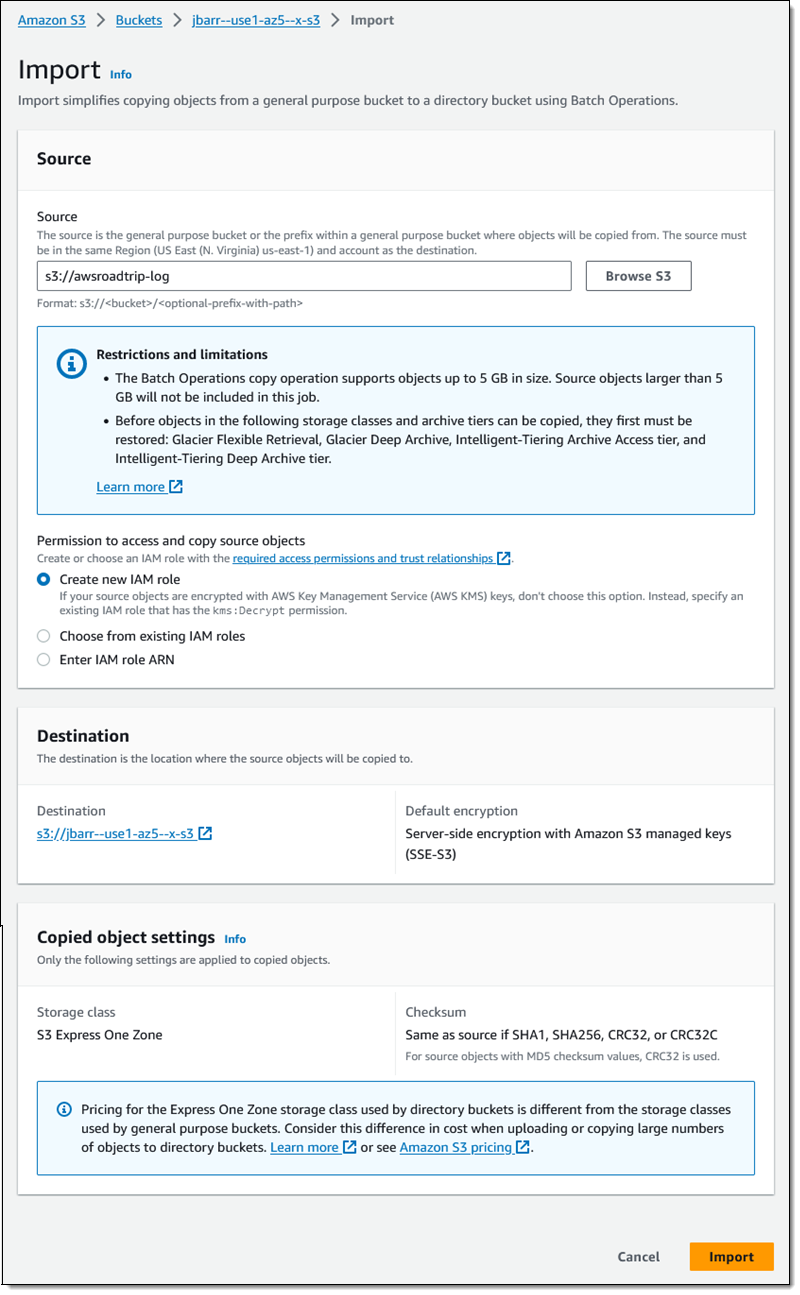

Now that the message is in the DLQ, I can investigate why the message has not been processed (well, I know the reason this time). Then I can decide whether to redrive messages from the DLQ using the Amazon SQS console or the new redrive API that was introduced a few months ago. For this example, I use the console. Back on the Amazon SQS console, I select the DLQ and choose Start DLQ redrive.

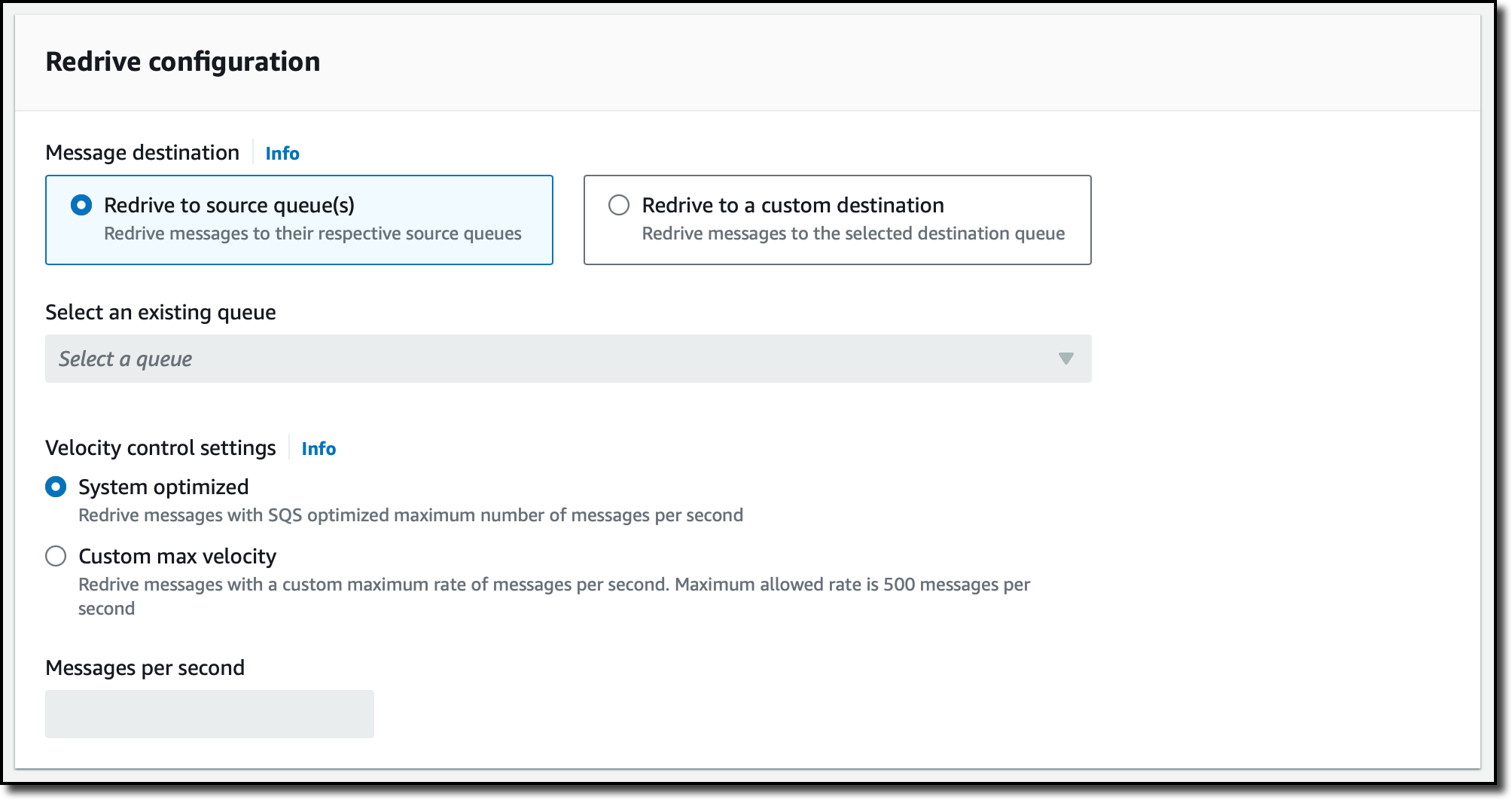

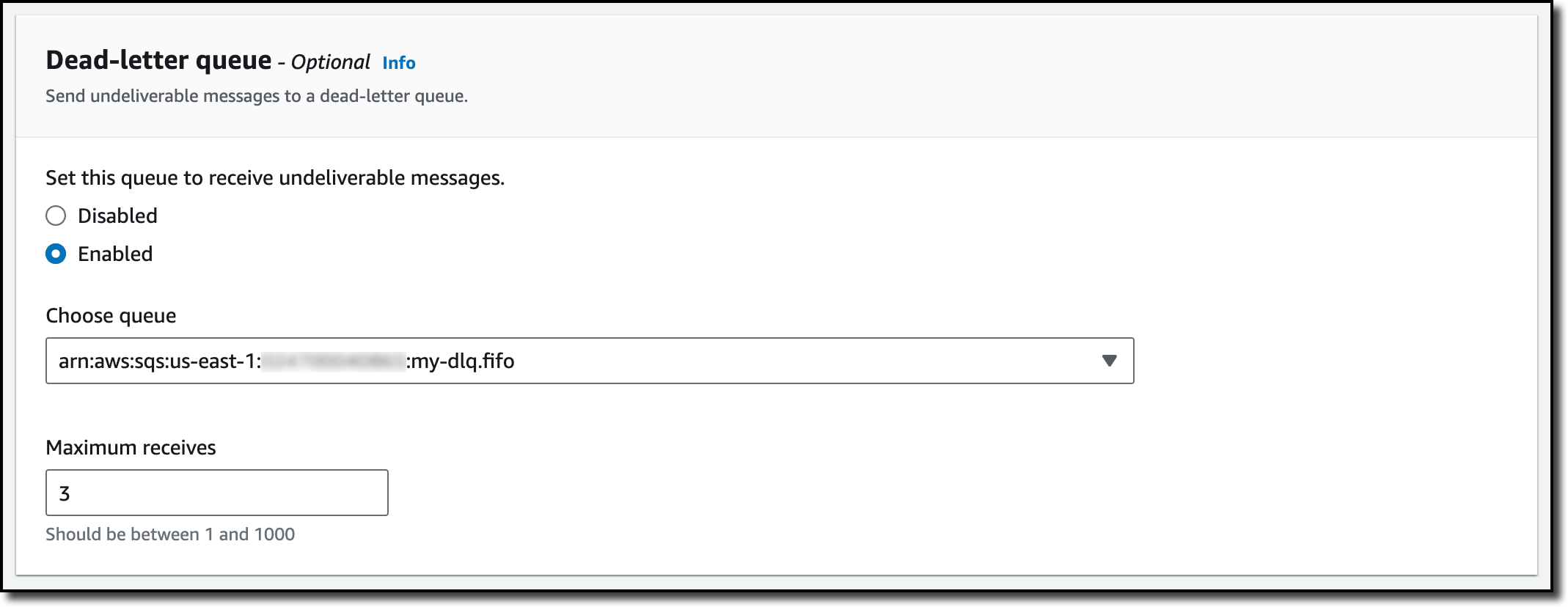

In Redrive configuration, I choose to redrive the messages to the source queue. Optionally, I can specify another FIFO queue as a custom destination. I use System optimized in Velocity control settings to redrive messages with the maximum number of messages per second optimized by Amazon SQS. Optionally, if there is a large number of messages in the DLQ, I can configure a custom maximum rate of messages per second to avoid overloading consumers.

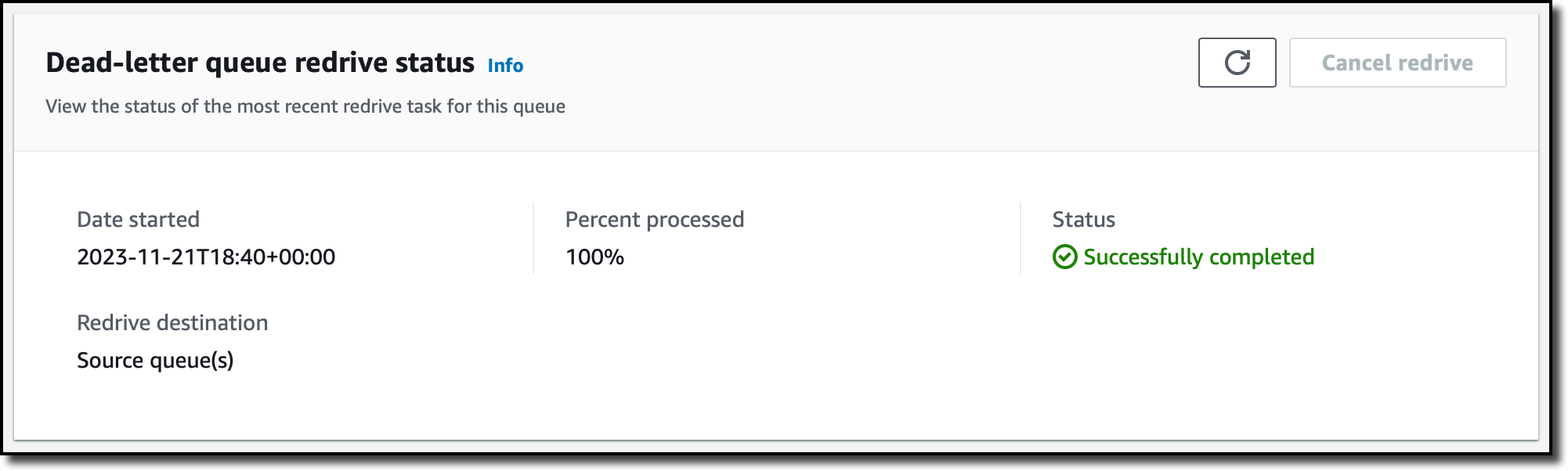

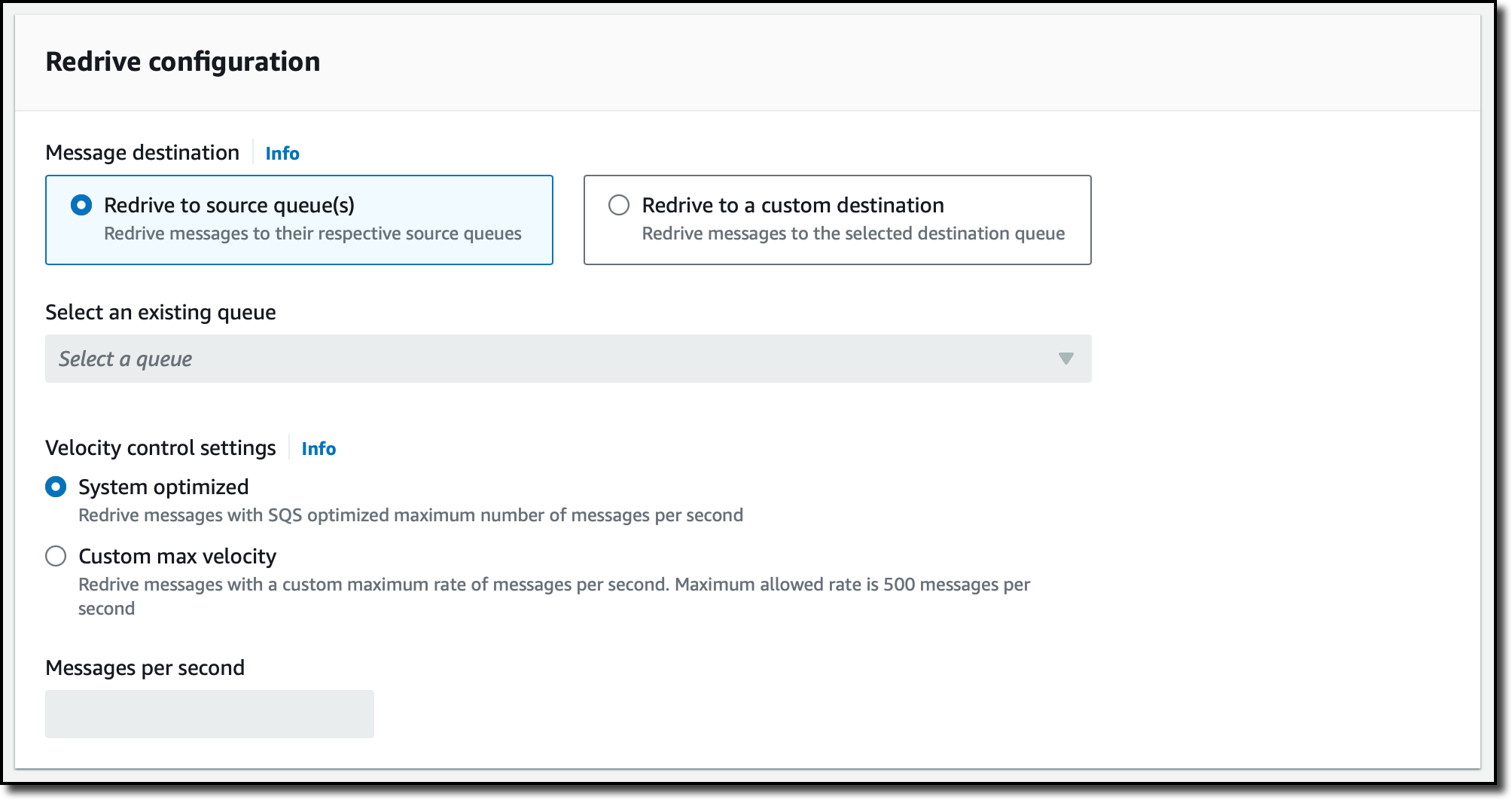

Before starting the redrive task, I can use the Inspect messages section to poll and check messages. I already decided what to do, so I choose DLQ redrive to start the task. I have only one message to process, so the redrive task completes very quickly.

As expected, the message is back in the source queue and is ready to be processed again.
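The same redrive can be started through the redrive API mentioned earlier. The Python sketch below only assembles the parameters for a StartMessageMoveTask request. The helper name is hypothetical, and the ARN is an assumed value for my-dlq.fifo in the account and Region used in this walkthrough.

```python
from typing import Optional


def redrive_request(
    dlq_arn: str,
    destination_arn: Optional[str] = None,
    max_messages_per_second: Optional[int] = None,
) -> dict:
    """Build keyword arguments for the StartMessageMoveTask API."""
    params = {"SourceArn": dlq_arn}
    if destination_arn is not None:
        # Omitting DestinationArn sends messages back to their source queue.
        params["DestinationArn"] = destination_arn
    if max_messages_per_second is not None:
        if max_messages_per_second < 1:
            raise ValueError("rate must be a positive number of messages per second")
        # Omitting the rate leaves the velocity to Amazon SQS (system optimized).
        params["MaxNumberOfMessagesPerSecond"] = max_messages_per_second
    return params


# Assumed ARN for the DLQ created in this walkthrough.
dlq_arn = "arn:aws:sqs:eu-west-1:123412341234:my-dlq.fifo"
print(redrive_request(dlq_arn))
print(redrive_request(dlq_arn, max_messages_per_second=50))
```

The first call mirrors the console choices made above (source queue, system optimized velocity). Assuming a boto3 SQS client named `sqs`, the dictionary could be passed as `sqs.start_message_move_task(**params)`.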

Things to know

Dead letter queue (DLQ) redrive support for FIFO queues is available today in all AWS Regions where Amazon SQS is offered, with the exception of GovCloud Regions and those based in China.

In the DLQ configuration, the maximum number of receives must be between 1 and 1,000.

There is no additional cost for using high throughput mode or a DLQ. Every Amazon SQS action counts as a request. A single request can send or receive from 1 to 10 messages, up to a maximum total payload of 256 KB. You pay based on the number of requests, and requests are priced differently between standard and FIFO queues.

As part of the AWS Free Tier, there is no cost for the first million requests per month for standard queues and for the first million requests per month for FIFO queues. For more information, see Amazon SQS pricing.
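To see how batching affects the number of requests, here is a simplified Python sketch. It counts only send requests (receive and delete actions also count as requests), applies the free allowance of one million requests per month for a single queue type, and deliberately contains no prices. The function names are hypothetical, and the pricing page remains the reference.

```python
import math

FREE_REQUESTS_PER_MONTH = 1_000_000  # per queue type, as described above


def requests_for(messages: int, batch_size: int = 10) -> int:
    """Requests needed to send `messages` messages at a fixed batch size."""
    if not 1 <= batch_size <= 10:
        raise ValueError("a request carries from 1 to 10 messages")
    return math.ceil(messages / batch_size)


def billable_requests(requests: int) -> int:
    """Requests left after the monthly free allowance for one queue type."""
    return max(0, requests - FREE_REQUESTS_PER_MONTH)


monthly_messages = 25_000_000
for size in (1, 10):
    total = requests_for(monthly_messages, size)
    print(f"batch size {size:2d}: {total:,} requests, "
          f"{billable_requests(total):,} beyond the free tier")
```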

With these updates and the increased throughput, you can cover the vast majority of use cases with FIFO queues.

Use Amazon SQS FIFO queues for high throughput, exactly-once processing, and first-in-first-out delivery.

— Danilo
