Amazon Redshift puts artificial intelligence (AI) at your service to optimize efficiencies and make you more productive with two new capabilities that we are launching in preview today.

First, Amazon Redshift Serverless becomes smarter. It scales capacity proactively and automatically along dimensions such as the complexity of your queries, their frequency, the size of the dataset, and so on to deliver tailored performance optimizations. This allows you to spend less time tuning your data warehouse instances and more time getting value from your data.

Second, Amazon Q generative SQL in Amazon Redshift Query Editor generates SQL recommendations from natural language prompts. This helps you to be more productive in extracting insights from your data.

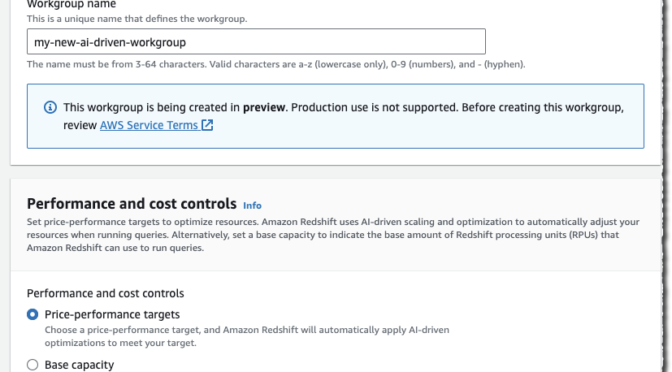

Let’s start with Amazon Redshift Serverless

When you use Amazon Redshift Serverless, you can now opt in for a preview of AI-driven scaling and optimizations. When enabled, the system observes and learns from your usage patterns, such as the number of concurrent queries, their complexity, and the time it takes to run them. Then, it automatically optimizes your serverless endpoint to meet your price-performance target. Based on AWS internal testing, this new capability may give you up to ten times better price performance for variable workloads without any manual intervention.

AI-driven scaling and optimizations eliminate the time and effort needed to manually resize your workgroup and plan background optimizations based on workload needs. The system continually runs automatic optimizations when they are most valuable for better performance, avoiding performance cliffs and time-outs.

This new capability goes beyond the existing self-tuning capabilities of Amazon Redshift Serverless, such as machine learning (ML)-enhanced techniques to adjust your compute, modify the physical schema of the database, create or drop materialized views as needed (the ones we manage automatically, not yours), and vacuum tables. It brings more intelligence to decide how to adjust the compute, what background optimizations are required, and when to apply them, and it makes its decisions based on more dimensions. We also orchestrate ML-based optimizations for materialized views, table optimizations, and workload management when your queries need them.

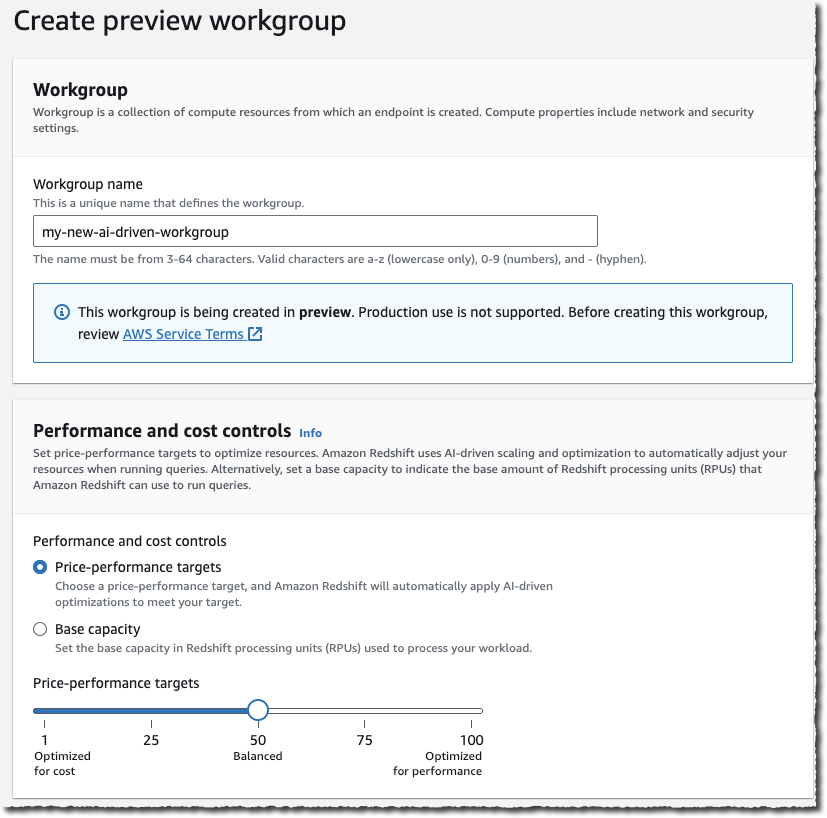

During the preview, you must opt in to enable these AI-driven scaling and optimizations on your workgroups. You configure the system to balance optimization between price and performance. There is only one slider to adjust in the console.

As usual, you can track resource usage and associated changes through the console, Amazon CloudWatch metrics, and the system table SYS_SERVERLESS_USAGE.
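To give an idea of what tracking usage through the system table can look like, here is a minimal sketch that sums charged RPU-seconds per day and converts them to RPU-hours. It makes several assumptions: the `start_time` and `charged_seconds` column names reflect my reading of the `SYS_SERVERLESS_USAGE` documentation and should be verified against your endpoint, the sample rows are made up, and an in-memory SQLite table stands in for Redshift so that the code runs anywhere. On Redshift, you would run the query in the query editor and would likely use `TRUNC(start_time)` instead of SQLite's `date(start_time)`.

```python
import sqlite3

# SQLite stands in for Redshift here; the table is a simplified, hypothetical
# copy of SYS_SERVERLESS_USAGE with only the two columns this sketch needs.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sys_serverless_usage (start_time TEXT, charged_seconds INTEGER)"
)
conn.executemany(
    "INSERT INTO sys_serverless_usage VALUES (?, ?)",
    [
        ("2023-11-27 10:00:00", 7200),  # made-up sample rows
        ("2023-11-27 10:01:00", 3600),
        ("2023-11-28 09:00:00", 1800),
    ],
)

# Sum the charged RPU-seconds per calendar day and convert them to RPU-hours.
DAILY_USAGE_SQL = """
SELECT date(start_time) AS day,
       SUM(charged_seconds) / 3600.0 AS rpu_hours
FROM sys_serverless_usage
GROUP BY day
ORDER BY day
"""

daily_usage = conn.execute(DAILY_USAGE_SQL).fetchall()
for day, rpu_hours in daily_usage:
    print(day, rpu_hours)
```

Multiplying each day's RPU-hours by the per-RPU-hour price for your Region gives an estimate of the daily compute cost.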

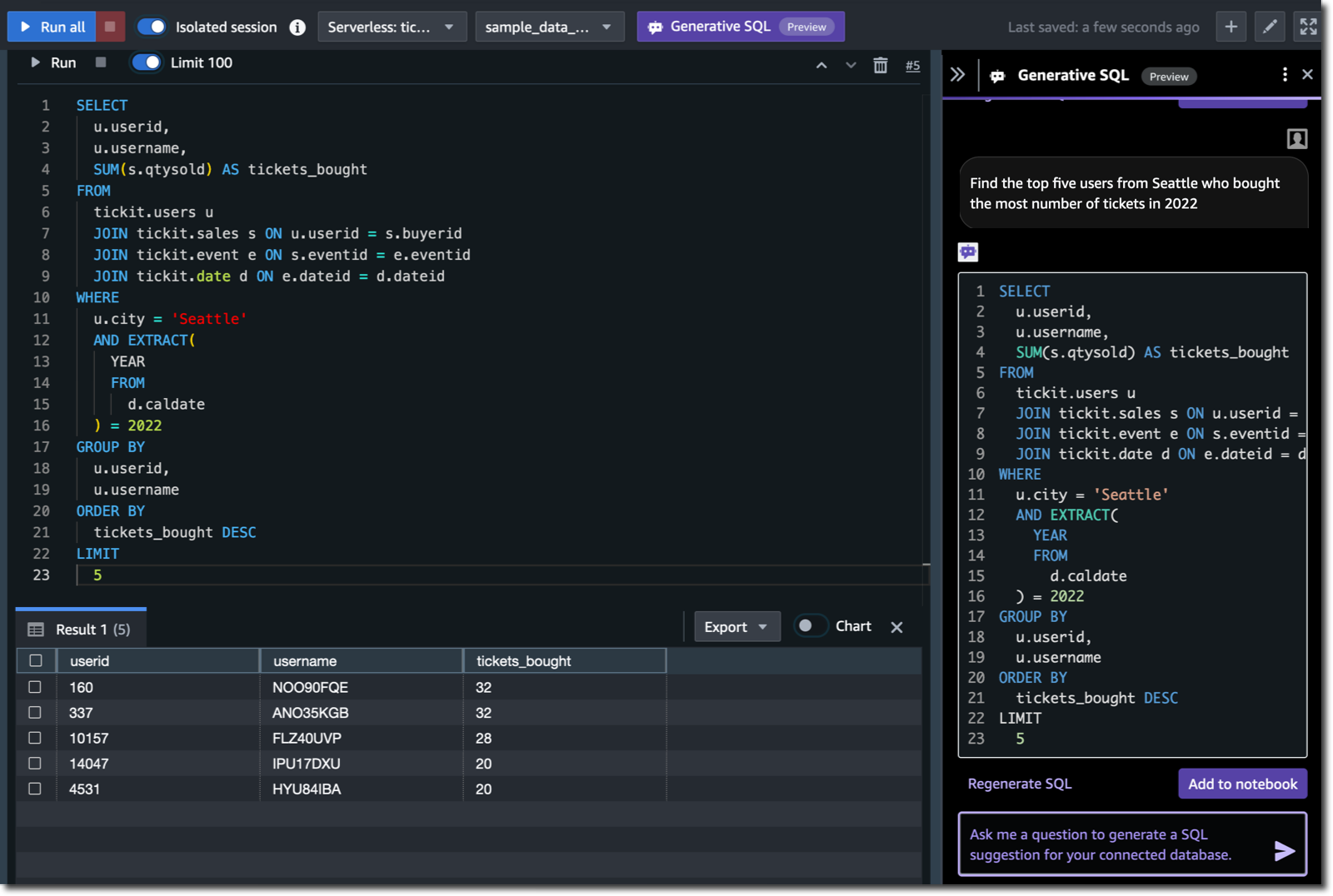

Now, let’s look at Amazon Q generative SQL in Amazon Redshift Query Editor

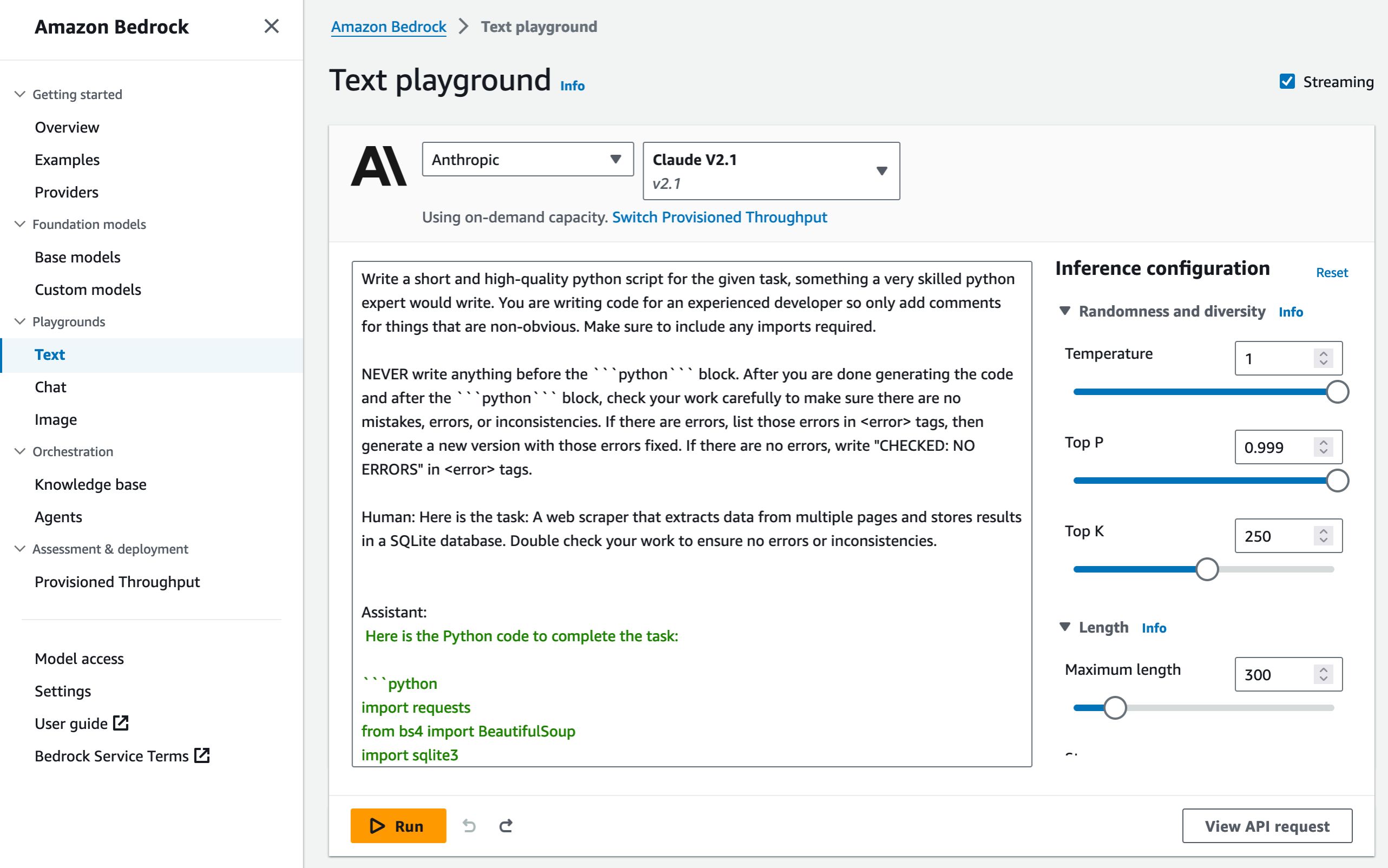

What if you could use generative AI to help analysts write effective SQL queries more rapidly? This is the new experience we introduce today in Amazon Redshift Query Editor, our web-based SQL editor.

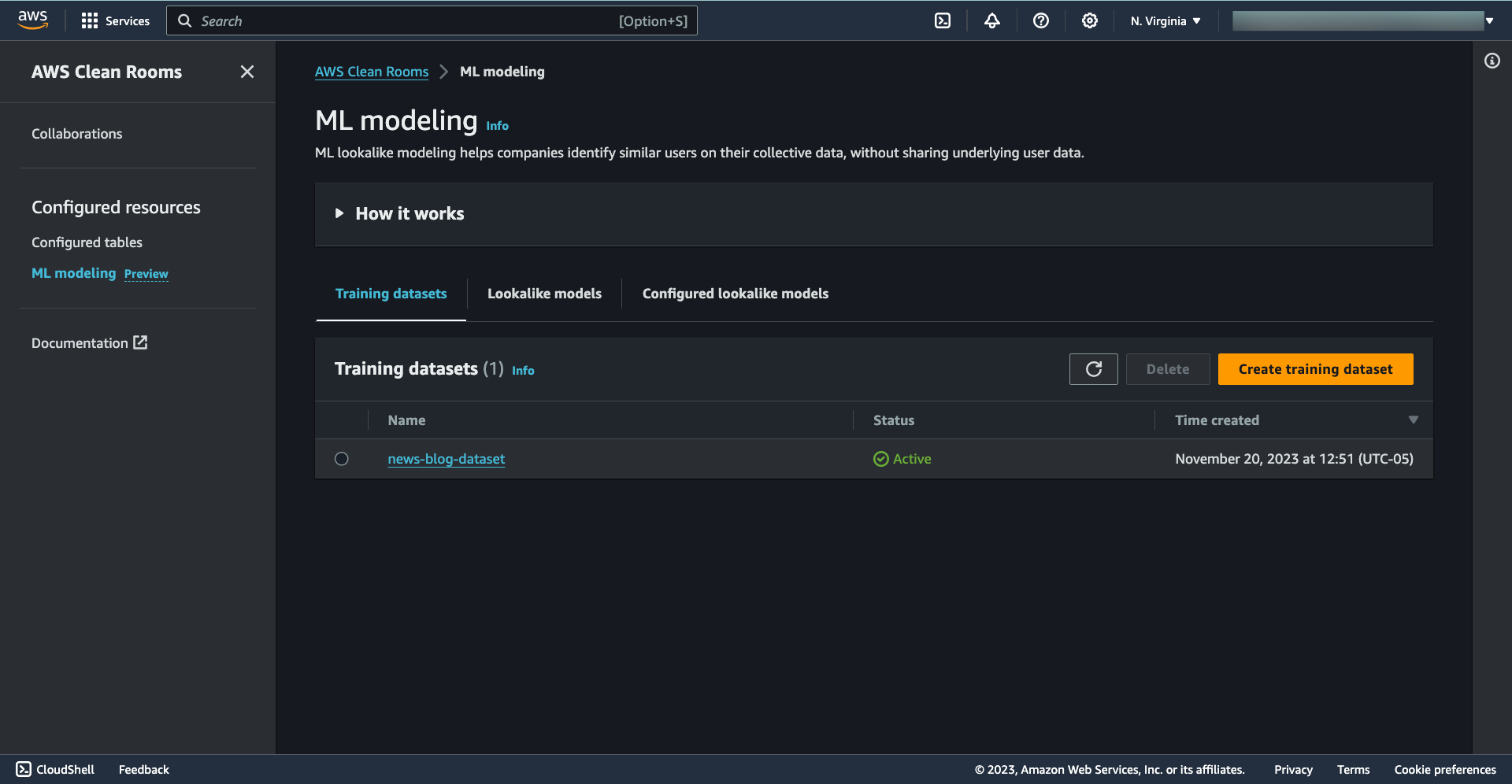

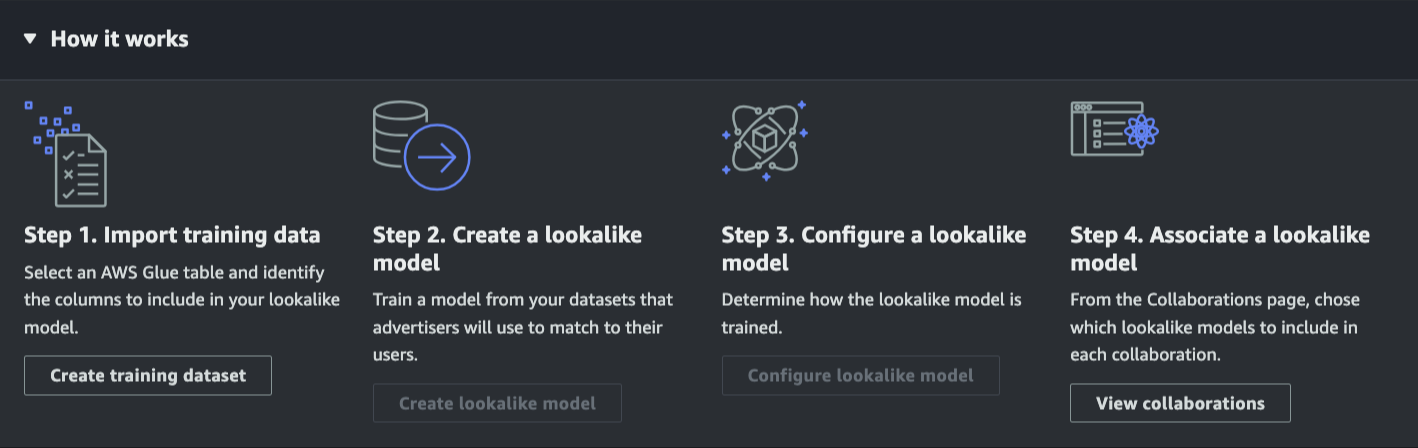

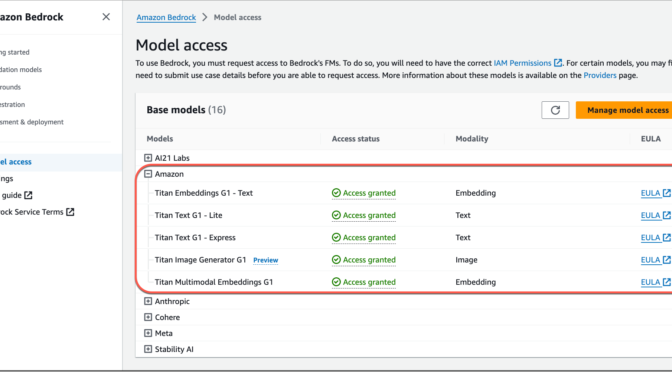

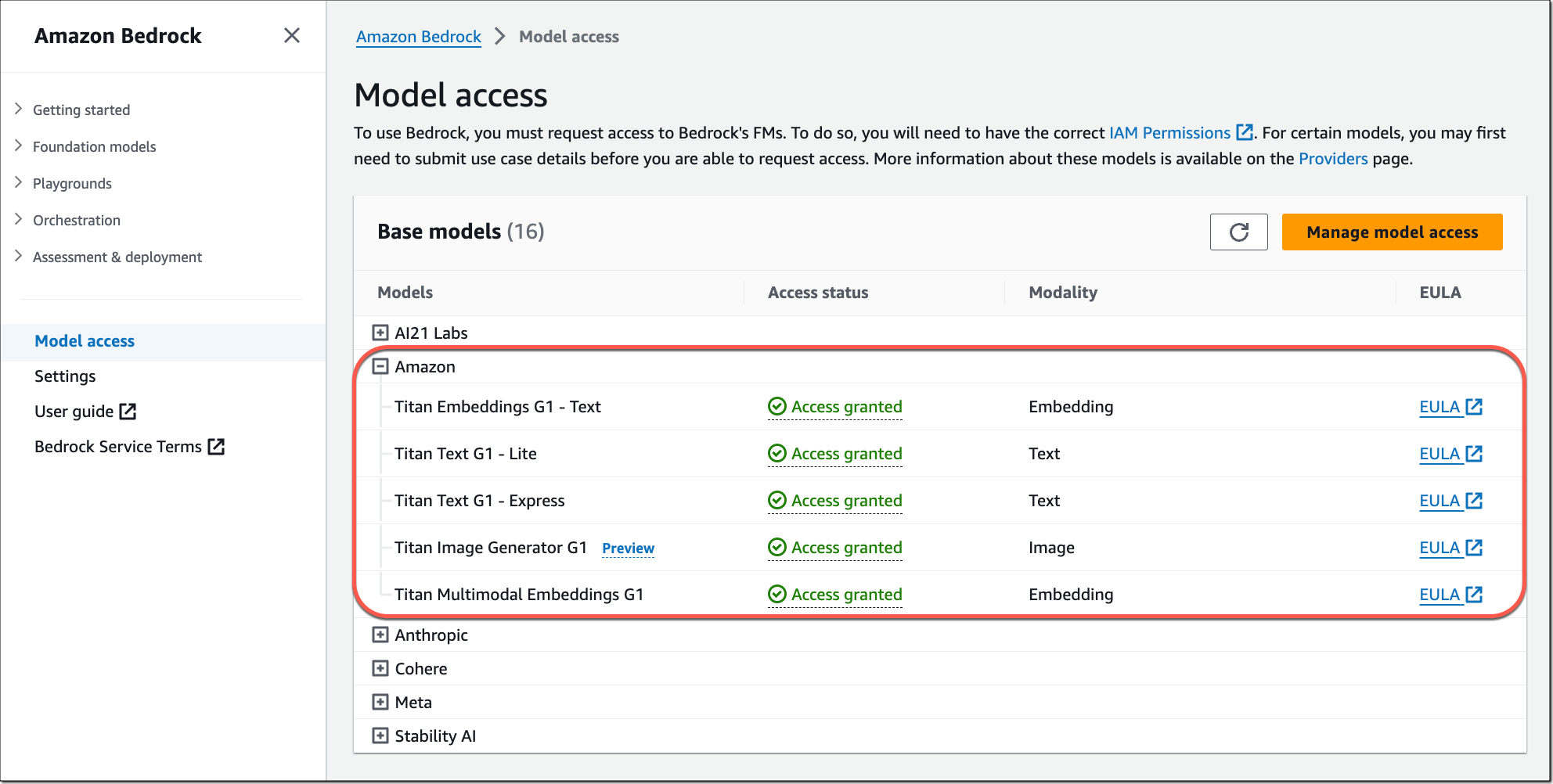

You can now describe the information you want to extract from your data in natural language, and we generate the SQL query recommendations for you. Behind the scenes, Amazon Q generative SQL uses a large language model (LLM) and Amazon Bedrock to generate the SQL query. We use different techniques, such as prompt engineering and Retrieval Augmented Generation (RAG), to query the model based on your context: the database you’re connected to, the schema you’re working on, your query history, and optionally the query history of other users connected to the same endpoint. The system also remembers previous questions. You can ask it to refine a previously generated query.

The SQL generation model uses metadata specific to your data schema to generate relevant queries. For example, it uses the table and column names and the relationship between the tables in your database. In addition, your database administrator can authorize the model to use the query history of all users in your AWS account to generate even more relevant SQL statements. We don’t share your query history with other AWS accounts and we don’t train our generation models with any data coming from your AWS account. We maintain the high level of privacy and security that you expect from us.

Using generated SQL queries helps you to get started when discovering new schemas. It does the heavy lifting of discovering the column names and relationships between tables for you. Senior analysts also benefit from asking what they want in natural language and having the SQL statement automatically generated. They can review the queries and run them directly from their notebook.

Let’s explore a schema and extract information

For this demo, let’s pretend I am a data analyst at a company that sells concert tickets. The database schema and data are available for you to download. My manager asks me to analyze the ticket sales data to send a thank you note with discount coupons to the highest-spending customers in Seattle.

I connect to Amazon Redshift Query Editor and connect to the analytic endpoint. I create a new tab for a Notebook (SQL generation is available from notebooks only).

Instead of writing a SQL statement, I open the chat panel and type, “Find the top five users from Seattle who bought the most number of tickets in 2022.” I take the time to verify the generated SQL statement. It seems correct, so I decide to run it. I select Add to notebook and then Run. The query returns the list of the top five buyers in Seattle.

I had no previous knowledge of the data schema, and I did not type a single line of SQL to find the information I needed.

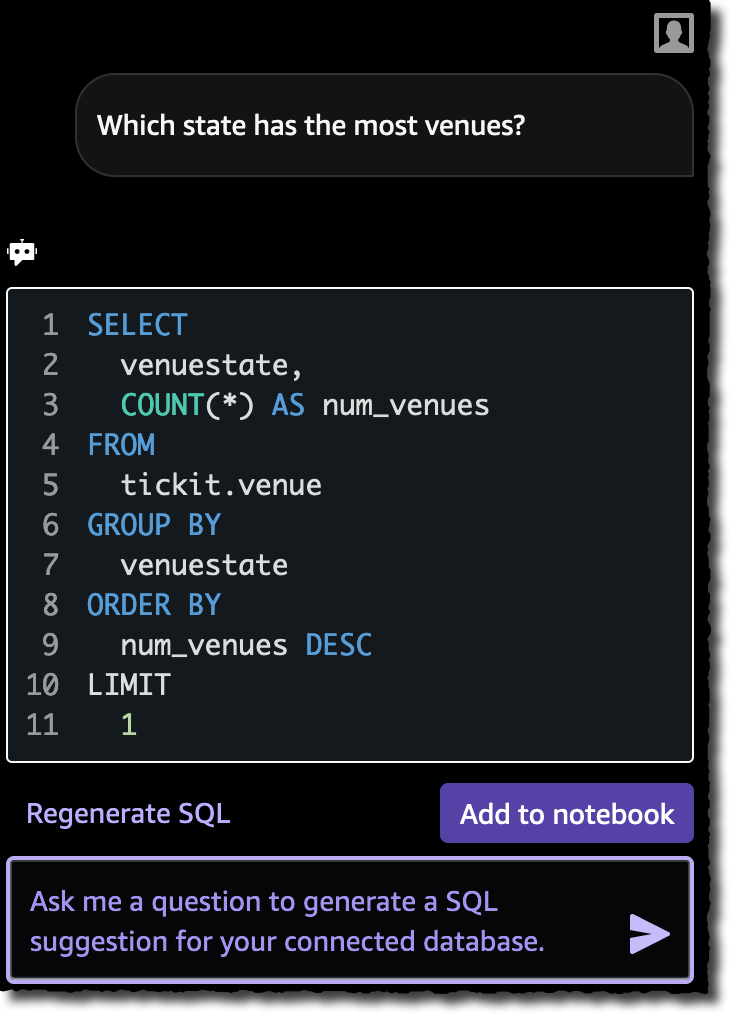
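For readers who want a feel for the kind of SQL such a prompt can translate to, here is an illustrative sketch. It is not the statement that generative SQL produced in this demo. The table and column names (`users`, `sales`, `buyerid`, `qtysold`, `saletime`) are hypothetical stand-ins for a ticket-sales schema, the rows are made up, and SQLite replaces Redshift so that the example is self-contained.

```python
import sqlite3

# Hypothetical, heavily simplified ticket-sales schema with made-up rows.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (userid INTEGER PRIMARY KEY, firstname TEXT,
                    lastname TEXT, city TEXT);
CREATE TABLE sales (salesid INTEGER PRIMARY KEY, buyerid INTEGER,
                    qtysold INTEGER, saletime TEXT);
INSERT INTO users VALUES
  (1, 'Ana', 'Lee', 'Seattle'),
  (2, 'Bo',  'Kim', 'Seattle'),
  (3, 'Cy',  'Roy', 'Portland');
INSERT INTO sales VALUES
  (1, 1, 4, '2022-03-01 10:00:00'),
  (2, 2, 6, '2022-07-15 18:30:00'),
  (3, 1, 3, '2022-11-20 09:00:00'),
  (4, 2, 9, '2021-12-31 23:00:00'),  -- outside 2022, must be ignored
  (5, 3, 8, '2022-05-05 12:00:00');  -- buyer is not from Seattle
""")

# "Find the top five users from Seattle who bought the most tickets in 2022."
TOP_BUYERS_SQL = """
SELECT u.userid, u.firstname, u.lastname, SUM(s.qtysold) AS total_tickets
FROM sales s
JOIN users u ON u.userid = s.buyerid
WHERE u.city = 'Seattle'
  AND s.saletime >= '2022-01-01' AND s.saletime < '2023-01-01'
GROUP BY u.userid, u.firstname, u.lastname
ORDER BY total_tickets DESC
LIMIT 5
"""

top_buyers = conn.execute(TOP_BUYERS_SQL).fetchall()
for row in top_buyers:
    print(row)
```

The points worth checking when you review a generated statement of this shape are the join key, the city filter, and the date range, because a mistake in any of them silently changes the ranking.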

But generative SQL is not limited to a single interaction. I can chat with it to dynamically refine the queries. Here is another example.

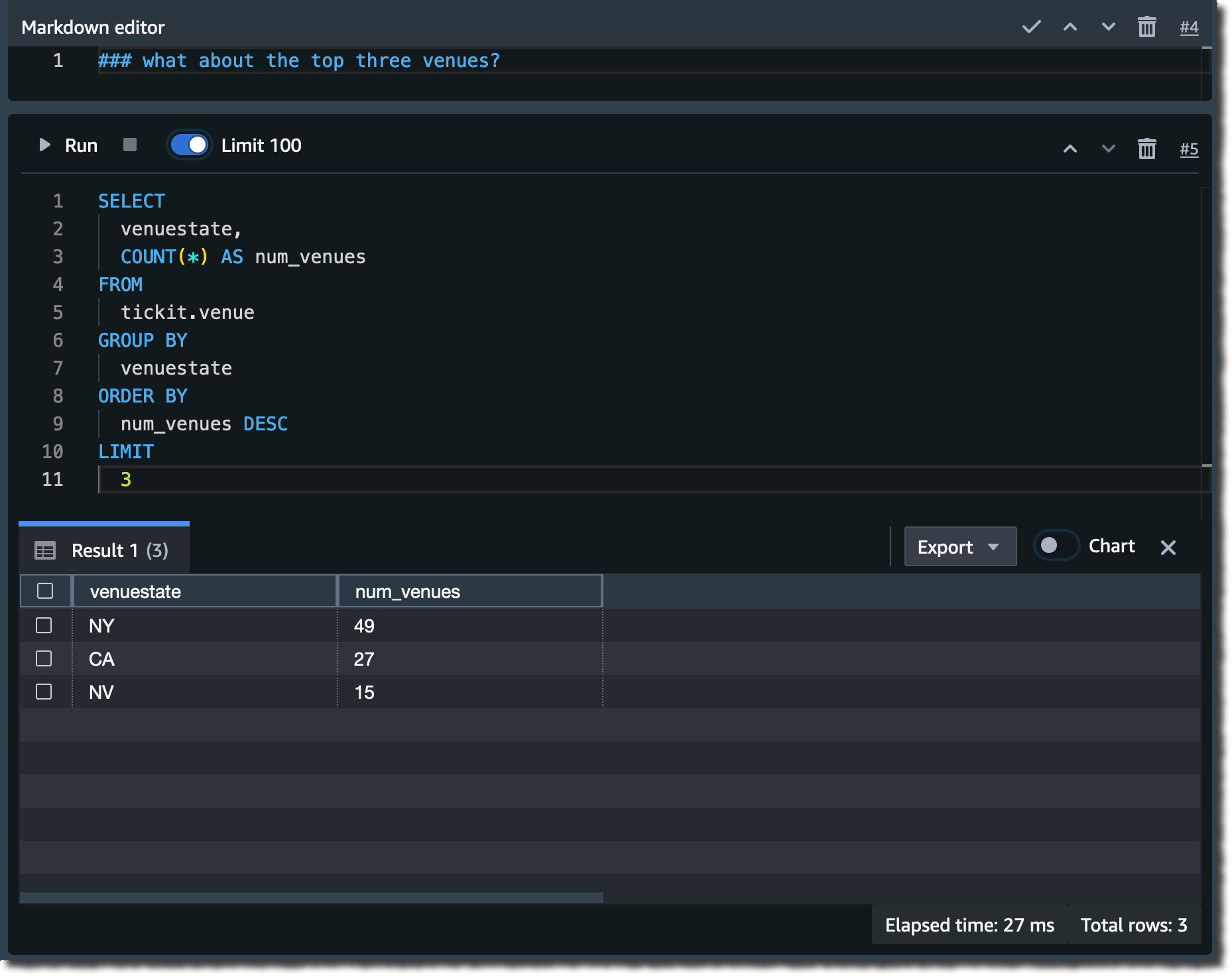

I ask “Which state has the most venues?” Generative SQL proposes the following query. The answer is New York, with 49 venues, if you’re curious.

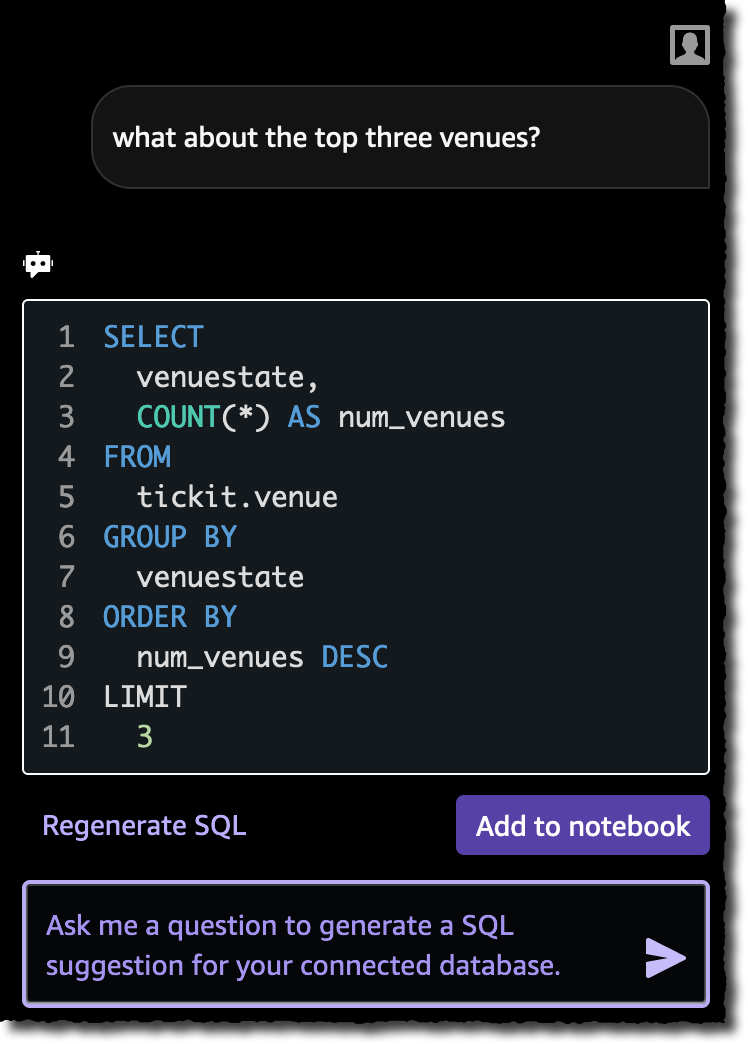

I changed my mind, and I want to know the top three cities with the most venues. I simply rephrase my question: “What about the top three venues?”

I add the query to the notebook and run it. It returns the expected result.
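The refinement from states to cities amounts to changing the grouping column of the same aggregate query. The sketch below illustrates that idea under assumptions: the `venue` table and its `venuecity` and `venuestate` columns are hypothetical names, the rows are made up, SQLite stands in for Redshift, and neither statement is the one generative SQL actually returned.

```python
import sqlite3

# Hypothetical venue table with made-up rows.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE venue (venueid INTEGER PRIMARY KEY, venuename TEXT,
                    venuecity TEXT, venuestate TEXT);
INSERT INTO venue VALUES
  (1, 'Hall A', 'New York City', 'NY'),
  (2, 'Hall B', 'New York City', 'NY'),
  (3, 'Hall C', 'Buffalo',       'NY'),
  (4, 'Hall D', 'Seattle',       'WA'),
  (5, 'Hall E', 'Seattle',       'WA'),
  (6, 'Hall F', 'Portland',      'OR');
""")

# First question: which state has the most venues?
TOP_STATE_SQL = """
SELECT venuestate, COUNT(*) AS venue_count
FROM venue
GROUP BY venuestate
ORDER BY venue_count DESC
LIMIT 1
"""

# Follow-up: same aggregate, grouped by city, keeping the top three.
# The secondary sort on venuecity makes ties deterministic.
TOP_CITIES_SQL = """
SELECT venuecity, venuestate, COUNT(*) AS venue_count
FROM venue
GROUP BY venuecity, venuestate
ORDER BY venue_count DESC, venuecity
LIMIT 3
"""

top_state = conn.execute(TOP_STATE_SQL).fetchone()
top_cities = conn.execute(TOP_CITIES_SQL).fetchall()
print(top_state)
print(top_cities)
```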

Best practices for prompting

Here are a few tips and tricks to get the best results out of your prompts.

Be specific – When asking questions in natural language, be as specific as possible to help the system understand exactly what you need. For example, instead of writing “find the top venues that sold the most tickets,” provide more details like “find the names of the top three venues that sold the most tickets in 2022.” Use consistent entity names like venue, ticket, and location instead of referring to the same entity in different ways, which can confuse the system.

Iterate – Break your complex requests into multiple simple statements that are easier for the system to interpret. Iteratively ask follow-up questions to get more detailed analysis from the system. For example, start by asking, “Which state has the most venues?” Then, based on the response, ask a follow-up question like “Which is the most popular venue from this state?”

Verify – Review the generated SQL before running it to ensure accuracy. If the generated SQL query has errors or does not match your intent, provide instructions to the system on how to correct it instead of rephrasing the entire request. For example, if the query is missing a filter clause on year, write “provide venues from year 2022.”

Availability and pricing

AI-driven scaling and optimizations are in preview in six AWS Regions: US East (Ohio, N. Virginia), US West (Oregon), Asia Pacific (Tokyo), and Europe (Ireland, Stockholm). They come at no additional cost. You pay only for the compute capacity your data warehouse consumes when it is active. Pricing is per Redshift Processing Unit (RPU) per hour. The billing is per second of used capacity. The pricing page for Amazon Redshift has the details.
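As a worked example of per-RPU-hour pricing with per-second billing, here is a small sketch of the arithmetic. The price used below is a hypothetical placeholder, not an actual AWS rate, and the function ignores any minimum charge or other rule described on the pricing page.

```python
def serverless_compute_cost(
    rpus: int, active_seconds: int, price_per_rpu_hour: float
) -> float:
    """Estimate compute cost for a workgroup running at a fixed RPU level.

    Billing is per second of used capacity, so the active seconds are
    converted to RPU-hours and multiplied by the hourly RPU price.
    """
    rpu_hours = rpus * active_seconds / 3600
    return rpu_hours * price_per_rpu_hour


# Hypothetical price per RPU-hour; check the pricing page for your Region.
HYPOTHETICAL_PRICE_PER_RPU_HOUR = 0.40

# 32 RPUs active for 450 seconds = 4 RPU-hours.
cost = serverless_compute_cost(
    rpus=32,
    active_seconds=450,
    price_per_rpu_hour=HYPOTHETICAL_PRICE_PER_RPU_HOUR,
)
print(f"{cost:.2f}")
```

Because capacity can change while a workload runs, a real estimate would add up one such term per interval at each capacity level.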

Amazon Q generative SQL for Amazon Redshift Query Editor is in preview in two AWS Regions today: US East (N. Virginia) and US West (Oregon). There is no charge during the preview period.

These are two examples of how AI helps to optimize performance and increase your productivity, either by automatically adjusting the price-performance ratio of your Amazon Redshift Serverless endpoints or by generating correct SQL statements from natural language prompts.

Previews are essential for us to capture your feedback before we make these capabilities available to everyone. Experiment with them today and let us know what you think on the re:Post forums or by using the feedback button at the bottom left of the console.