Today we’re adding to Amazon FSx for OpenZFS the capability to send a snapshot from a file system to another file system in your account.

You can trigger the copy with one single API call or CLI command, and we take care of the rest. You don’t need to use commands like rsync and monitor the state of the transfer. The service takes care of the copy on your behalf. It manages potential network interruptions and retries automatically until the transfer completes. It transfers data incrementally at block level using OpenZFS’s native send and receive capabilities.

This new capability helps you to maintain agility by, for example, allowing quicker and easier creation of testing and development environments, and performance improvements by simplifying the management of read replicas to provide scale-out performance.

Amazon FSx for OpenZFS is a fully managed file storage service that lets you launch, run, and scale fully managed file systems built on the open source OpenZFS file system. FSx for OpenZFS makes it easy to migrate your on-premises ZFS file servers without changing your applications or how you manage data and to build new high-performance, data-intensive applications on the cloud.

Snapshots are one of the most powerful features of ZFS file systems. A snapshot is a read-only copy of a file system or volume. Snapshots can be created almost instantly and initially consume no additional disk space within the storage pool. When a snapshot is created, its space is initially shared between the snapshot and the file system and possibly with previous snapshots. As the file system changes, space that was previously shared becomes unique to the snapshot. The snapshot consumes incremental disk space by continuing to reference the old data and so prevents the space from being freed. Snapshots can be rolled back on-demand and almost instantly, even on very large file systems. Snapshots can also be cloned to form new volumes.

Snapshots are block-level copies. They are more efficient to transfer than traditional file-level copies, where the system must sometimes traverse millions of files to detect the ones that changed. Transferring an incremental snapshot is also more efficient than transferring an incremental file-based copy because snapshots are incremental at block level. They only contain blocks modified since the last snapshot.

On-demand replication of ZFS snapshots allows the transfer of terabytes of data using the native send and receive capability of OpenZFS without having to worry about the underlying infrastructure. We detect and manage network interruptions and other types of errors for you, making it easier for you to replicate data across file systems.

There are two main use cases where you might want to use this new capability.

Developers and quality assurance (QA) engineers might send on-demand snapshots to development and testing environments. It allows them to work with production data, ensuring accurate testing and development outcomes. The use of recent snapshots as consistent starting points for testing enhances the efficiency of the development and testing processes.

Data engineers might use on-demand replication to run parallel experiments on a dataset. Imagine your application processes a large dataset. You want to run multiple versions of your data processing algorithm on the same base dataset to find the best tuning for your use case. With on-demand data replication, you can create multiple identical copies of your file system and run each experiment in parallel.

Let’s see how it works

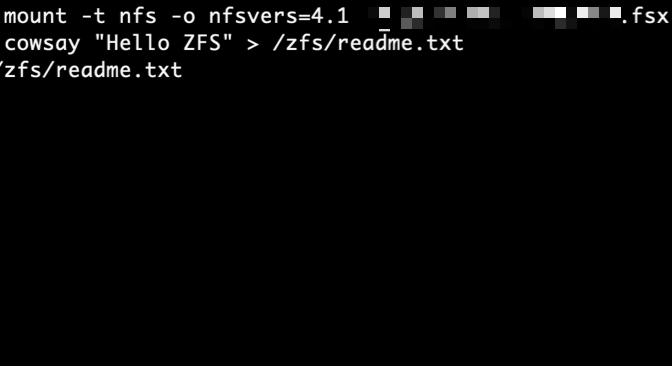

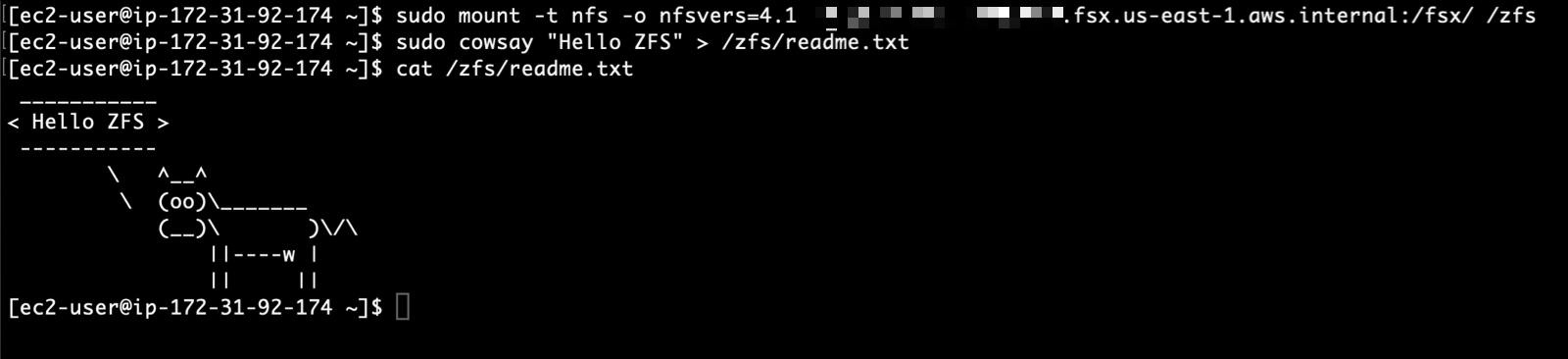

To prepare this demo, I use the FSx for OpenZFS section of the AWS Management Console. First, I create two Amazon FSx for OpenZFS volumes. Then, I mount the two file systems on one Amazon Linux instance (/zfs-filesystem1 and /zfs-filesystem2). I prepare a file on the first volume, and I expect to find the same file on the second volume after an on-demand replication.

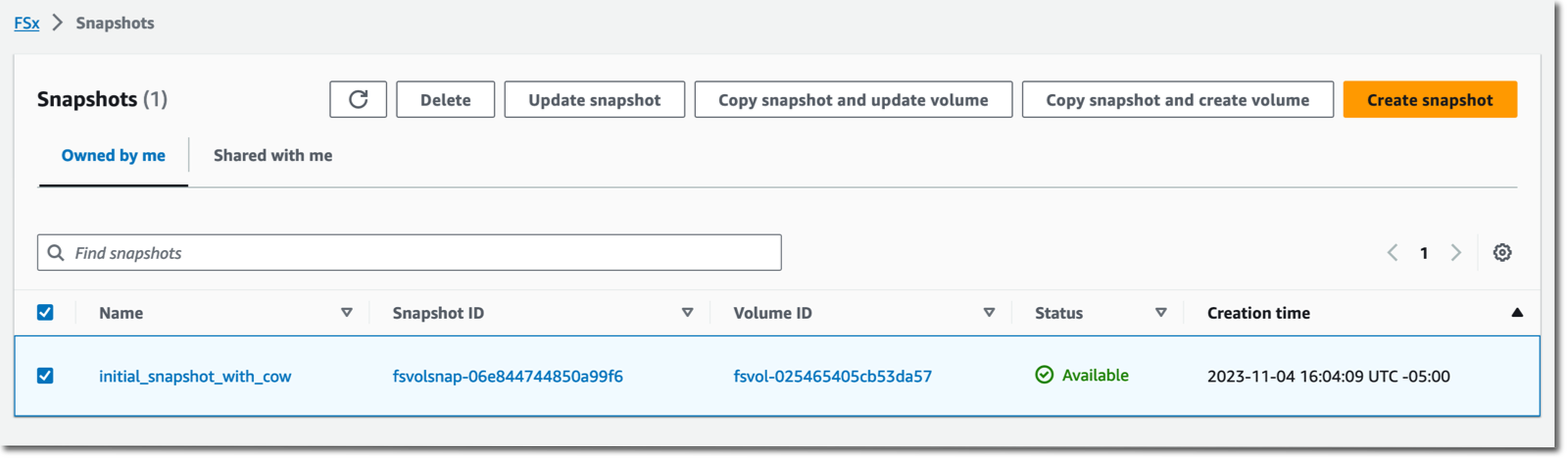

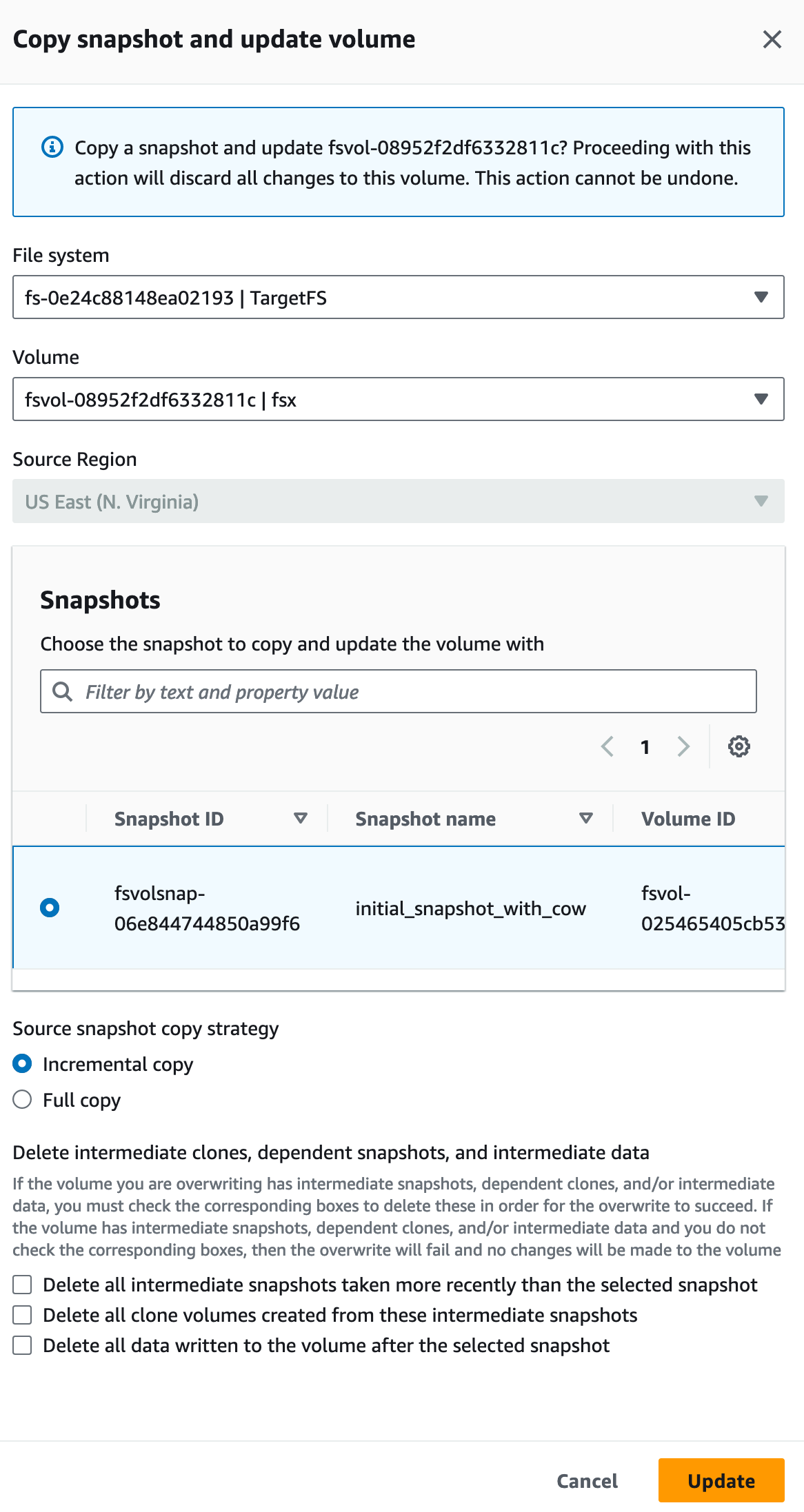

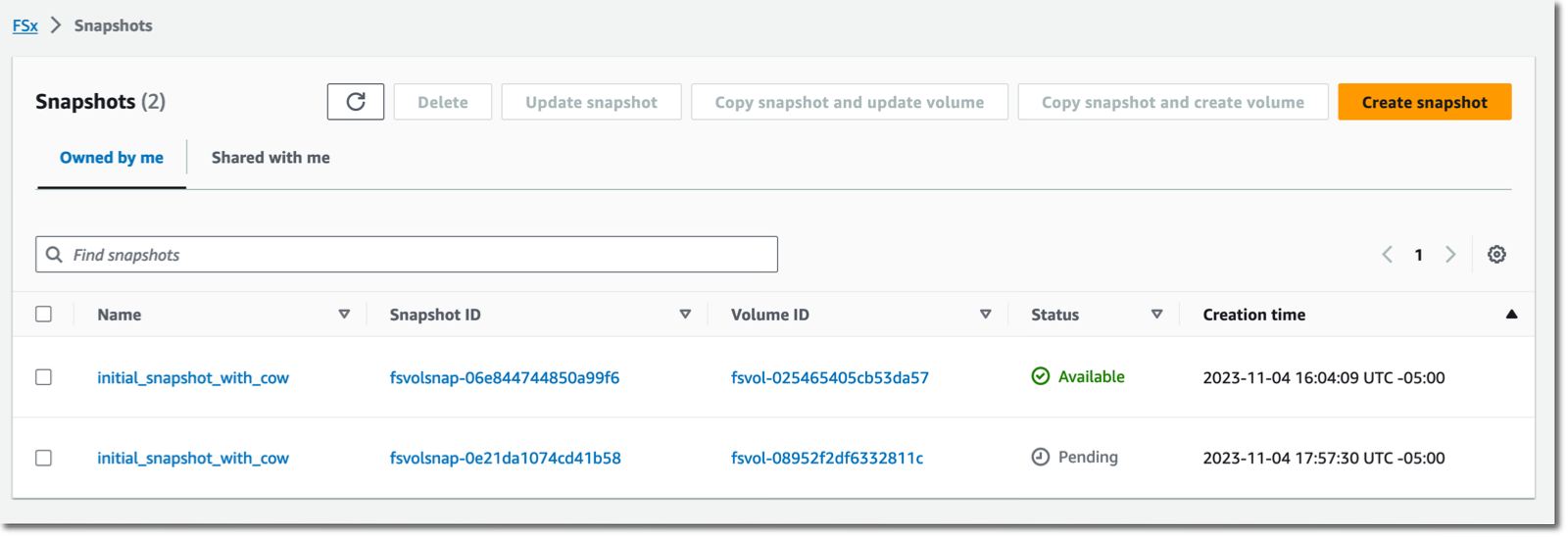

To synchronize data between my two volumes, I navigate to the snapshot section of the console. Then I select Copy snapshot and update volume. I also have the option to copy the snapshot to a new ZFS volume.

On the Copy snapshot and update volume page, I select the destination File system and Volume. I also confirm the source snapshot. I choose the Source snapshot copy strategy, either requesting a full copy or an incremental copy. When ready, I select Update.

After a while—how long depends on the amount of data to transfer—I observe a new snapshot listed on the destination volume. In my demo scenario, it just takes a few seconds.

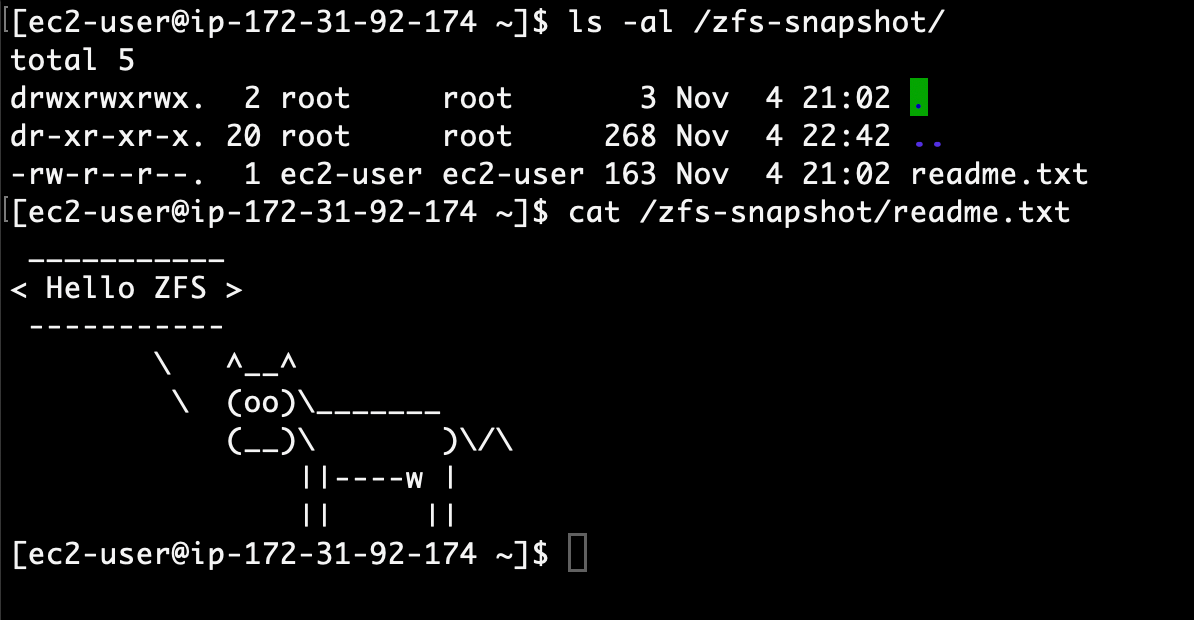

I return to my Linux instance and list the content available in my second mount point /zfs-snapshot. I am happy to see my cow ASCII art on the second file system

.

.

Alternatively, I can automate on-demand transfers using the new FSx APIs: CopySnapshotAndUpdateVolume and CopySnapshotAndCreateVolume.

To set up an ongoing periodic replication, I use the provided CloudFormation template to create an automated replication schedule. When deployed, the system periodically takes a snapshot of the volume on the source file system and performs an incremental replication to a volume on the destination file system. For example, I could schedule replication to a development file system to happen once every 15 minutes for testing purposes.

Pricing and availability

This new capability is available in all AWS Regions where FSx for OpenZFS is available.

It comes at no additional cost. AWS charges the usual fees for network data transfer between Availability Zones.

You pay standard FSx for OpenZFS charges for the amount of storage used by the remote file system.

The new on-demand replication for Amazon FSx for OpenZFS allows you to efficiently transfer incremental file system snapshots to a new volume on your account. It allows developers and QA engineers to work with copies of production data and data engineers to run parallel experiments on datases.

Now go build and configure your first on-demand replication today!