Today, we are announcing the availability of Amazon ElastiCache Serverless, a new serverless option that allows customers to create a cache in under a minute and instantly scale capacity based on application traffic patterns. ElastiCache Serverless is compatible with two popular open-source caching solutions, Redis and Memcached.

You can use ElastiCache Serverless to operate a cache for even the most demanding workloads without spending time in capacity planning or requiring caching expertise. ElastiCache Serverless constantly monitors your application’s memory, CPU, and network resource utilization and scales instantly to accommodate changes to the access patterns of workloads it serves. You can create a highly available cache with data automatically replicated across multiple Availability Zones and up to 99.99 percent availability Service Level Agreement (SLA) for all workloads, which saves you time and money.

Customers wanted to get radical simplicity to deploy and operate a cache. ElastiCache Serverless offers a simple endpoint experience abstracting the underlying cluster topology and cache infrastructure. You can reduce application complexity and have more operational excellence without handling reconnects and rediscovering nodes.

With ElastiCache Serverless, there are no upfront costs, and you pay for only the resources you use. You pay for the amount of cache data storage and ElastiCache Processing Units (ECPUs) resources consumed by your applications.

Getting started with Amazon ElastiCache Serverless

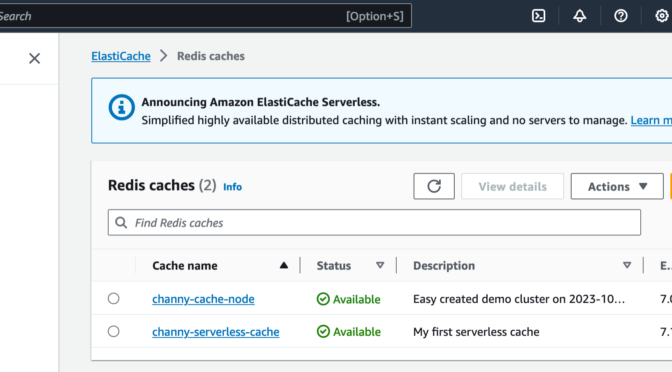

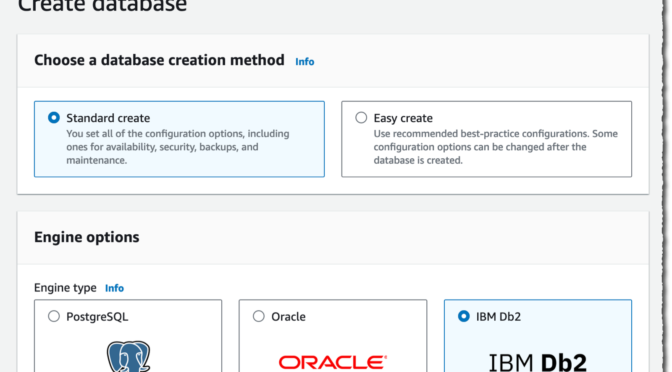

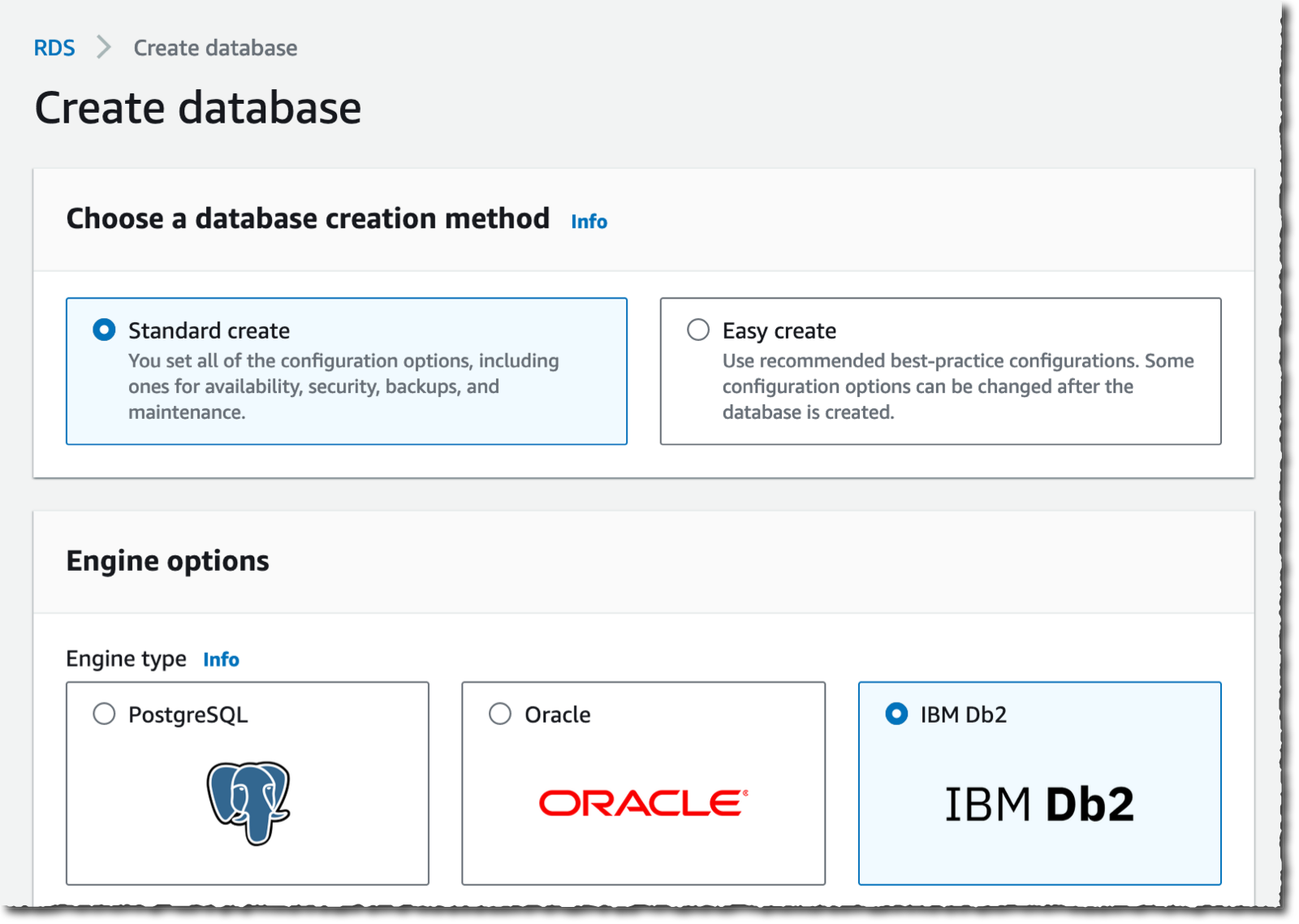

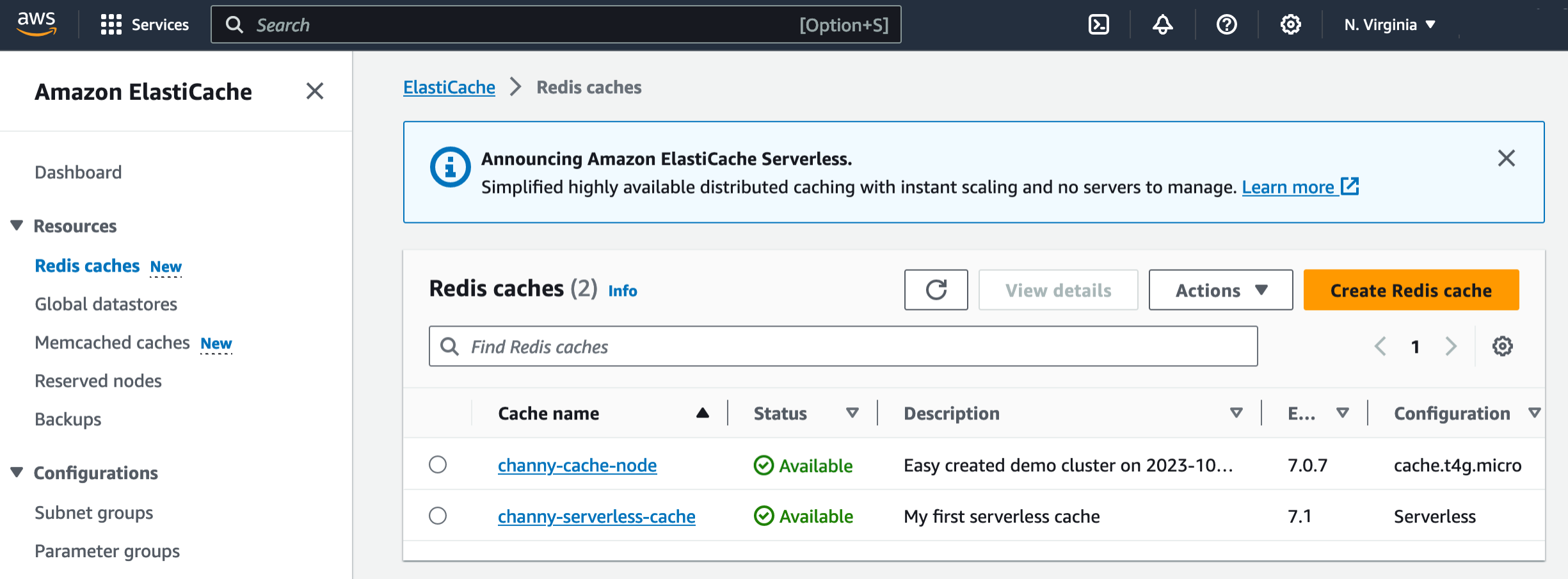

To get started, go to the ElastiCache console and choose Redis caches or Memcached caches in the left navigation pane. ElastiCache Serverless supports engine versions of Redis 7.1 or higher and Memcached 1.6 or higher.

For example, in the case of Redis caches, choose Create Redis cache.

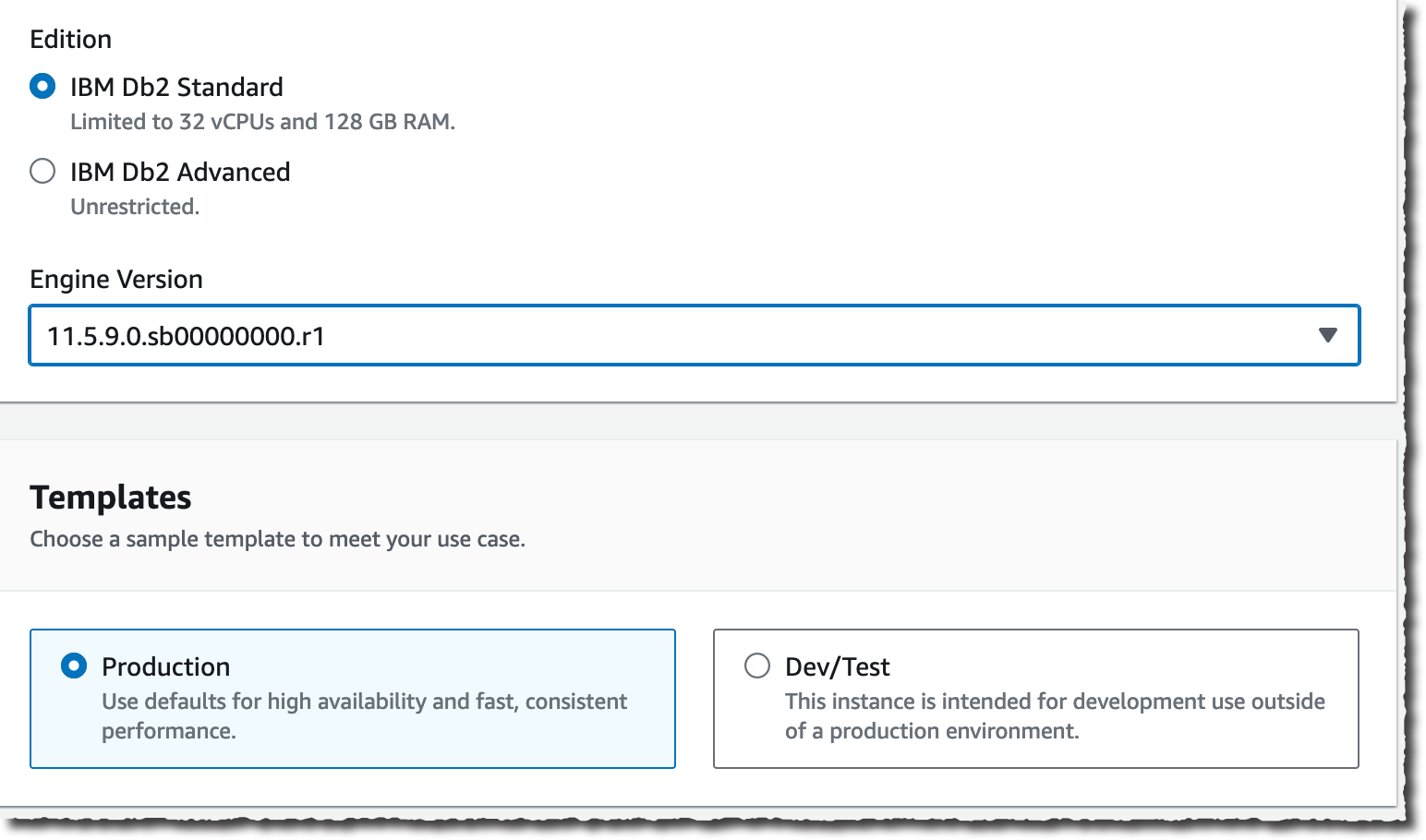

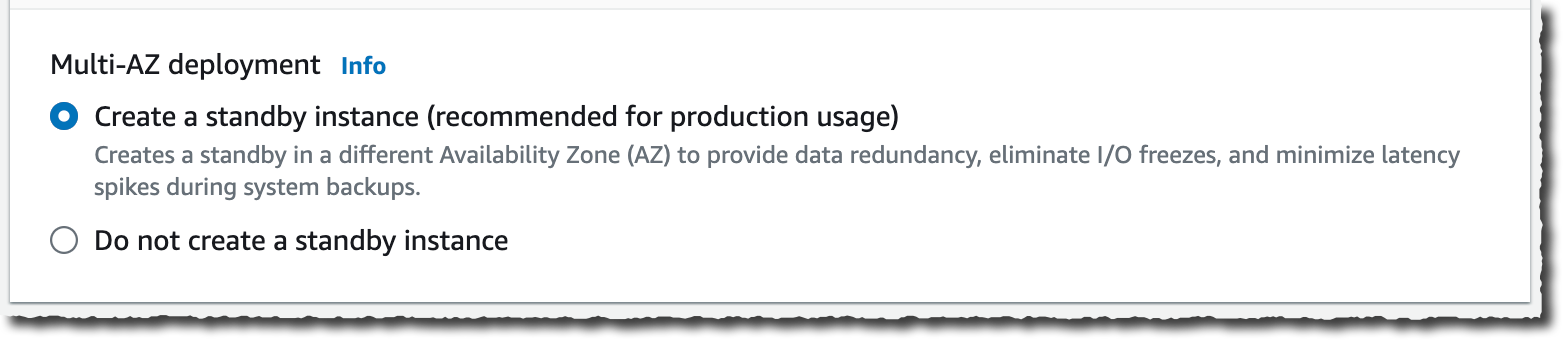

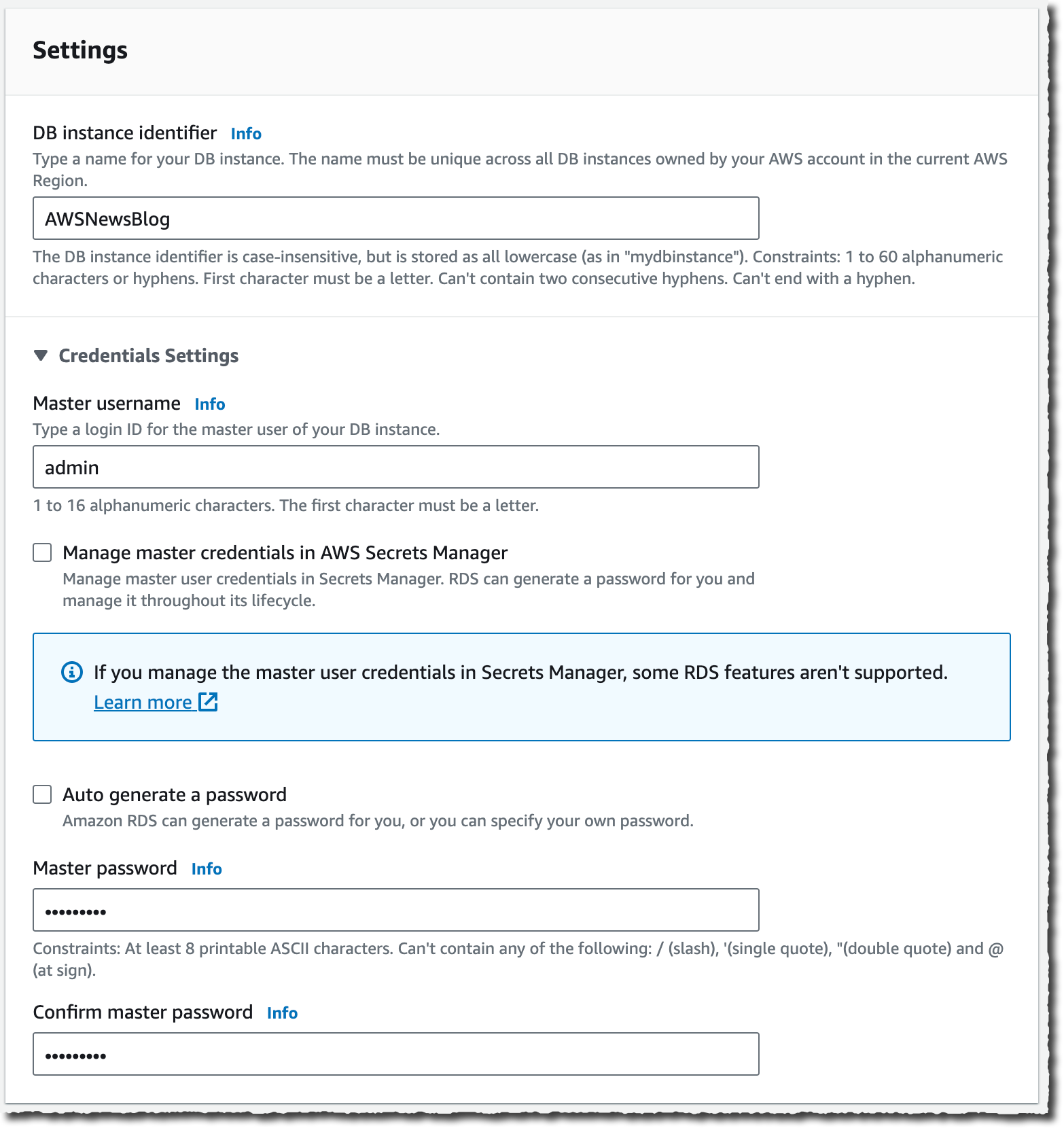

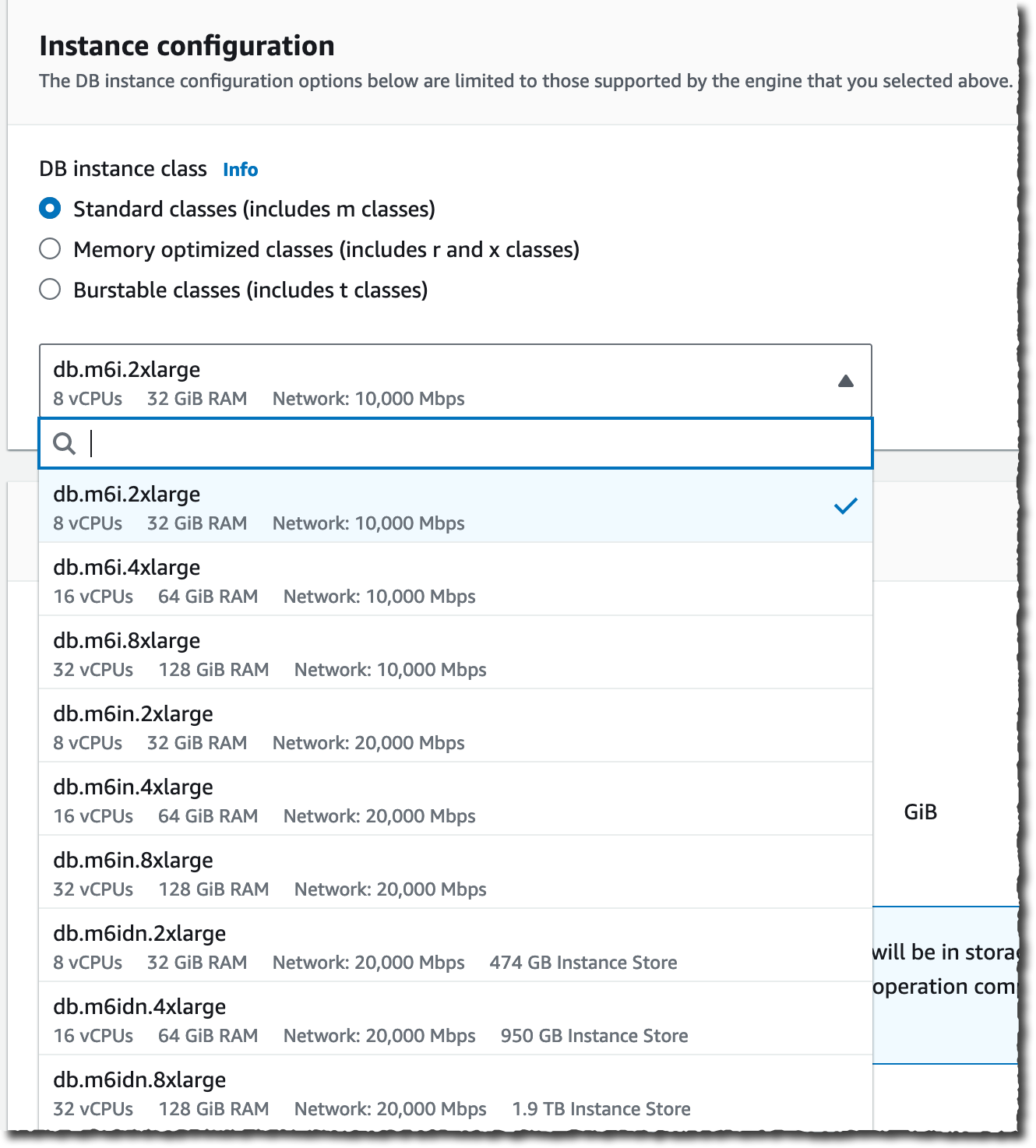

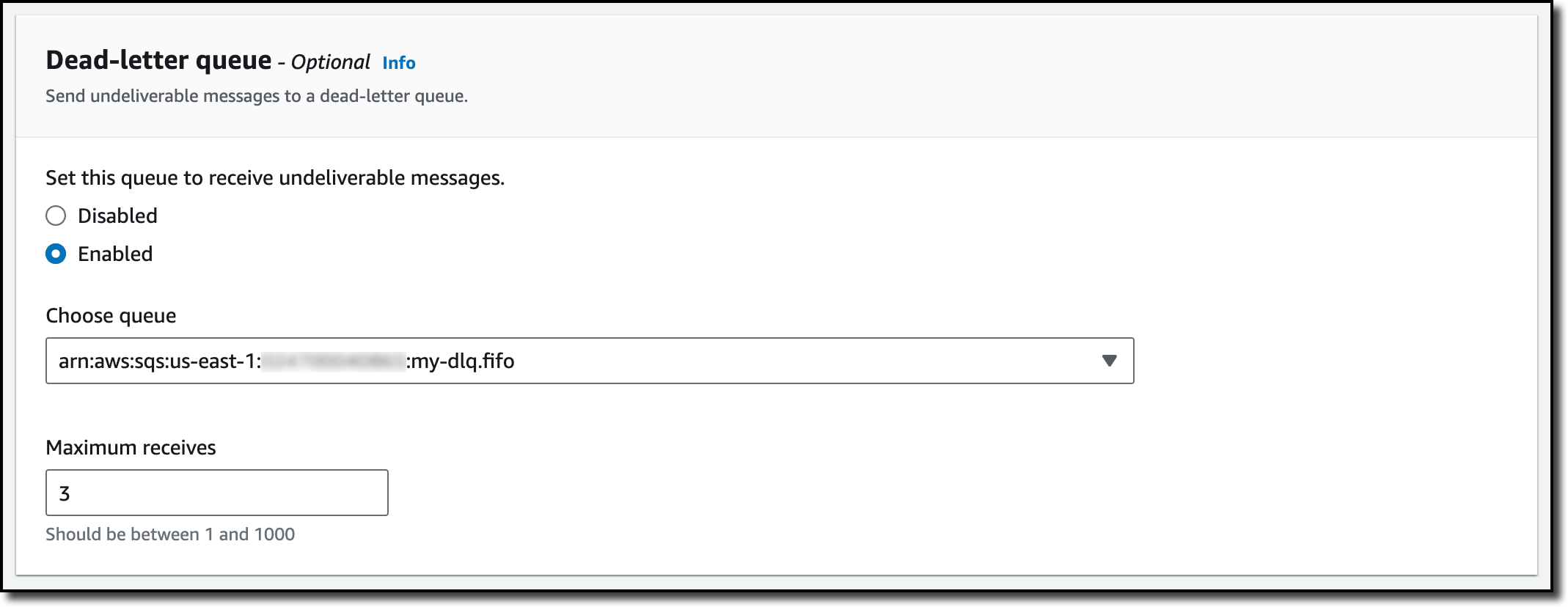

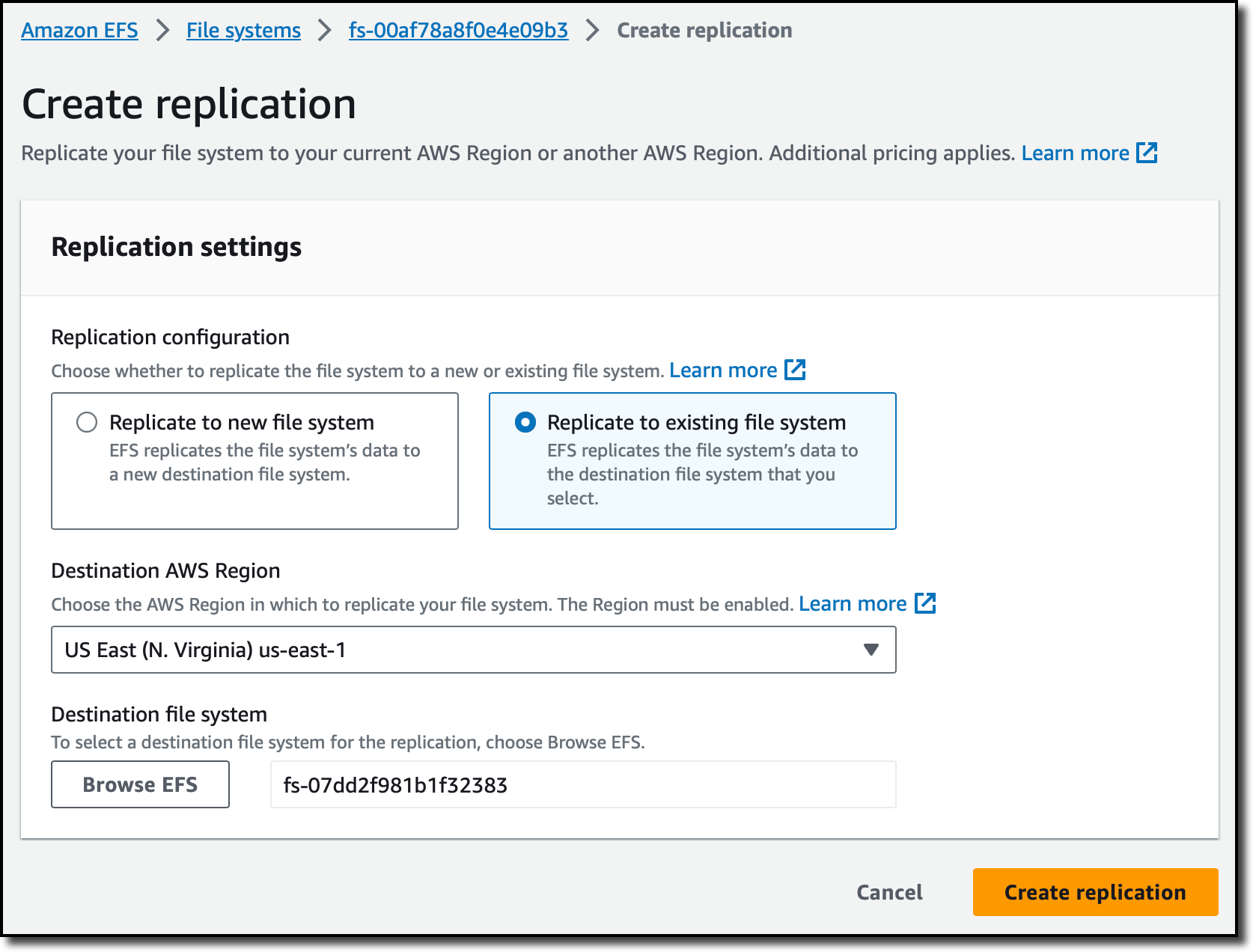

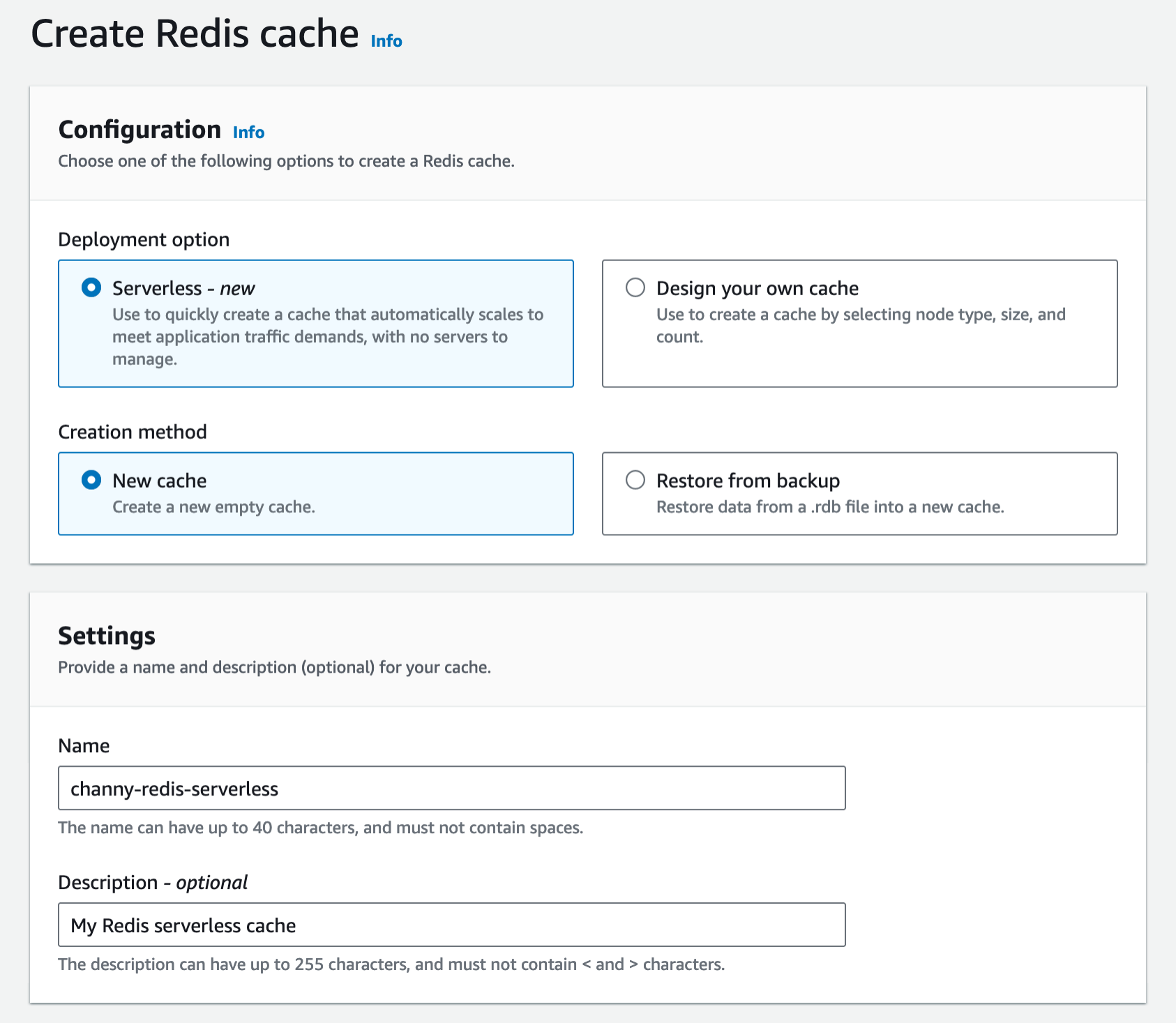

You see two deployment options: either Serverless or Design your own cache to create a node-based cache cluster. Choose the Serverless option, the New cache method, and provide a name.

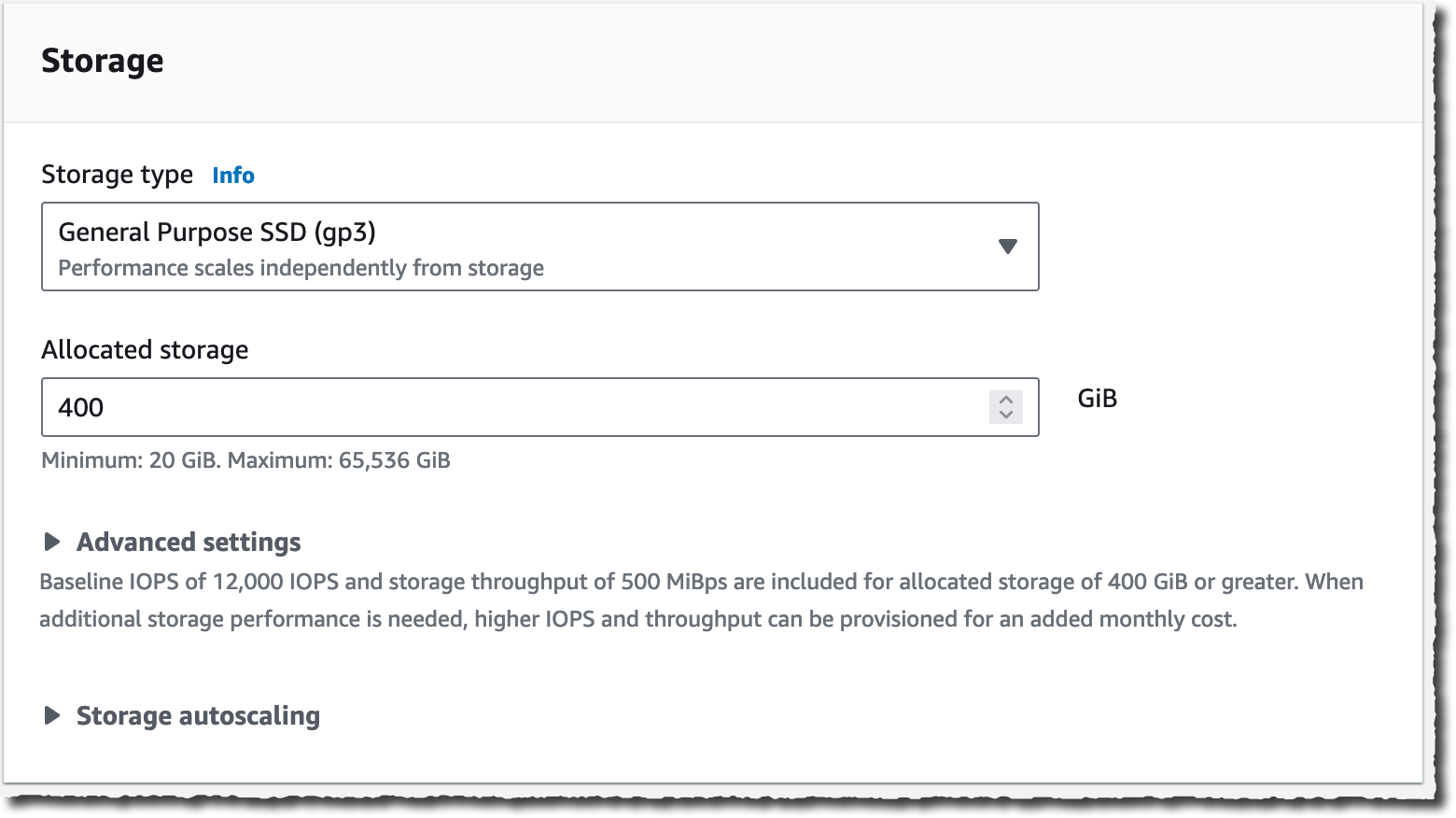

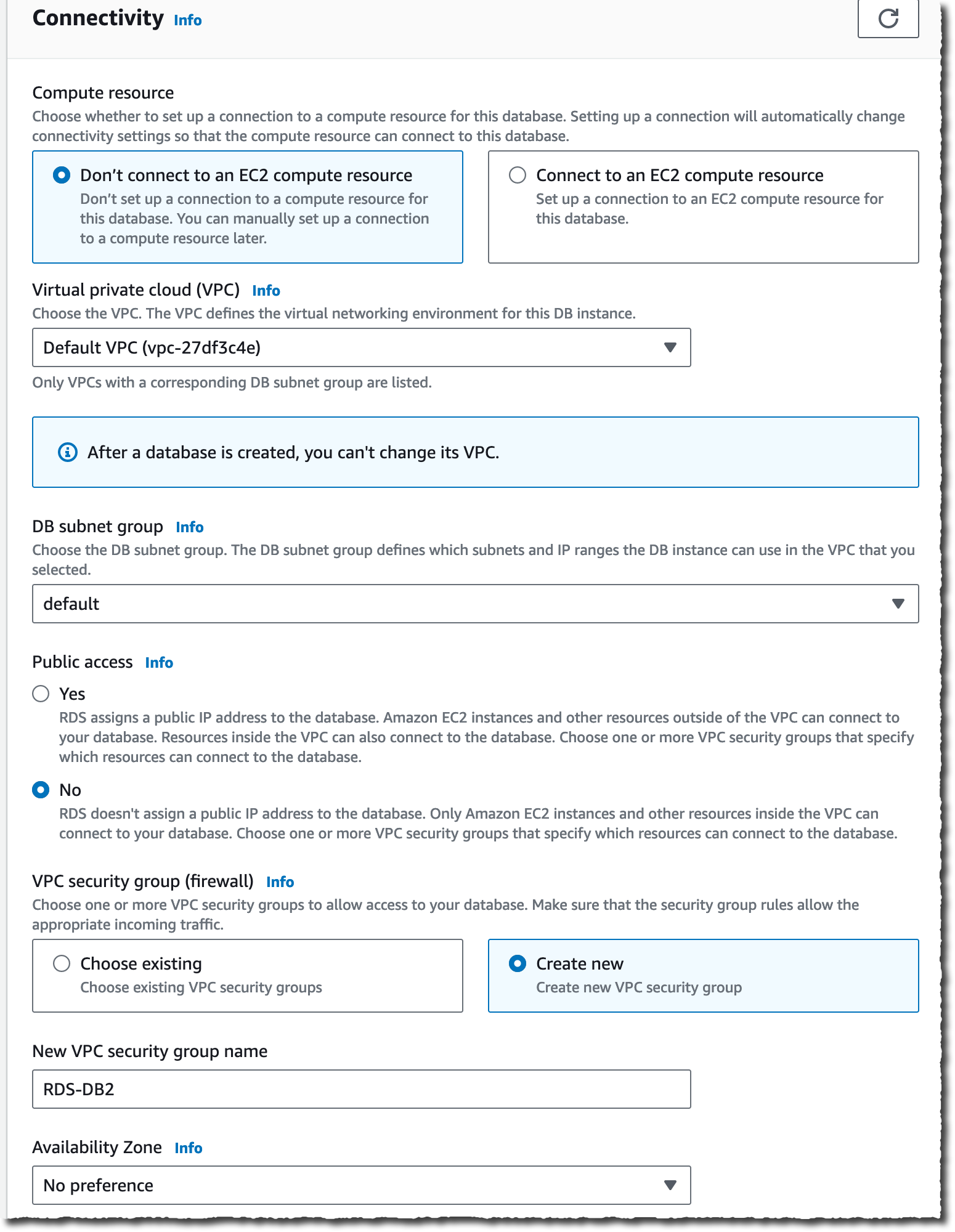

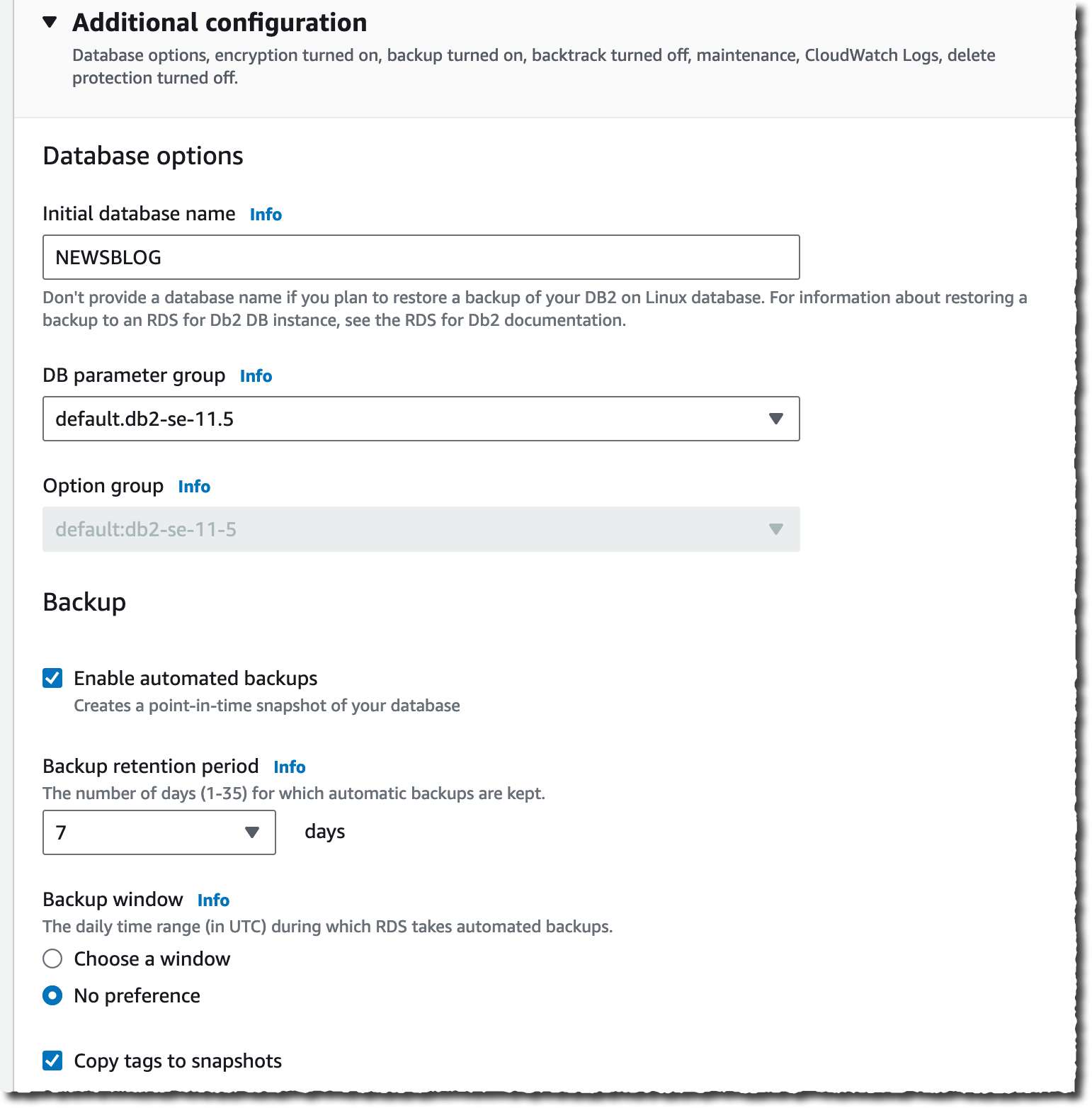

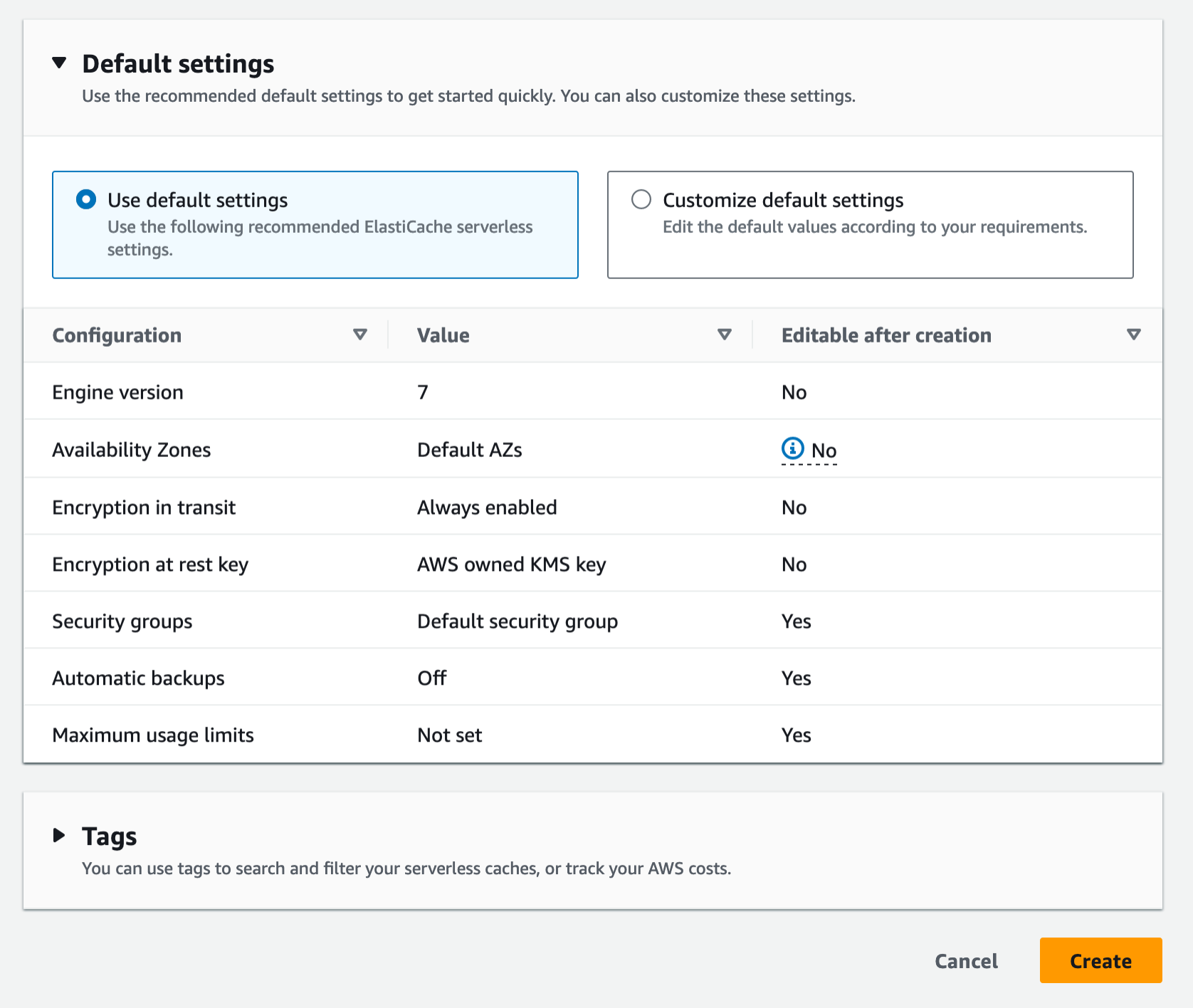

Use the default settings to create a cache in your default VPC, Availability Zones, service-owned encryption key, and security groups. We will automatically set recommended best practices. You don’t have to enter any additional settings.

If you want to customize default settings, you can set your own security groups, or enable automatic backups. You can also set maximum limits for your compute and memory usage to ensure your cache doesn’t grow beyond a certain size. When your cache reaches the memory limit, keys with a time to live (TTL) are evicted according to the least recently used (LRU) logic. When your compute limit is reached, ElastiCache will throttle requests, which will lead to elevated request latencies.

When you create a new serverless cache, you can see the details of settings for connectivity and data protection, including an endpoint and network environment.

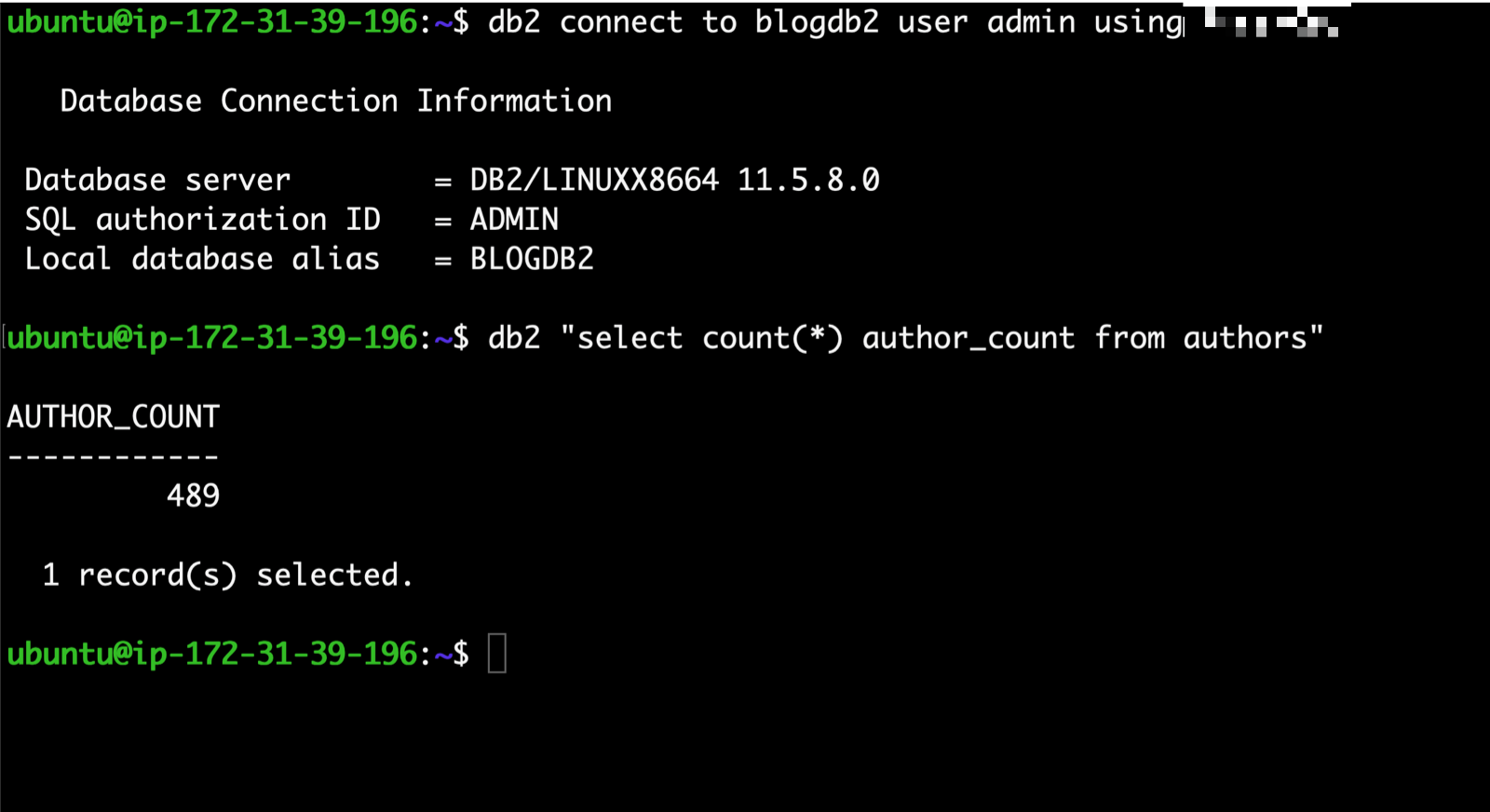

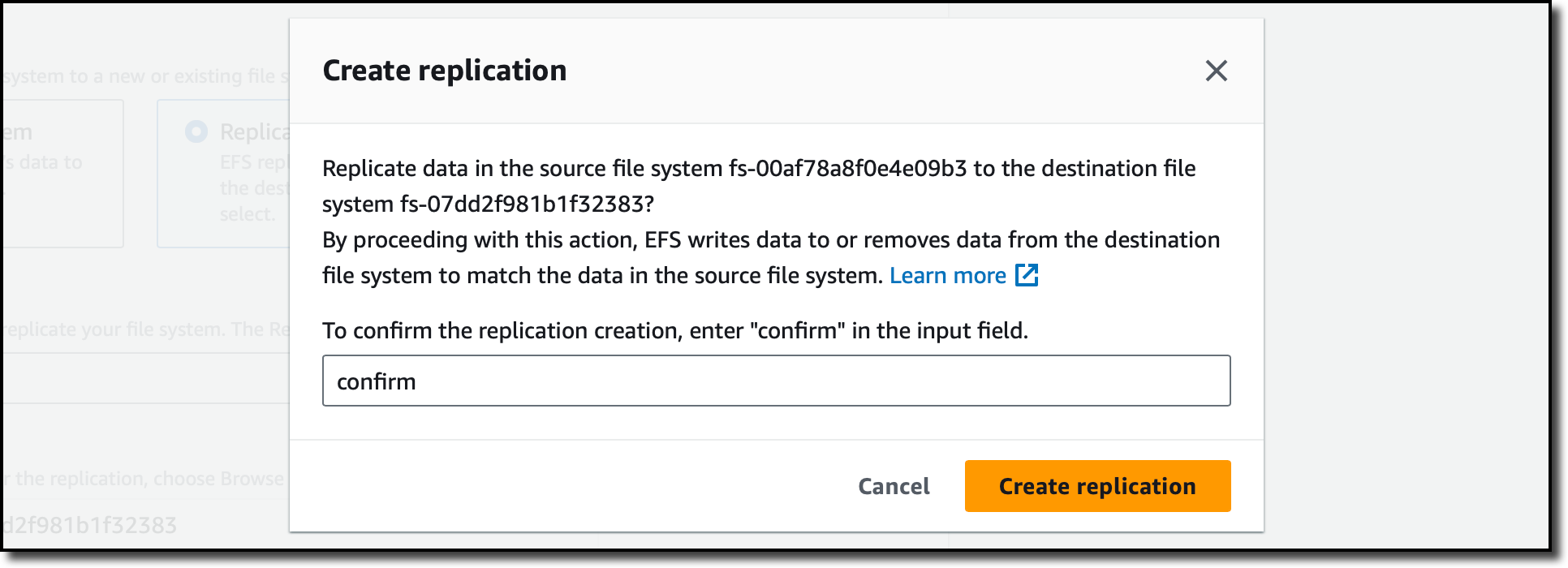

Now, you can configure the ElastiCache Serverless endpoint in your application and connect using any Redis client that supports Redis in cluster mode, such as redis-cli.

$ redis-cli -h channy-redis-serverless.elasticache.amazonaws.com --tls -c -p 6379

set x Hello

OK

get x

"Hello"You can manage the cache using AWS Command Line Interface (AWS CLI) or AWS SDKs. For more information, see Getting started with Amazon ElastiCache for Redis in the AWS documentation.

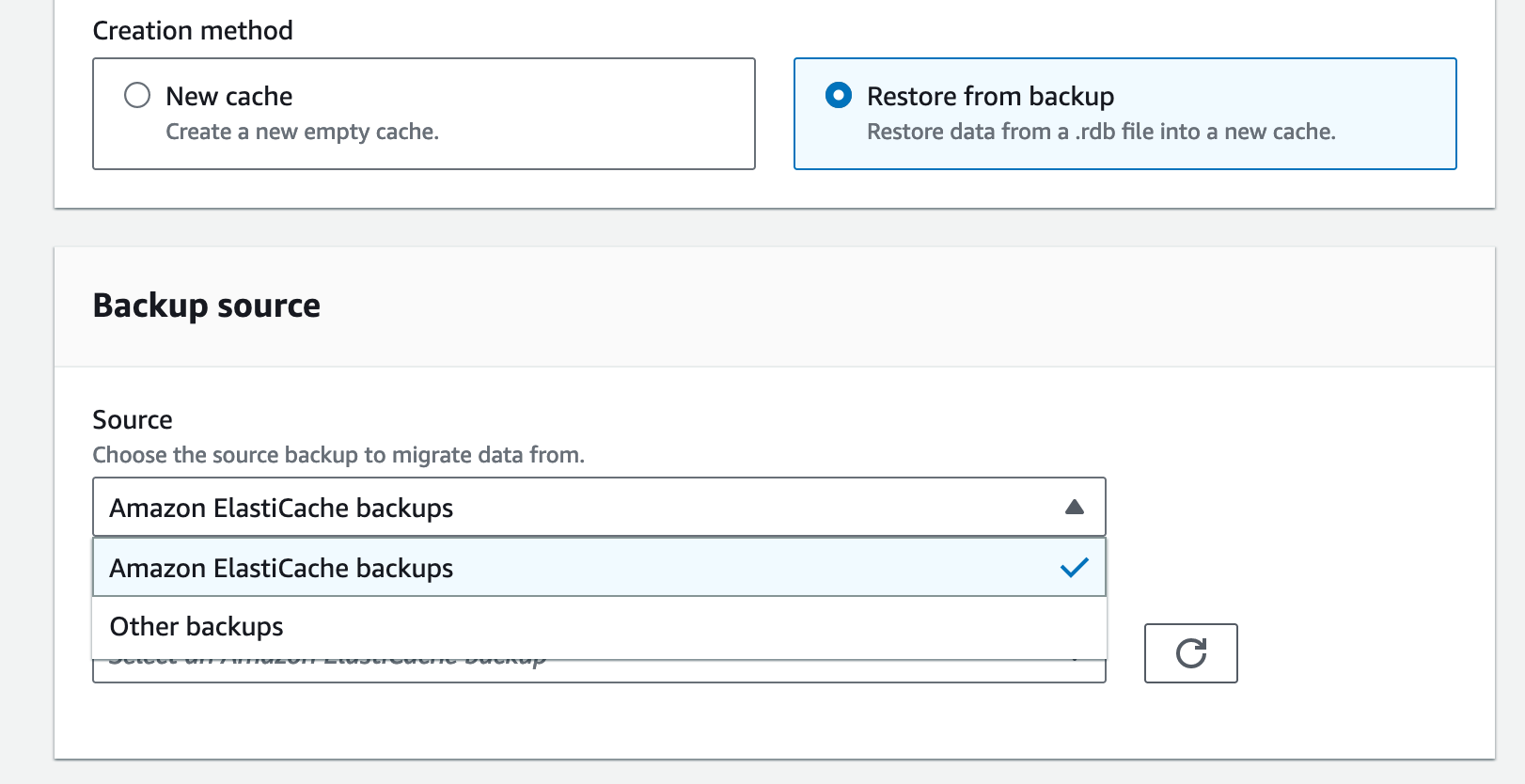

If you have an existing Redis cluster, you can migrate your data to ElastiCache Serverless by specifying the ElastiCache backups or Amazon S3 location of a backup file in a standard Redis rdb file format when creating your ElastiCache Serverless cache.

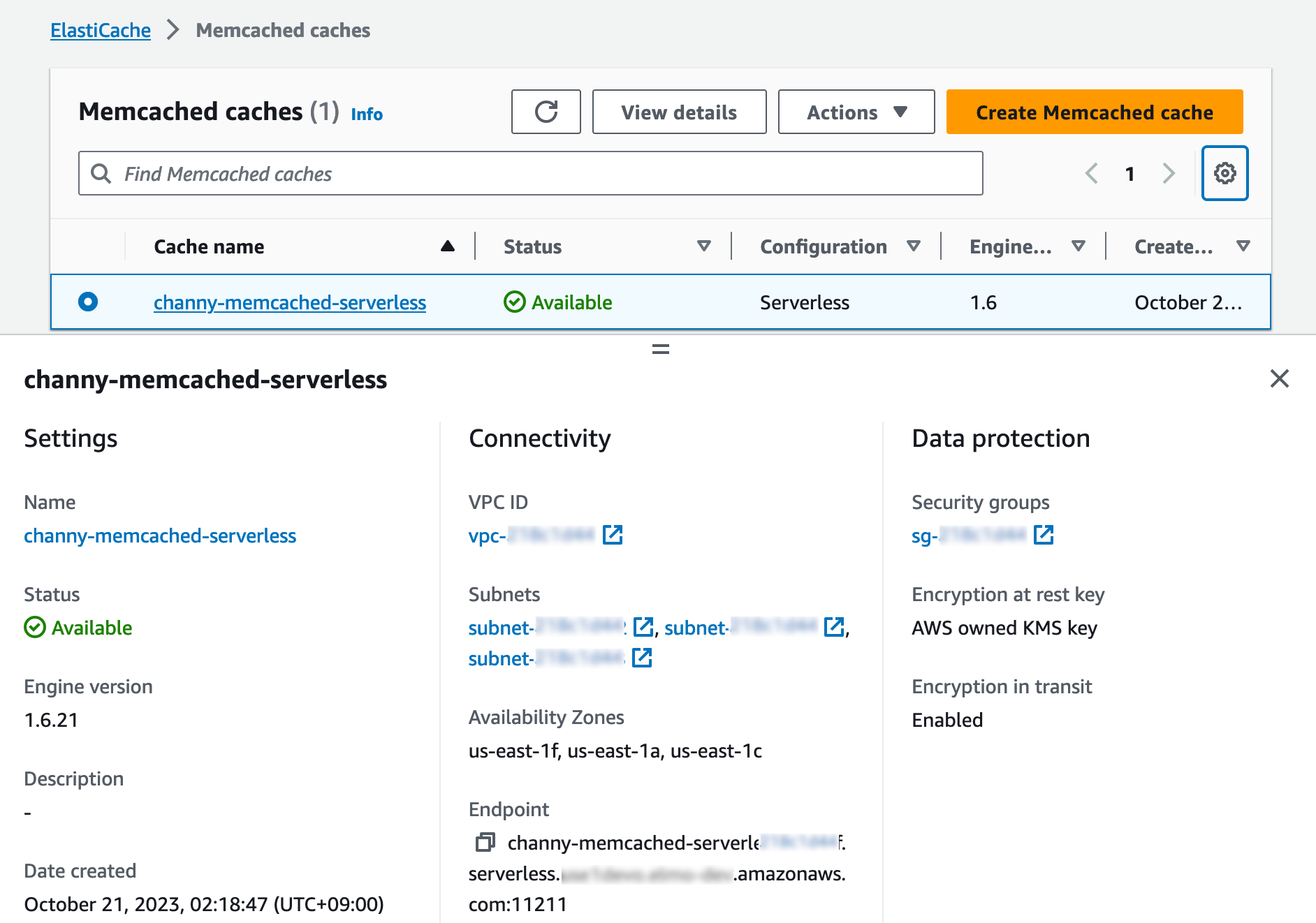

For a Memcached cache, you can create and use a new serverless cache in the same way as Redis.

If you use ElastiCache Serverless for Memcached, there are significant benefits of high availability and instant scaling because they are not natively available in the Memcached engine. You no longer have to write custom business logic, manage multiple caches, or use a third-party proxy layer to replicate data to get high availability with Memcached. Now you can get up to 99.99 percent availability SLA and data replication across multiple Availability Zones.

To connect to the Memcached endpoint, run the openssl client and Memcached commands as shown in the following example output:

$ /usr/bin/openssl s_client -connect channy-memcached-serverless.cache.amazonaws.com:11211 -crlf

set a 0 0 5

hello

STORED

get a

VALUE a 0 5

hello

ENDFor more information, see Getting started with Amazon ElastiCache Serverless for Memcached in the AWS documentation.

Scaling and performance

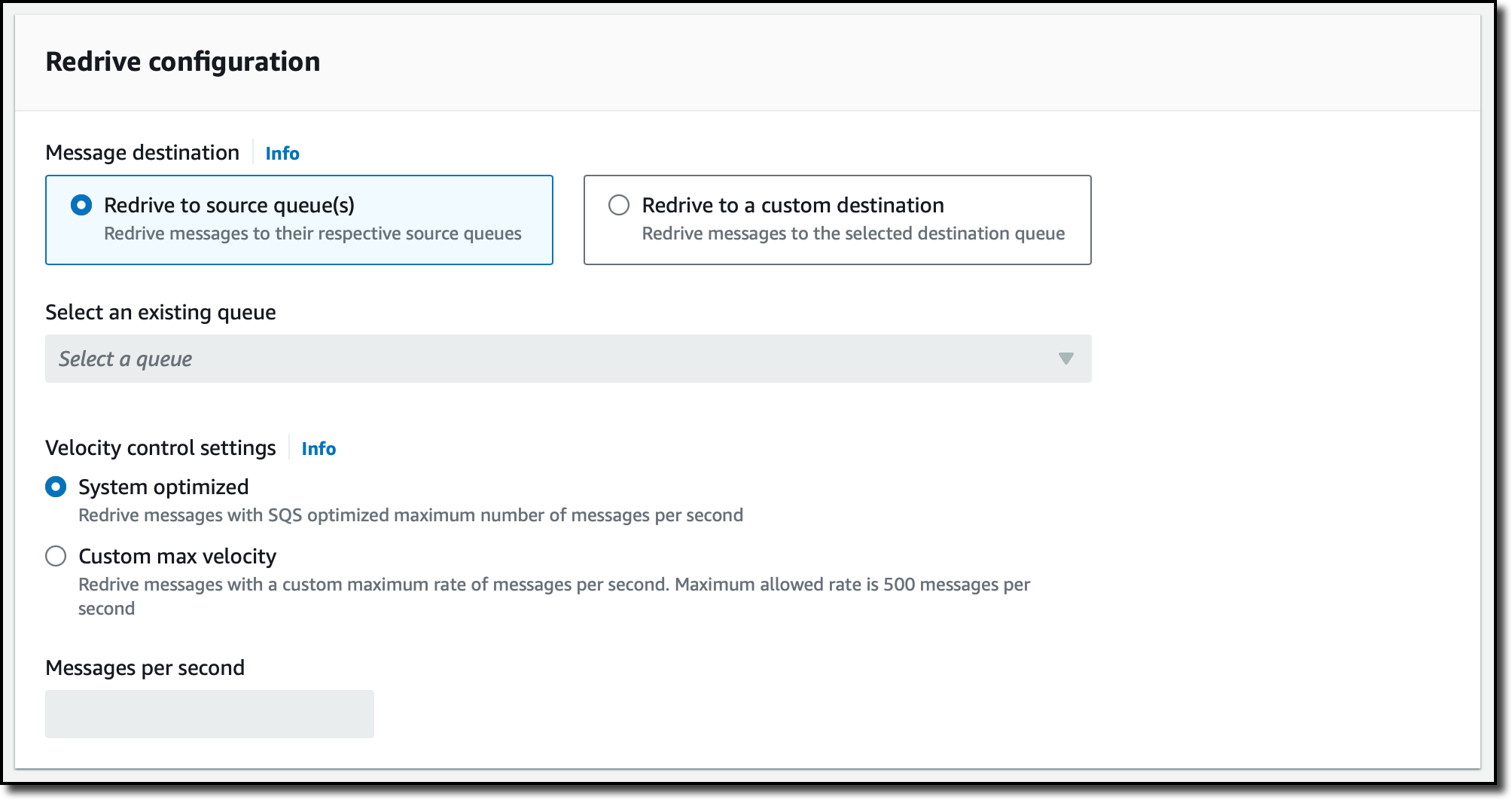

ElastiCache Serverless scales without downtime or performance degradation to the application by allowing the cache to scale up and initiating a scale-out in parallel to meet capacity needs just in time.

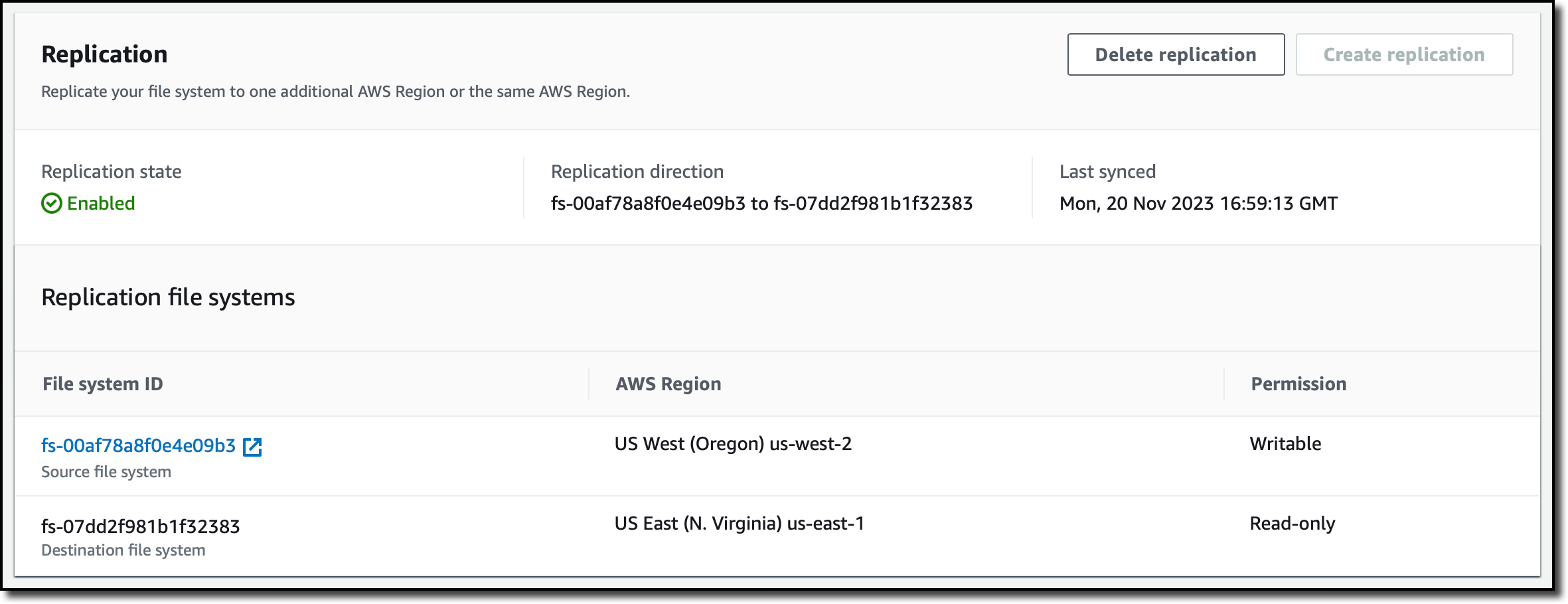

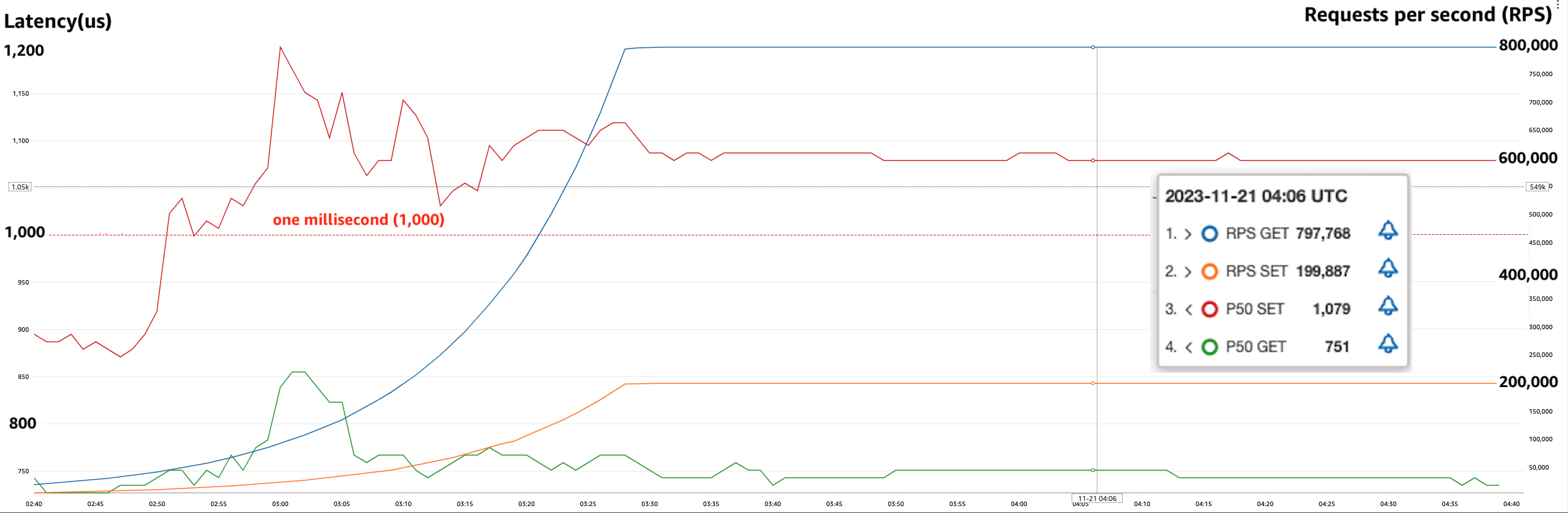

To show ElastiCache Serverless’ performance we conducted a simple scaling test. We started with a typical Redis workload with an 80/20 ratio between reads and writes with a key size of 512 bytes. Our Redis client was configured to Read From Replica (RFR) using the READONLY Redis command, for optimal read performance. Our goal is to show how fast workloads can scale on ElastiCache Serverless without any impact on latency.

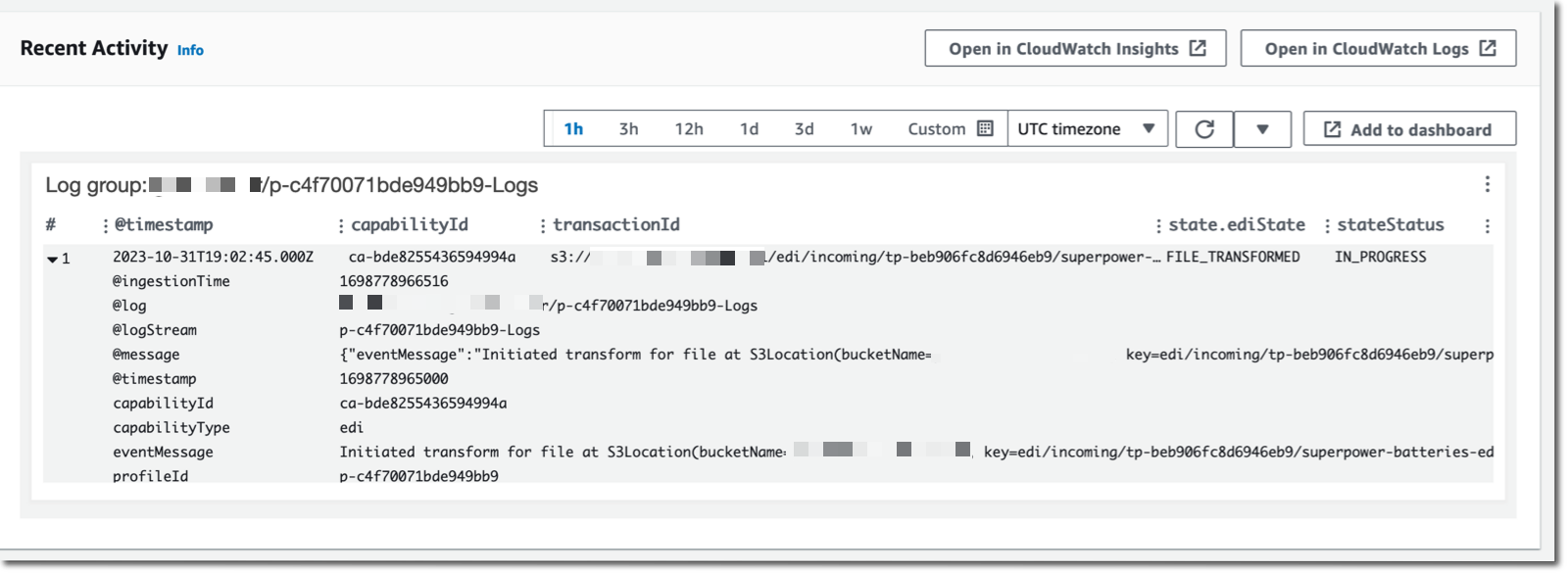

As you can see in the graph above, we were able to double the requests per second (RPS) every 10 minutes up until the test’s target request rate of 1M RPS. During this test, we observed that p50 GET latency remained around 751 microseconds and at all times below 860 microseconds. Similarly, we observed p50 SET latency remained around 1,050 microseconds, not crossing the 1,200 microseconds even during the rapid increase in throughput.

Things to know

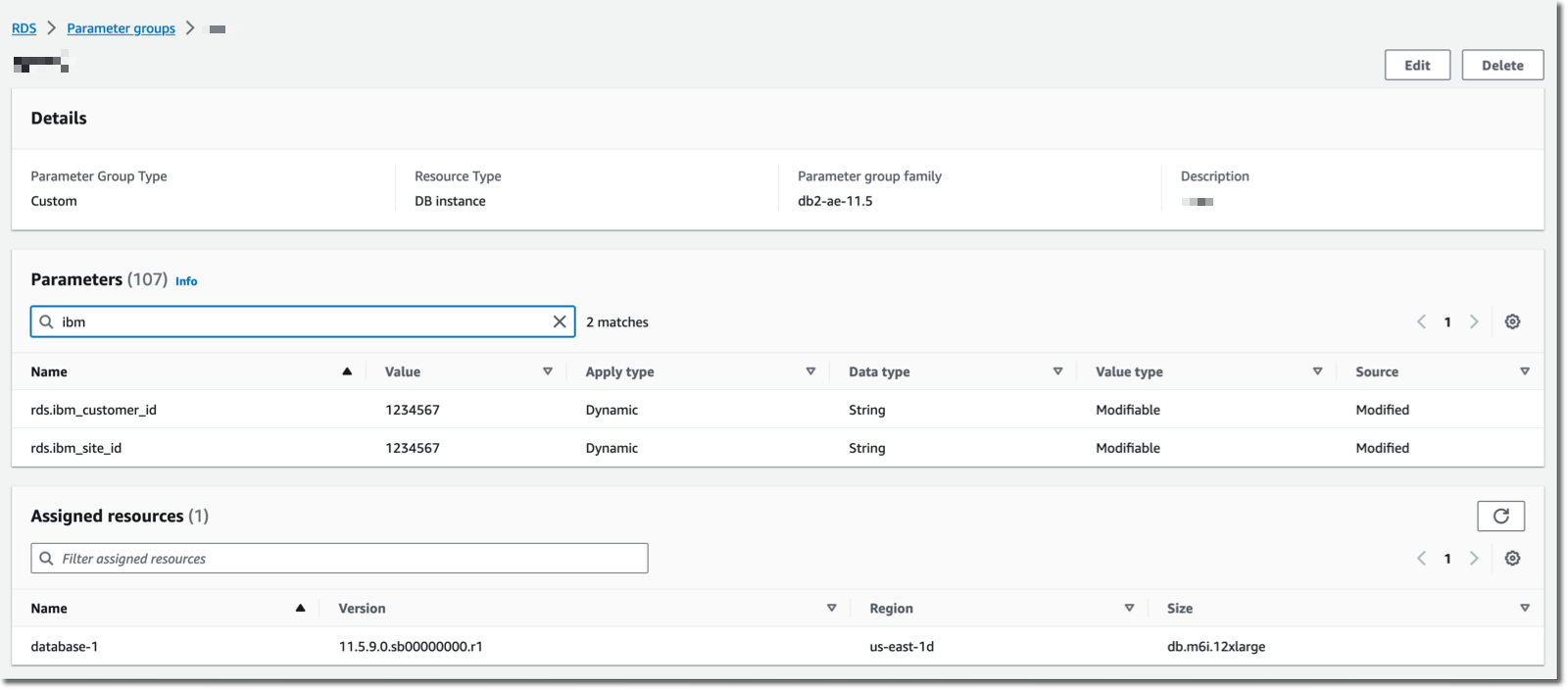

- Upgrading engine version – ElastiCache Serverless transparently applies new features, bug fixes, and security updates, including new minor and patch engine versions on your cache. When a new major version is available, ElastiCache Serverless will send you a notification in the console and an event in Amazon EventBridge. ElastiCache Serverless major version upgrades are designed for no disruption to your application.

- Performance and monitoring – ElastiCache Serverless publishes a suite of metrics to Amazon CloudWatch, including memory usage (

BytesUsedForCache), CPU usage (ElastiCacheProcessingUnits), and cache metrics, includingCacheMissRate,CacheHitRate,CacheHits,CacheMisses, andThrottledRequests. ElastiCache Serverless also publishes Amazon EventBridge events for significant events, including cache creation, deletion, and limit updates. For a full list of available metrics and events, see the documentation. - Security and compliance – ElastiCache Serverless caches are accessible from within a VPC. You can access the data plane using AWS Identity and Access Management (IAM). By default, only the AWS account creating the ElastiCache Serverless cache can access it. ElastiCache Serverless encrypts all data at rest and in-transit by transport layer security (TLS) encrypting each connection to ElastiCache Serverless. You can optionally choose to limit access to the cache within your VPCs, subnets, IAM access, and AWS Key Management Service (AWS KMS) key for encryption. ElastiCache Serverless is compliant with PCI-DSS, SOC, and ISO and is HIPAA eligible.

Now available

Amazon ElastiCache Serverless is now available in all commercial AWS Regions, including China. With ElastiCache Serverless, there are no upfront costs, and you pay for only the resources you use. You pay for cached data in GB-hours, ECPUs consumed, and Snapshot storage in GB-months.

To learn more, see the ElastiCache Serverless page and the pricing page. Give it a try, and please send feedback to AWS re:Post for Amazon ElastiCache or through your usual AWS support contacts.

— Channy