Customers in industries such as architecture, engineering, & construction (AEC) and media & entertainment (M&E) generate the final frames for film, TV, games, industrial design visualizations, and other digital media with a process called rendering, which takes 2D/3D digital content data and computes an output, such as an image or video file. Rendering also requires significant compute power, especially to generate 3D graphics and visual effects (VFX) with resolutions as high as 16K for films and TV. This constrains the number of rendering projects that customers can take on at once.

To address this growing demand for rendering high-resolution content, customers often build “render farms,” which combine the power of hundreds or thousands of computing nodes to process rendering jobs. Traditional render farms can take weeks or even months to build and deploy, and they require significant planning and upfront commitments to procure hardware.

As a result, customers are increasingly moving to scalable, cloud-based render farms for efficient production instead of dedicated on-premises render farms, which can carry extremely high fixed costs. However, rendering in the cloud still requires customers to manage their own infrastructure, build bespoke tooling to manage costs on a project-by-project basis, and monitor software licensing costs with their preferred partners themselves.

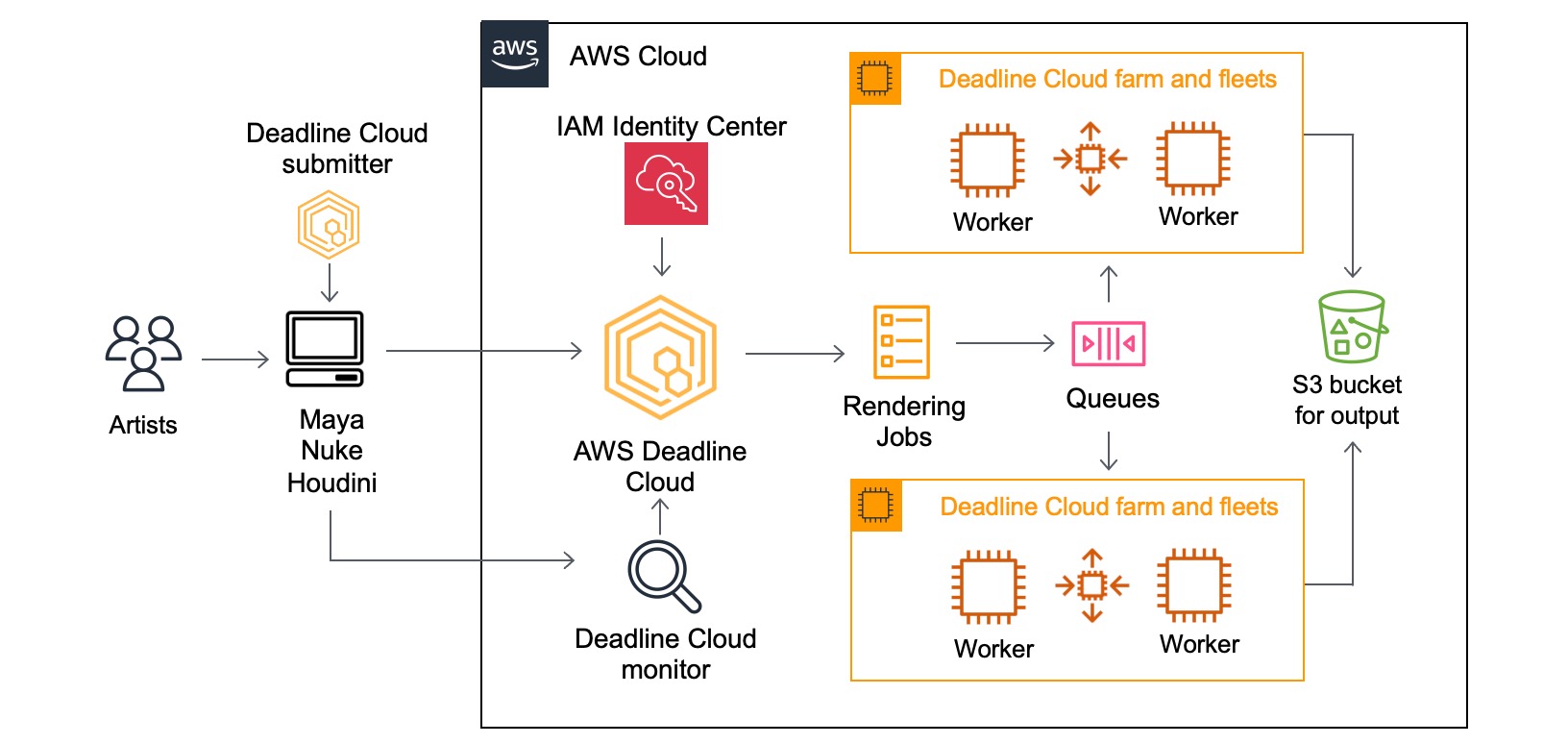

Today, we are announcing the general availability of AWS Deadline Cloud, a new fully managed service that enables creative teams to set up a render farm in minutes, scale to run more projects in parallel, and pay only for the resources they use. AWS Deadline Cloud provides a web-based portal where you can create and manage render farms, preview in-progress renders, view and analyze render logs, and track costs.

With Deadline Cloud, you can go from zero to render faster with built-in integrations for digital content creation (DCC) tools and built-in customization tools. You can reduce the effort and development time required to tailor your rendering pipeline to the needs of each job. You also have the flexibility to use licenses you already own or licenses provided by the service for third-party DCC software and renderers such as Maya, Nuke, and Houdini.

Concepts of AWS Deadline Cloud

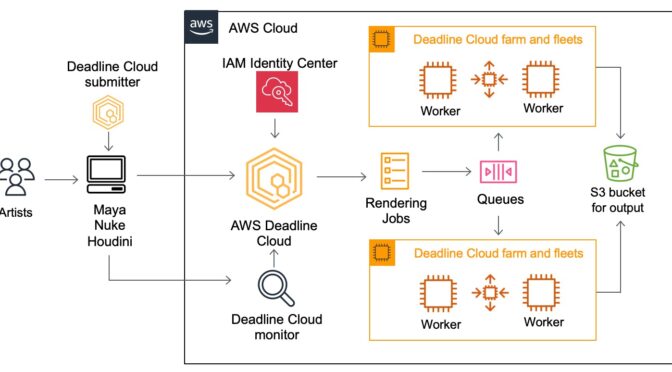

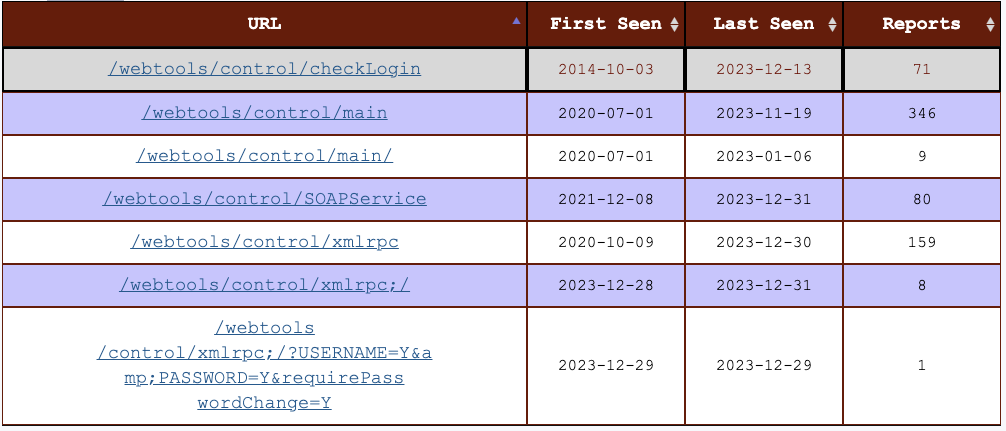

AWS Deadline Cloud allows you to create and manage rendering projects and jobs on Amazon Elastic Compute Cloud (Amazon EC2) instances directly from DCC pipelines and workstations. You can create a farm, which is a collection of queues and fleets. A queue is where your submitted jobs are located and scheduled to be rendered. A fleet is a group of worker nodes that can support multiple queues. A queue can also be processed by multiple fleets.
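
The relationship between farms, queues, and fleets can be sketched as a small data model. This is an illustrative sketch only: the class names, fields, and the `associate` helper are hypothetical and do not represent the Deadline Cloud API. It shows that a fleet can serve several queues and a queue can be served by several fleets.

```python
from dataclasses import dataclass, field


@dataclass
class Fleet:
    """A group of worker nodes (hypothetical model)."""
    name: str
    max_workers: int


@dataclass
class Queue:
    """Holds submitted jobs until an associated fleet picks them up."""
    name: str
    jobs: list[str] = field(default_factory=list)
    fleets: list[Fleet] = field(default_factory=list)


@dataclass
class Farm:
    """Top-level container for queues and fleets."""
    name: str
    queues: list[Queue] = field(default_factory=list)
    fleets: list[Fleet] = field(default_factory=list)

    def associate(self, queue: Queue, fleet: Fleet) -> None:
        """Let `fleet` process jobs from `queue` (many-to-many)."""
        if fleet not in queue.fleets:
            queue.fleets.append(fleet)

    def queues_served_by(self, fleet: Fleet) -> list[str]:
        """Names of all queues that `fleet` is associated with."""
        return [q.name for q in self.queues if fleet in q.fleets]


spot = Fleet("spot-cpu", max_workers=50)
on_demand = Fleet("on-demand-cpu", max_workers=10)
film = Queue("film-shots")
previz = Queue("previz")
farm = Farm("studio-farm", queues=[film, previz], fleets=[spot, on_demand])

farm.associate(film, spot)        # one queue, two fleets
farm.associate(film, on_demand)
farm.associate(previz, spot)      # one fleet, two queues

print(farm.queues_served_by(spot))  # ['film-shots', 'previz']
```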

Before you can work on a project, you should have access to the required resources, and the associated farm must be integrated with AWS IAM Identity Center to manage workforce authentication and authorization. IT administrators can create and grant access permissions to users and groups at different levels, such as viewers, contributors, managers, or owners.

Here are four key components of Deadline Cloud:

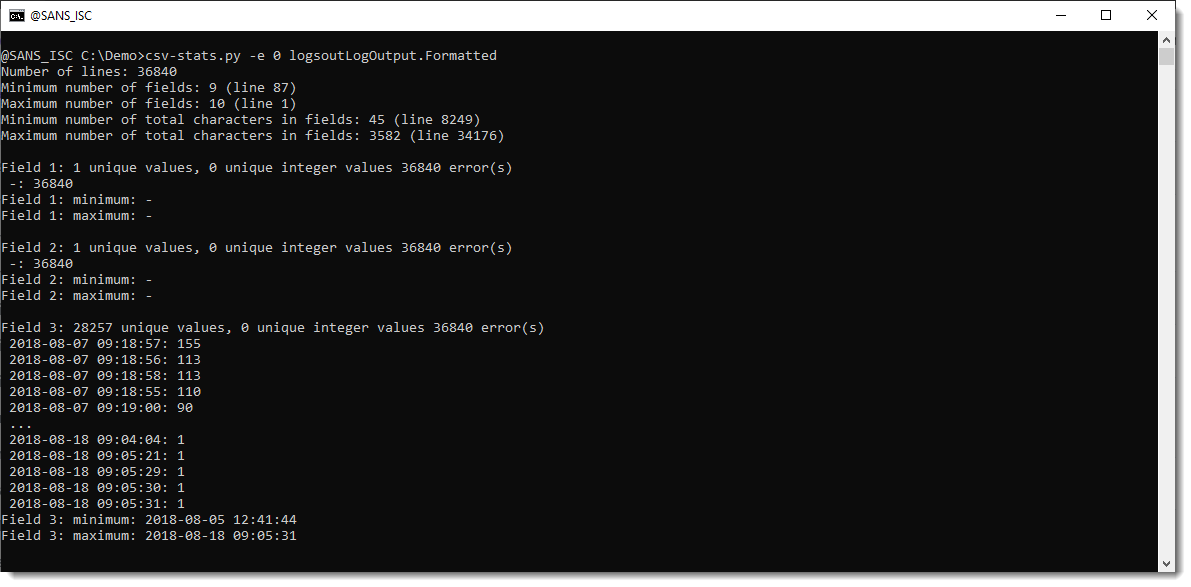

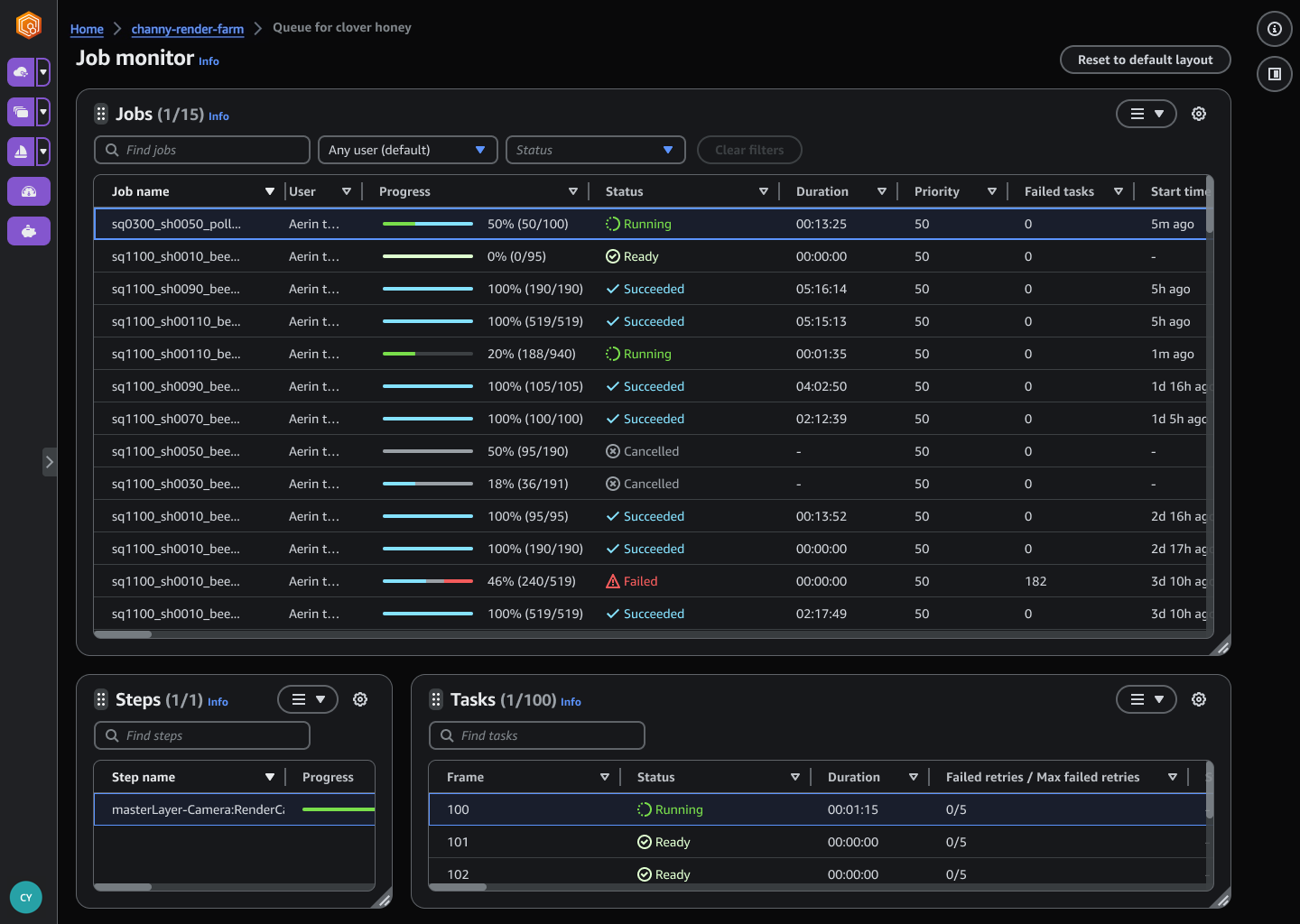

- Deadline Cloud monitor – You can access statuses, logs, and other troubleshooting metrics for jobs, steps, and tasks. The Deadline Cloud monitor provides real-time updates on job progress, and you can browse multiple farm, fleet, and queue listings to view system utilization.

- Deadline Cloud submitter – You can submit a rendering job directly using an AWS SDK or the AWS Command Line Interface (AWS CLI). You can also submit from DCC software using a Deadline Cloud submitter, which is a DCC-integrated plugin that supports Open Job Description (OpenJD), an open source template specification. With it, artists can submit rendering jobs from a third-party DCC interface they are already familiar with, such as Maya or Nuke, to Deadline Cloud, where project resources are managed and jobs are monitored in one location.

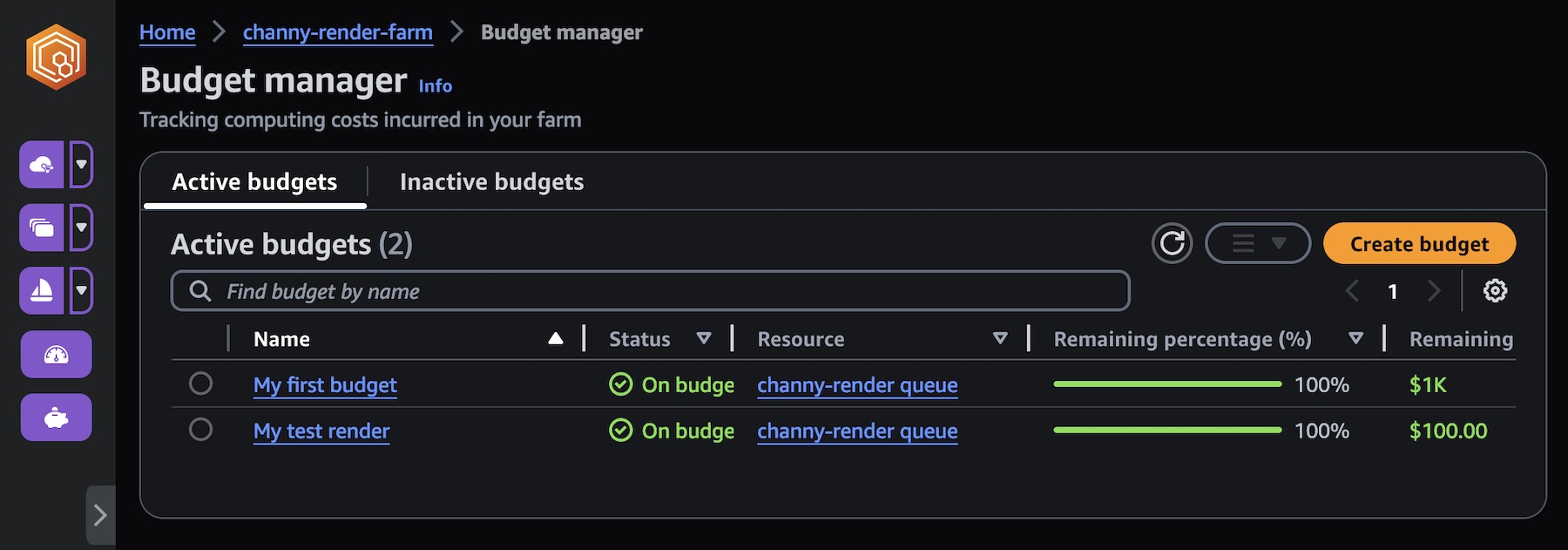

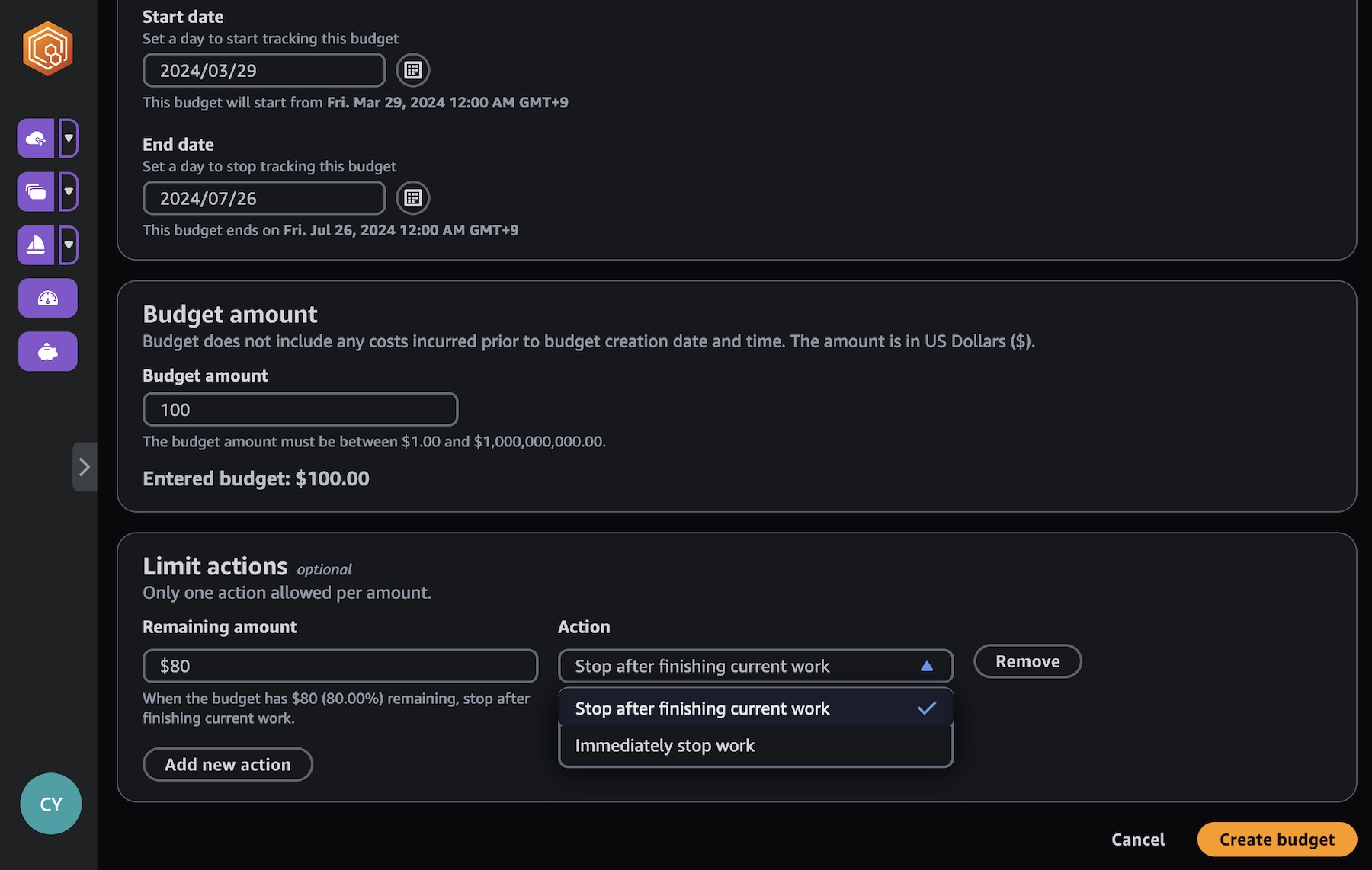

- Deadline Cloud budget manager – You can create and edit budgets to help manage project costs and view how many AWS resources are used and the estimated costs for those resources.

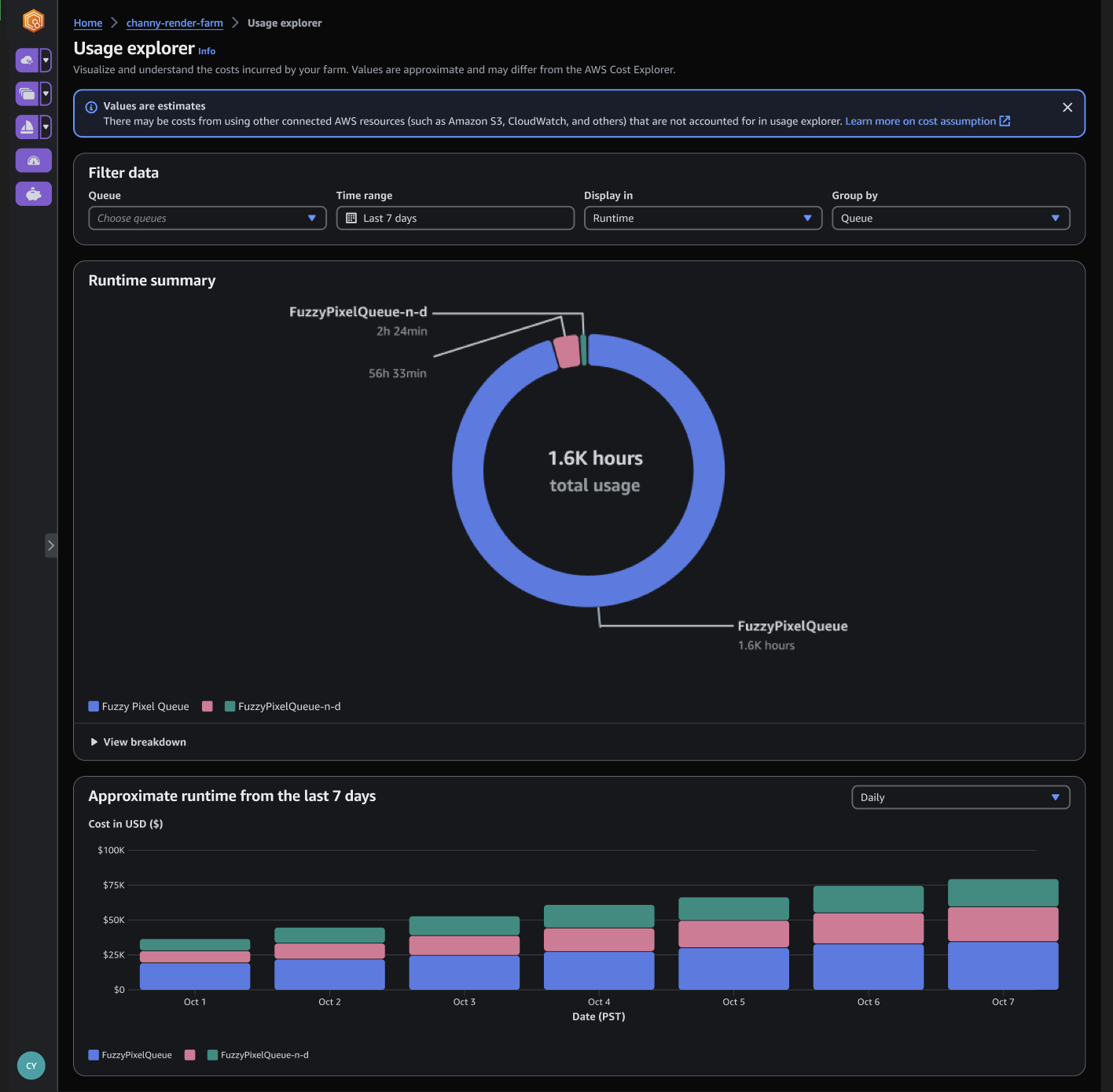

- Deadline Cloud usage explorer – You can use the usage explorer to track approximate compute and licensing costs based on public pricing rates in Amazon EC2 and Usage-Based Licensing (UBL).
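
To give a feel for what an OpenJD job template might contain, here is a minimal sketch that builds one as a Python dictionary and prints it as JSON. The field names follow my understanding of the OpenJD `jobtemplate-2023-09` schema and should be checked against the published specification. The `build_render_template` helper, the job name, and the `echo` placeholder command are assumptions for illustration; a real submitter generates a richer template that invokes the renderer.

```python
import json


def build_render_template(name: str, first_frame: int, last_frame: int) -> dict:
    """Build a minimal OpenJD-style job template with one task per frame."""
    return {
        "specificationVersion": "jobtemplate-2023-09",
        "name": name,
        "steps": [
            {
                "name": "Render",
                # The parameter space defines the tasks: one per Frame value.
                "parameterSpace": {
                    "taskParameterDefinitions": [
                        {
                            "name": "Frame",
                            "type": "INT",
                            "range": f"{first_frame}-{last_frame}",
                        }
                    ]
                },
                "script": {
                    "actions": {
                        "onRun": {
                            # Placeholder command; a real job would call a renderer.
                            "command": "echo",
                            "args": ["Rendering frame {{Task.Param.Frame}}"],
                        }
                    }
                },
            }
        ],
    }


template = build_render_template("wrench-turntable", 1, 24)
print(json.dumps(template, indent=2))
```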

Get started with AWS Deadline Cloud

To get started with AWS Deadline Cloud, define and create a farm with Deadline Cloud monitor, download the Deadline Cloud submitter, and install plugins for your favorite DCC applications with just a few clicks. You can define your rendering jobs in your DCC application and submit them to your created farm within the plugin’s user interfaces.

The DCC plugins detect the necessary input scene data and build a job bundle, upload it to the Amazon Simple Storage Service (Amazon S3) bucket in your account, transfer it to Deadline Cloud to render the job, and deliver the completed frames to the S3 bucket for your customers to access.

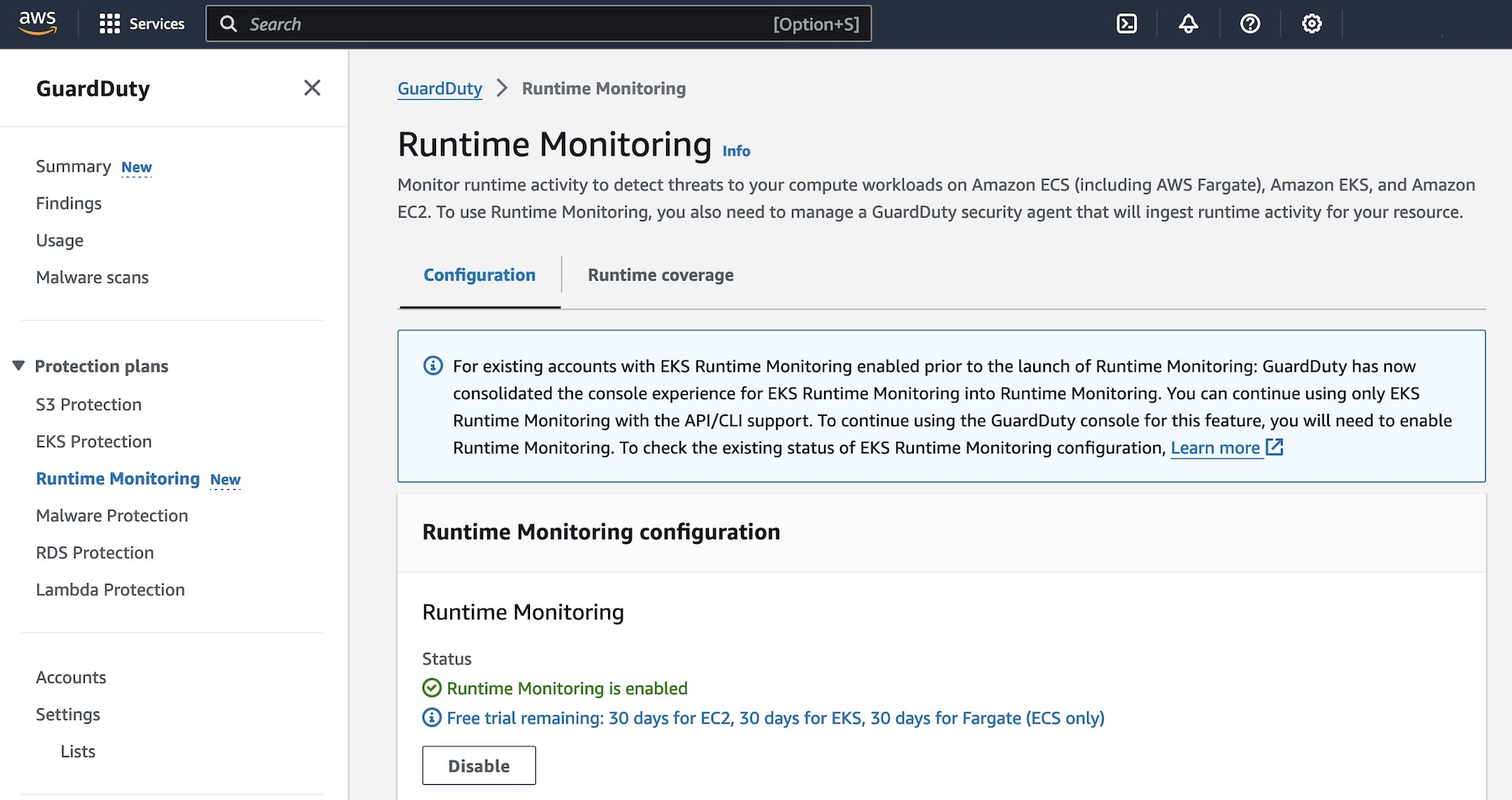

1. Define a farm with Deadline Cloud monitor

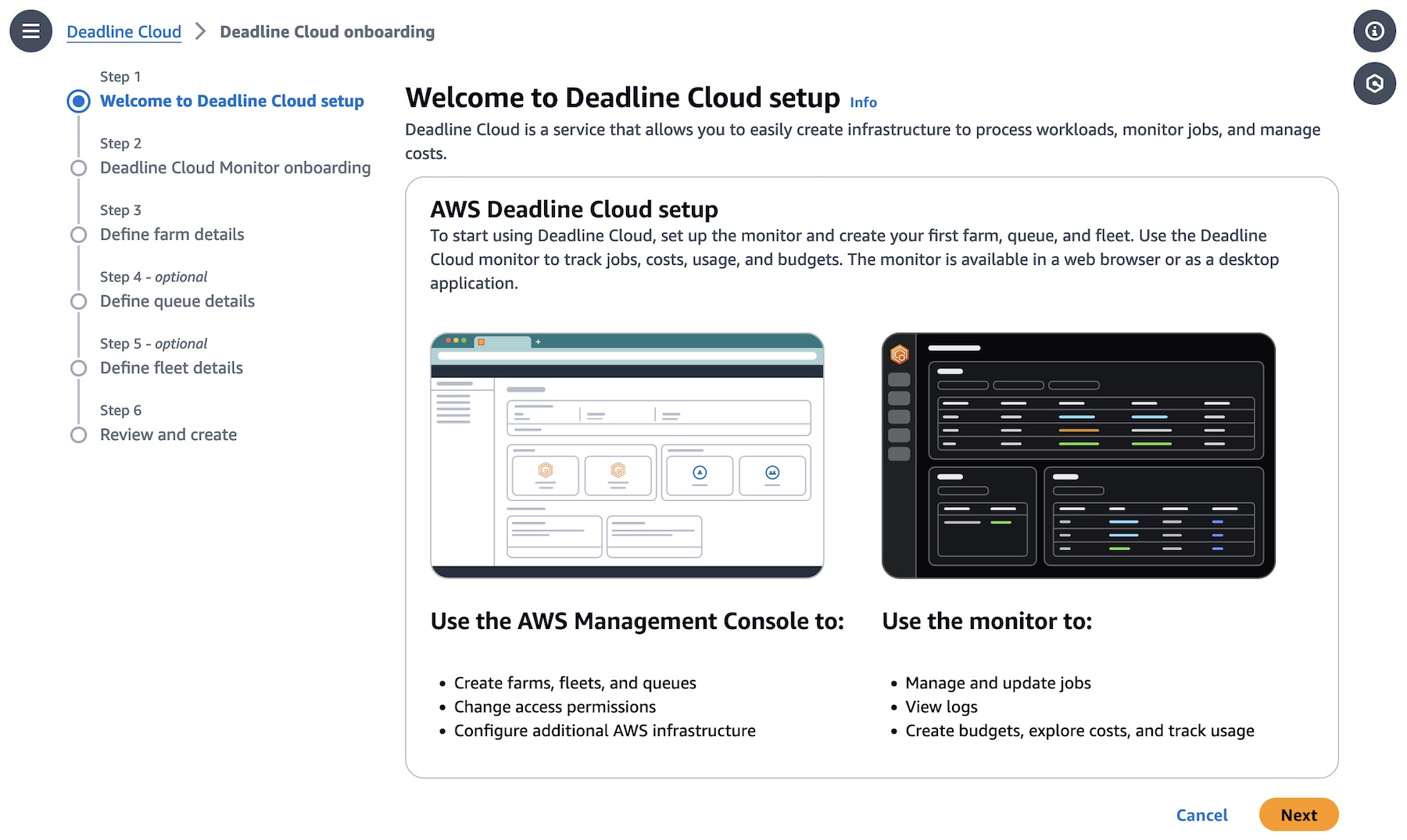

Let’s create your Deadline Cloud monitor infrastructure and define your farm first. In the Deadline Cloud console, choose Set up Deadline Cloud to define a farm with a guided experience, including queues and fleets, adding groups and users, choosing a service role, and adding tags to your resources.

To accept the default settings for all of your Deadline Cloud resources, choose Skip to Review in Step 3 after the monitor setup. Otherwise, choose Next to customize your Deadline Cloud resources.

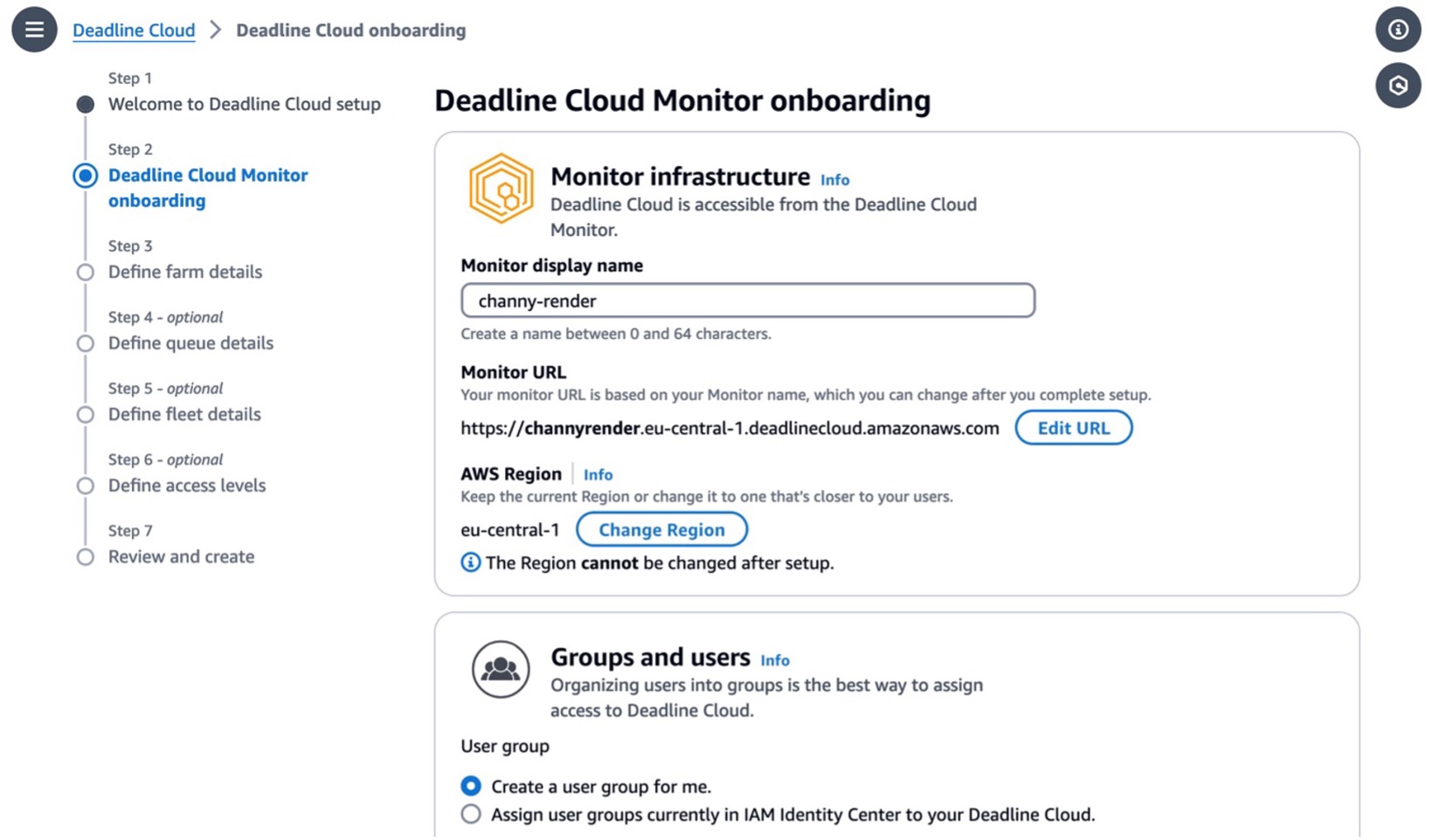

Set up your monitor’s infrastructure and enter your Monitor display name. This name is used to form the monitor URL, the address of the web portal where you manage your farms, queues, fleets, and usage. You can’t change the monitor URL after you finish setting up. The AWS Region is the physical location of your rendering farm, so you should choose the Region closest to your studio to reduce latency and improve data transfer speeds.

To control access to the monitor, you can create new users and groups, manage users (such as by assigning them groups, permissions, and applications), or delete users from your monitor. Users, groups, and permissions can also be managed in IAM Identity Center. If you haven’t set up IAM Identity Center in your Region, enable it first. To learn more, visit Managing users in Deadline Cloud in the AWS documentation.

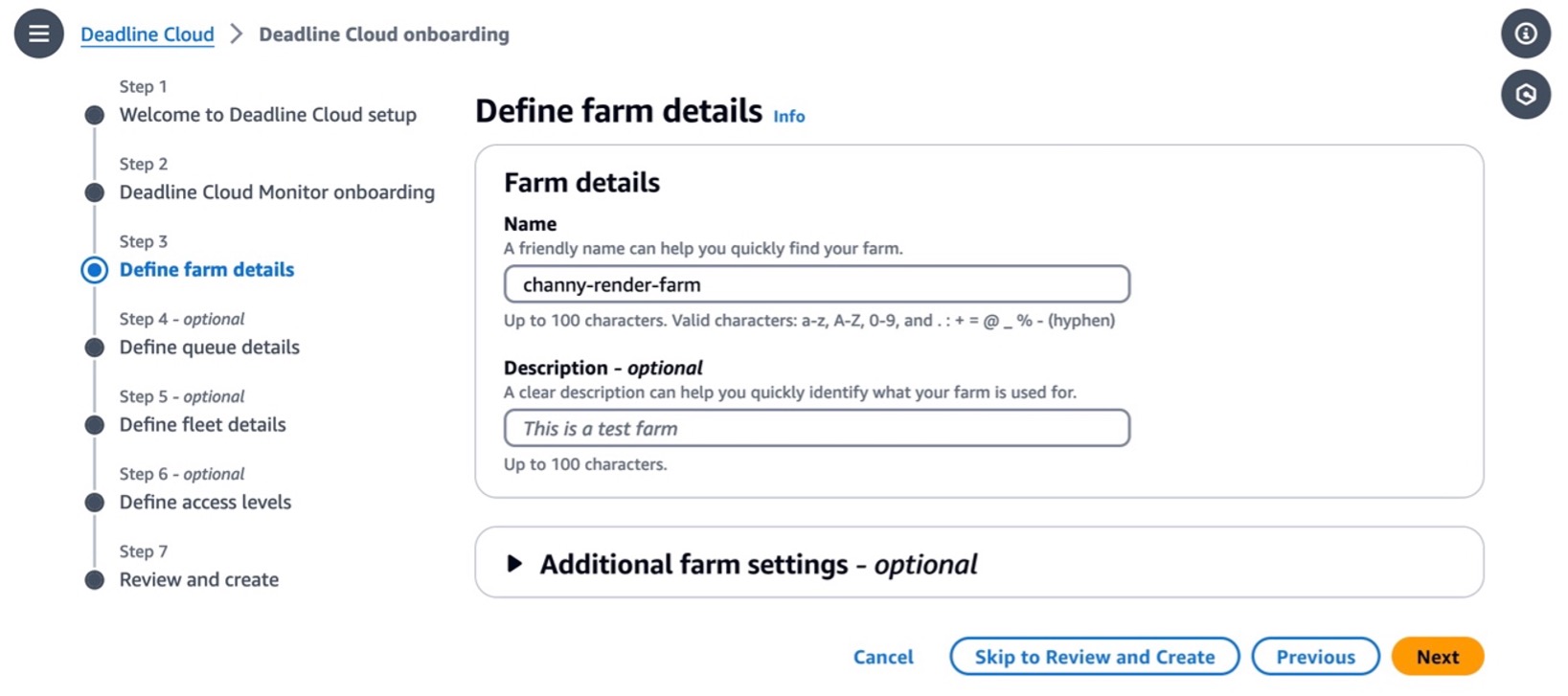

In Step 2, you can define farm details such as the name and description of your farm. In Additional farm settings, you can set an AWS Key Management Service (AWS KMS) key to encrypt your data and add tags to your AWS resources so that you can filter them or track your AWS costs. Your data is encrypted by default with a key that AWS owns and manages for you. To choose a different key, customize your encryption settings.

You can choose Skip to Review and Create to finish the quick setup process with the default settings.

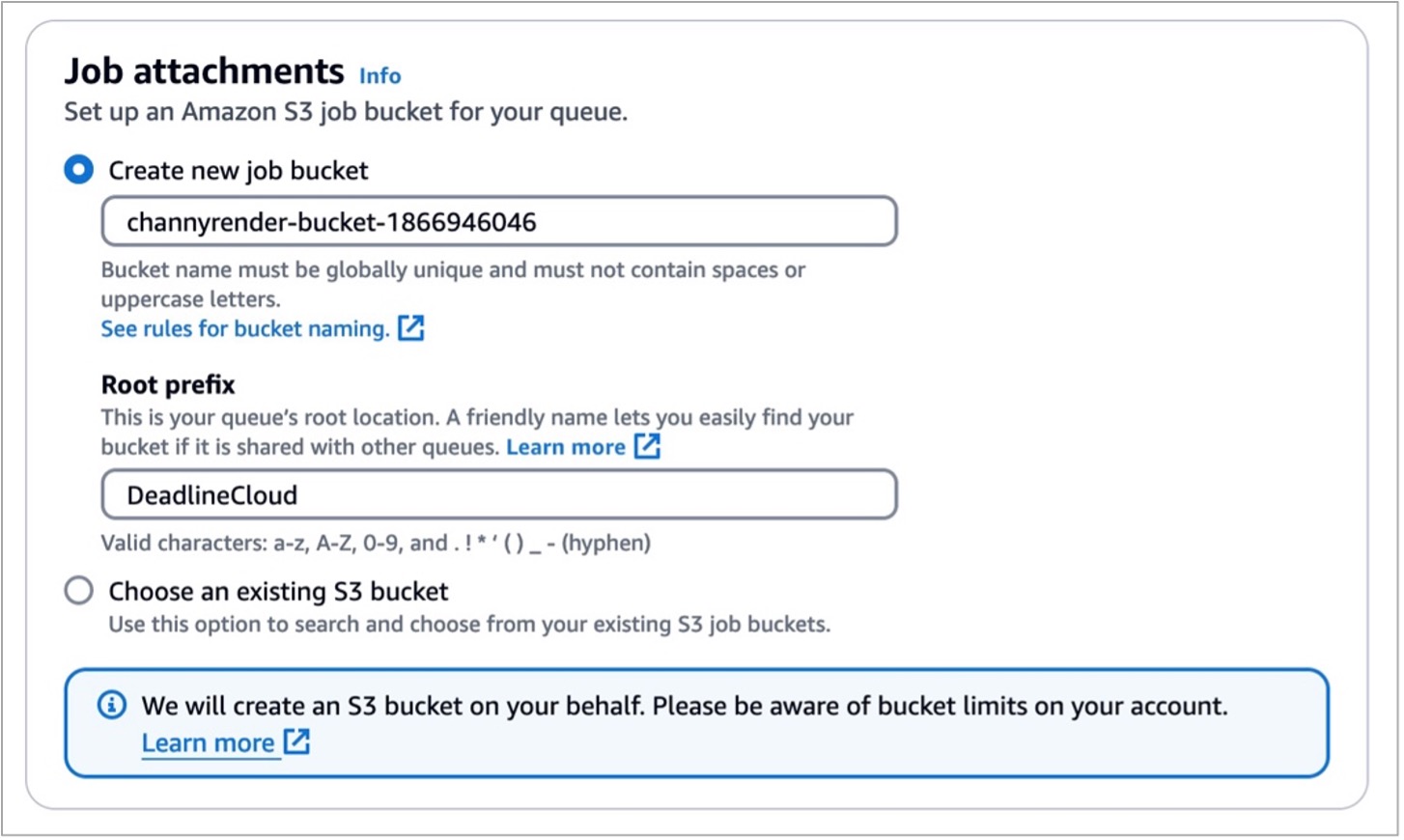

Let’s look at more optional configurations! In the step for defining queue details, you can set up an S3 bucket for your queue. Job assets are uploaded as job attachments during the rendering process. Job attachments are stored in your defined S3 bucket. Additionally, you can set up the default budget action, service access roles, and environment variables for your queue.

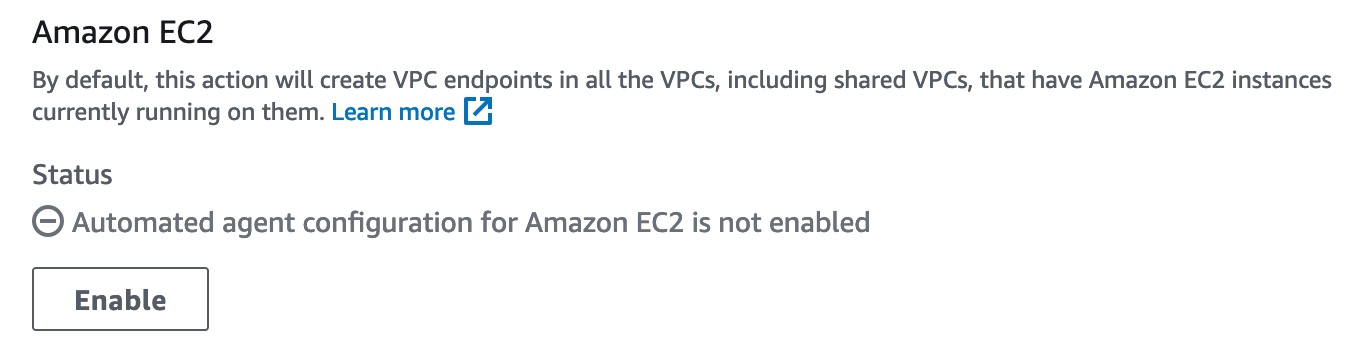

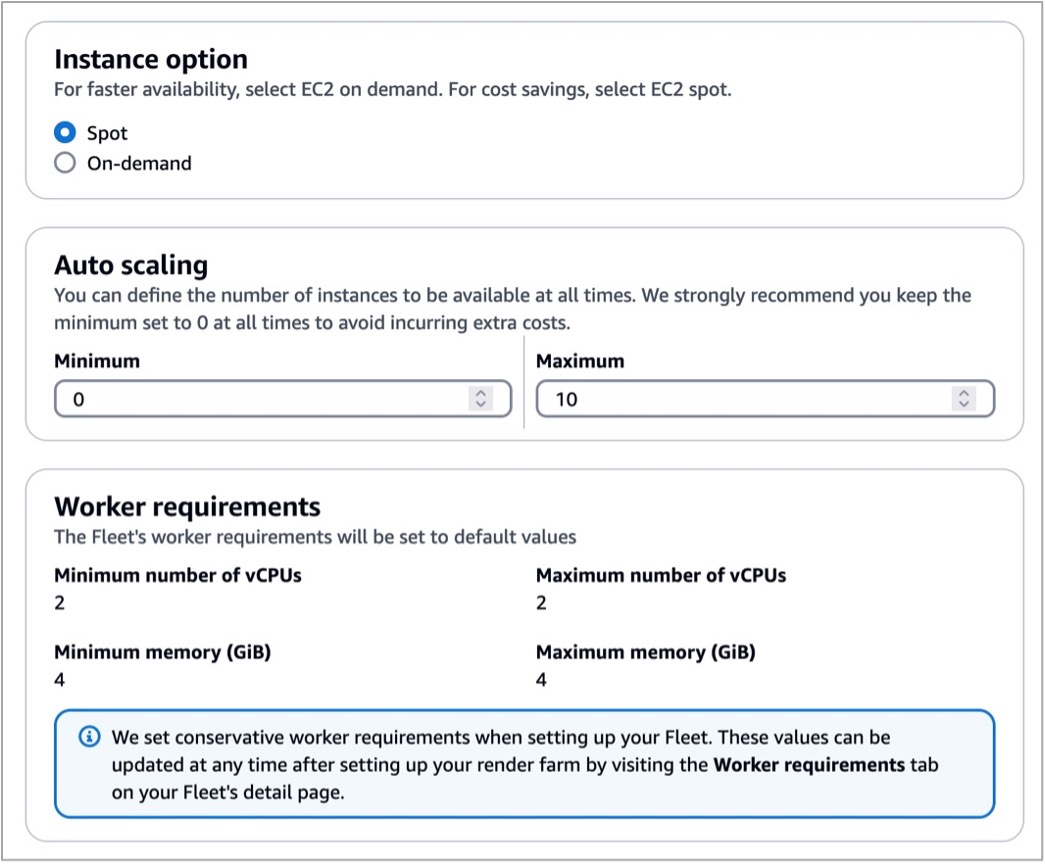

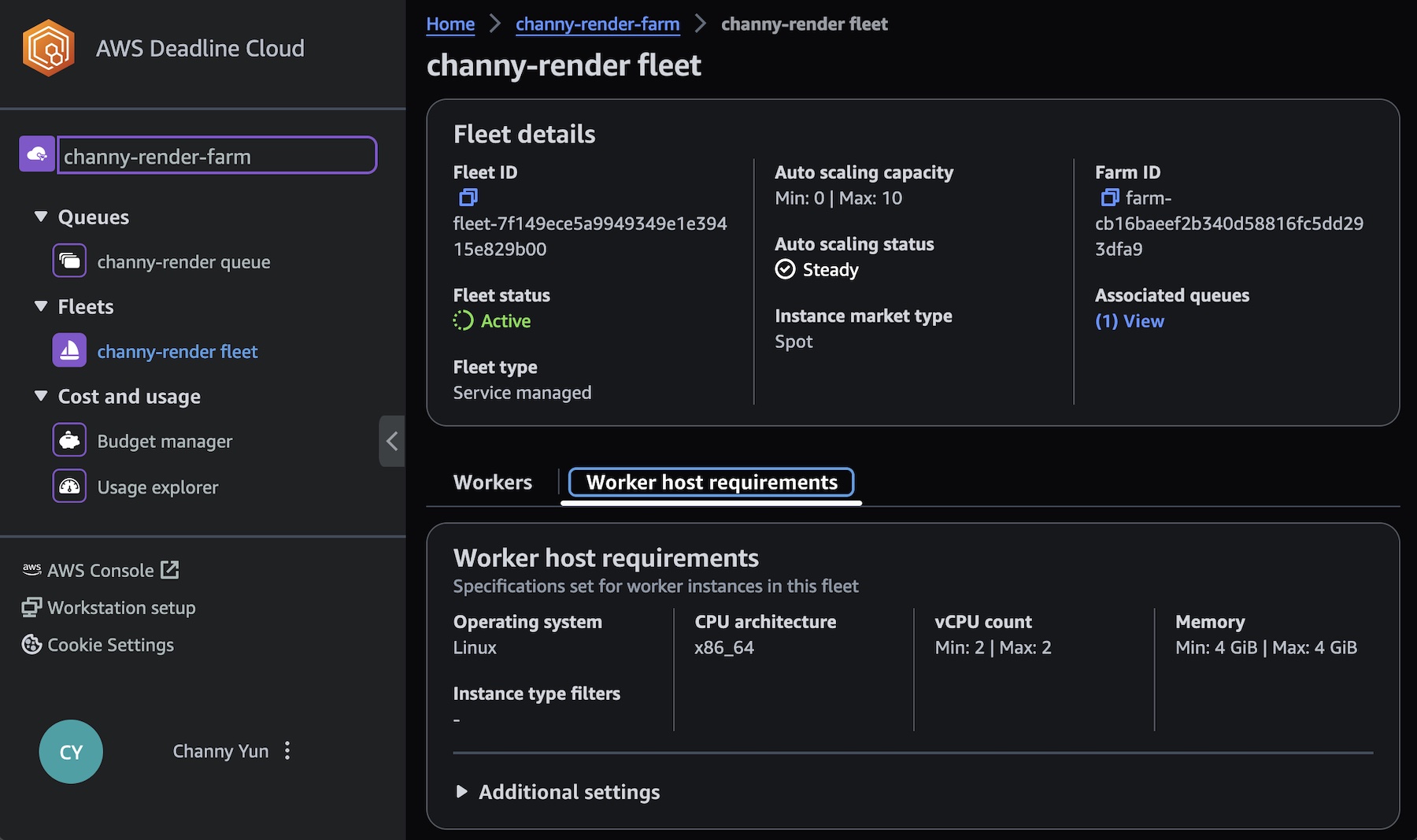

In the step for defining fleet details, set the fleet name, description, Instance option (either Spot or On-Demand Instance), and Auto scaling configuration to define the number of instances and the fleet’s worker requirements. We set conservative worker requirements by default. These values can be updated at any time after setting up your render farm. To learn more, visit Manage Deadline Cloud fleets in the AWS documentation.

Worker instances define EC2 instance types with vCPUs and memory size, for example, c5.large, c5a.large, and c6i.large. You can filter up to 100 EC2 instance types by either allowing or excluding types of worker instances.
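
The selection logic described above can be sketched as follows. This is an illustration under assumptions, not the service's implementation: the `InstanceType` class, the tiny `CATALOG`, and the `select_instance_types` function are hypothetical, and the sketch assumes you use either an allow list or an exclude list, not both. The vCPU and memory figures are the published sizes for these instance types.

```python
from dataclasses import dataclass

MAX_INSTANCE_TYPES = 100  # the filter limit mentioned in the text


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int


# Small illustrative catalog; not a complete list of EC2 instance types.
CATALOG = [
    InstanceType("c5.large", 2, 4),
    InstanceType("c5a.large", 2, 4),
    InstanceType("c6i.large", 2, 4),
    InstanceType("c5.xlarge", 4, 8),
    InstanceType("c6i.2xlarge", 8, 16),
]


def select_instance_types(catalog, min_vcpus, min_memory_gib, allow=None, exclude=None):
    """Return names that meet the worker requirements and the allow/exclude filter."""
    if allow and exclude:
        raise ValueError("use either an allow list or an exclude list, not both")
    selected = []
    for instance in catalog:
        # Worker requirements: minimum vCPUs and memory.
        if instance.vcpus < min_vcpus or instance.memory_gib < min_memory_gib:
            continue
        if allow is not None and instance.name not in allow:
            continue
        if exclude is not None and instance.name in exclude:
            continue
        selected.append(instance.name)
    return selected[:MAX_INSTANCE_TYPES]


print(select_instance_types(CATALOG, 2, 4, exclude={"c5a.large"}))
# ['c5.large', 'c6i.large', 'c5.xlarge', 'c6i.2xlarge']
```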

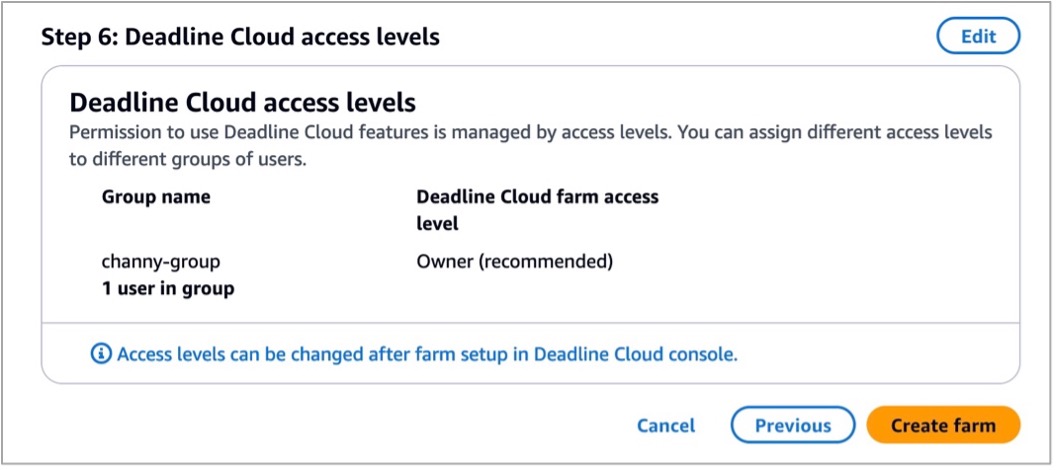

Review all of the information entered to create your farm and choose Create farm.

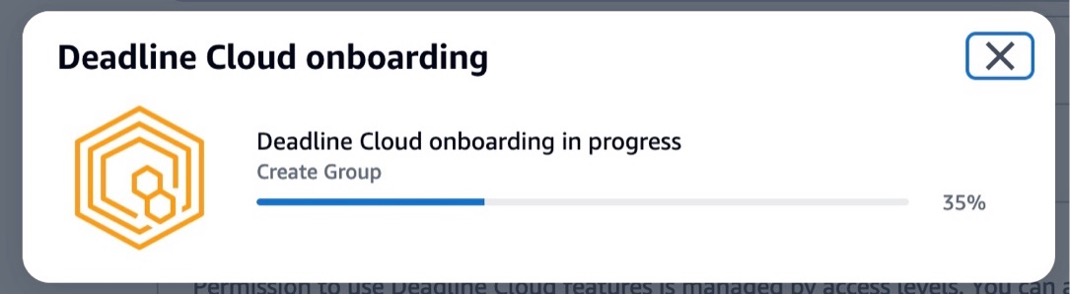

The progress of your Deadline Cloud onboarding is displayed, and a success message displays when your monitor and farm are ready for use. To learn more details about the process, visit Set up a Deadline Cloud monitor in the AWS documentation.

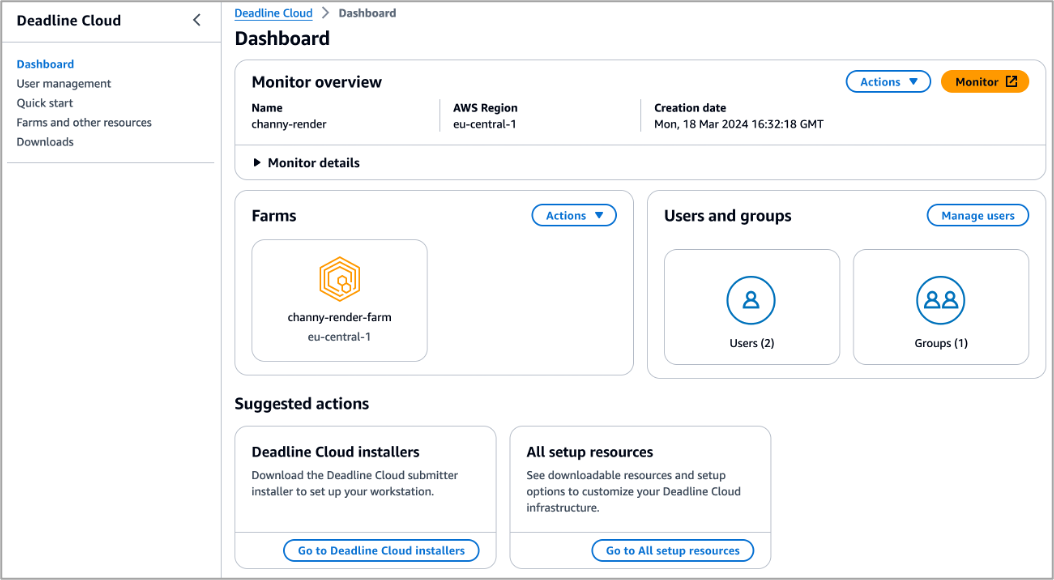

In the Dashboard in the left pane, you can visit the overview of the monitor, farms, users, and groups that you created.

Choose Monitor to open the web portal where you manage your farms, queues, fleets, usage, and budgets. After signing in to your user account, you can explore the Deadline Cloud resources you created. You can also download a Deadline Cloud monitor desktop application with the same user experience from the Downloads page.

To learn more about using the monitor, visit Using the Deadline Cloud monitor in the AWS documentation.

2. Set up a workstation and submit your render job to Deadline Cloud

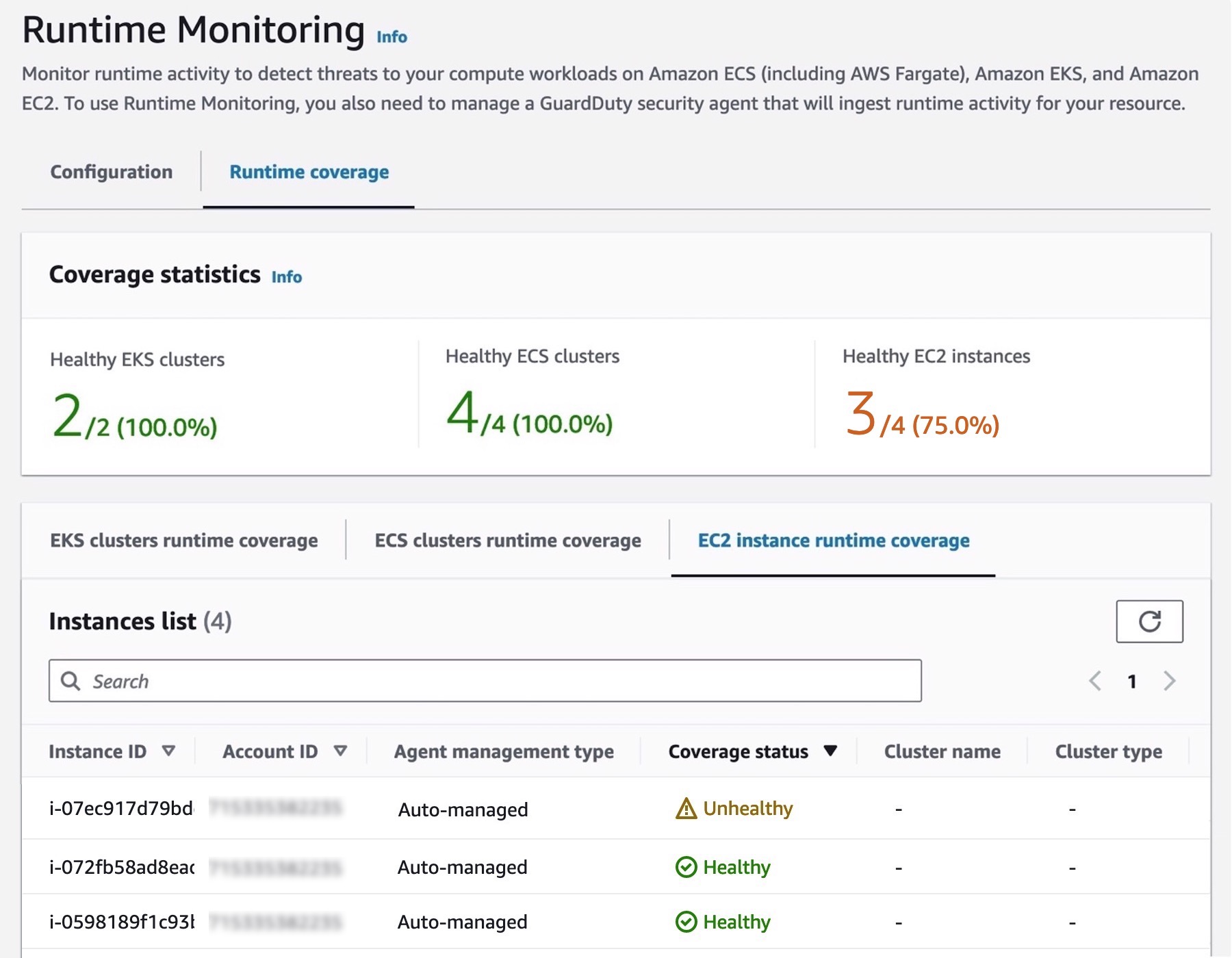

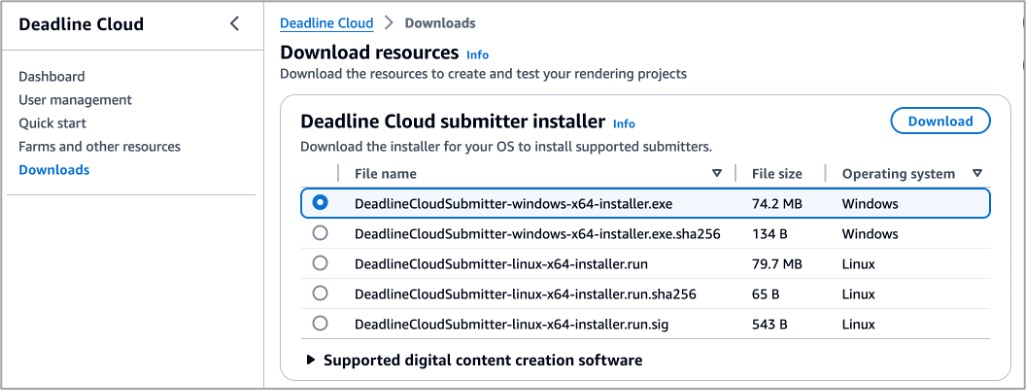

Let’s set up a workstation for artists on their desktops by installing the Deadline Cloud submitter application so they can easily submit render jobs from within Maya, Nuke, and Houdini. Choose Downloads in the left menu pane and download the proper submitter installer for your operating system to test your render farm.

This program installs the latest integrated plugin for Deadline Cloud submitter for Maya, Nuke, and Houdini.

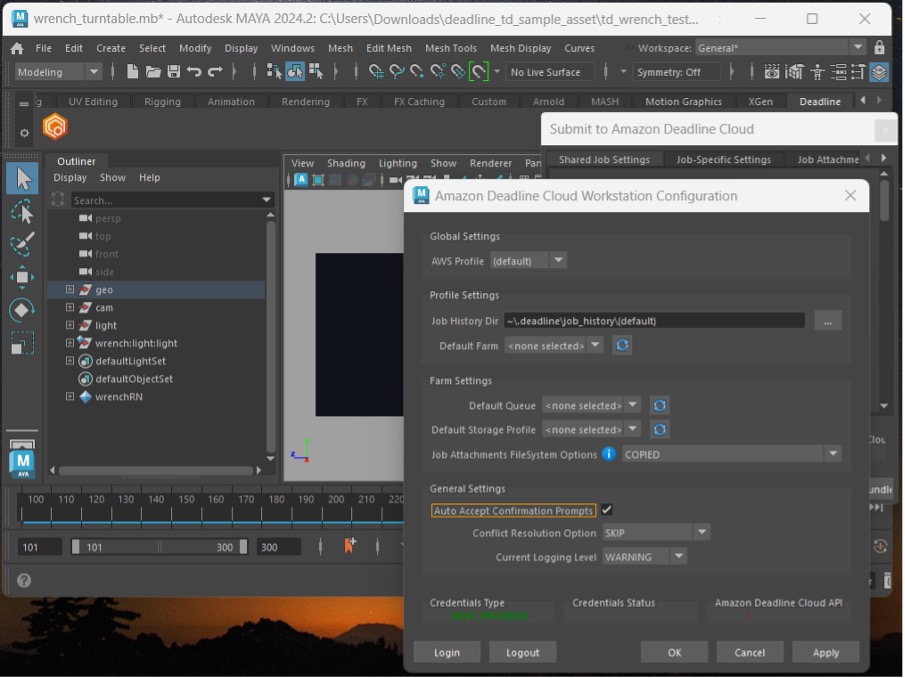

For example, open Maya on your desktop and load your asset. I have an example wrench file that I’m going to test with. Choose Windows in the menu bar and Settings/Preferences in the submenu. In the Plugin Manager, search for DeadlineCloudSubmitter. Select Loaded to load the Deadline Cloud submitter plugin.

If you are not already authenticated in the Deadline Cloud submitter, the Deadline Cloud Status tab will display. Choose Login and sign in with your user credentials in a browser sign-in window.

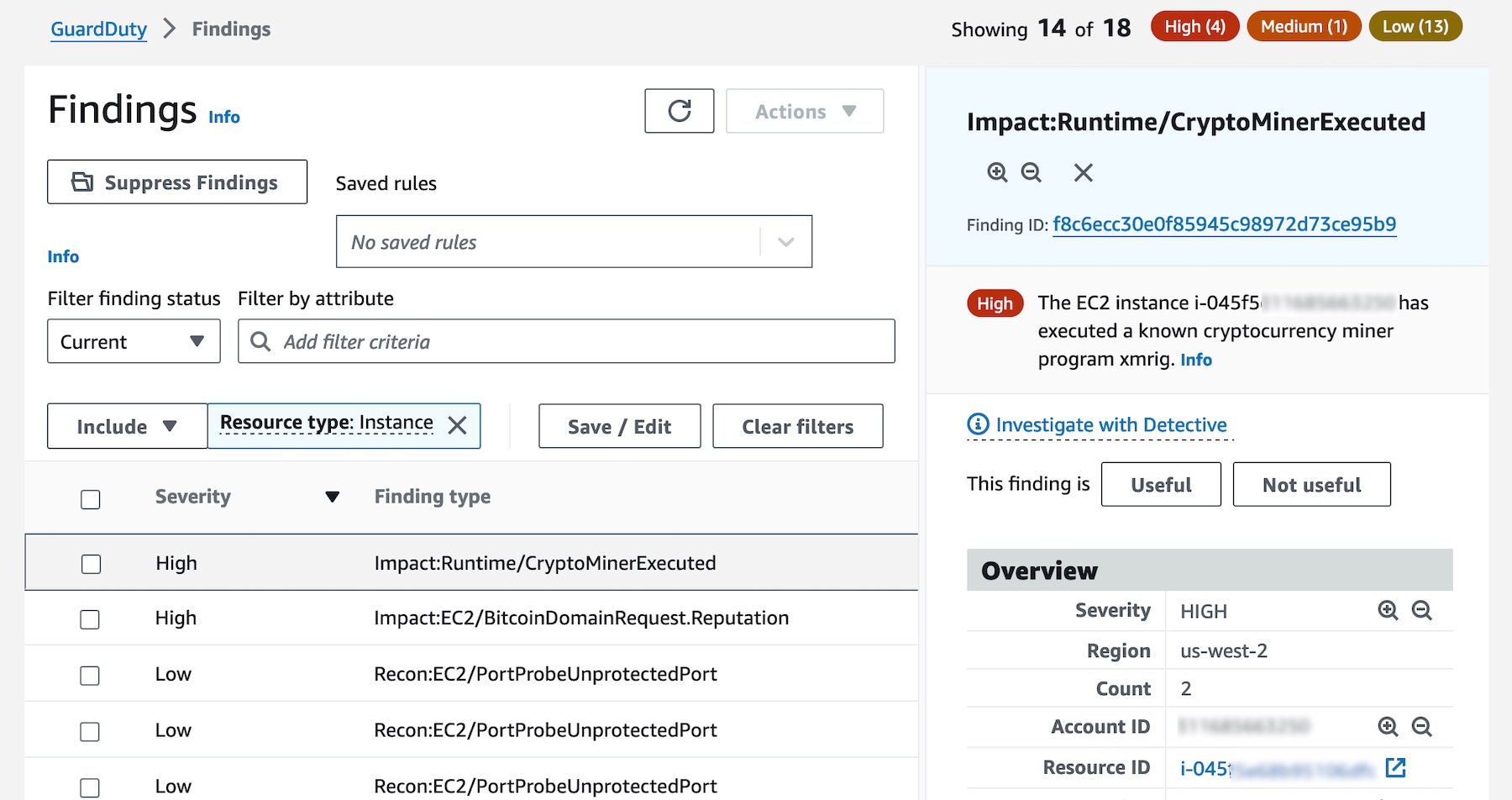

Now, select the Deadline Cloud shelf, then choose the orange Deadline Cloud logo on the ‘Deadline’ shelf to launch the submitter. From the submitter window, choose the farm and queue you want your render submitted to. If desired, in the Scene Settings tab, you can override the frame range, change the Output Path, or both.

If you choose Submit, the wrench turntable Maya file, along with all of the necessary textures and alembic caches, will be uploaded to Deadline Cloud and rendered on the farm. You can monitor rendering jobs in your Deadline Cloud monitor.
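
To illustrate how a frame range override could translate into individual units of render work, here is a small sketch that expands a range expression into a list of frames. The expression syntax (`1-10`, `1-10:2`, `1,3,5-7`) and the `expand_frame_range` helper are assumptions for illustration, as is the idea of one task per frame; the submitter's actual parsing may differ, and this sketch does not handle negative frame numbers.

```python
def expand_frame_range(expr: str) -> list[int]:
    """Expand an expression such as "1-10", "1-10:2", or "1,3,5-7" into frames."""
    frames: list[int] = []
    for part in expr.split(","):
        part = part.strip()
        step = 1
        if ":" in part:
            # Optional ":step" suffix, for example "1-10:2".
            part, step_text = part.split(":", 1)
            step = int(step_text)
            if step < 1:
                raise ValueError("step must be a positive integer")
        if "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
            if end < start:
                raise ValueError(f"range end is before start: {part}")
            frames.extend(range(start, end + 1, step))  # inclusive end
        else:
            frames.append(int(part))
    return frames


print(expand_frame_range("1-10:2"))   # [1, 3, 5, 7, 9]
print(expand_frame_range("1,3,5-7"))  # [1, 3, 5, 6, 7]
```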

When your render is finished, as indicated by the Succeeded status in the job monitor, choose the job, Job Actions, and Download Output. To learn more about scheduling and monitoring jobs, visit Deadline Cloud jobs in the AWS documentation.

View your rendered image with an image viewing application such as DJView. The image will look like this:

To learn more about the developer-side setup process using the command line, visit Setting up a developer workstation for Deadline Cloud in the AWS documentation.

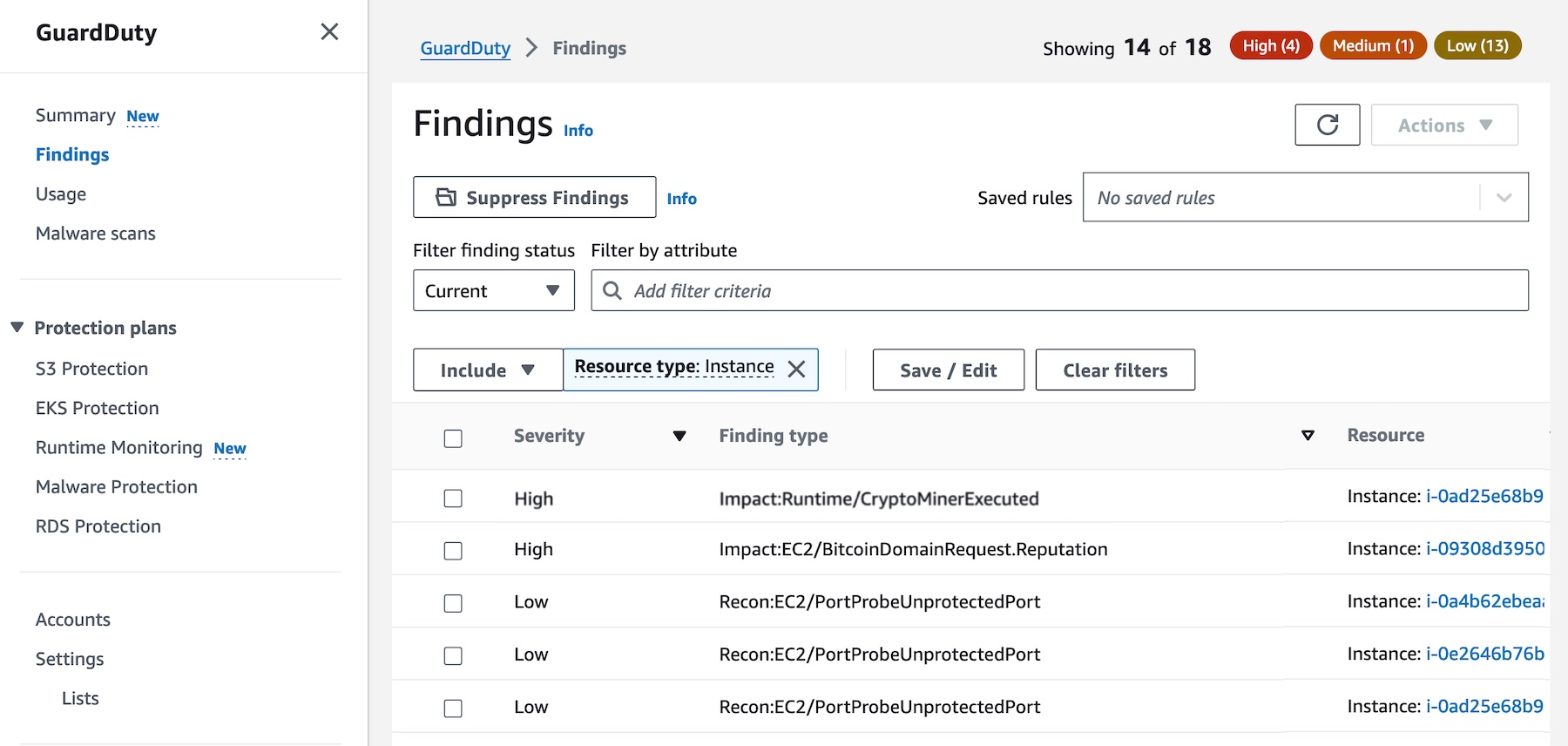

3. Managing budgets and usage for Deadline Cloud

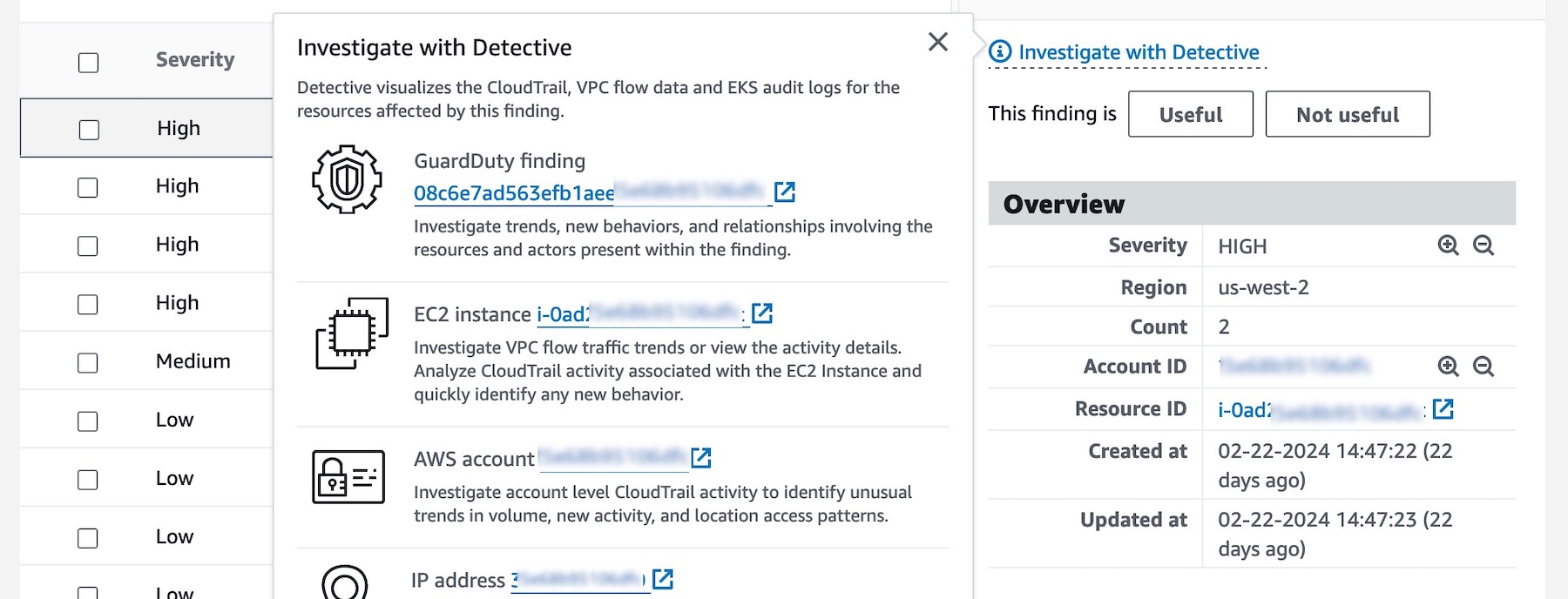

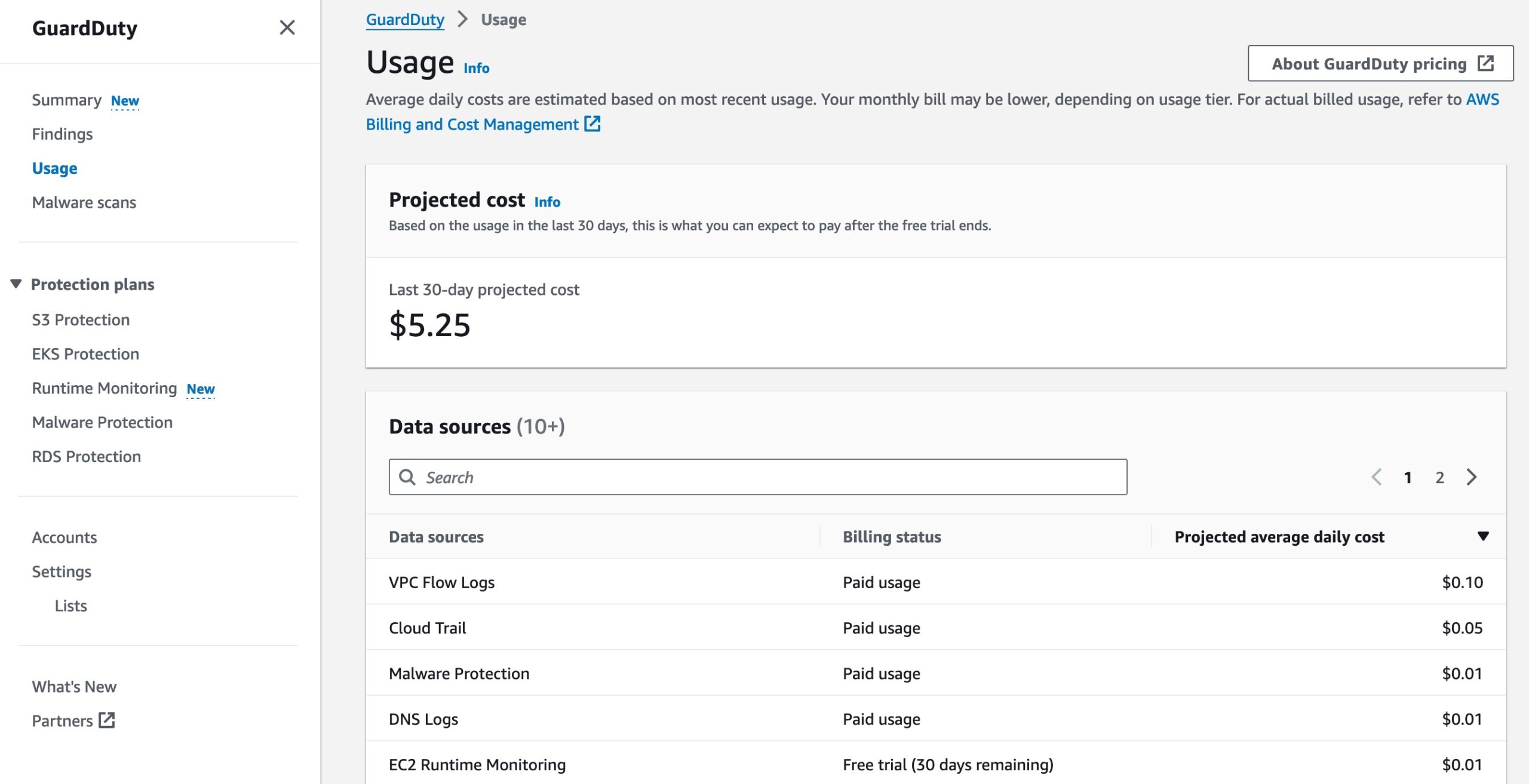

To help you manage costs for Deadline Cloud, you can use a budget manager to create and edit budgets. You can also use a usage explorer to view how many AWS resources are used and the estimated costs for those resources.

Choose Budgets on the Deadline Cloud monitor page to create your budget for your farm.

You can create budget amounts and limits and set automated actions to help reduce or stop additional spend against the budget.
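
The idea of a budget limit with automated actions can be sketched like this. The thresholds, the action labels, and the `triggered_actions` helper are hypothetical and chosen only to show the logic; they are not the service's own settings or enum values.

```python
from dataclasses import dataclass


@dataclass
class BudgetAction:
    threshold_pct: float  # percent of the budget at which the action fires
    action: str           # hypothetical label, not a service enum value


def triggered_actions(limit_usd, spent_usd, actions):
    """Return labels of actions whose threshold has been reached, lowest first."""
    if limit_usd <= 0:
        raise ValueError("budget limit must be positive")
    used_pct = 100.0 * spent_usd / limit_usd
    fired = [
        a for a in sorted(actions, key=lambda a: a.threshold_pct)
        if used_pct >= a.threshold_pct
    ]
    return [a.action for a in fired]


actions = [
    BudgetAction(100.0, "stop-scheduling-new-tasks"),
    BudgetAction(75.0, "notify-producer"),
]

print(triggered_actions(1000.0, 800.0, actions))
# ['notify-producer']
print(triggered_actions(1000.0, 1000.0, actions))
# ['notify-producer', 'stop-scheduling-new-tasks']
```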

Choose Usage in the Deadline Cloud monitor page to find real-time metrics on the activity happening on each farm. You can look at the farm’s costs by different variables, such as queue, job, or user. Choose various time frames to find usage during a specific period and look at usage trends over time.
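
Breaking costs down by queue, job, or user amounts to grouping usage records by one dimension and summing. The sketch below shows that aggregation on made-up data: the record fields, names, and dollar amounts are hypothetical and are not an export format of the usage explorer.

```python
from collections import defaultdict

# Hypothetical usage records; field names and values are illustrative only.
RECORDS = [
    {"queue": "film-shots", "job": "wrench-turntable", "user": "artist-a", "cost_usd": 4.20},
    {"queue": "film-shots", "job": "robot-walk", "user": "artist-b", "cost_usd": 7.50},
    {"queue": "previz", "job": "wrench-lowres", "user": "artist-a", "cost_usd": 1.30},
]


def cost_by(records, dimension):
    """Sum approximate cost per value of `dimension` ('queue', 'job', or 'user')."""
    totals = defaultdict(float)
    for record in records:
        totals[record[dimension]] += record["cost_usd"]
    # Round to cents to hide floating-point noise in the sums.
    return {key: round(total, 2) for key, total in totals.items()}


print(cost_by(RECORDS, "queue"))  # {'film-shots': 11.7, 'previz': 1.3}
print(cost_by(RECORDS, "user"))   # {'artist-a': 5.5, 'artist-b': 7.5}
```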

The costs displayed in the usage explorer are approximate. Use them as a guide for managing your resources. There may be other costs from using other connected AWS resources, such as Amazon S3, Amazon CloudWatch, and other services that are not accounted for in the usage explorer.

To learn more, visit Managing budgets and usage for Deadline Cloud in the AWS documentation.

Now available

AWS Deadline Cloud is now available in US East (Ohio), US East (N. Virginia), US West (Oregon), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Tokyo), Europe (Frankfurt), and Europe (Ireland) Regions.

Give AWS Deadline Cloud a try in the Deadline Cloud console. For more information, visit the Deadline Cloud product page and the Deadline Cloud User Guide in the AWS documentation. You can send feedback to AWS re:Post for AWS Deadline Cloud or through your usual AWS support contacts.

— Channy