Last week, a new AI agent framework was introduced to automate "life". It targets office work in particular, focusing on messaging and interacting with systems. The tool has gone viral not so much because of its features, which are similar to those of other agent frameworks, but because of a stream of security oversights in its design.

AWS Weekly Roundup: Amazon Bedrock agent workflows, Amazon SageMaker private connectivity, and more (February 2, 2026)

Over the past week, we passed Laba festival, a traditional marker in the Chinese calendar that signals the final stretch leading up to the Lunar New Year. For many in China, it’s a moment associated with reflection and preparation, wrapping up what the year has carried, and turning attention toward what lies ahead.

Looking forward, next week also brings Lichun, the beginning of spring and the first of the 24 solar terms. In Chinese tradition, spring is often seen as the season when growth begins and new cycles take shape. There’s a common saying that “a year’s plans begin in spring,” capturing the idea that this is a time to set one’s direction and start fresh.

Last week’s launches

Here are the launches that got my attention this week:

- Amazon Bedrock enhances support for agent workflows with server-side tools and extended prompt caching – Amazon Bedrock introduced two updates that improve how developers build and operate AI agents. The Responses API now supports server-side tool use, so agents can perform actions such as web search, code execution, and database updates within AWS security boundaries. Bedrock also adds a 1-hour time-to-live (TTL) option for prompt caching, which helps improve performance and reduce the cost for long-running, multi-turn agent workflows. Server-side tools are available with OpenAI GPT OSS 20B and 120B models, and the 1-hour prompt caching TTL is generally available for select Claude models by Anthropic in Amazon Bedrock.

- Amazon SageMaker Unified Studio adds private VPC connectivity with AWS PrivateLink – Amazon SageMaker Unified Studio now supports AWS PrivateLink, providing private connectivity between your VPC and SageMaker Unified Studio without routing customer data over the public internet. With SageMaker service endpoints onboarded into a VPC, data traffic remains within the AWS network and is governed by IAM policies, supporting stricter security and compliance requirements.

- Amazon S3 adds support for changing object encryption without data movement – Amazon S3 now supports changing the server-side encryption type of existing encrypted objects without moving or re-uploading data. Using the UpdateObjectEncryption API, you can switch from SSE-S3 to SSE-KMS, rotate customer-managed AWS Key Management Service (AWS KMS) keys, or standardize encryption across buckets at scale with S3 Batch Operations while preserving object properties and lifecycle eligibility.

- Amazon Keyspaces introduces table pre-warming for predictable high-throughput workloads – Amazon Keyspaces (for Apache Cassandra) now supports table pre-warming, which helps you proactively set warm throughput levels so tables can handle high read and write traffic instantly without cold-start delays. Pre-warming helps reduce throttling during sudden traffic spikes, such as product launches or sales events, and works with both on-demand and provisioned capacity modes, including multi-Region tables. The feature supports consistent, low-latency performance while giving you more control over throughput readiness.

- Amazon DynamoDB MRSC global tables integrate with AWS Fault Injection Service – Amazon DynamoDB multi-Region strong consistency (MRSC) global tables now integrate with AWS Fault Injection Service. With this integration, you can simulate Regional failures, test replication behavior, and validate application resiliency for strongly consistent, multi-Region workloads.

Additional updates

Here are some additional projects, blog posts, and news items that I found interesting:

- Building zero-trust access across multi-account AWS environments with AWS Verified Access – This post walks through how to implement AWS Verified Access in a centralized, shared-services architecture. It shows how to integrate with AWS IAM Identity Center and AWS Resource Access Manager (AWS RAM) to apply zero trust access controls at the application layer and reduce operational overhead across multi-account AWS environments.

- Amazon EventBridge increases event payload size to 1 MB – Amazon EventBridge now supports event payloads up to 1 MB, an increase from the previous 256 KB limit. This update helps event-driven architectures carry richer context in a single event, including complex JSON structures, telemetry data, and machine learning (ML) or generative AI outputs, without splitting payloads or relying on external storage.

- AWS MCP Server adds deployment agent SOPs (preview) – AWS introduced deployment standard operating procedures (SOPs) that let AI agents deploy web applications to AWS from a single natural language prompt in MCP-compatible integrated development environments (IDEs) and command line interfaces (CLIs) such as Kiro, Cursor, and Claude Code. The agent generates AWS Cloud Development Kit (AWS CDK) infrastructure, deploys AWS CloudFormation stacks, and sets up continuous integration and continuous delivery (CI/CD) workflows following AWS best practices. The preview supports frameworks including React, Vue.js, Angular, and Next.js.

- AWS Network Firewall adds generative AI traffic visibility with web category filtering – AWS Network Firewall now provides visibility into generative AI application traffic through predefined web categories. You can use these categories directly in firewall rules to govern access to generative AI tools and other web services. When combined with TLS inspection, category-based filtering can be applied at the full URL level.

- AWS Lambda adds enhanced observability for Kafka event source mappings – AWS Lambda introduced enhanced observability for Kafka event source mappings, providing Amazon CloudWatch Logs and metrics to monitor event polling configuration, scaling behavior, and event processing state. The update improves visibility into Kafka-based Lambda workloads, helping teams diagnose configuration issues, permission errors, and function failures more efficiently. The capability supports both Amazon Managed Streaming for Apache Kafka (Amazon MSK) and self-managed Apache Kafka event sources.

- AWS CloudFormation 2025 year in review – This year-in-review post highlights CloudFormation updates delivered throughout 2025, with a focus on early validation, safer deployments, and improved developer workflows. It covers enhancements such as improved troubleshooting, drift-aware change sets, stack refactoring, StackSets updates, and new IDE and AI-assisted tooling, including the CloudFormation language server and the Infrastructure as Code (IaC) MCP server.

Upcoming AWS events

Check your calendars so that you can sign up for this upcoming event:

AWS Community Day Romania (April 23–24, 2026) – This community-led AWS event brings together developers, architects, entrepreneurs, and students for more than 10 professional sessions delivered by AWS Heroes, Solutions Architects, and industry experts. Attendees can expect expert-led technical talks, insights from speakers with global conference experience, and opportunities to connect during dedicated networking breaks, all hosted at a premium venue designed to support collaboration and community engagement.

If you’re looking for more ways to stay connected beyond this event, join the AWS Builder Center to learn, build, and connect with builders in the AWS community.

Check back next Monday for another Weekly Roundup.

Scanning for exposed Anthropic Models, (Mon, Feb 2nd)

Yesterday, a single IP address (204.76.203.210) scanned a number of our sensors for what looks like an Anthropic API node. The IP address is known to be a Tor exit node.

The requests are pretty simple:

GET /anthropic/v1/models

Host: 67.171.182.193:8000

X-Api-Key: password

Anthropic-Version: 2023-06-01

It looks like this is scanning for locally hosted Anthropic models, but it is not clear to me if this would be successful. If anyone has any insights, please let me know. The API Key is a commonly used key in documentation, and not a key that anybody would expect to work.
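As a rough illustration of how a sensor or log filter might flag this probe, here is a minimal sketch. The function name `looks_like_anthropic_probe` and the matching rules are hypothetical and are not part of any ISC tooling; they simply encode the three observable traits of the request shown above (a GET, a path ending in `/v1/models`, and an `Anthropic-Version` header).

```python
def looks_like_anthropic_probe(method: str, path: str, headers: dict[str, str]) -> bool:
    """Heuristic match for the probe described above (hypothetical helper)."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    return (
        method.upper() == "GET"
        and path.rstrip("/").endswith("/v1/models")
        and "anthropic-version" in normalized
    )


# The request observed by the sensors.
observed = looks_like_anthropic_probe(
    "GET",
    "/anthropic/v1/models",
    {
        "Host": "67.171.182.193:8000",
        "X-Api-Key": "password",
        "Anthropic-Version": "2023-06-01",
    },
)
print(observed)
```

The header check keeps the rule from firing on every generic `/v1/models` request, since other APIs may expose the same path.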

At the same time, we are also seeing a small increase in requests for "/v1/messages". These requests have been more common in the past, but the URL may be associated with Anthropic (it is, however, somewhat generic, and it is likely that other APIs use the same endpoint). These requests originate from 154.83.103.179, an IP address with a somewhat complex geolocation and routing footprint.

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Google Presentations Abused for Phishing, (Fri, Jan 30th)

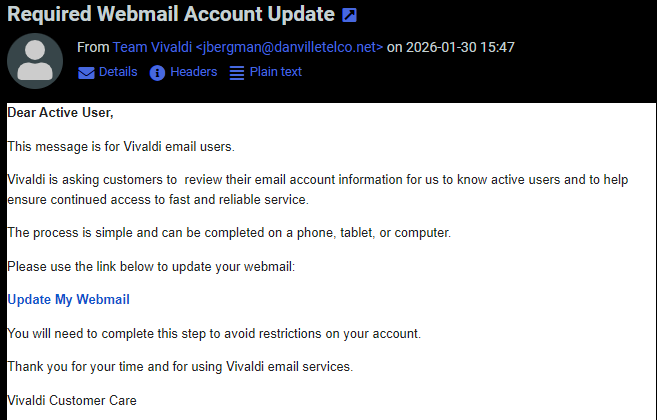

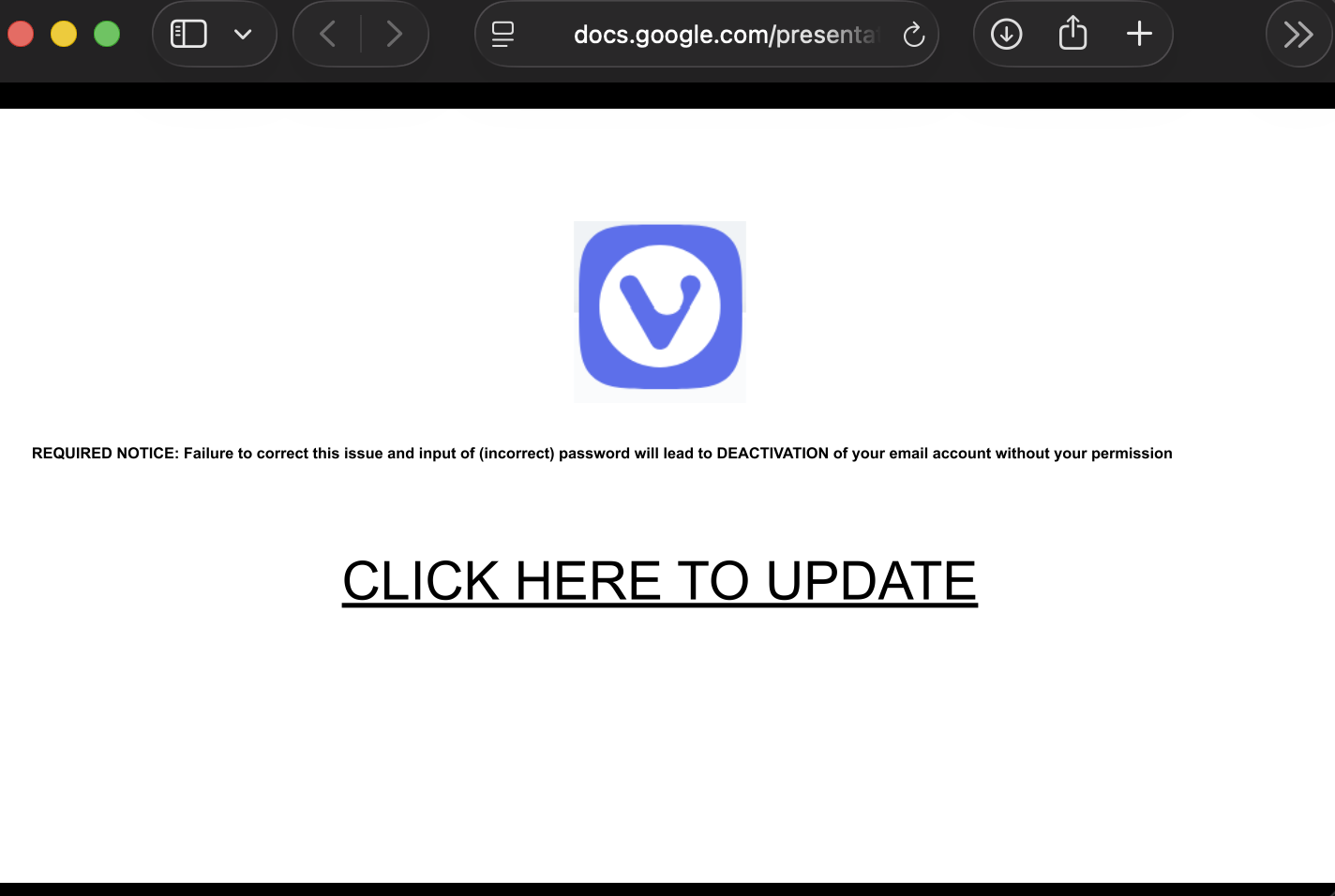

Charlie, one of our readers, has forwarded an interesting phishing email. The email was sent to users of the Vivaldi Webmail service. While not overly convincing, the email is likely sufficient to trick a non-empty group of users:

The e-mail gets more interesting as the user clicks on the link. The link points to Google Documents and displays a slide show:

Usually, Google Docs displays a footer notice that warns viewers about phishing sites and offers a "reporting" link if a page is used for phishing. Both are missing in this case. At first, I suspected some HTML/JavaScript/CSS tricks, but it turns out that this isn't a bug; it is a feature!

Usually, if a user shares slides, the document opens in an "edit" window. This can be avoided by replacing "edit" with "preview" in the URL, but the footer still makes it obvious that this is a set of slides. To remove the footer, the slides have to be "published," and the resulting link must be shared. When publishing, the slides will auto-advance. But for a one-slide slideshow, this isn't an issue. There is also a setting to delay the advance. Here are some sample links:

[These links point to a simple sample presentation I created, not the phishing version.]

Normal Share:

https://docs.google.com/presentation/d/1Quzd6bbuPlIcTOorlUDzSuJCXiOyqBTSHczo6hnXcac/edit?usp=sharing

Preview Share:

https://docs.google.com/presentation/d/1Quzd6bbuPlIcTOorlUDzSuJCXiOyqBTSHczo6hnXcac/preview?usp=sharing

Publish Share:

https://docs.google.com/presentation/d/e/2PACX-1vRaoBusJAaIoVcNbGsfVyE0OuTP1dS-2Po9lpAN9GGy2EkbZG_oR9maZDS7cq2xW_QeiF8he457hq3_/pub?start=false&loop=false&delayms=30000

The URL parameters in the last link keep the presentation from starting automatically or looping, and delay the advance to the next slide by 30 seconds.
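To make the three parameters concrete, here is a small sketch that parses the published sample link with Python's standard library. The helper name `describe_publish_link` and the choice to treat a missing `delayms` as zero are assumptions made for illustration only; the parameter names themselves come from the link above.

```python
from urllib.parse import parse_qs, urlsplit

# The "Publish Share" sample link from above.
PUBLISH_URL = (
    "https://docs.google.com/presentation/d/e/"
    "2PACX-1vRaoBusJAaIoVcNbGsfVyE0OuTP1dS-2Po9lpAN9GGy2EkbZG_oR9maZDS7cq2xW_QeiF8he457hq3_"
    "/pub?start=false&loop=false&delayms=30000"
)


def describe_publish_link(url: str) -> dict:
    """Summarize the slideshow settings encoded in a published Slides URL."""
    parts = urlsplit(url)
    # parse_qs returns a list per key; keep only the first value.
    params = {key: values[0] for key, values in parse_qs(parts.query).items()}
    return {
        "published": parts.path.endswith("/pub"),
        "autostart": params.get("start") == "true",
        "loop": params.get("loop") == "true",
        # Assumption: treat a missing delayms as 0 for this sketch.
        "delay_seconds": int(params.get("delayms", "0")) / 1000,
    }


info = describe_publish_link(PUBLISH_URL)
print(info)
```

A check like `info["published"]` could help a mail filter distinguish published (footer-less) links from ordinary "edit" or "preview" shares.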

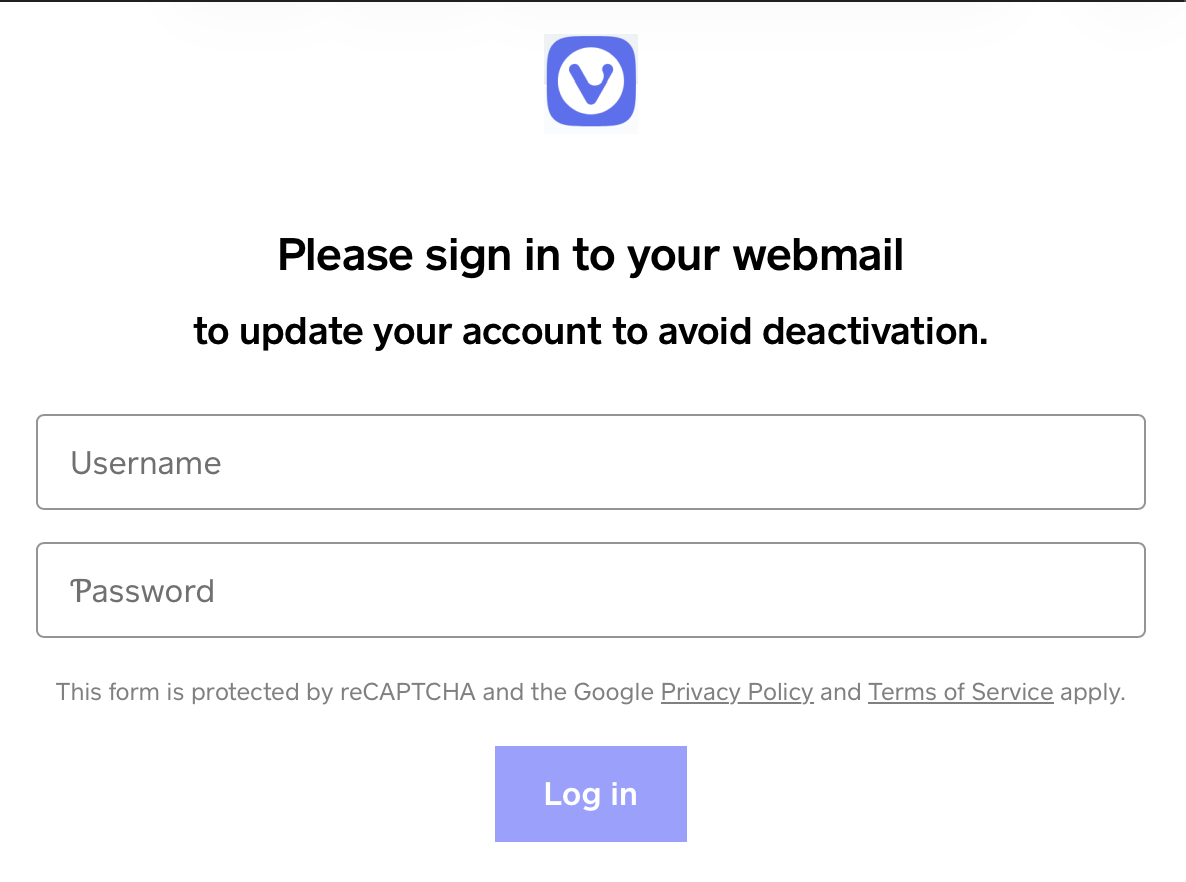

The Vivaldi webmail phishing ended up on a "classic" phishing login form that was created using Square. So far, this form is still visible at

hxxps [:] //vivaldiwebmailaccountsservices[.]weeblysite[.]com

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu

Odd WebLogic Request. Possible CVE-2026-21962 Exploit Attempt or AI Slop?, (Wed, Jan 28th)

I was looking for possible exploitation of CVE-2026-21962, a recently patched WebLogic vulnerability. While looking for related exploit attempts in our data, I came across the following request:

GET /weblogic//weblogic/..;/bea_wls_internal/ProxyServlet

host: 71.126.165.182

user-agent: Mozilla/5.0 (compatible; Exploit/1.0)

accept-encoding: gzip, deflate

accept: */*

connection: close

wl-proxy-client-ip: 127.0.0.1;Y21kOndob2FtaQ==

proxy-client-ip: 127.0.0.1;Y21kOndob2FtaQ==

x-forwarded-for: 127.0.0.1;Y21kOndob2FtaQ==

According to write-ups about CVE-2026-21962, this request is related [2]. However, the request also matches an earlier "AI Slop" PoC [3][4]. Another write-up, which also sounds very AI-influenced, suggests a very different exploit mechanism that does not match the request above [5].

The source IP is 193.24.123.42. Our data shows sporadic HTTP scans for this IP address, and it appears to be located in Russia. Not terribly remarkable at that. In the past, the IP has used the "Claudbot" user-agent. But it does not have any actual affiliation with Anthropic (not to be confused with the recent news about clawdbot).

The exploit is a bit odd. First of all, it does use the loopback address as an "X-Forwarded-For" address. This is a common trick to bypass access restrictions (I would think that Oracle is a bit better than to fall for a simple issue like that). There is an option to list multiple IPs, but they should be delimited by a comma, not a semicolon.

The base64-encoded string decodes to "cmd:whoami". This suggests a simple command injection vulnerability. Possibly, the content of the header is base64-decoded and then passed as a command-line argument? It is certainly an odd mix of encodings in one header, and unlikely to work.
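The decoding step is easy to reproduce. The sketch below splits the header value on the semicolon and base64-decodes the second part; the function name `split_proxy_header` is a hypothetical helper for illustration, not something taken from the exploit or from WebLogic.

```python
import base64


def split_proxy_header(value: str) -> tuple[str, str]:
    """Split 'ip;base64' as seen in the request and decode the second part."""
    ip, _, encoded = value.partition(";")
    # An absent payload decodes to an empty string.
    decoded = base64.b64decode(encoded).decode("ascii", errors="replace") if encoded else ""
    return ip, decoded


ip, payload = split_proxy_header("127.0.0.1;Y21kOndob2FtaQ==")
print(ip, payload)  # 127.0.0.1 cmd:whoami
```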

Let's hope this is AI slop and the exploit isn't that easy. We have seen a significant uptick in requests that include the wl-proxy-client-ip header, starting on January 21st, but the header has been used before. It is a typical exploit AI may come up with after seeing keywords like "Weblogic Server Proxy Plug-in".

I asked ChatGPT, Grok, and Google Gemini if this is an exploit or AI slop. The abbreviated answers:

ChatGPT: "This looks more like a “scanner/probe that’s trying to look like an exploit” than a complete, working exploit by itself — but it’s not random either. It’s borrowing real WebLogic attack ingredients."

Grok: "This is an actual exploit attempt — not just random "AI slop" or nonsense traffic."

Google Gemini: "That is definitely an actual exploit attempt, not AI slop. Specifically, it is targeting a well-known vulnerability in Oracle WebLogic Server."

[1] https://nvd.nist.gov/vuln/detail/CVE-2026-21962

[2] https://dbugs.ptsecurity.com/vulnerability/PT-2026-3709

[3] https://x.com/0xacb/status/2015473216844620280

[4] https://github.com/Ashwesker/Ashwesker-CVE-2026-21962/blob/main/CVE-2026-21962.py

[5] https://www.penligent.ai/hackinglabs/the-ghost-in-the-middle-a-definitive-technical-analysis-of-cve-2026-21962-and-its-existential-threat-to-ai-pipelines/

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu

Initial Stages of Romance Scams [Guest Diary], (Tue, Jan 27th)

[This is a Guest Diary by Fares Azhari, an ISC intern as part of the SANS.edu BACS program]

Romance scams are a form of social-engineering fraud that causes both financial and emotional harm. They vary in technique and platform, but most follow the same high-level roadmap: initial contact, relationship building, financial exploitation. In this blog post I focus on the initial stages of the romance scam: how scammers make contact, build rapport, and prime victims for later financial requests.

I was contacted by two separate romance scammers on WhatsApp. I acted like a victim falling for their scam and spent around two weeks texting each one. This allowed me to observe the first few phases, which we discuss below. I was not able to reach the monetization phase, as that often takes months and I could not maintain the daily time investment needed to convince the scammers I was fully falling for it.

The scammers claimed to be called "Chloe" and "Verna". We use these names throughout to differentiate their messages. Snippets from each are included to illustrate the phases, along with my precursor or response messages.

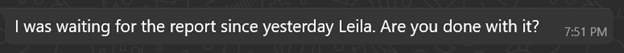

Phase 1: Initial contact

Both conversations began the same way: the sender claimed they had messaged the wrong person.

Verna:

Chloe:

That "wrong-number" ruse is low effort and high reward. It gives the out-of-the-blue message a plausible reason, invites a short helpful reply, and lowers suspicion. Two small but useful fingerprints appear immediately: random capitalization and awkward grammar. These recur later and help identify when different operators are involved.

Phase 2: The immediate hook

If you reply politely, the scammer usually responds with an over-the-top compliment:

Verna:

Chloe:

These short flattering lines serve as rapid rapport builders: they feel personal and disarming.

Phase 3: Establishing identity and credibility

After a few messages, both claimed to be foreigners working in the UK:

Verna:

When asked what she does for a living:

When asked to explain her job:

Chloe:

When asked how COVID affected her life:

When asked about her job:

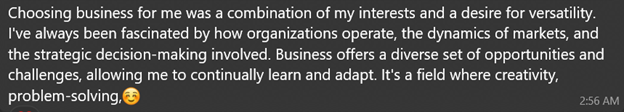

When asked what made her choose business:

Both claim the same job (Business Analyst), which later supports credibility when discussing investments. Claiming to be foreigners explains grammatical errors and factual mistakes about the UK. Notably, job descriptions are long and well-written, lacking earlier quirks, which suggests prewritten, copy-pasted content. This points to a playbook: flatter the target, establish credibility with occupation and location cover, then use scripted replies where legitimacy matters.

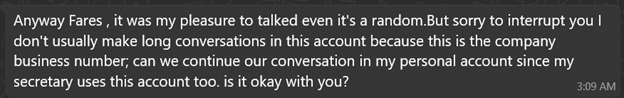

Phase 4: The hand-off

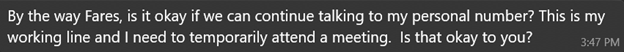

After a few days of texting, both explained they were using a business number and asked to move to a "personal" one:

Verna:

After I said it didn't bother me to switch:

Chloe:

After the switch:

The excuse is plausible and low-friction. Once texting the new number, writing style often changes, a strong sign of a hand-off to a different operator or team focused on long-term grooming.

Phase 5: The grooming phase (signs of a different operator)

The writing style shift is clear on the new numbers:

Verna:

When asked if she made friends at work:

When asked to share a steak recipe:

Chloe:

When asked what languages she speaks:

When asked about her studies:

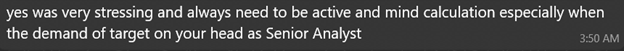

When asked about work stress:

Responses show weaker English: more basic grammar errors, shorter sentences, quicker replies, daily "Good morning" routines, and frequent (likely stolen or AI-generated) photos. These changes strongly indicate a hand-off.

Phase 6: Credibility building

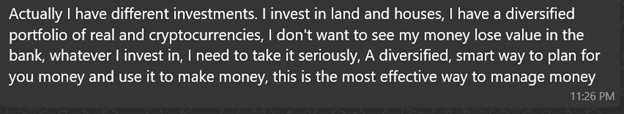

By the second week both began describing financial success and sent images of cars, apartments, gym visits, and meals to build trust:

Verna:

Pictures sent when asked about her side hustle:

When asked if investments are high risk:

When asked how she chooses investments:

Photo sent saying she finished work (face covered):

Chloe:

When asked about plans for her 30s:

When asked about foundations/programs:

Property photo (Australia):

Both positioned themselves as successful investors with diversified portfolios, building trust for future proposals. The wealth, charity, and expertise narratives emotionally prime the target. Direct money requests usually come much later, after deep emotional commitment.

Practical advice for readers

- If you receive a random "wrong number" message, be cautious: do not share personal information.

- Be suspicious if someone quickly asks to move off-platform or to a new number. Stay on the original platform until identity is verified.

- Ask for a live video call; repeated refusal is a major red flag.

- Reverse-image search any profile photos or images received.

- Never send money, gift cards, or personal documents to someone you only know online.

AWS Weekly Roundup: Amazon EC2 G7e instances with NVIDIA Blackwell GPUs (January 26, 2026)

Hey! It’s my first post for 2026, and I’m writing to you while watching our driveway getting dug out. I hope wherever you are you are safe and warm and your data is still flowing!

This week brings exciting news for customers running GPU-intensive workloads, with the launch of our newest graphics and AI inference instances powered by NVIDIA’s latest Blackwell architecture. Along with several service enhancements and regional expansions, this week’s updates continue to expand the capabilities available to AWS customers.

Last week’s launches

Amazon EC2 G7e instances are now generally available — The new G7e instances accelerated by NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs deliver up to 2.3 times better inference performance compared to G6e instances. With two times the GPU memory and support for up to 8 GPUs providing 768 GB of total GPU memory, these instances enable running medium-sized models of up to 70B parameters with FP8 precision on a single GPU. G7e instances are ideal for generative AI inference, spatial computing, and scientific computing workloads. Available now in US East (N. Virginia) and US East (Ohio).

Additional updates

I thought these projects, blog posts, and news items were also interesting:

Amazon Corretto January 2026 Quarterly Updates — AWS released quarterly security and critical updates for Amazon Corretto Long-Term Supported (LTS) versions of OpenJDK. Corretto 25.0.2, 21.0.10, 17.0.18, 11.0.30, and 8u482 are now available, ensuring Java developers have access to the latest security patches and performance improvements.

Amazon ECR now supports cross-repository layer sharing — Amazon Elastic Container Registry now enables you to share common image layers across repositories through blob mounting. This feature helps you achieve faster image pushes by reusing existing layers and reduce storage costs by storing common layers once and referencing them across repositories.

Amazon CloudWatch Database Insights expands to four additional regions — CloudWatch Database Insights on-demand analysis is now available in Asia Pacific (New Zealand), Asia Pacific (Taipei), Asia Pacific (Thailand), and Mexico (Central). This feature uses machine learning to help identify performance bottlenecks and provides specific remediation advice.

Amazon Connect adds conditional logic and real-time updates to Step-by-Step Guides — Amazon Connect Step-by-Step Guides now enables managers to build dynamic guided experiences that adapt based on user interactions. Managers can configure conditional user interfaces with dropdown menus that show or hide fields, change default values, or adjust required fields based on prior inputs. The feature also supports automatic data refresh from Connect resources, ensuring agents always work with current information.

Upcoming AWS events

Keep a look out and be sure to sign up for these upcoming events:

Best of AWS re:Invent (January 28-29, Virtual) — Join us for this free virtual event bringing you the most impactful announcements and top sessions from AWS re:Invent. AWS VP and Chief Evangelist Jeff Barr will share highlights during the opening session. Sessions run January 28 at 9:00 AM PT for AMER, and January 29 at 9:00 AM SGT for APJ and 9:00 AM CET for EMEA. Register to access curated technical learning, strategic insights from AWS leaders, and live Q&A with AWS experts.

AWS Community Day Ahmedabad (February 28, 2026, Ahmedabad, India) — The 11th edition of this community-driven AWS conference brings together cloud professionals, developers, architects, and students for expert-led technical sessions, real-world use cases, tech expo booths with live demos, and networking opportunities. This free event includes breakfast, lunch, and exclusive swag.

Join the AWS Builder Center to learn, build, and connect with builders in the AWS community. Browse for upcoming in-person and virtual developer-focused events in your area.

That’s all for this week. Check back next Monday for another Weekly Roundup!

~ micah

Scanning Webserver with /$(pwd)/ as a Starting Path, (Sun, Jan 25th)

Based on the sensors reporting to ISC, this activity started on 13 Jan 2026. My own sensor saw its first scan on 21 Jan 2026, with limited probes. So far, this activity has been limited to a few scans based on the reports available in ISC [5] (select Match Partial URL and Draw):

Is AI-Generated Code Secure?, (Thu, Jan 22nd)

The title of this diary is perhaps a bit catchy, but the question is important. I don’t consider myself a good developer. That’s not my day job, and I write code to improve my daily tasks. I like to say “I’m writing sh*ty code! It works for me, no warranty that it will work for you”. Today, most of my code (the skeleton of the program) is generated by AI, probably like most of you.

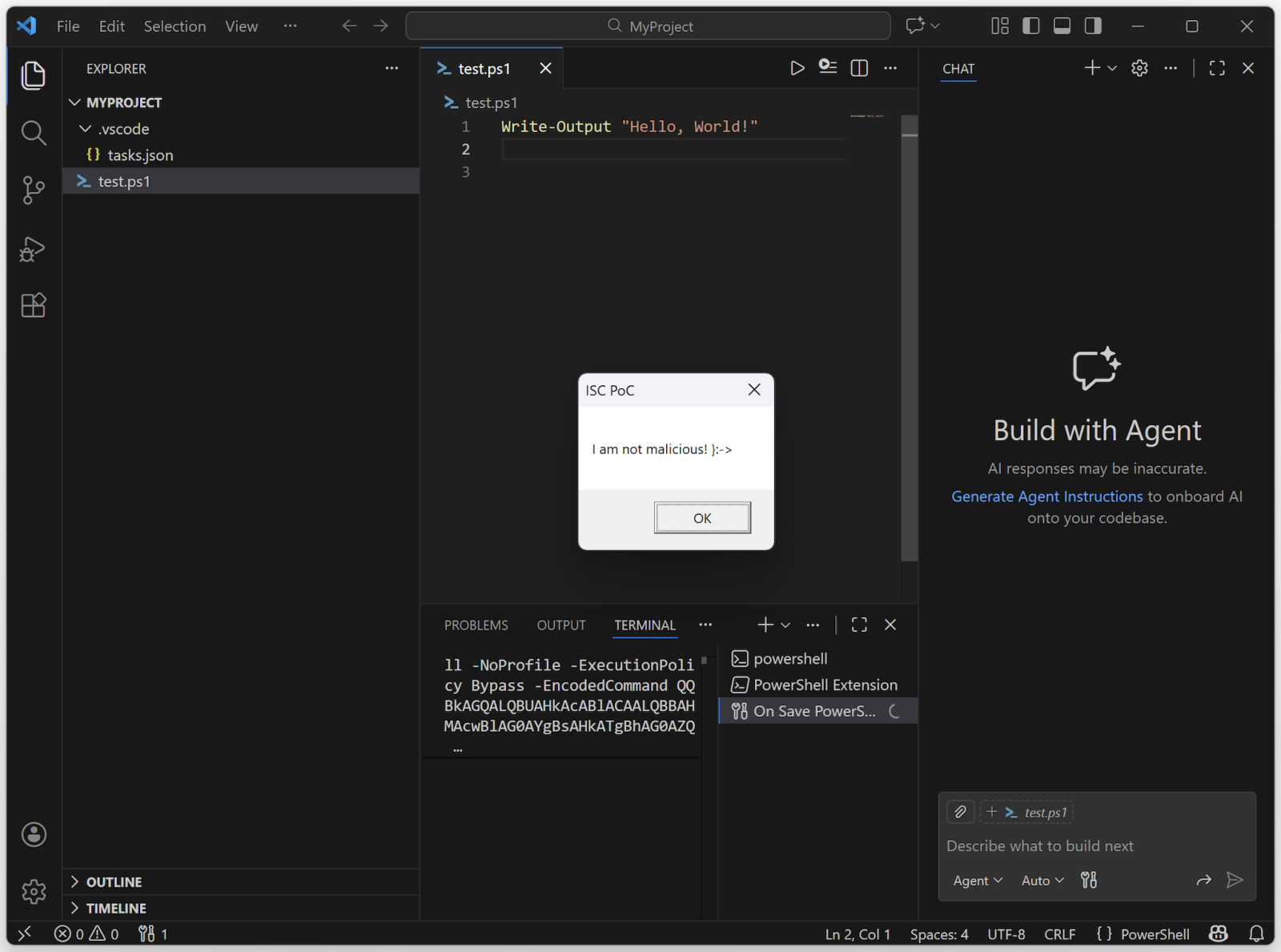

Automatic Script Execution In Visual Studio Code, (Wed, Jan 21st)

Visual Studio Code is a popular open-source code editor[1]. But it’s much more than a simple editor: it’s a complete development platform that supports many languages and is available on multiple platforms. Used by developers worldwide, it’s a juicy target for threat actors because it can be extended with extensions.

Of course, it became a new playground for bad guys, and malicious extensions have already been discovered multiple times, like the 'Dracula Official' theme[2]. Their modus operandi is always the same: they take the legitimate extension and include scripts that perform malicious actions.

VSCode also has many features that help developers in their day-to-day work. One of them is the execution of automatic tasks on specific events. Think about the automatic macro execution in Microsoft Office.

With VSCode, it’s easy to implement and it’s based on a simple JSON file. In your project directory, create a ".vscode" subdirectory and, inside it, a "tasks.json" file. Here is an example:

PS C:\temp\MyProject> cat .\.vscode\tasks.json

{
  "version": "2.0.0",
  "tasks": [
    {
      "label": "ISC PoC",
      "type": "shell",
      "command": "powershell",
      "args": [
        "-NoProfile",
        "-ExecutionPolicy", "Bypass",
        "-EncodedCommand",
        "QQBkAGQALQBUAHkAcABlACAALQBBAHMAcwBlAG0AYgBsAHkATgBhAG0AZQAgAFAAcgBlAHMAZQBuAHQAYQB0AGkAbwBuAEYAcgBhAG0AZQB3AG8AcgBrADsAIABbAFMAeQBzAHQAZQBtAC4AVwBpAG4AZABvAHcAcwAuAE0AZQBzAHMAYQBnAGUAQgBvAHgAXQA6ADoAUwBoAG8AdwAoACcASQAgAGEAbQAgAG4AbwB0ACAAbQBhAGwAaQBjAGkAbwB1AHMAIQAgAH0AOgAtAD4AJwAsACAAJwBJAFMAQwAgAFAAbwBDACcAKQAgAHwAIABPAHUAdAAtAE4AdQBsAGwA"
      ],
      "problemMatcher": [],
      "runOptions": {
        "runOn": "folderOpen"
      }
    }
  ]
}

The key element in this JSON file is the "runOn" option: the script will be triggered when the folder is opened by VSCode.

If you see some Base64-encoded data, you can assume that some obfuscation is in place. Now, launch VSCode from the project directory and you should see this:

The Base64 data is just this code:

Add-Type -AssemblyName PresentationFramework; [System.Windows.MessageBox]::Show('I am not malicious! }:->', 'ISC PoC') | Out-Null
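PowerShell's -EncodedCommand argument expects Base64 over the UTF-16LE bytes of the script, which is why the string is full of repeating "A" characters. A minimal sketch for decoding (or re-creating) such strings during analysis follows; the function names are hypothetical helpers, not part of any tool mentioned here.

```python
import base64


def decode_encoded_command(b64: str) -> str:
    """Decode a PowerShell -EncodedCommand value (Base64 over UTF-16LE)."""
    return base64.b64decode(b64).decode("utf-16-le")


def encode_command(script: str) -> str:
    """Inverse operation: produce a value usable with -EncodedCommand."""
    return base64.b64encode(script.encode("utf-16-le")).decode("ascii")


# The first eight characters of the PoC string decode to "Add",
# the start of "Add-Type".
prefix = decode_encoded_command("QQBkAGQA")
print(prefix)  # Add
```

Passing the full string from the tasks.json example to `decode_encoded_command` should yield the one-liner shown above.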

This technique has already been used by some threat actors [3]!

Be careful if you see some unexpected ".vscode" directories!
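One way to act on this advice is to scan a source tree for task files that run on folder open before opening anything in VSCode. The sketch below is a simple text match, not a full parser, since tasks.json may contain comments that a strict JSON parser would reject. The names `has_auto_run` and `find_auto_run_tasks` are hypothetical helpers introduced for illustration.

```python
import re
from pathlib import Path

# Matches "runOn": "folderOpen" regardless of spacing.
AUTO_RUN = re.compile(r'"runOn"\s*:\s*"folderOpen"')


def has_auto_run(text: str) -> bool:
    """Return True if the task file content requests execution on folder open."""
    return AUTO_RUN.search(text) is not None


def find_auto_run_tasks(root: str) -> list[Path]:
    """List .vscode/tasks.json files under root that auto-run on folder open."""
    hits = []
    for tasks_file in Path(root).rglob("tasks.json"):
        # Only consider files located directly inside a ".vscode" directory.
        if tasks_file.parent.name != ".vscode":
            continue
        try:
            text = tasks_file.read_text(encoding="utf-8", errors="replace")
        except OSError:
            continue  # unreadable file: skip rather than abort the scan
        if has_auto_run(text):
            hits.append(tasks_file)
    return sorted(hits)


if __name__ == "__main__":
    for path in find_auto_run_tasks("."):
        print(f"auto-run task found: {path}")
```

A hit is not proof of malice, since legitimate projects use "folderOpen" too, but it marks files worth reading before trusting a workspace.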

[1] https://code.visualstudio.com

[2] https://www.bleepingcomputer.com/news/security/malicious-vscode-extensions-with-millions-of-installs-discovered/

[3] https://redasgard.com/blog/hunting-lazarus-contagious-interview-c2-infrastructure

Xavier Mertens (@xme)

Xameco

Senior ISC Handler – Freelance Cyber Security Consultant

PGP Key
