I am TA-ing for Taz for the new SANS FOR577 class again, and I figured it was time to release some fixes to my le-hex-to-ip.py script, which I wrote last fall while doing the same. I still plan to update the script so it can take the hex strings from stdin, but in the meantime I wanted to get this fix out. I was already using Python 3's inet_ntoa() function to convert the IPv4 addresses, so I simplified the script by switching to inet_ntop(), which handles both IPv4 and IPv6 addresses and replaces my kludgy IPv6 handling. As a side effect, it also quite nicely handles IPv4-mapped IPv6 addresses (of the form ::ffff:192.168.1.75).
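To illustrate the idea, here is a minimal sketch of the conversion. It is not the actual le-hex-to-ip.py script. It assumes the input looks like the hex strings Linux exposes in /proc/net/tcp and /proc/net/tcp6, where each 32-bit word of the address is stored little-endian; the function name `le_hex_to_ip` is made up for this example.

```python
import socket
import struct


def le_hex_to_ip(hex_str: str) -> str:
    """Convert a little-endian hex string (8 or 32 hex digits) to an IP address.

    Assumption: every 32-bit word is stored little-endian, as in /proc/net/tcp*.
    """
    raw = bytes.fromhex(hex_str)
    if len(raw) not in (4, 16):
        raise ValueError("expected 8 hex digits (IPv4) or 32 hex digits (IPv6)")

    # Read each 32-bit word as little-endian, then repack in network order.
    word_count = len(raw) // 4
    words = struct.unpack("<%dI" % word_count, raw)
    packed = struct.pack(">%dI" % word_count, *words)

    # inet_ntop() covers both families, so no separate IPv6 code path is needed.
    family = socket.AF_INET if len(raw) == 4 else socket.AF_INET6
    return socket.inet_ntop(family, packed)


if __name__ == "__main__":
    print(le_hex_to_ip("4B01A8C0"))
    print(le_hex_to_ip("00000000000000000000000001000000"))
```

The only thing that differs between the two address families is the constant passed to inet_ntop(), which is why a single function can serve both.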

Monthly Archives: March 2024

1768.py's Experimental Mode, (Sat, Mar 23rd)

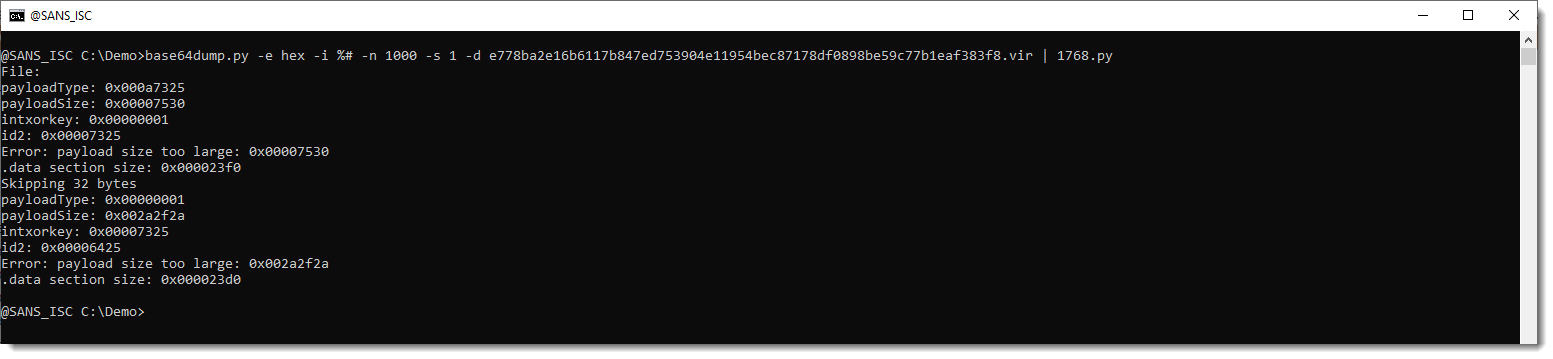

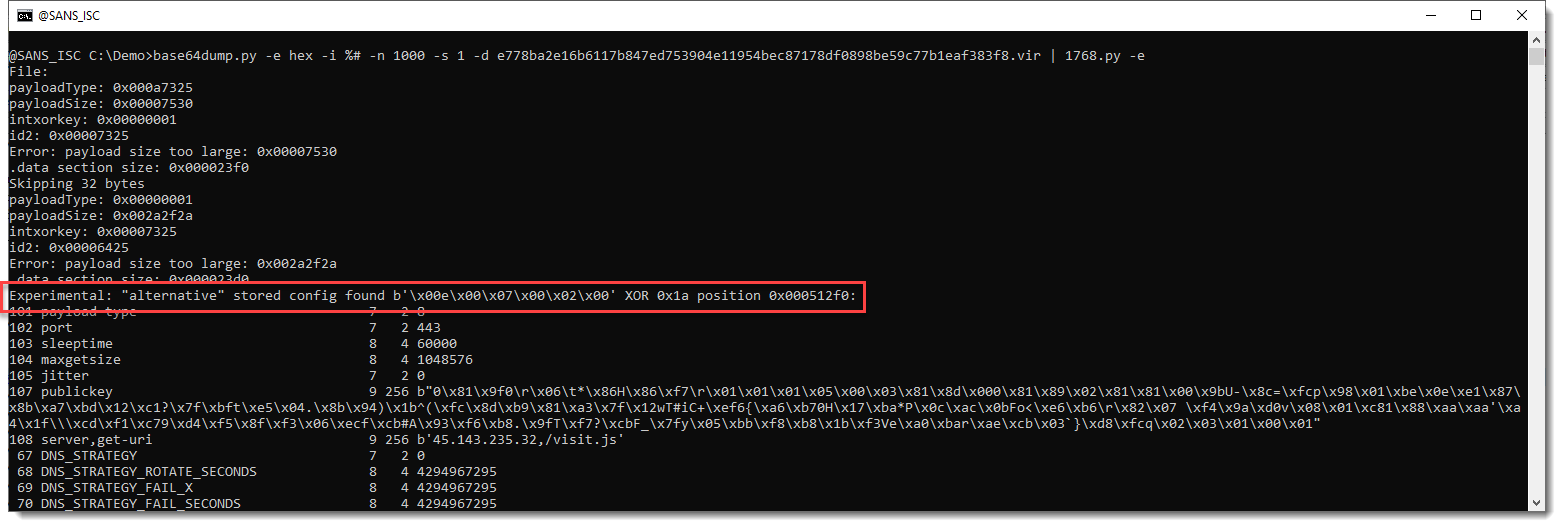

The reason I extracted a PE file in my last diary entry is that I discovered it was the dropper of a Cobalt Strike beacon @DebugPrivilege had pointed me to. My 1768.py tool crashed on the process memory dump. This is fixed now, but it still doesn't extract the configuration.

I did a manual analysis of the Cobalt Strike beacon, and found that it uses alternative data structures for the stored and runtime config.

I'm not sure if this is a (new) feature of Cobalt Strike, or a hack someone pulled off. I'm seeing very few similar samples on VirusTotal, so for the moment, I'm adding the decoders I developed for this to 1768.py as experimental features. These decoders won't run (like in the screenshot above), unless you use option -e.

With option -e, the alternative stored config is found and decoded:

And it can also analyze the process memory dumps I was pointed to:

Didier Stevens

Senior handler

Microsoft MVP

blog.DidierStevens.com

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Improve the security of your software supply chain with Amazon CodeArtifact package group configuration

Starting today, administrators of package repositories can manage the configuration of multiple packages in one single place with the new AWS CodeArtifact package group configuration capability. A package group allows you to define how packages are updated by internal developers or from upstream repositories. You can now allow or block internal developers to publish packages or allow or block upstream updates for a group of packages.

CodeArtifact is a fully managed package repository service that makes it easy for organizations to securely store and share software packages used for application development. You can use CodeArtifact with popular build tools and package managers such as NuGet, Maven, Gradle, npm, yarn, pip, twine, and the Swift Package Manager.

CodeArtifact supports on-demand importing of packages from public repositories such as npmjs.com, maven.org, and pypi.org. This allows your organization’s developers to fetch all their packages from one single source of truth: your CodeArtifact repository.

Simple applications routinely include dozens of packages. Large enterprise applications might have hundreds of dependencies. These packages help developers speed up the development and testing process by providing code that solves common programming challenges such as network access, cryptographic functions, or data format manipulation. These packages might be produced by other teams in your organization or maintained by third parties, such as open source projects.

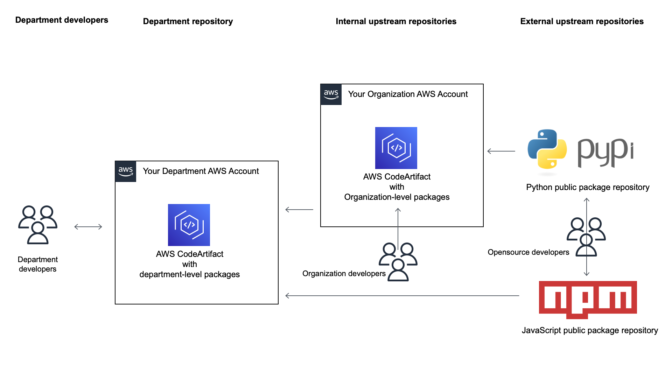

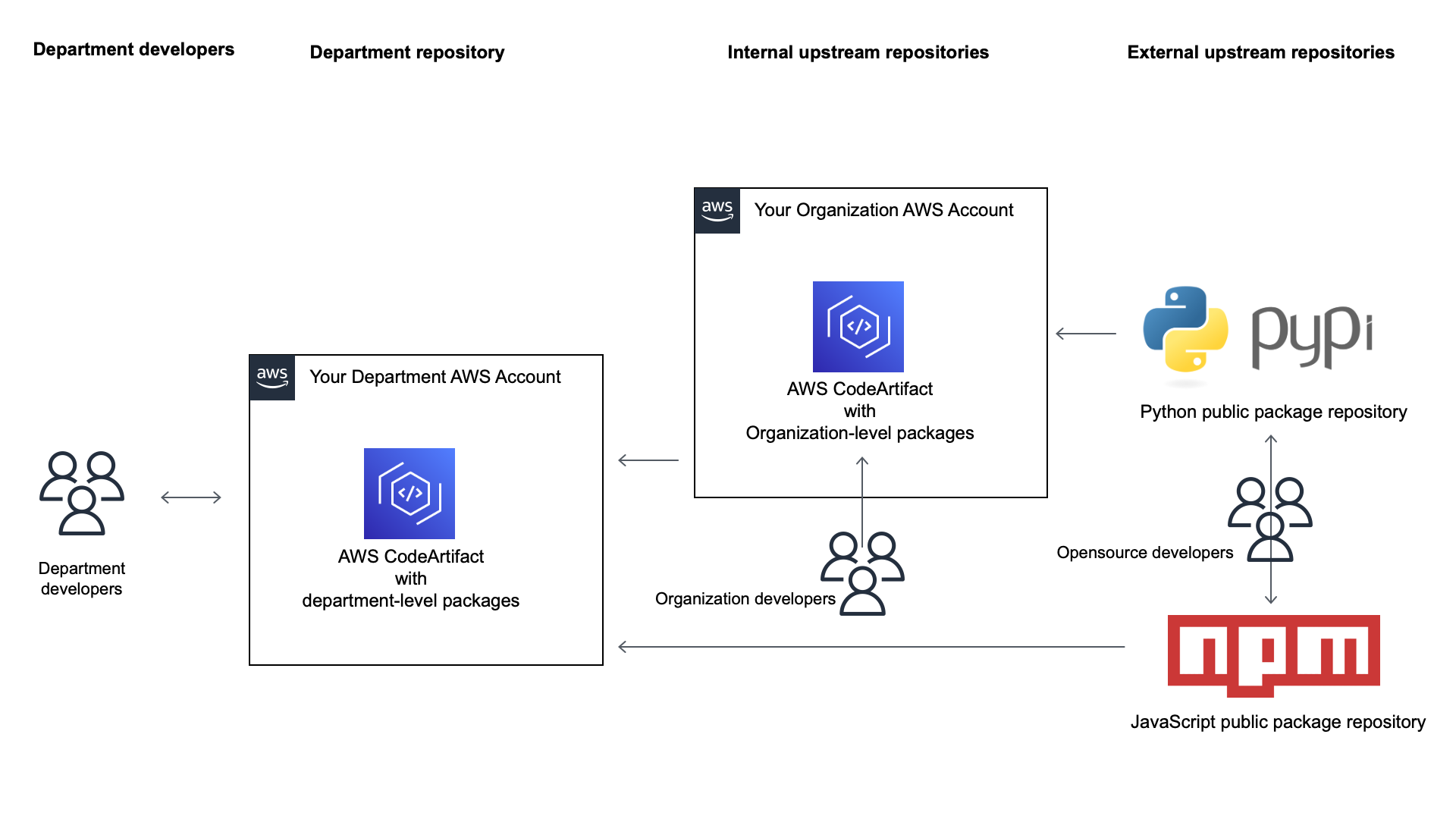

To minimize the risks of supply chain attacks, some organizations manually vet the packages that are available in internal repositories and the developers who are authorized to update these packages. There are three ways to update a package in a repository. Selected developers in your organization might push package updates. This is typically the case for your organization’s internal packages. Packages might also be imported from upstream repositories. An upstream repository might be another CodeArtifact repository, such as a company-wide source of approved packages or external public repositories offering popular open source packages.

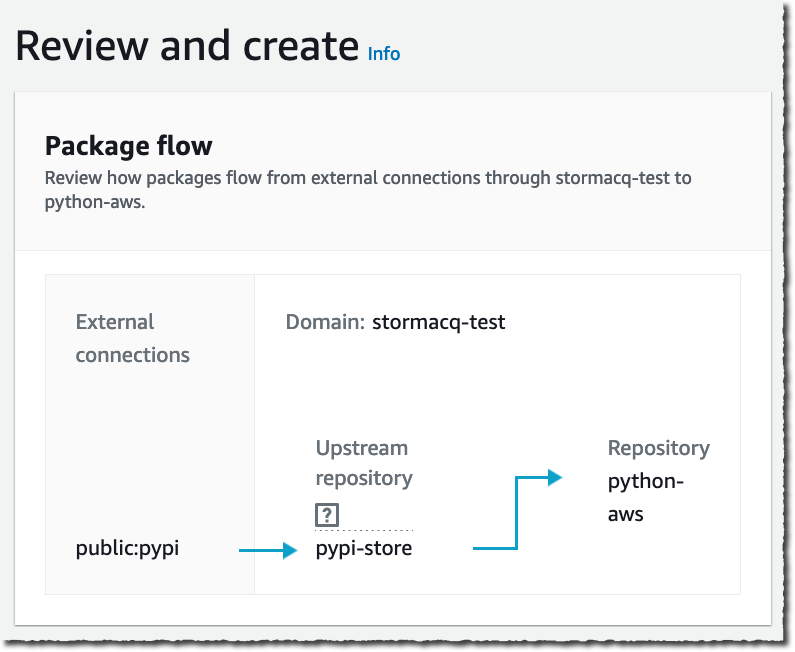

Here is a diagram showing different possibilities to expose a package to your developers.

When managing a repository, it is crucial to define how packages can be downloaded and updated. Allowing package installation or updates from external upstream repositories exposes your organization to typosquatting or dependency confusion attacks, for example. Imagine a bad actor publishing a malicious version of a well-known package under a slightly different name. For example, instead of coffee-script, the malicious package is cofee-script, with only one “f.” When your repository is configured to allow retrieval from upstream external repositories, all it takes is a distracted developer working late at night to type npm install cofee-script instead of npm install coffee-script to inject malicious code into your systems.
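As an illustration of how close the two names are, here is a small sketch that flags a requested package name when it nearly matches, but does not equal, a name on an approved list. This is not something CodeArtifact does for you; the approved list, the `near_misses` helper, and the 0.85 similarity cutoff are all assumptions made for the example.

```python
import difflib


def near_misses(requested, approved, cutoff=0.85):
    """Return approved names that `requested` closely resembles without matching."""
    if requested in approved:
        return []  # exact match, nothing suspicious
    return difflib.get_close_matches(requested, approved, n=3, cutoff=cutoff)


if __name__ == "__main__":
    approved = ["coffee-script", "lodash", "express"]  # hypothetical allow list
    for name in ("cofee-script", "coffee-script"):
        hits = near_misses(name, approved)
        if hits:
            print(f"{name}: looks like a typo of {hits[0]}")
        else:
            print(f"{name}: no near miss found")
```

A check like this only catches misspellings of names you already know about, which is why blocking the external upstream for sensitive packages remains the stronger control.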

CodeArtifact defines three permissions for the three possible ways of updating a package. Administrators can allow or block installation and updates coming from internal publish commands, from an internal upstream repository, or from an external upstream repository.

Until today, repository administrators had to manage these important security settings package by package. With today’s update, repository administrators can define these three security parameters for a group of packages at once. The packages are identified by their type, their namespace, and their name. This new capability operates at the domain level, not the repository level. It allows administrators to enforce a rule for a package group across all repositories in their domain. They don’t have to maintain package origin controls configuration in every repository.

Let’s see in detail how it works

Imagine that I manage an internal package repository with CodeArtifact and that I want to distribute only the versions of the AWS SDK for Python, also known as boto3, that have been vetted by my organization.

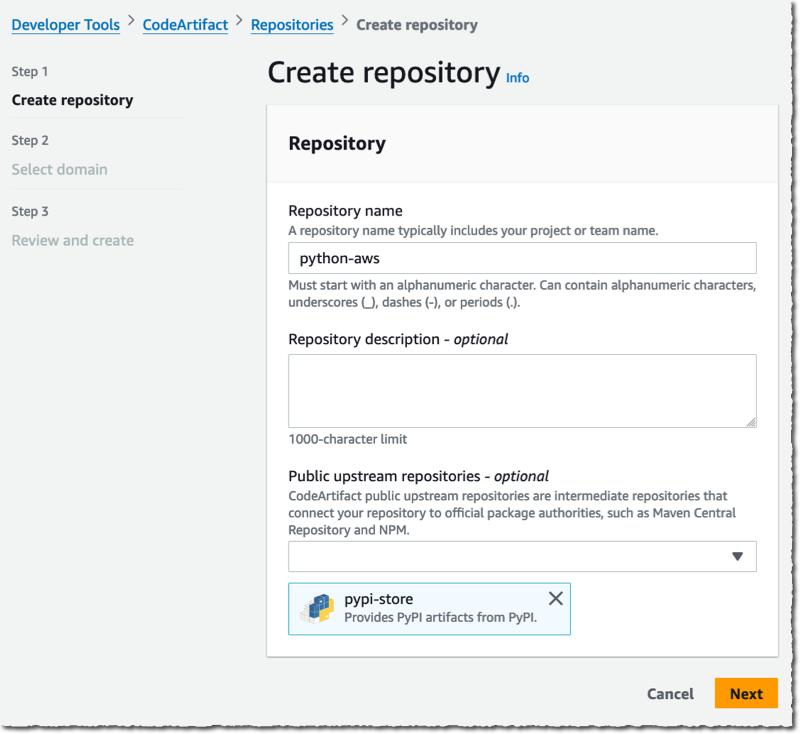

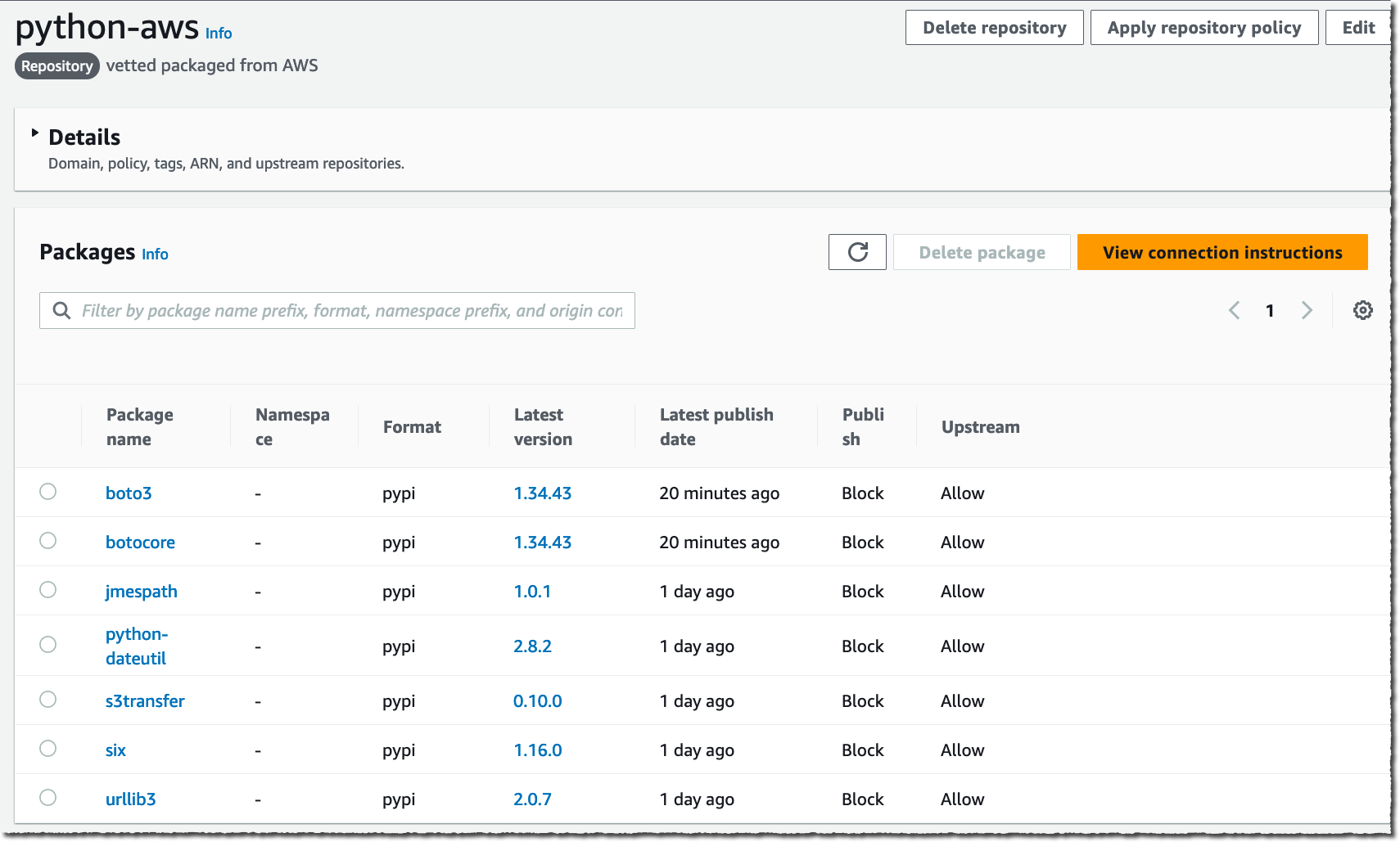

I navigate to the CodeArtifact page in the AWS Management Console, and I create a python-aws repository that will serve vetted packages to internal developers.

This creates a staging repository in addition to the repository I created. The external packages from pypi will first be staged in the pypi-store internal repository, where I will verify them before serving them to the python-aws repository. The python-aws repository is where my developers will connect to download them.

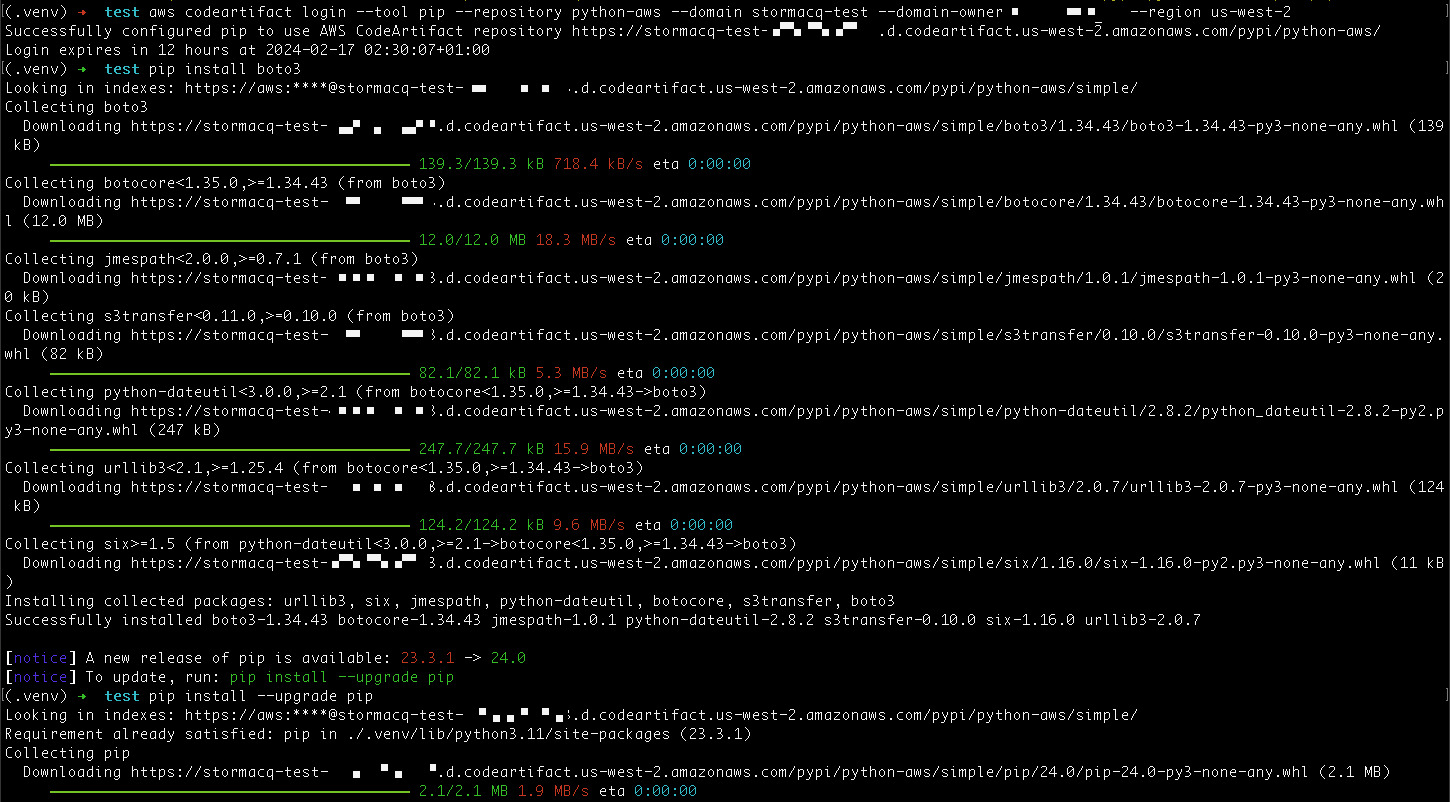

By default, when a developer authenticates against CodeArtifact and types pip install boto3, CodeArtifact downloads the packages from the public pypi repository, stages them in pypi-store, and copies them to python-aws.

Now, imagine I want to block CodeArtifact from fetching package updates from the upstream external pypi repository. I want python-aws to only serve packages that I approved from my pypi-store internal repository.

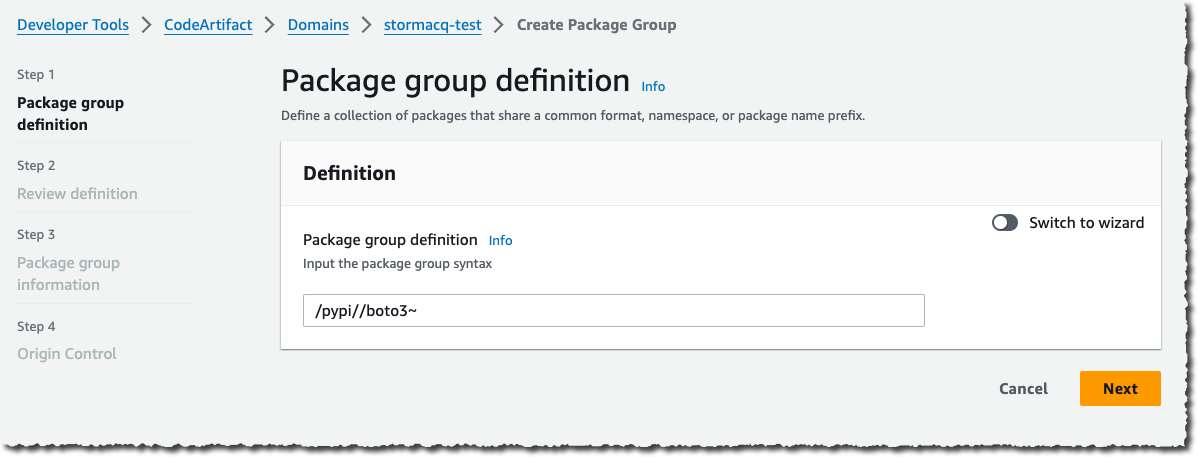

With the new capability that we released today, I can now apply this configuration for a group of packages. I navigate to my domain and select the Package Groups tab. Then, I select the Create Package Group button.

I enter the Package group definition. This expression defines what packages are included in this group. Packages are identified using a combination of three components: package format, an optional namespace, and name.

Here are a few examples of patterns that you can use for each of the allowed combinations:

- All package formats: /*

- A specific package format: /npm/*

- Package format and namespace prefix: /maven/com.amazon~

- Package format and namespace: /npm/aws-amplify/*

- Package format, namespace, and name prefix: /npm/aws-amplify/ui~

- Package format, namespace, and name: /maven/org.apache.logging.log4j/log4j-core$

I invite you to read the documentation to learn all the possibilities.

In my example, I want the group to include all packages coming from pypi with names starting with boto3. Python packages have no concept of namespace, so the namespace component stays empty. Therefore, I write /pypi//boto3~.

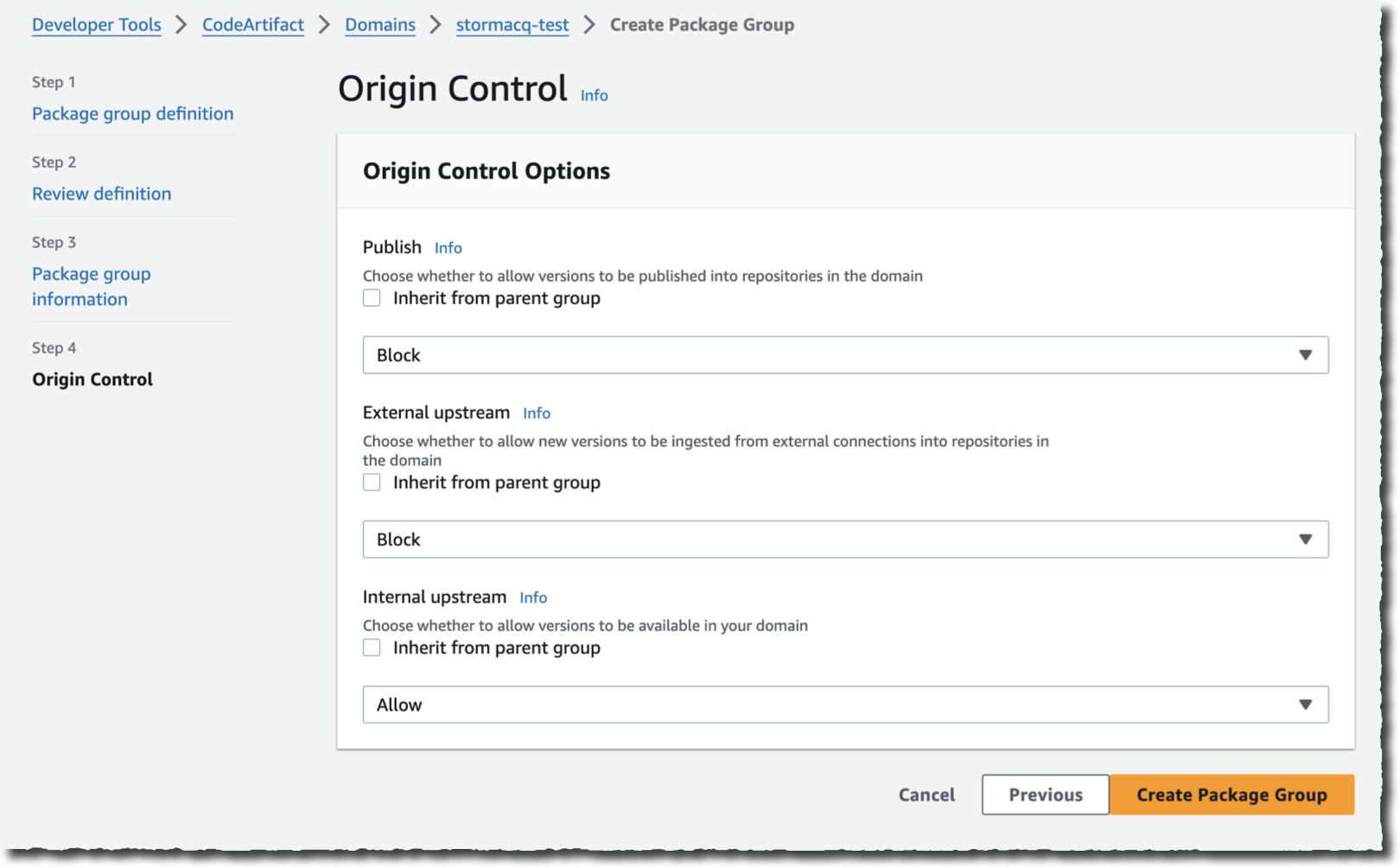

Then, I define the security parameters for my package group. In this example, I don’t want my organization’s developers to publish updates. I also don’t want CodeArtifact to fetch new versions from the external upstream repositories. I want to authorize only package updates from my internal staging directory.

I uncheck all Inherit from parent group boxes. I select Block for Publish and External upstream. I leave Allow on Internal upstream. Then, I select Create Package Group.

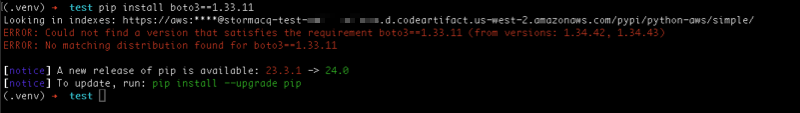

Once the package group is defined, developers are unable to install package versions other than the ones authorized in the python-aws repository. When I, as a developer, try to install another version of the boto3 package, I receive an error message. This is expected because the newer version of the boto3 package is not available in the upstream staging repo, and there is a block rule that prevents fetching packages or package updates from external upstream repositories.

Similarly, let’s imagine your administrator wants to protect your organization from dependency substitution attacks. All your internal Python package names start with your company name (mycompany). The administrator wants to block developers from accidentally downloading packages that start with mycompany from pypi.org.

The administrator creates a rule with the pattern /pypi//mycompany~ with publish=allow, external upstream=block, and internal upstream=block. With this configuration, internal developers or your CI/CD pipeline can publish those packages, but CodeArtifact will not import any packages from pypi.org that start with mycompany, such as mycompany.foo or mycompany.bar. This prevents dependency substitution attacks for these packages.
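For readers who prefer scripting over the console, the two rules from this walkthrough could be sketched with the AWS SDK for Python as follows. Treat this as a sketch under assumptions rather than a reference: the helper names and the domain name passed to `apply_rules` are hypothetical, and the `create_package_group` and `update_package_group_origin_configuration` calls, as well as the restriction mode names, reflect my understanding of the boto3 CodeArtifact client and should be checked against the current API reference.

```python
# Restriction modes as I understand them from the CodeArtifact API (assumption).
VALID_MODES = {"ALLOW", "BLOCK", "ALLOW_SPECIFIC_REPOSITORIES", "INHERIT"}


def origin_restrictions(publish, external_upstream, internal_upstream):
    """Build the origin restrictions mapping for one package group."""
    restrictions = {
        "PUBLISH": publish,
        "EXTERNAL_UPSTREAM": external_upstream,
        "INTERNAL_UPSTREAM": internal_upstream,
    }
    for kind, mode in restrictions.items():
        if mode not in VALID_MODES:
            raise ValueError(f"{kind}: unsupported mode {mode!r}")
    return restrictions


# The two rules described above, keyed by package group pattern.
RULES = {
    # Only vetted boto3 packages coming from the internal staging repository.
    "/pypi//boto3~": origin_restrictions(
        publish="BLOCK", external_upstream="BLOCK", internal_upstream="ALLOW"
    ),
    # Internal packages: publishing allowed, nothing imported from upstream.
    "/pypi//mycompany~": origin_restrictions(
        publish="ALLOW", external_upstream="BLOCK", internal_upstream="BLOCK"
    ),
}


def apply_rules(domain, rules=RULES):
    """Create each package group in `domain`, then set its origin controls."""
    import boto3  # imported lazily so the sketch loads without the AWS SDK

    client = boto3.client("codeartifact")
    for pattern, restrictions in rules.items():
        client.create_package_group(domain=domain, packageGroup=pattern)
        client.update_package_group_origin_configuration(
            domain=domain, packageGroup=pattern, restrictions=restrictions
        )
```

Calling `apply_rules("my-domain")` with valid AWS credentials would create both groups in a domain named my-domain, which is a placeholder here. Keeping the rules in a plain dictionary makes them easy to review and to move into an infrastructure-as-code tool later.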

Package groups are available in all AWS Regions where CodeArtifact is available, at no additional cost. It helps you to better control how packages and package updates land in your internal repositories. It helps to prevent various supply chain attacks, such as typosquatting or dependency confusion. It’s one additional configuration that you can add today into your infrastructure-as-code (IaC) tools to create and manage your CodeArtifact repositories.

Simpler Licensing with VMware vSphere Foundation and VMware Cloud Foundation 5.1.1

VMware has been on a journey to simplify its portfolio and transition from a perpetual to a subscription model to better serve customers with continuous innovation, faster time to value, and predictable investments. To that end, VMware recently introduced a simplified product portfolio that consists of two primary offerings: VMware Cloud Foundation, our flagship … Continued

The post Simpler Licensing with VMware vSphere Foundation and VMware Cloud Foundation 5.1.1 appeared first on VMware Support Insider.

Whois "geofeed" Data, (Thu, Mar 21st)

Attributing a particular IP address to a specific location is hard and often fails miserably. There are several difficulties that I have talked about before: Out-of-date whois data, data that is outright fake, or was never correct in the first place. Companies that have been allocated a larger address range are splitting it up into different geographic regions, but do not reflect this in their whois records.

Scans for Fortinet FortiOS and the CVE-2024-21762 vulnerability, (Wed, Mar 20th)

Late last week, an exploit surfaced on GitHub for CVE-2024-21762 [1]. This vulnerability affects Fortinet's FortiOS. A patch was released on February 8th, so owners of affected devices had over a month to patch [2]. A few days prior to the GitHub post, the exploit was published on the Chinese QQ messaging network [3].

Attacker Hunting Firewalls, (Tue, Mar 19th)

Firewalls and other perimeter devices are a huge target these days. Ivanti, Fortigate, Citrix, and others offer plenty of difficult-to-patch vulnerabilities for attackers to exploit. Ransomware actors and others are always on the lookout for new victims. However, being an access broker or ransomware peddler is challenging: The competition for freshly deployed vulnerable devices, or devices not patched for the latest and greatest vulnerability, is immense. Your success in the ransomware or access broker ecosystem depends on having a consistently updated list of potential victims.

AWS Weekly Roundup — Claude 3 Haiku in Amazon Bedrock, AWS CloudFormation optimizations, and more — March 18, 2024

Storage, storage, storage! Last week, we celebrated 18 years of innovation on Amazon Simple Storage Service (Amazon S3) at AWS Pi Day 2024. Amazon S3 mascot Buckets joined the celebrations and had a ton of fun! The 4-hour live stream was packed with puns, pie recipes powered by PartyRock, demos, code, and discussions about generative AI and Amazon S3.

In case you missed the live stream, you can watch the recording. We’ll also update the AWS Pi Day 2024 post on community.aws this week with show notes and session clips.

Last week’s launches

Here are some launches that got my attention:

Anthropic’s Claude 3 Haiku model is now available in Amazon Bedrock — Anthropic recently introduced the Claude 3 family of foundation models (FMs), comprising Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. Claude 3 Haiku, the fastest and most compact model in the family, is now available in Amazon Bedrock. Check out Channy’s post for more details. In addition, my colleague Mike shows how to get started with Haiku in Amazon Bedrock in his video on community.aws.

Up to 40 percent faster stack creation with AWS CloudFormation — AWS CloudFormation now creates stacks up to 40 percent faster and has a new event called CONFIGURATION_COMPLETE. With this event, CloudFormation begins parallel creation of dependent resources within a stack, speeding up the whole process. The new event also gives users more control to shortcut their stack creation process in scenarios where a resource consistency check is unnecessary. To learn more, read this AWS DevOps Blog post.

Amazon SageMaker Canvas extends its model registry integration — SageMaker Canvas has extended its model registry integration to include time series forecasting models and models fine-tuned through SageMaker JumpStart. Users can now register these models to the SageMaker Model Registry with just a click. This enhancement expands the model registry integration to all problem types supported in Canvas, such as regression/classification tabular models and CV/NLP models. It streamlines the deployment of machine learning (ML) models to production environments. Check the Developer Guide for more information.

For a full list of AWS announcements, be sure to keep an eye on the What’s New at AWS page.

Other AWS news

Here are some additional news items, open source projects, and Twitch shows that you might find interesting:

Build On Generative AI — Season 3 of your favorite weekly Twitch show about all things generative AI is in full swing! Streaming every Monday, 9:00 US PT, my colleagues Tiffany and Darko discuss different aspects of generative AI and invite guest speakers to demo their work. In today’s episode, guest Martyn Kilbryde showed how to build a JIRA Agent powered by Amazon Bedrock. Check out show notes and the full list of episodes on community.aws.

Amazon S3 Connector for PyTorch — The Amazon S3 Connector for PyTorch now lets PyTorch Lightning users save model checkpoints directly to Amazon S3. Saving PyTorch Lightning model checkpoints is up to 40 percent faster with the Amazon S3 Connector for PyTorch than writing to Amazon Elastic Compute Cloud (Amazon EC2) instance storage. You can now also save, load, and delete checkpoints directly from PyTorch Lightning training jobs to Amazon S3. Check out the open source project on GitHub.

AWS open source news and updates — My colleague Ricardo writes this weekly open source newsletter in which he highlights new open source projects, tools, and demos from the AWS Community.

Upcoming AWS events

Check your calendars and sign up for these AWS events:

AWS at NVIDIA GTC 2024 — The NVIDIA GTC 2024 developer conference is taking place this week (March 18–21) in San Jose, CA. If you’re around, visit AWS at booth #708 to explore generative AI demos and get inspired by AWS, AWS Partners, and customer experts on the latest offerings in generative AI, robotics, and advanced computing at the in-booth theatre. Check out the AWS sessions and request 1:1 meetings.

AWS Summits — It’s AWS Summit season again! The first one is Paris (April 3), followed by Amsterdam (April 9), Sydney (April 10–11), London (April 24), Berlin (May 15–16), and Seoul (May 16–17). AWS Summits are a series of free online and in-person events that bring the cloud computing community together to connect, collaborate, and learn about AWS.

AWS re:Inforce — Join us for AWS re:Inforce (June 10–12) in Philadelphia, PA. AWS re:Inforce is a learning conference focused on AWS security solutions, cloud security, compliance, and identity. Connect with the AWS teams that build the security tools and meet AWS customers to learn about their security journeys.

You can browse all upcoming in-person and virtual events.

That’s all for this week. Check back next Monday for another Weekly Roundup!

— Antje

This post is part of our Weekly Roundup series. Check back each week for a quick roundup of interesting news and announcements from AWS!

Gamified Learning: Using Capture the Flag Challenges to Supplement Cybersecurity Training [Guest Diary], (Sun, Mar 17th)

[This is a Guest Diary by Joshua Woodward, an ISC intern as part of the SANS.edu BACS program]

Just listening to a lecture is boring. Is there a better way?

I recently had the opportunity to engage in conversation with Jonathan, a lead analyst at Rapid7, where our discussion led to the internal technical training that he gives to their new analysts. He saw a notable ineffectiveness in the training sessions and was "dissatisfied with the new analysts' ability to remember and apply the knowledge when it was time to use it." The new analysts struggled to recall and apply the knowledge from the classroom training and often "had to be retaught live," resulting in inefficiencies and frustration. After reflecting on the root cause of this issue, Jonathan suspected that the traditional approach to learning, such as classroom lectures and workshops, was at the heart of the problem. These more passive learning approaches failed to engage the participants, leading to disinterest in the training and lower knowledge retention. Drawing inspiration from a method that was effective for him, Jonathan decided to adopt a more active and engaging approach: Capture the Flag (CTF) competitions.

Capture the Flag (CTF) Competitions

Capture the Flag competitions can offer exposure to a wide range of cybersecurity concepts or drill into a particular skill set through carefully crafted puzzles. CTFs foster an active learning environment by encouraging participants to apply their critical thinking skills and knowledge in a practical context. The gamified nature of CTFs leads to more excitement and motivation to participate, and active engagement and problem-solving allow a deeper understanding and retention of cybersecurity concepts.

Considerations

Traditional training excels at comprehensively covering topics in a structured manner, while CTFs offer a better environment to apply skills practically and can be built to mimic real-world scenarios. However, the nature of CTFs may not be suitable for teaching specific skills in a predetermined manner, as participants may creatively approach challenges from various angles. Participants will only learn what is needed to solve the challenge. Carefully crafted challenges can offset this disadvantage to some extent, but they may not fully address it. Despite the limitations, CTFs shine at getting participants to retain knowledge because they foster active learning. Participants are effectively teaching themselves in a hands-on manner that will help them remember and gain experience in the topic.

How puzzles are designed greatly influences the effectiveness of CTFs. Developing good challenges is a very time-consuming process. A senior analyst can teach a lecture in an ad hoc manner, but all CTFs require a large amount of preparation time. Jonathan mentioned that there are "a lot of competing requirements that are hard to balance" when designing a new challenge. The puzzle must be balanced: it has to give participants a good starting point and prompt so that they neither hit a knowledge blockade nor feel overwhelmed, but it still must be challenging and teach a specific skill set. Jonathan stated that when designing a challenge to target specific knowledge, a common trap is that it can easily start feeling like a trivia game rather than something fun, and "then you just have a quiz rather than a CTF." Well-designed challenges are the make-or-break linchpin for the successful implementation of CTFs in technical training.

Effectiveness

After introducing CTFs into his training plan, Jonathan noted that he witnessed a significant improvement in the analysts' ability to recall and apply the new knowledge. Being able to use the skills practically in an engaging and rewarding context seemed to give the participants a deeper understanding of the concepts and how to employ them when problem-solving.

I was able to interview an individual who had taken both types of training methods, and they noted that "CTF challenges were far more enjoyable and memorable" when compared to their original training. In terms of retaining and applying learning objectives, they found "CTF challenges to be significantly more effective." They were able to remember bits and pieces better from the CTF than from classroom training, which allowed them to have a starting place to research when solving situations in their work.

Jonathan comments that why the traditional classroom training failed is a discussion unto itself and merits further research. However, he did ultimately find that CTFs provided a workable alternative that helped fix the retention issue he was facing.

Integrating Capture the Flag challenges into internal training can give tangible improvements to participants' ability to retain and apply the knowledge being covered in training sessions. Combining CTFs with traditional training methods can help cover the drawbacks of either methodology at the cost of more preparation time.

—

* This article was written with the assistance of AI tools, including ChatGPT.

* Permission has been given by the interviewed sources to use their names and answers in this article. Full names have been redacted for privacy.

[1] https://www.sans.edu/cyber-security-programs/bachelors-degree/

———–

Guy Bruneau IPSS Inc.

My Handler Page

Twitter: GuyBruneau

gbruneau at isc dot sans dot edu

Obfuscated Hexadecimal Payload, (Sat, Mar 16th)

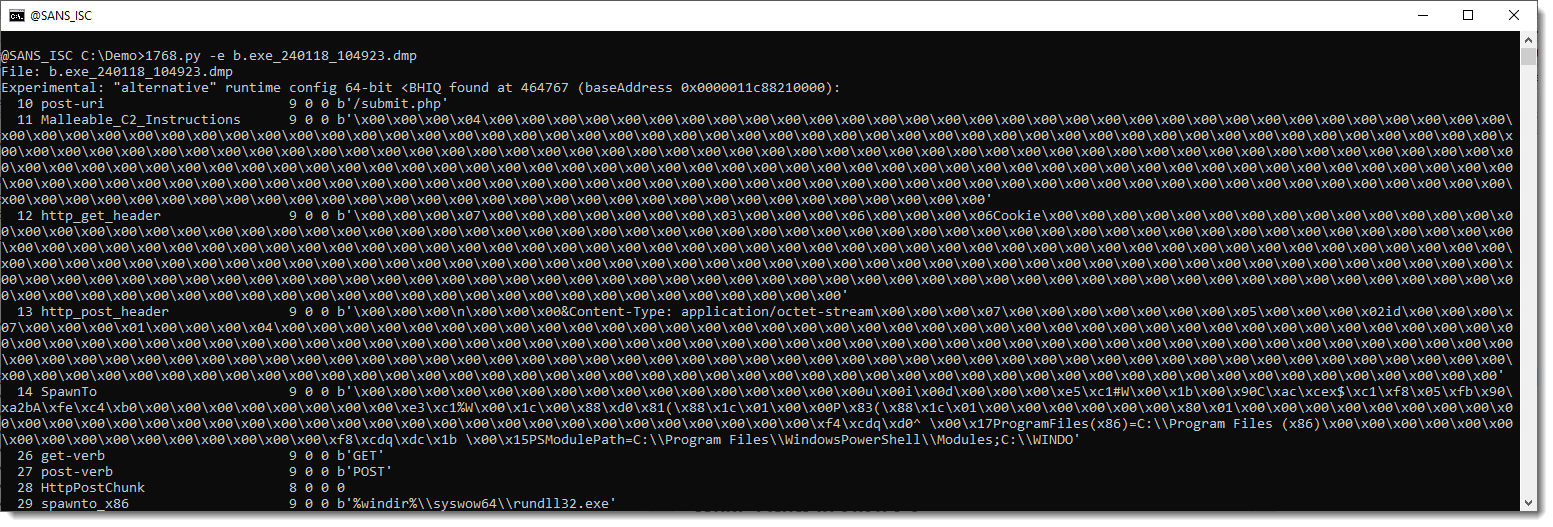

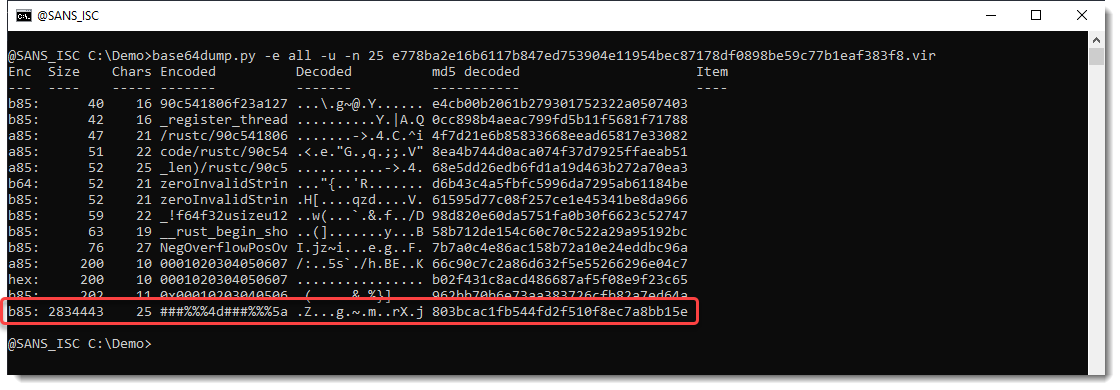

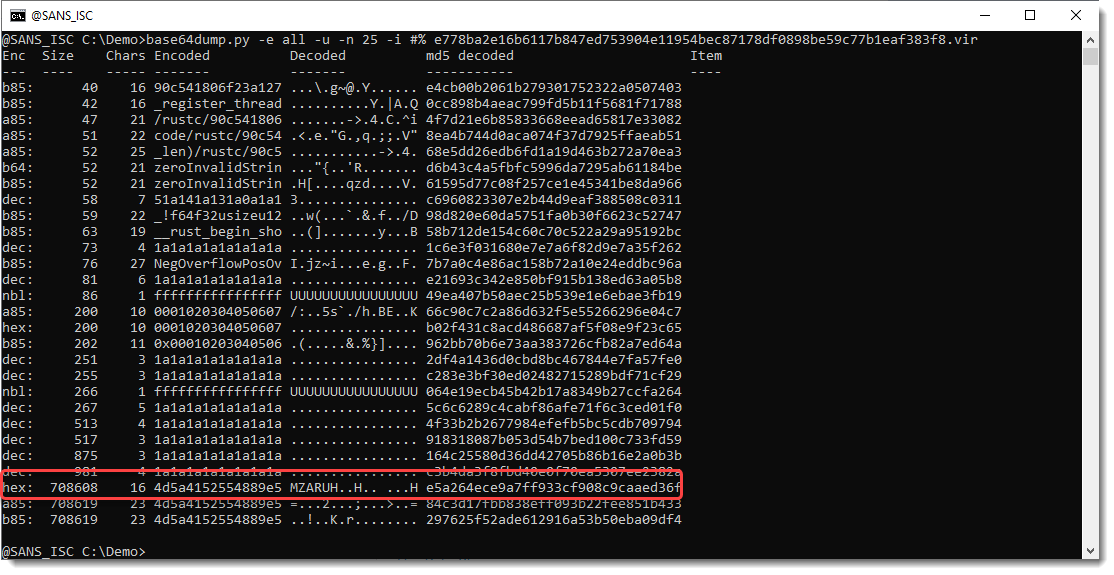

This PE file contains an obfuscated hexadecimal-encoded payload. When I analyze it with base64dump.py searching for all supported encodings, a very long payload is detected:

It's 2834443 characters long and matches base85 encoding (b85). This is likely a false positive, though: base85 uses 85 unique characters (as its name suggests), while this particular encoded content uses only 23 of them.
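The same kind of sanity check can be sketched in plain Python: count the distinct characters in a candidate string and see how much of it the most frequent ones account for. This is only an illustration, not how base64dump.py or strings.py work internally; the `charset_profile` helper and the short sample string are made up for the example.

```python
from collections import Counter


def charset_profile(text, top=2):
    """Return (unique character count, most frequent characters, their combined share)."""
    counts = Counter(text)
    most_common = counts.most_common(top)
    share = sum(n for _, n in most_common) / len(text) if text else 0.0
    return len(counts), [char for char, _ in most_common], share


if __name__ == "__main__":
    sample = "##%%4#%d%#5##a%%"  # hypothetical, far shorter than the real string
    unique, frequent, share = charset_profile(sample)
    print(f"{unique} unique characters; {frequent} make up {share:.0%} of the string")
```

A small alphabet dominated by a couple of characters is a hint that the string is something other than base85, for example hexadecimal with filler characters mixed in.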

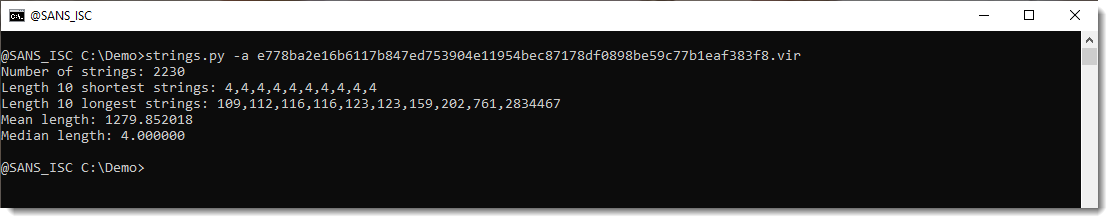

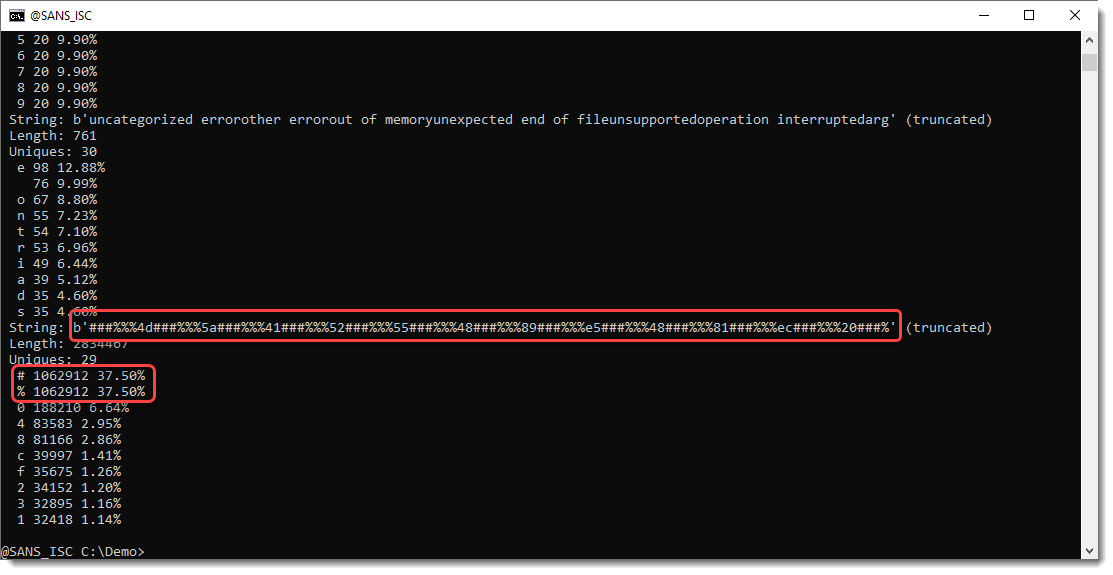

Analyzing the PE file with my strings.py tool (calculating statistics with option -a) reveals it does indeed contain one very long string:

Verbose mode (-V) gives statistics for the 10 longest strings. We see that 2 characters (# and %) appear very often in this string: together they make up more than 75% of it.

These 2 characters are likely inserted for obfuscation. Let's use base64dump.py and let it ignore these 2 characters (-i #%):

Now we have a hex encoded payload that decodes to a PE file (MZ), and most likely a Cobalt Strike beacon (MZARUH).
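The deobfuscation step itself is simple enough to sketch in a few lines of Python: drop the two filler characters, hex-decode what remains, and look at the first bytes. This is a minimal illustration and not a replacement for base64dump.py; the `decode_obfuscated_hex` helper and the short sample are hypothetical.

```python
def decode_obfuscated_hex(text, junk="#%"):
    """Remove the filler characters and hex-decode the remainder."""
    cleaned = text.translate({ord(char): None for char in junk})
    # bytes.fromhex() raises ValueError if anything other than hex digits remains.
    return bytes.fromhex(cleaned)


if __name__ == "__main__":
    sample = "4#d%5a#%41%%52#55#48"  # hypothetical, stands in for the long string
    payload = decode_obfuscated_hex(sample)
    print(payload[:6])
    print("looks like a PE file" if payload.startswith(b"MZ") else "not a PE file")
```

Checking for the MZ magic only tells you the result starts like a PE file; the decoded payload still needs a proper analysis.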

Didier Stevens

Senior handler

blog.DidierStevens.com
