I've been working on comparing data from different DShield [1] honeypots to understand differences when the honeypots reside on different networks. One point of comparison is malware submitted to the honeypots. During a review of the summarized data, I noticed that one honeypot was an outlier in terms of malware captured.

API Rug Pull – The NIST NVD Database and API (Part 4 of 3), (Wed, Apr 24th)

Struts "devmode": Still a problem ten years later?, (Tue, Apr 23rd)

Like many similar frameworks and languages, Struts 2 has a "developer mode" (devmode) offering additional features to aid debugging. Error messages will be more verbose, and the devmode includes an OGNL console. OGNL, the Object-Graph Navigation Language, can interact with Java, but in the end, executing OGNL results in arbitrary code execution. This OGNL console resembles a "web shell" built into devmode.

No matter the language, and the exact features it provides, enabling a "devmode", "debug mode" or similar feature in production is never a good idea. But it probably surprises no one that it still shows up in publicly exposed sites every so often. Attackers know this as well, and are "playing" with it.

To take advantage of devmode, an attacker would request a URL like:

/[anything].action?debug=command&expression=[OGNL Expression]

I noticed today that one URL in this format is showing up in our "first seen" URLs:

/devmode.action?debug=command&expression= (#_memberAccess["allowStaticMethodAccess"]=true, #foo=new java.lang.Boolean("false") , #context["xwork.MethodAccessor.denyMethodExecution"]=#foo, @org.apache.commons.io.IOUtils

For readability, I URL decoded and added spaces. This URL is likely just a scan for vulnerable systems. I ran a quick database query to see if we have other similar URLs recently. Indeed we had 2,443 distinct URLs in our database. Most of them follow this pattern:

debug=command&expression=(43867*40719)

Again, a simple check is performed to see if a system is vulnerable. Scans for this issue are sporadic, but we have had some notable increases recently. An old issue like this often comes back as people start to forget about it, so take this as a reminder to double-check your systems.
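For anyone who wants to look for these probes in their own web logs, here is a minimal Python sketch. It assumes the log yields request URLs as strings; the function name `devmode_probe_expression` is hypothetical and not part of any DShield tooling. The product computed at the end is presumably what a scanner would look for in the response to confirm that the expression was evaluated.

```python
from typing import Optional
from urllib.parse import urlsplit, parse_qs


def devmode_probe_expression(url: str) -> Optional[str]:
    """Return the 'expression' value if the URL matches the devmode
    probe format (*.action?debug=command&expression=...), else None."""
    parts = urlsplit(url)
    if not parts.path.endswith(".action"):
        return None
    query = parse_qs(parts.query, keep_blank_values=True)
    if query.get("debug", [""])[0] != "command":
        return None
    expressions = query.get("expression")
    return expressions[0] if expressions else None


probe = "/devmode.action?debug=command&expression=(43867*40719)"
print(devmode_probe_expression(probe))
# The arithmetic a vulnerable system would evaluate for this probe.
print(43867 * 40719)
```

A match only tells you that someone probed for devmode, not that the system is vulnerable.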

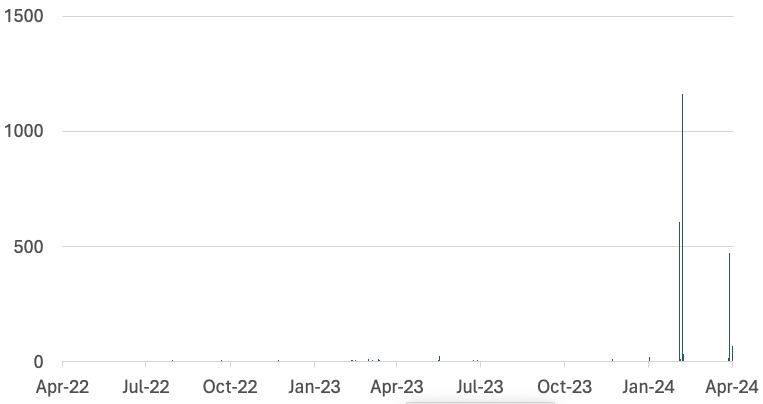

I am plotting the last two years below, and you see big spikes on February 24th, 27th, and April 19th this year.

—

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu


(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

Guardrails for Amazon Bedrock now available with new safety filters and privacy controls

Today, I am happy to announce the general availability of Guardrails for Amazon Bedrock, first released in preview at re:Invent 2023. With Guardrails for Amazon Bedrock, you can implement safeguards in your generative artificial intelligence (generative AI) applications that are customized to your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple foundation models (FMs), improving end-user experiences and standardizing safety controls across generative AI applications. You can use Guardrails for Amazon Bedrock with all large language models (LLMs) in Amazon Bedrock, including fine-tuned models.

Guardrails for Bedrock offers industry-leading safety protection on top of the native capabilities of FMs, helping customers block as much as 85% more harmful content than protection natively provided by some foundation models on Amazon Bedrock today. Guardrails for Amazon Bedrock is the only responsible AI capability offered by top cloud providers that enables customers to build and customize safety and privacy protections for their generative AI applications in a single solution, and it works with all large language models (LLMs) in Amazon Bedrock, as well as fine-tuned models.

Aha! is a software company that helps more than 1 million people bring their product strategy to life. “Our customers depend on us every day to set goals, collect customer feedback, and create visual roadmaps,” said Dr. Chris Waters, co-founder and Chief Technology Officer at Aha!. “That is why we use Amazon Bedrock to power many of our generative AI capabilities. Amazon Bedrock provides responsible AI features, which enable us to have full control over our information through its data protection and privacy policies, and block harmful content through Guardrails for Bedrock. We just built on it to help product managers discover insights by analyzing feedback submitted by their customers. This is just the beginning. We will continue to build on advanced AWS technology to help product development teams everywhere prioritize what to build next with confidence.”

In the preview post, Antje showed you how to use guardrails to configure thresholds to filter content across harmful categories and define a set of topics that need to be avoided in the context of your application. The Content filters feature now has two additional safety categories: Misconduct for detecting criminal activities and Prompt Attack for detecting prompt injection and jailbreak attempts. We also added important new features, including sensitive information filters to detect and redact personally identifiable information (PII) and word filters to block inputs containing profane and custom words (for example, harmful words, competitor names, and products).

Guardrails for Amazon Bedrock sits in between the application and the model. Guardrails automatically evaluates everything going into the model from the application and coming out of the model to the application to detect and help prevent content that falls into restricted categories.

You can recap the steps in the preview release blog to learn how to configure Denied topics and Content filters. Let me show you how the new features work.

New features

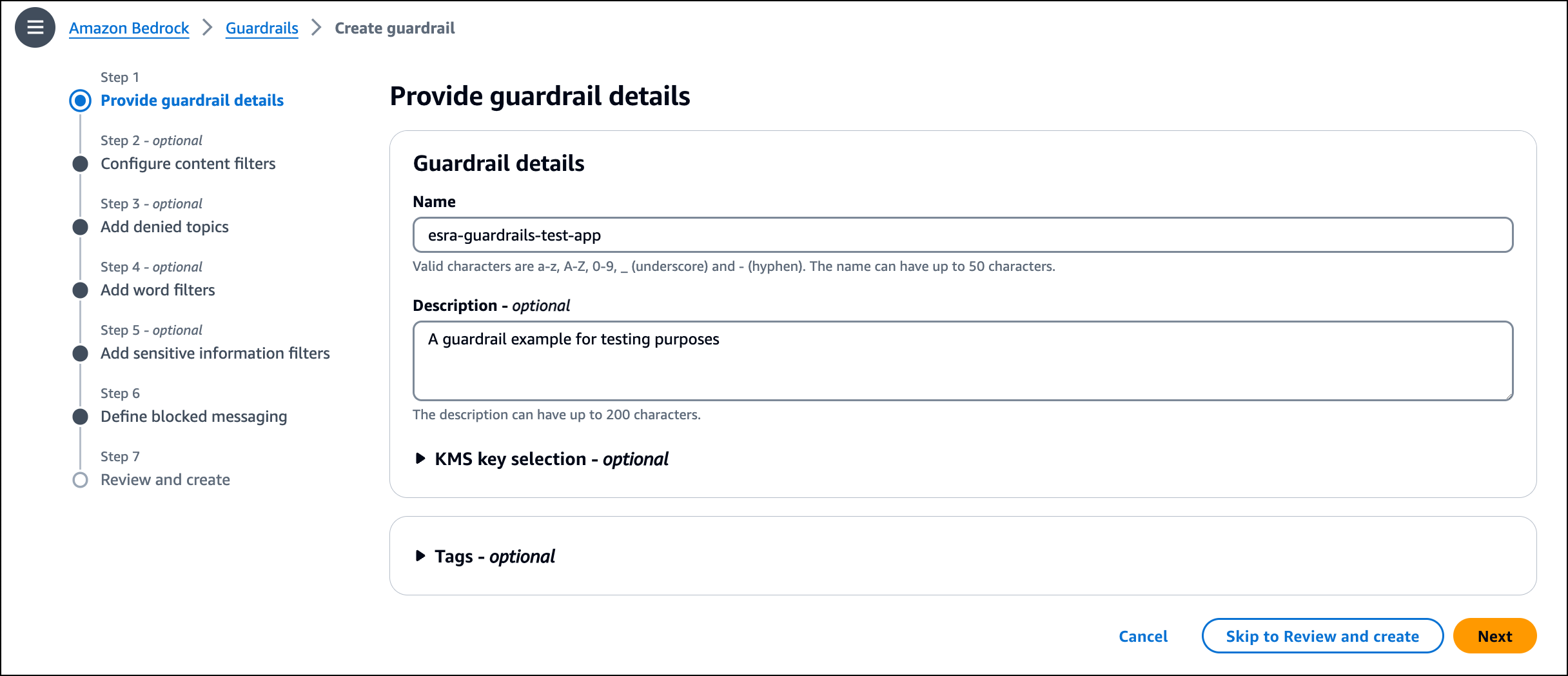

To start using Guardrails for Amazon Bedrock, I go to the AWS Management Console for Amazon Bedrock, where I can create guardrails and configure the new capabilities. In the navigation pane in the Amazon Bedrock console, I choose Guardrails, and then I choose Create guardrail.

I enter the guardrail Name and Description. I choose Next to move to the Add sensitive information filters step.

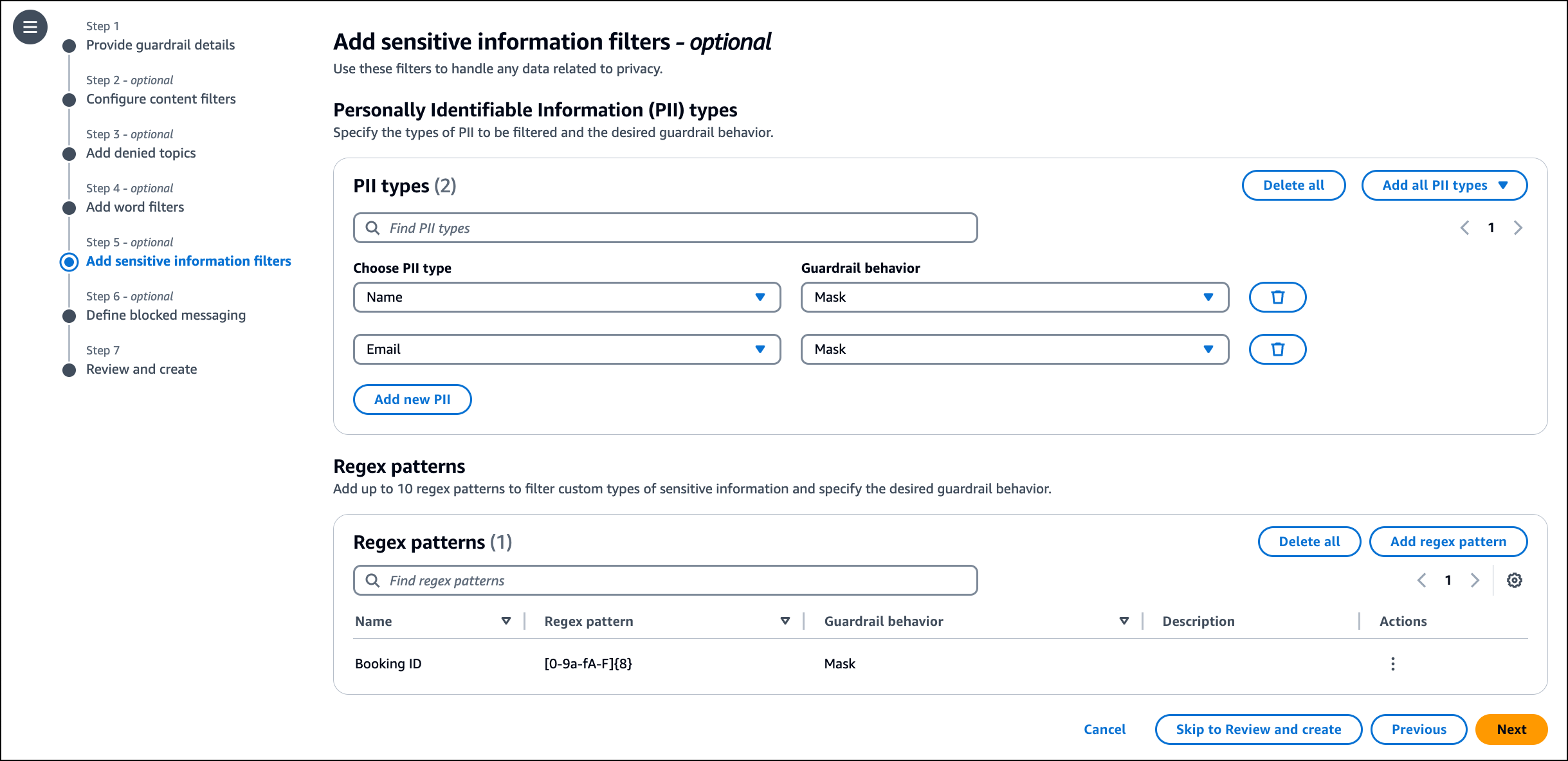

I use Sensitive information filters to detect sensitive and private information in user inputs and FM outputs. Based on the use cases, I can select a set of entities to be either blocked in inputs (for example, a FAQ-based chatbot that doesn’t require user-specific information) or redacted in outputs (for example, conversation summarization based on chat transcripts). The sensitive information filter supports a set of predefined PII types. I can also define custom regex-based entities specific to my use case and needs.

I add two PII types (Name, Email) from the list and add a regular expression pattern using Booking ID as Name and [0-9a-fA-F]{8} as the Regex pattern.
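Before adding a custom regex entity, it can help to try the pattern locally. The following Python sketch applies the same Booking ID pattern with the standard `re` module. It only illustrates what the pattern matches and is not how Guardrails processes text internally; the `{Booking ID}` placeholder and the function name are assumptions made for this example.

```python
import re

# Same pattern as entered in the console: eight hexadecimal characters.
BOOKING_ID = re.compile(r"[0-9a-fA-F]{8}")


def mask_booking_ids(text: str, placeholder: str = "{Booking ID}") -> str:
    """Replace every match of the Booking ID pattern with a placeholder."""
    return BOOKING_ID.sub(placeholder, text)


print(mask_booking_ids("Yes, my booking ID is 550e8408."))
# The pattern has no anchors, so it also matches inside longer hex strings.
print(mask_booking_ids("deadbeefcafe"))
```

If partial matches inside longer strings are not wanted, word boundaries or anchors could be added to the pattern.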

I choose Next and enter custom messages that will be displayed if my guardrail blocks the input or the model response in the Define blocked messaging step. I review the configuration at the last step and choose Create guardrail.

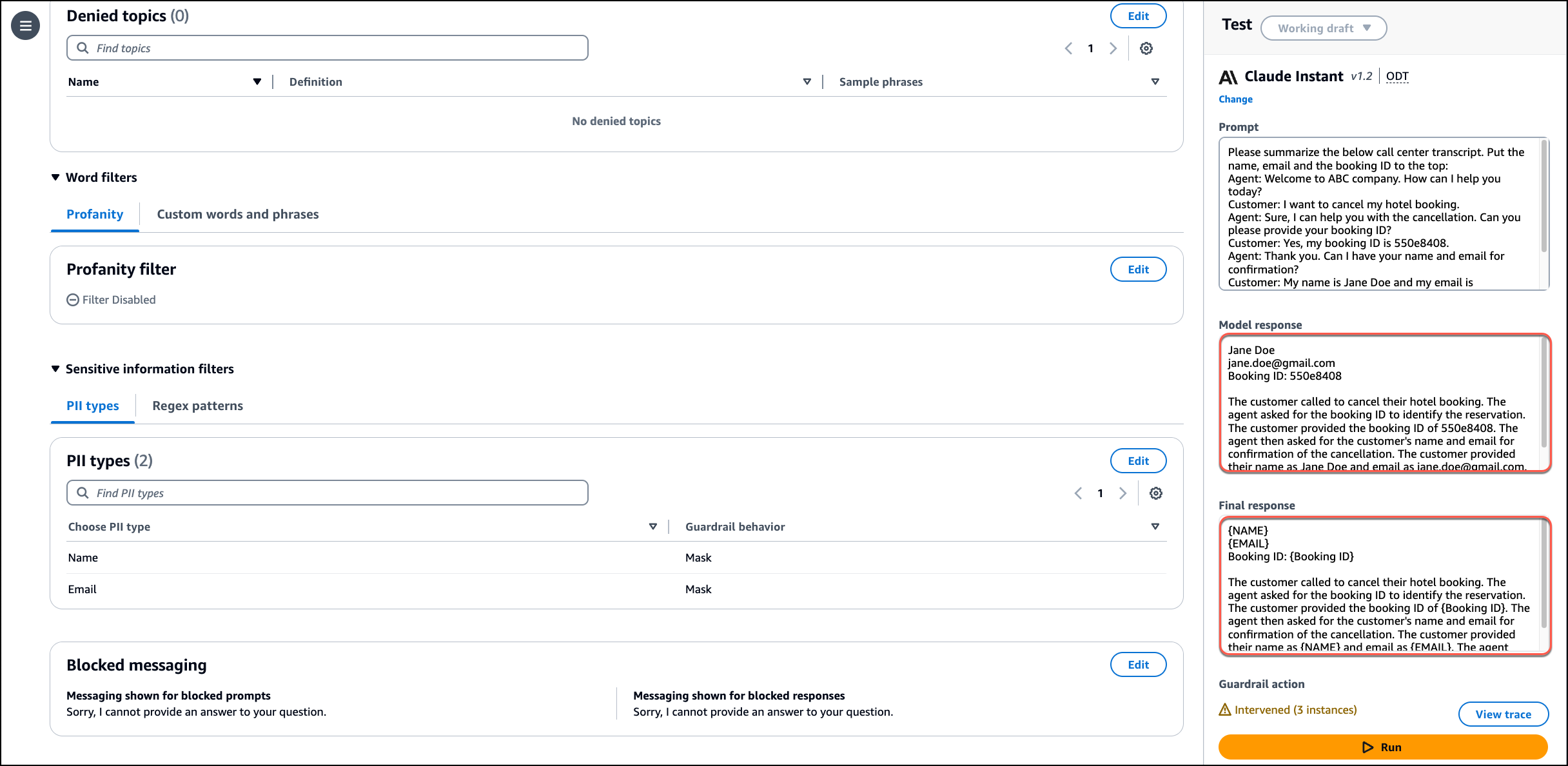

I navigate to the Guardrails Overview page and choose the Anthropic Claude Instant 1.2 model using the Test section. I enter the following call center transcript in the Prompt field and choose Run.

Please summarize the below call center transcript. Put the name, email and the booking ID to the top:

Agent: Welcome to ABC company. How can I help you today?

Customer: I want to cancel my hotel booking.

Agent: Sure, I can help you with the cancellation. Can you please provide your booking ID?

Customer: Yes, my booking ID is 550e8408.

Agent: Thank you. Can I have your name and email for confirmation?

Customer: My name is Jane Doe and my email is jane.doe@gmail.com

Agent: Thank you for confirming. I will go ahead and cancel your reservation.

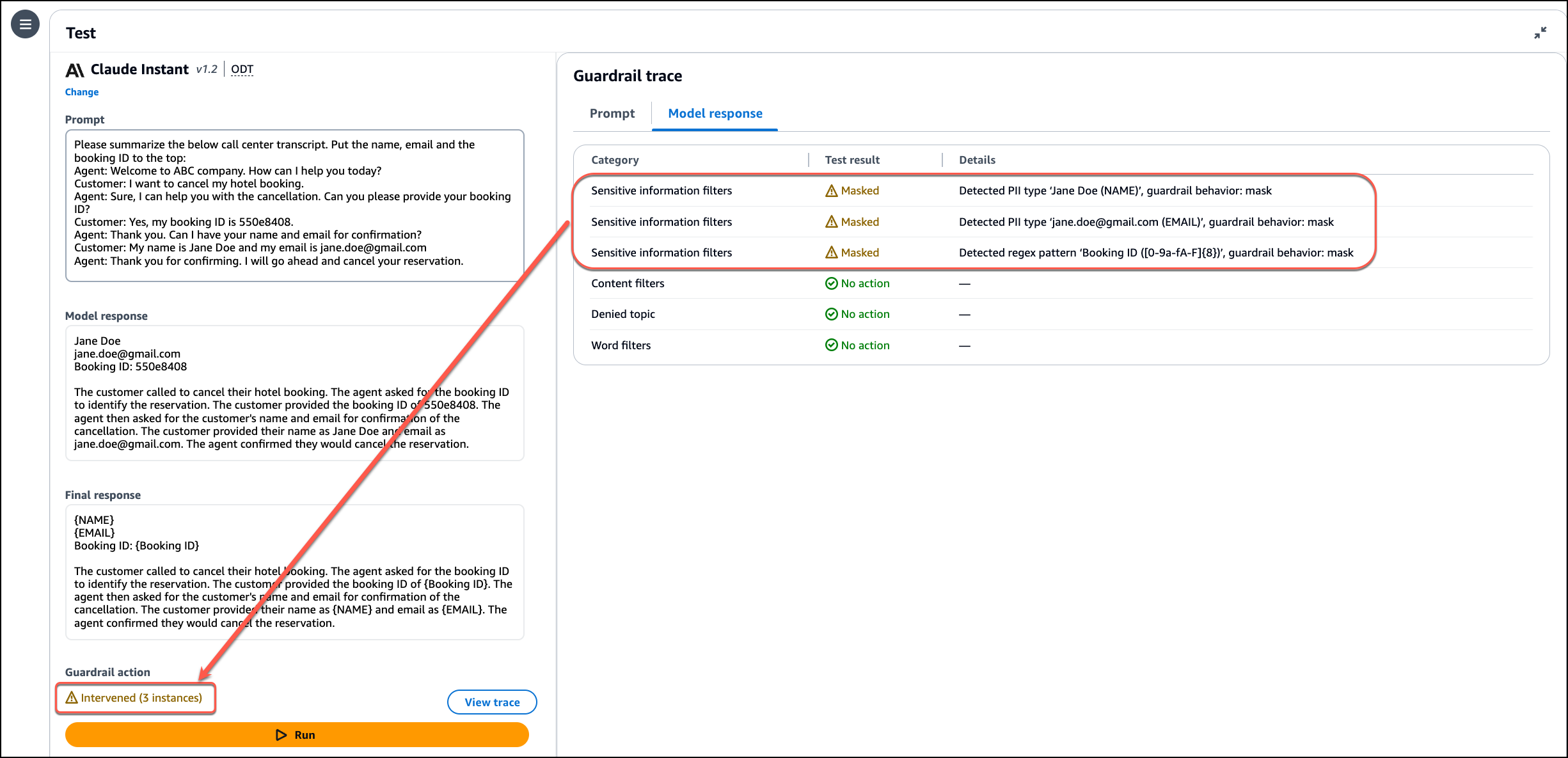

Guardrail action shows there are three instances where the guardrails came into effect. I use View trace to check the details. I notice that the guardrail detected the Name, Email and Booking ID and masked them in the final response.

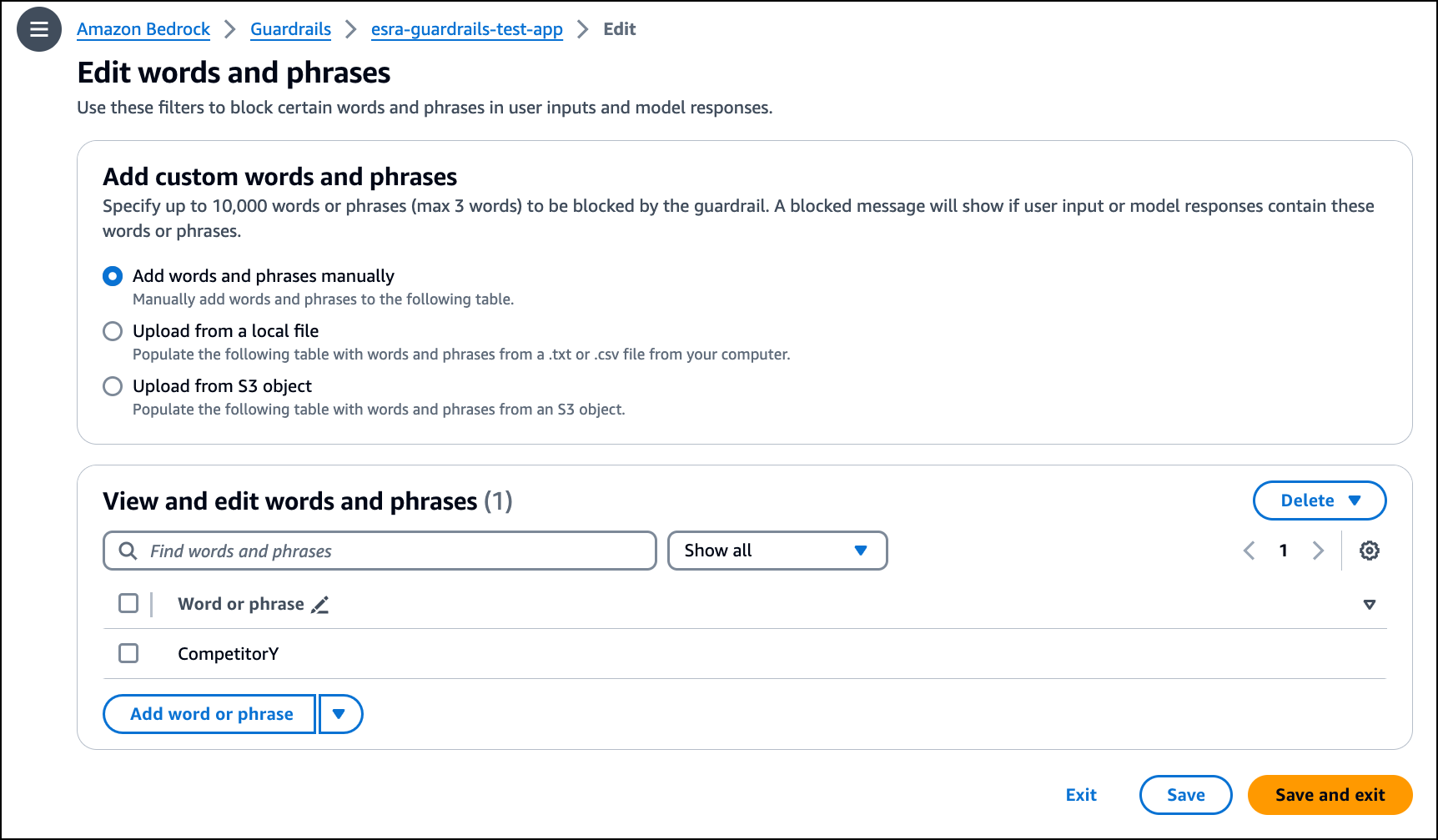

I use Word filters to block inputs containing profane and custom words (for example, competitor names or offensive words). I check the Filter profanity box. The profanity list of words is based on the global definition of profanity. Additionally, I can specify up to 10,000 phrases (with a maximum of three words per phrase) to be blocked by the guardrail. A blocked message will show if my input or model response contains these words or phrases.
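When preparing a longer list of custom phrases, a quick local check against the limits mentioned above (10,000 phrases, three words per phrase) can save a failed upload. This is a minimal sketch; the function name is hypothetical, and splitting on whitespace is an assumption about how words are counted.

```python
MAX_PHRASES = 10_000
MAX_WORDS_PER_PHRASE = 3


def invalid_phrases(phrases):
    """Return the phrases that exceed the per-phrase word limit.

    Raises ValueError if the list itself is longer than the phrase limit.
    Words are counted by splitting on whitespace (an assumption).
    """
    if len(phrases) > MAX_PHRASES:
        raise ValueError(f"{len(phrases)} phrases exceed the limit of {MAX_PHRASES}")
    return [p for p in phrases if len(p.split()) > MAX_WORDS_PER_PHRASE]


print(invalid_phrases(["CompetitorY", "buy from CompetitorY", "buy from CompetitorY today"]))
```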

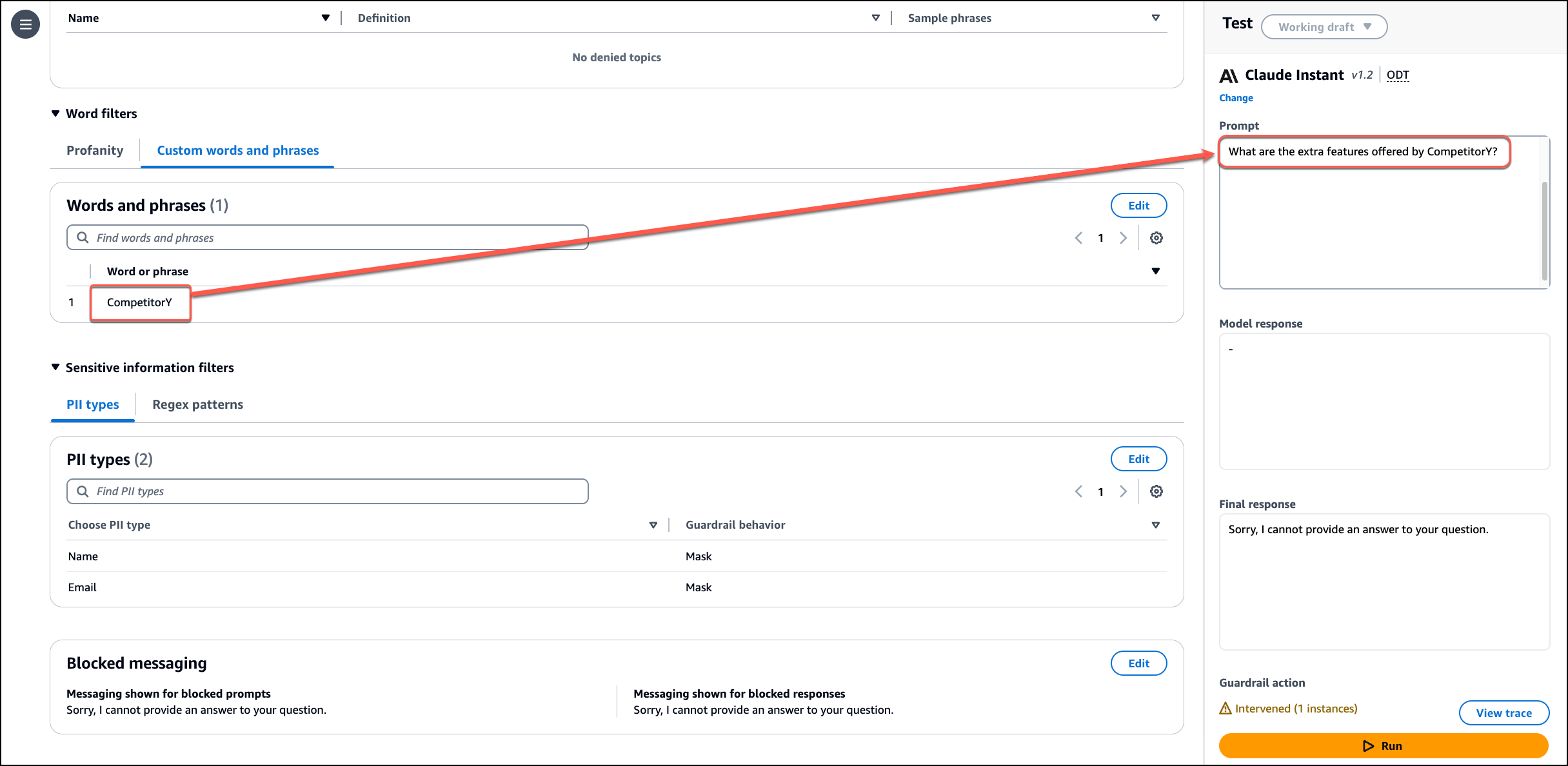

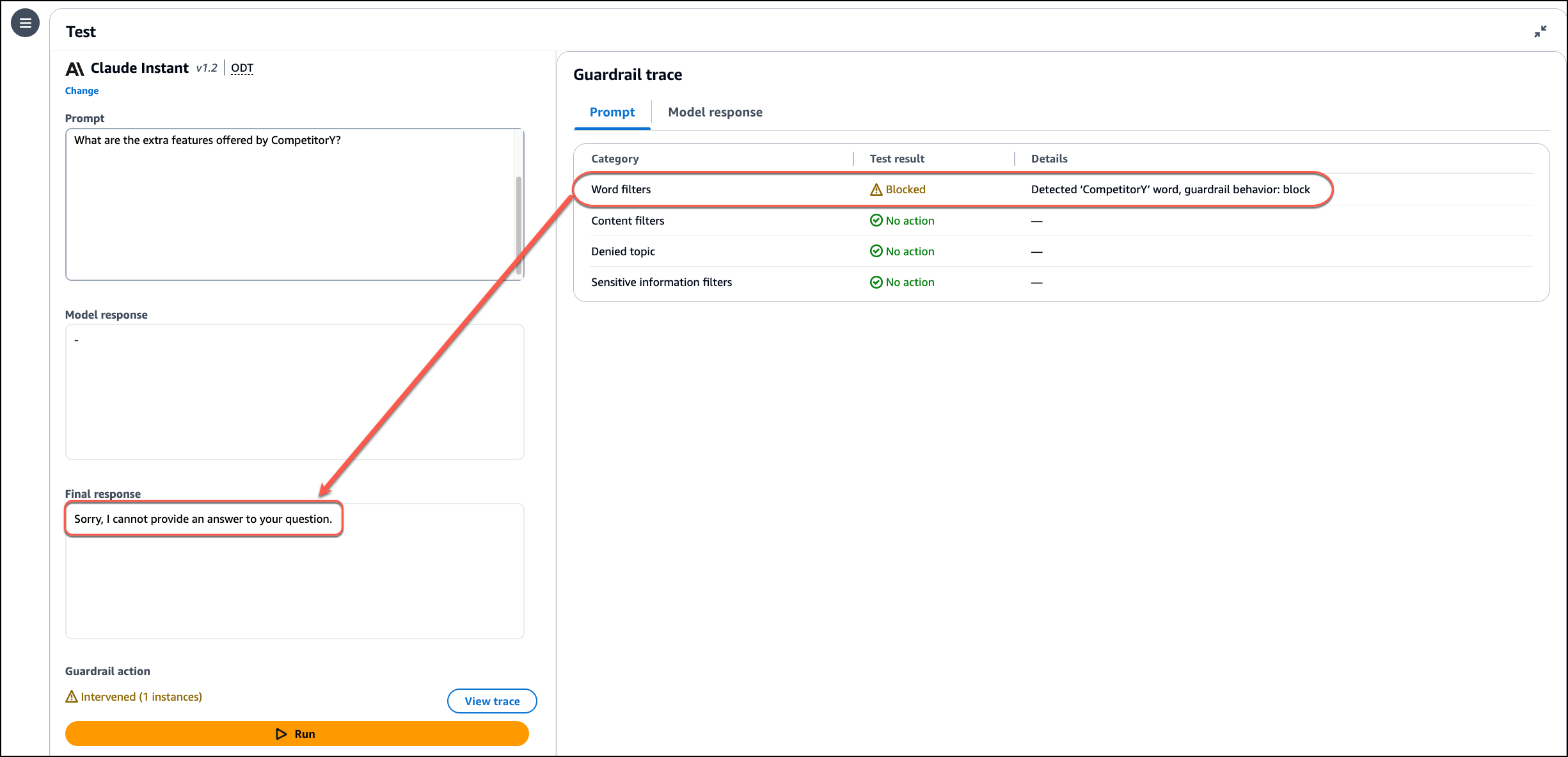

Now, I choose Custom words and phrases under Word filters and choose Edit. I use Add words and phrases manually to add a custom word CompetitorY. Alternatively, I can use Upload from a local file or Upload from S3 object if I need to upload a list of phrases. I choose Save and exit to return to my guardrail page.

I enter a prompt containing information about a fictional company and its competitor and add the question What are the extra features offered by CompetitorY?. I choose Run.

I use View trace to check the details. I notice that the guardrail intervened according to the policies I configured.

Now available

Guardrails for Amazon Bedrock is now available in US East (N. Virginia) and US West (Oregon) Regions.

For pricing information, visit the Amazon Bedrock pricing page.

To get started with this feature, visit the Guardrails for Amazon Bedrock web page.

For deep-dive technical content and to learn how our Builder communities are using Amazon Bedrock in their solutions, visit our community.aws website.

Agents for Amazon Bedrock: Introducing a simplified creation and configuration experience

With Agents for Amazon Bedrock, applications can use generative artificial intelligence (generative AI) to run tasks across multiple systems and data sources. Starting today, these new capabilities streamline the creation and management of agents:

Quick agent creation – You can now quickly create an agent and optionally add instructions and action groups later, providing flexibility and agility for your development process.

Agent builder – All agent configurations can be operated in the new agent builder section of the console.

Simplified configuration – Action groups can use a simplified schema that just lists functions and parameters without having to provide an API schema.

Return of control – You can skip using an AWS Lambda function and return control to the application invoking the agent. In this way, the application can directly integrate with systems outside AWS or call internal endpoints hosted in any Amazon Virtual Private Cloud (Amazon VPC) without the need to integrate the required networking and security configurations with a Lambda function.

Infrastructure as code – You can use AWS CloudFormation to deploy and manage agents with the new simplified configuration, ensuring consistency and reproducibility across environments for your generative AI applications.

Let’s see how these enhancements work in practice.

Creating an agent using the new simplified console

To test the new experience, I want to build an agent that can help me reply to an email containing customer feedback. I can use generative AI, but a single invocation of a foundation model (FM) is not enough because I need to interact with other systems. To do that, I use an agent.

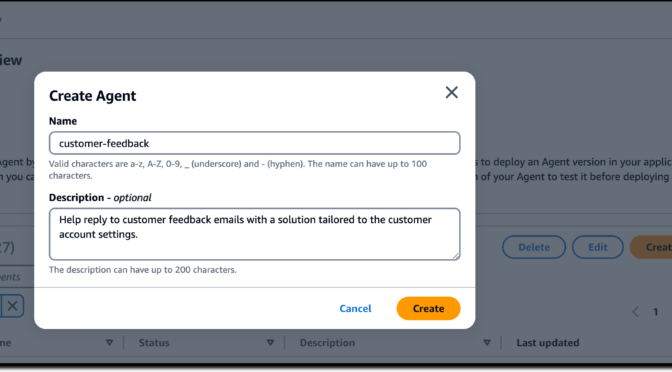

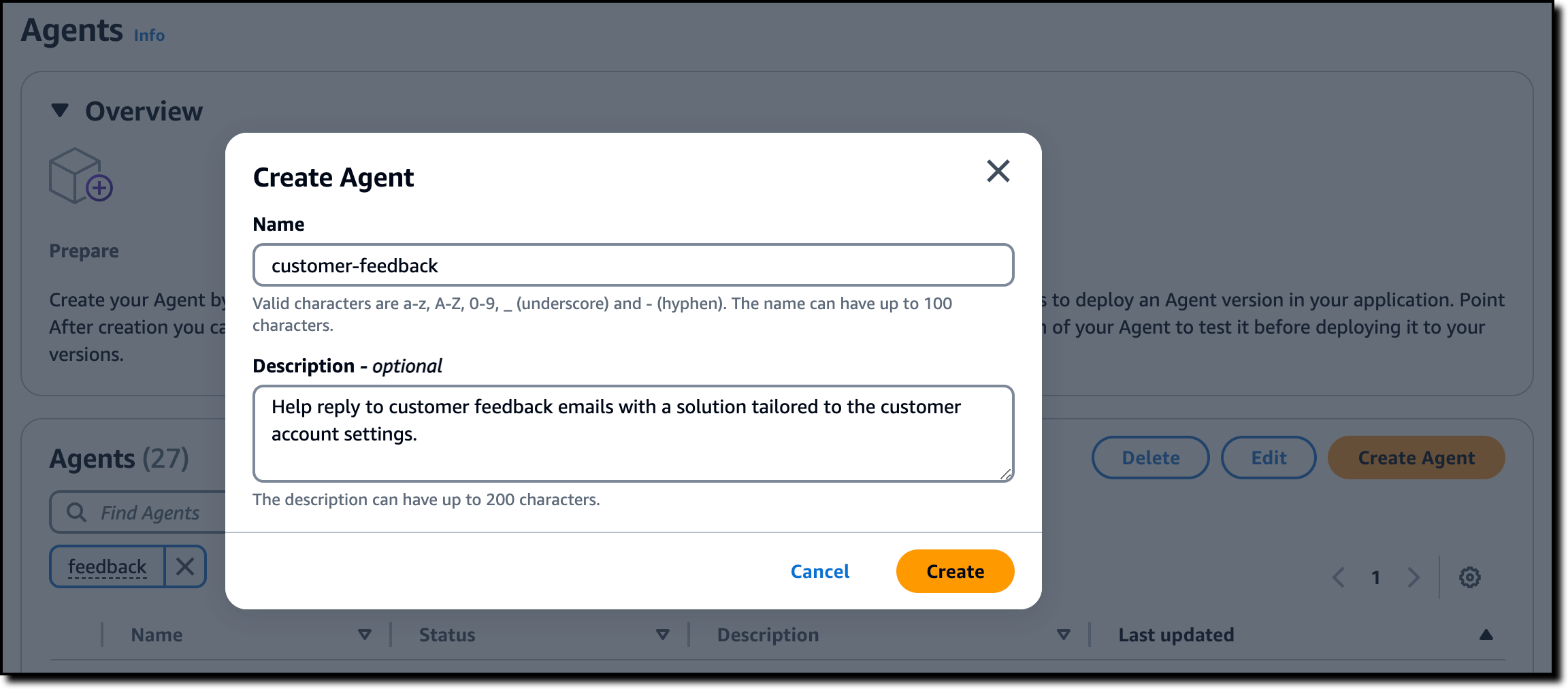

In the Amazon Bedrock console, I choose Agents from the navigation pane and then Create Agent. I enter a name for the agent (customer-feedback) and a description. Using the new interface, I proceed and create the agent without providing additional information at this stage.

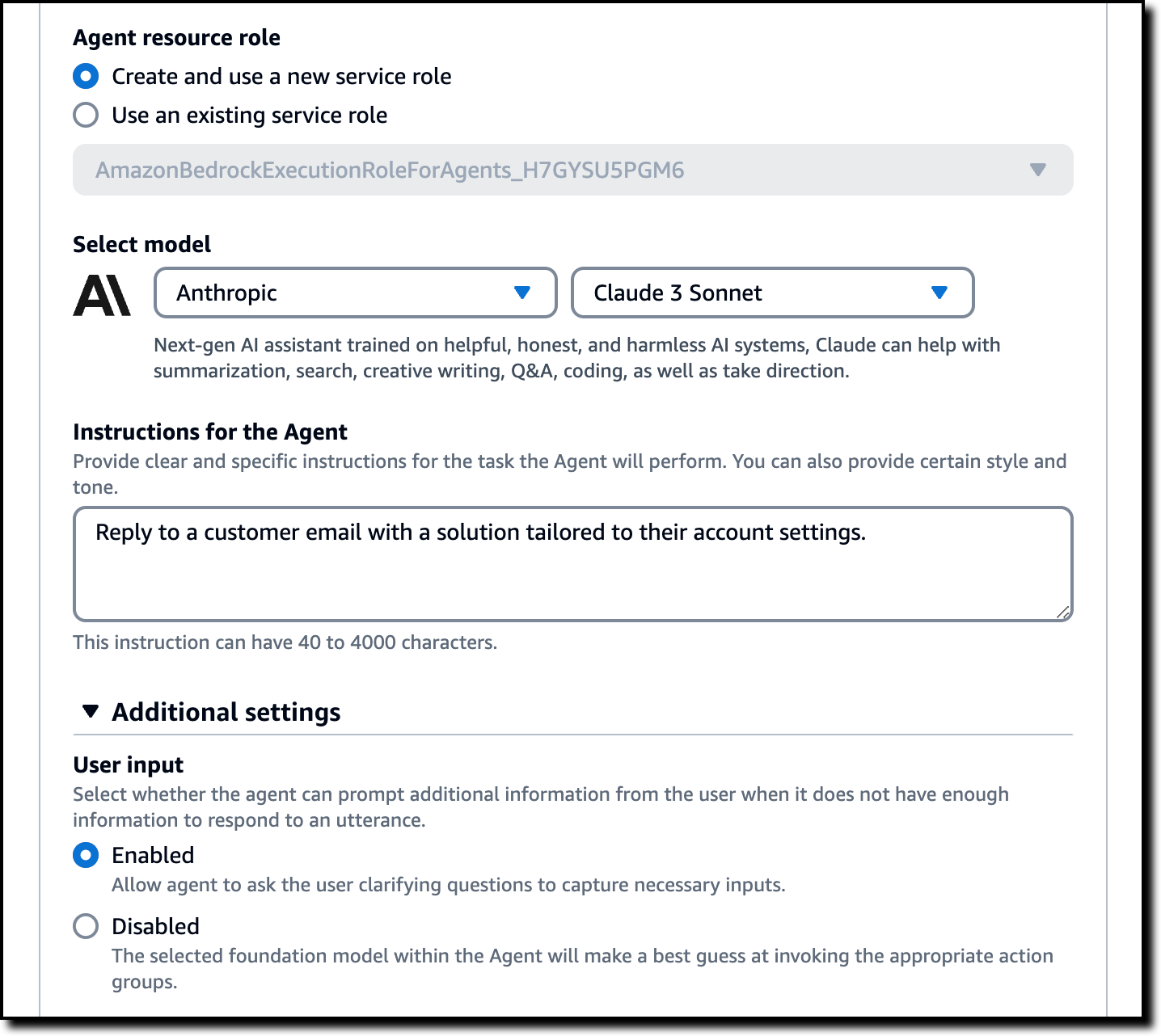

I am now presented with the Agent builder, the place where I can access and edit the overall configuration of an agent. In the Agent resource role, I leave the default setting as Create and use a new service role so that the AWS Identity and Access Management (IAM) role assumed by the agent is automatically created for me. For the model, I select Anthropic and Claude 3 Sonnet.

In Instructions for the Agent, I provide clear and specific instructions for the task the agent has to perform. Here, I can also specify the style and tone I want the agent to use when replying. For my use case, I enter:

Help reply to customer feedback emails with a solution tailored to the customer account settings.

In Additional settings, I select Enabled for User input so that the agent can ask for additional details when it does not have enough information to respond. Then, I choose Save to update the configuration of the agent.

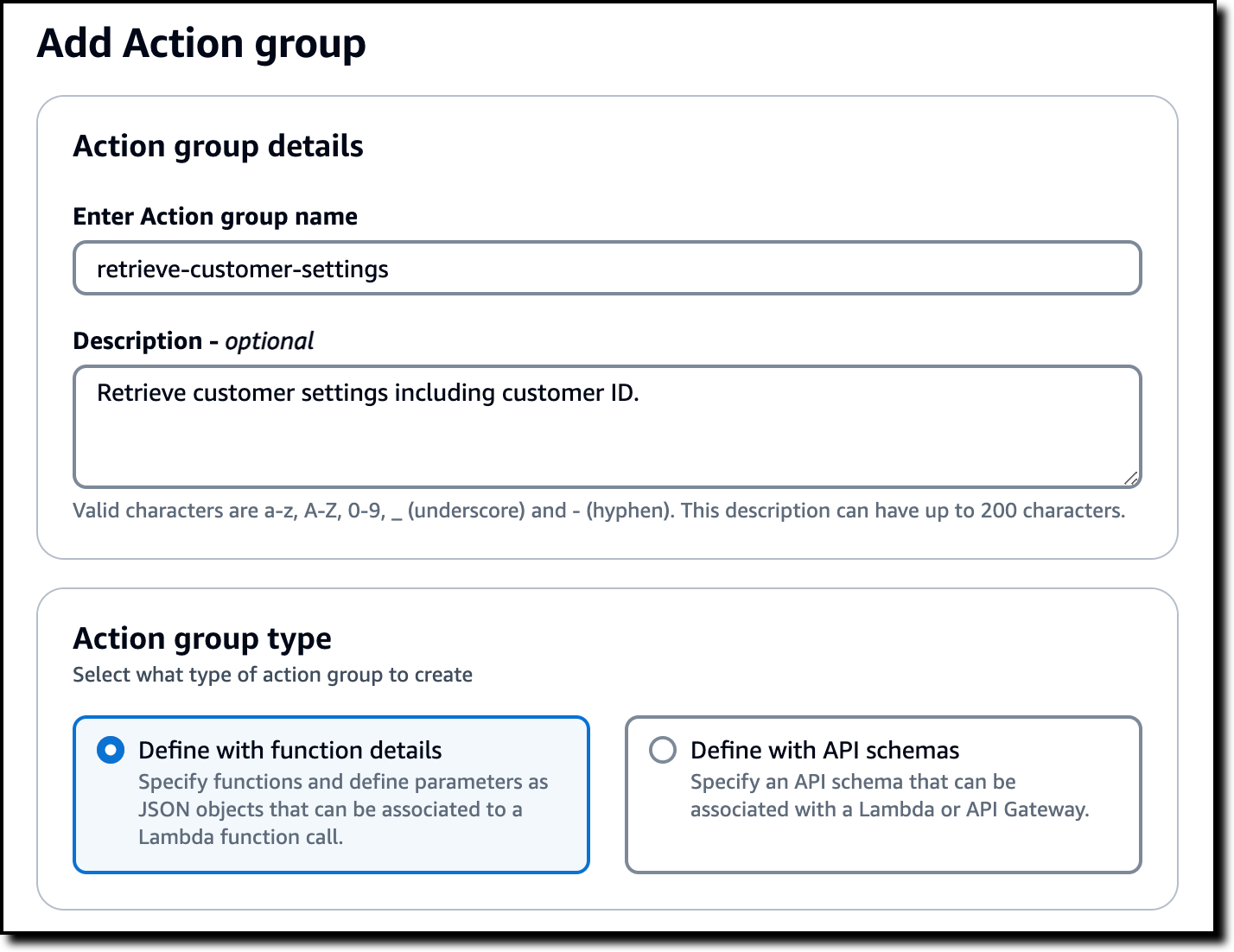

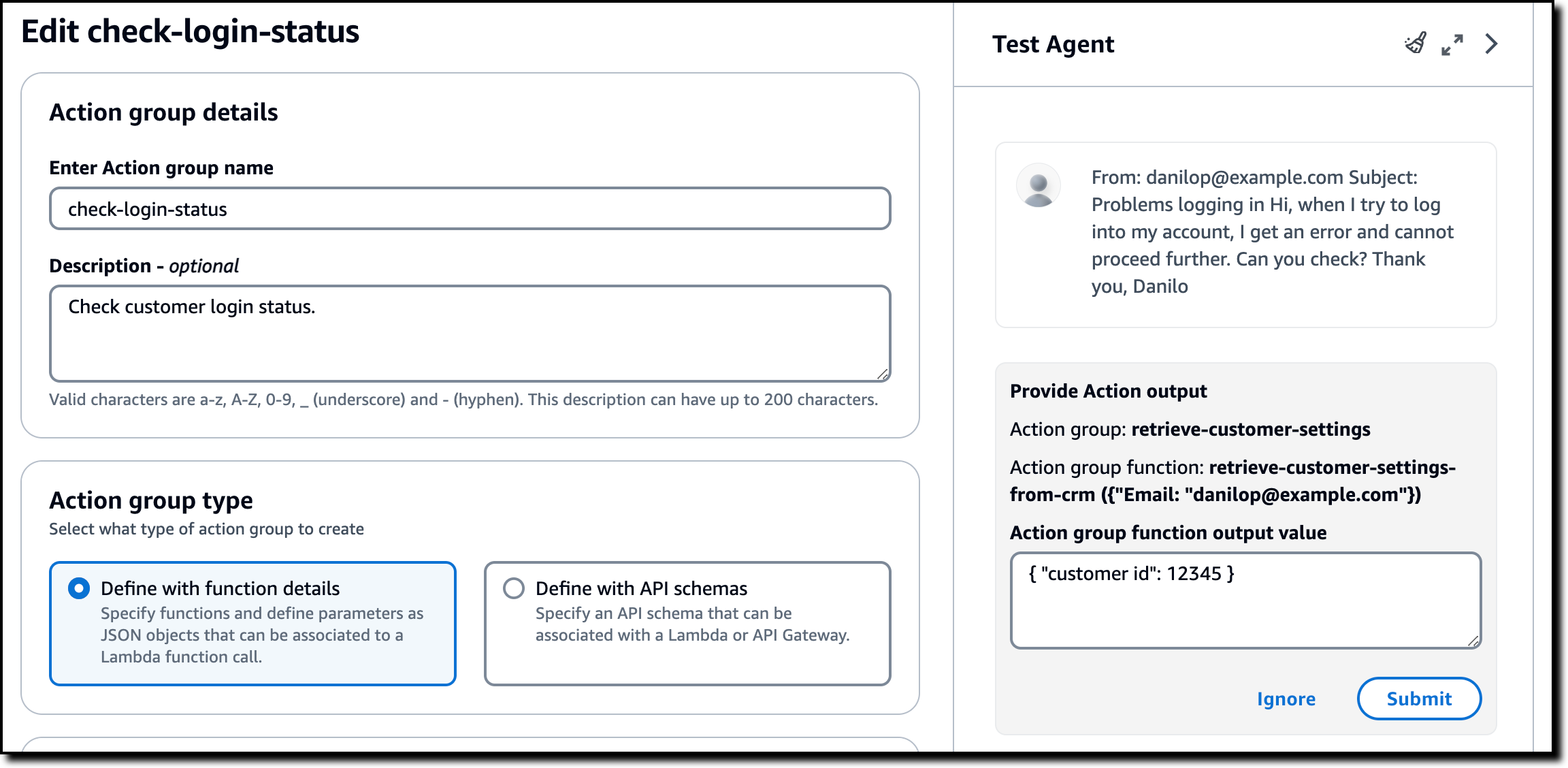

I now choose Add in the Action groups section. Action groups are the way agents can interact with external systems to gather more information or perform actions. I enter a name (retrieve-customer-settings) and a description for the action group:

Retrieve customer settings including customer ID.

The description is optional but, when provided, is passed to the model to help choose when to use this action group.

In Action group type, I select Define with function details so that I only need to specify functions and their parameters. The other option here (Define with API schemas) corresponds to the previous way of configuring action groups using an API schema.

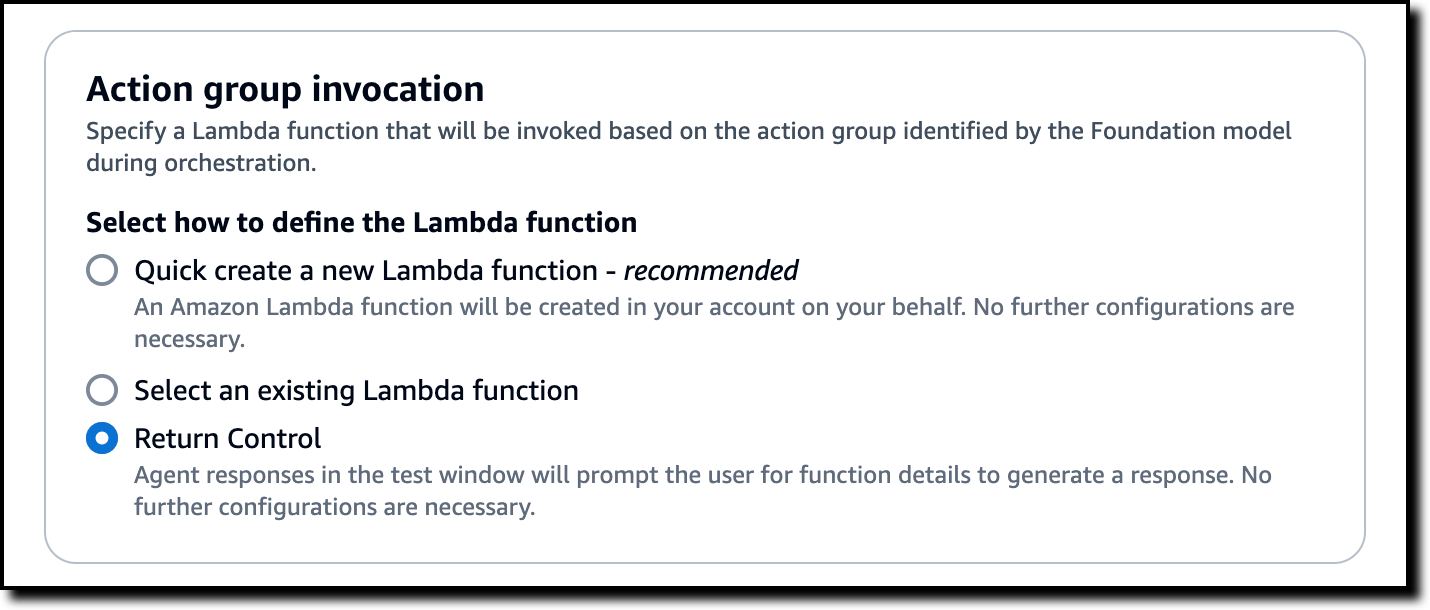

Action group functions can be associated to a Lambda function call or configured to return control to the user or application invoking the agent so that they can provide a response to the function. The option to return control is useful for four main use cases:

- When it’s easier to call an API from an existing application (for example, the one invoking the agent) than building a new Lambda function with the correct authentication and network configurations as required by the API

- When the duration of the task goes beyond the maximum Lambda function timeout of 15 minutes so that I can handle the task with an application running in containers or virtual servers or use a workflow orchestration such as AWS Step Functions

- When I have time-consuming actions because, with the return of control, the agent doesn’t wait for the action to complete before proceeding to the next step, and the invoking application can run actions asynchronously in the background while the orchestration flow of the agent continues

- When I need a quick way to mock the interaction with an API during the development and testing of an agent

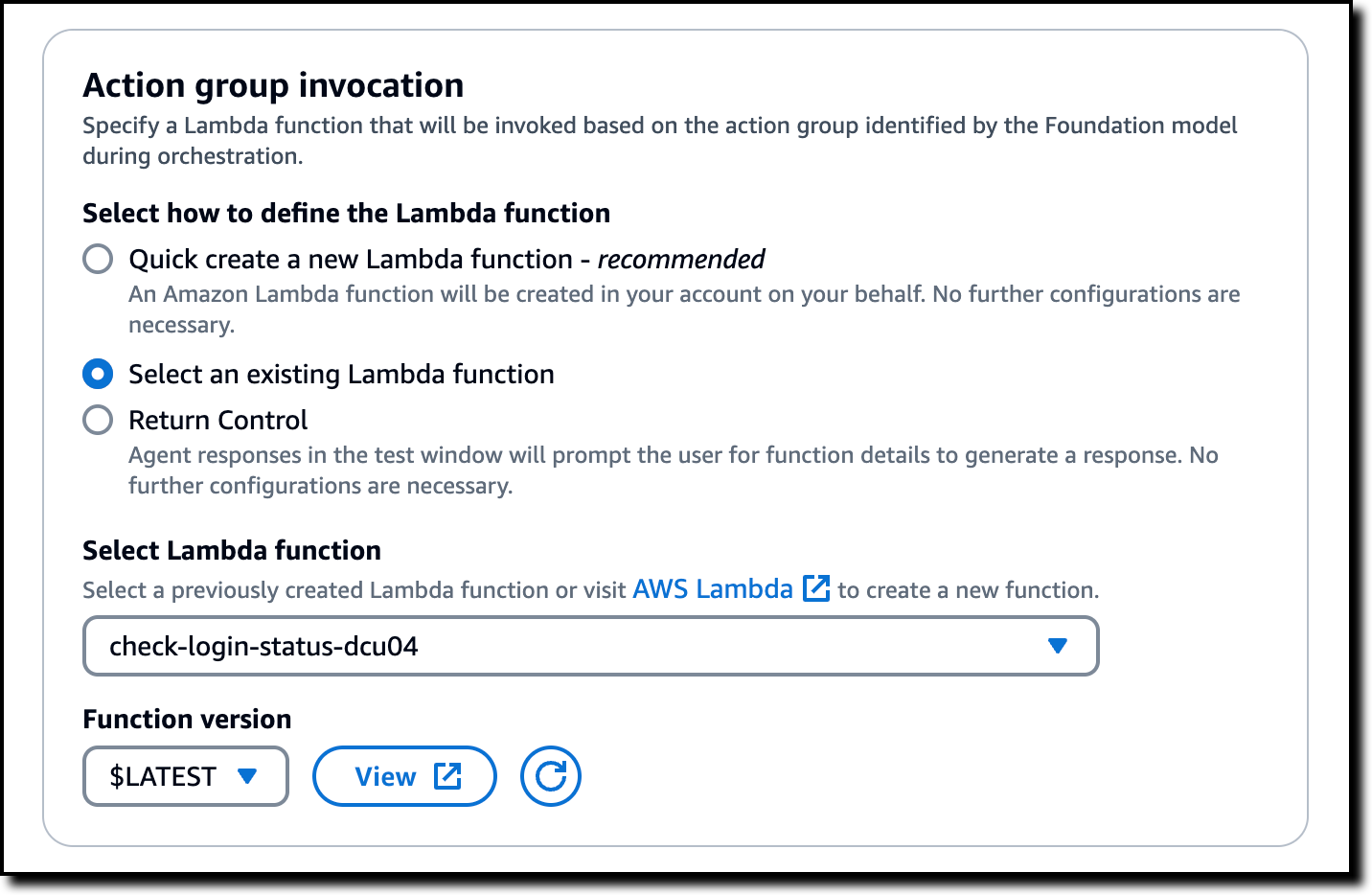

In Action group invocation, I can specify the Lambda function that will be invoked when this action group is identified by the model during orchestration. I can ask the console to quickly create a new Lambda function, to select an existing Lambda function, or return control so that the user or application invoking the agent will ask for details to generate a response. I select Return Control to show how that works in the console.

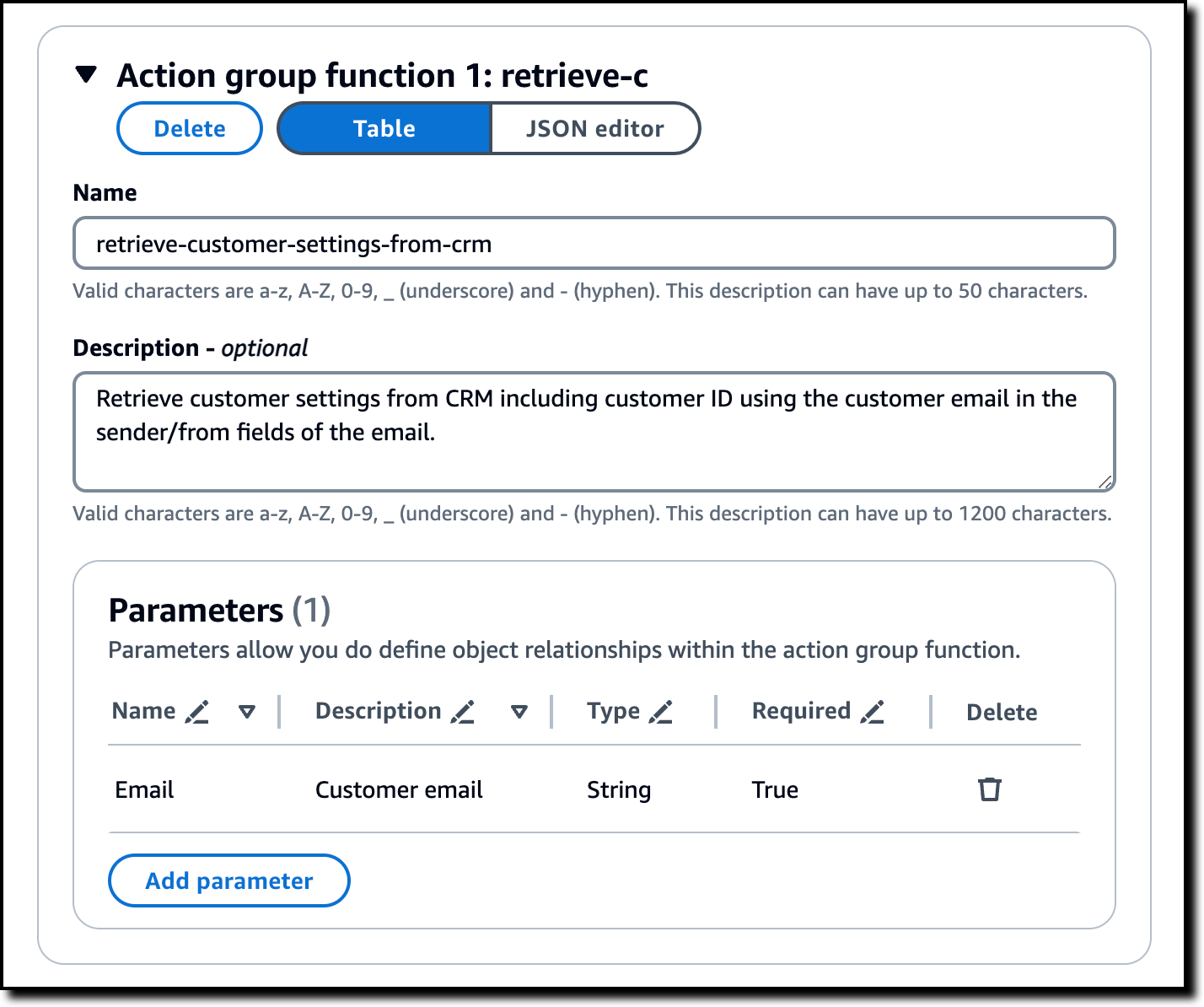

I configure the first function of the action group. I enter a name (retrieve-customer-settings-from-crm) and the following description for the function:

Retrieve customer settings from CRM including customer ID using the customer email in the sender/from fields of the email.

In Parameters, I add email with Customer email as the description. This is a parameter of type String and is required by this function. I choose Add to complete the creation of the action group.
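The same action group can be described as data, which is useful when scripting the setup instead of using the console. The sketch below builds plain Python dictionaries only and makes no AWS calls. The field names (`functionSchema`, `actionGroupExecutor`, `customControl`) follow my understanding of the `create_agent_action_group` request in the Agents for Amazon Bedrock API and should be checked against the API reference before use.

```python
# Function details for the first action group, expressed as plain data.
# Field names are assumptions to verify against the API reference.
retrieve_settings_function = {
    "name": "retrieve-customer-settings-from-crm",
    "description": (
        "Retrieve customer settings from CRM including customer ID using "
        "the customer email in the sender/from fields of the email."
    ),
    "parameters": {
        "email": {
            "description": "Customer email",
            "type": "string",
            "required": True,
        }
    },
}

action_group = {
    "actionGroupName": "retrieve-customer-settings",
    "description": "Retrieve customer settings including customer ID.",
    # Return control to the invoking application instead of calling Lambda.
    "actionGroupExecutor": {"customControl": "RETURN_CONTROL"},
    "functionSchema": {"functions": [retrieve_settings_function]},
}

print(action_group["functionSchema"]["functions"][0]["name"])
```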

Because, for my use case, I expect many customers to have issues when logging in, I add another action group (named check-login-status) with the following description:

Check customer login status.

This time, I select the option to create a new Lambda function so that I can handle these requests in code.

For this action group, I configure a function (named check-customer-login-status-in-login-system) with the following description:

Check customer login status in login system using the customer ID from settings.

In Parameters, I add customer_id, another required parameter of type String. Then, I choose Add to complete the creation of the second action group.

When I open the configuration of this action group, I see the name of the Lambda function that has been created in my account. There, I choose View to open the Lambda function in the console.

In the Lambda console, I edit the starting code that has been provided and implement my business case:

    import json

    def lambda_handler(event, context):
        print(event)

        agent = event['agent']
        actionGroup = event['actionGroup']
        function = event['function']
        parameters = event.get('parameters', [])

        # Execute your business logic here. For more information,
        # refer to: https://docs.aws.amazon.com/bedrock/latest/userguide/agents-lambda.html
        if actionGroup == 'check-login-status' and function == 'check-customer-login-status-in-login-system':
            response = {
                "status": "unknown"
            }
            for p in parameters:
                if p['name'] == 'customer_id' and p['type'] == 'string' and p['value'] == '12345':
                    response = {
                        "status": "not verified",
                        "reason": "the email address has not been verified",
                        "solution": "please verify your email address"
                    }
        else:
            response = {
                "error": "Unknown action group {} or function {}".format(actionGroup, function)
            }

        responseBody = {
            "TEXT": {
                "body": json.dumps(response)
            }
        }

        action_response = {
            'actionGroup': actionGroup,
            'function': function,
            'functionResponse': {
                'responseBody': responseBody
            }
        }

        dummy_function_response = {'response': action_response, 'messageVersion': event['messageVersion']}
        print("Response: {}".format(dummy_function_response))

        return dummy_function_response

I choose Deploy in the Lambda console. The function is configured with a resource-based policy that allows Amazon Bedrock to invoke the function. For this reason, I don’t need to update the IAM role used by the agent.
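To try a handler like this outside of the agent, it helps to have a sample event with the fields the code reads (`agent`, `actionGroup`, `function`, `parameters`, `messageVersion`). The event below is a hand-written approximation for local testing, not a captured payload; the contents of `agent` in particular are an assumption. The small helper shows one way to turn the parameter list into a dictionary.

```python
def parameters_as_dict(event):
    """Map parameter names to values from an agent function event."""
    return {p["name"]: p["value"] for p in event.get("parameters", [])}


# Hand-written sample event for local testing (values are illustrative).
sample_event = {
    "messageVersion": "1.0",
    "agent": {"name": "customer-feedback"},
    "actionGroup": "check-login-status",
    "function": "check-customer-login-status-in-login-system",
    "parameters": [
        {"name": "customer_id", "type": "string", "value": "12345"},
    ],
}

print(parameters_as_dict(sample_event))
```

Passing `sample_event` and `None` as the context to the Lambda handler should exercise the "not verified" branch.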

I am ready to test the agent. Back in the Amazon Bedrock console, with the agent selected, I look for the Test Agent section. There, I choose Prepare to prepare the agent and test it with the latest changes.

As input to the agent, I provide this sample email:

From: danilop@example.com

Subject: Problems logging in

Hi, when I try to log into my account, I get an error and cannot proceed further. Can you check? Thank you, Danilo

In the first step, the agent orchestration decides to use the first action group (retrieve-customer-settings) and function (retrieve-customer-settings-from-crm). This function is configured to return control, and in the console, I am asked to provide the output of the action group function. The customer email address is provided as the input parameter.

To simulate an interaction with an application, I reply with a JSON syntax and choose Submit:

{ "customer id": 12345 }
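Outside the console, the invoking application would send this kind of reply back to the agent itself. The sketch below only builds the session state for that follow-up request and makes no AWS calls. The structure (`invocationId`, `returnControlInvocationResults`, `functionResult`) reflects my understanding of the `InvokeAgent` API and should be verified against the API reference; the invocation ID shown is a made-up placeholder.

```python
import json


def build_return_control_state(invocation_id, action_group, function, result):
    """Build the session state that hands a function result back to the agent.

    The field names are assumptions based on the InvokeAgent API reference.
    """
    return {
        "invocationId": invocation_id,
        "returnControlInvocationResults": [
            {
                "functionResult": {
                    "actionGroup": action_group,
                    "function": function,
                    "responseBody": {"TEXT": {"body": json.dumps(result)}},
                }
            }
        ],
    }


state = build_return_control_state(
    "example-invocation-id",  # placeholder; the real ID comes from the agent
    "retrieve-customer-settings",
    "retrieve-customer-settings-from-crm",
    {"customer id": 12345},
)
print(json.dumps(state, indent=2))
```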

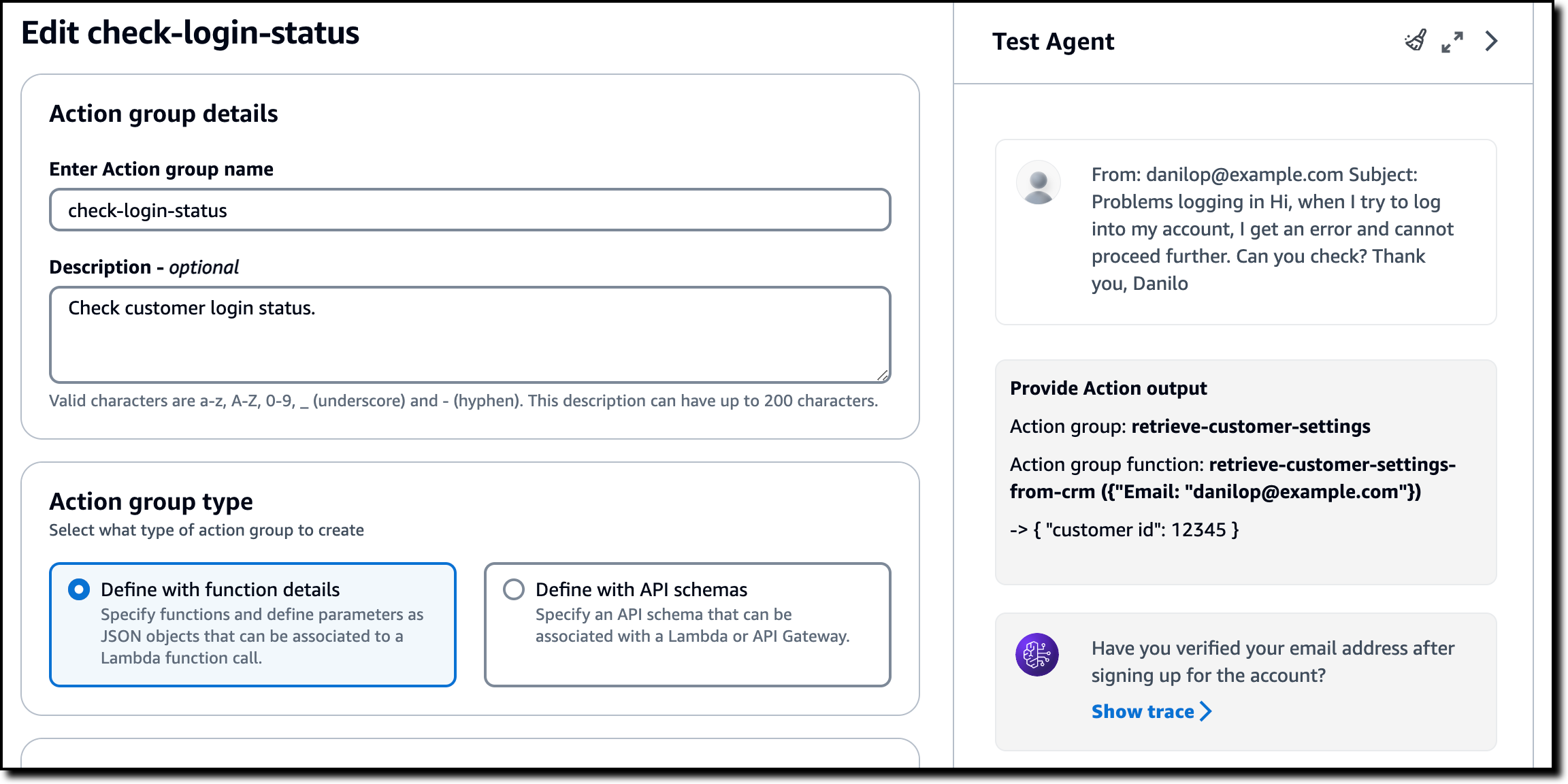

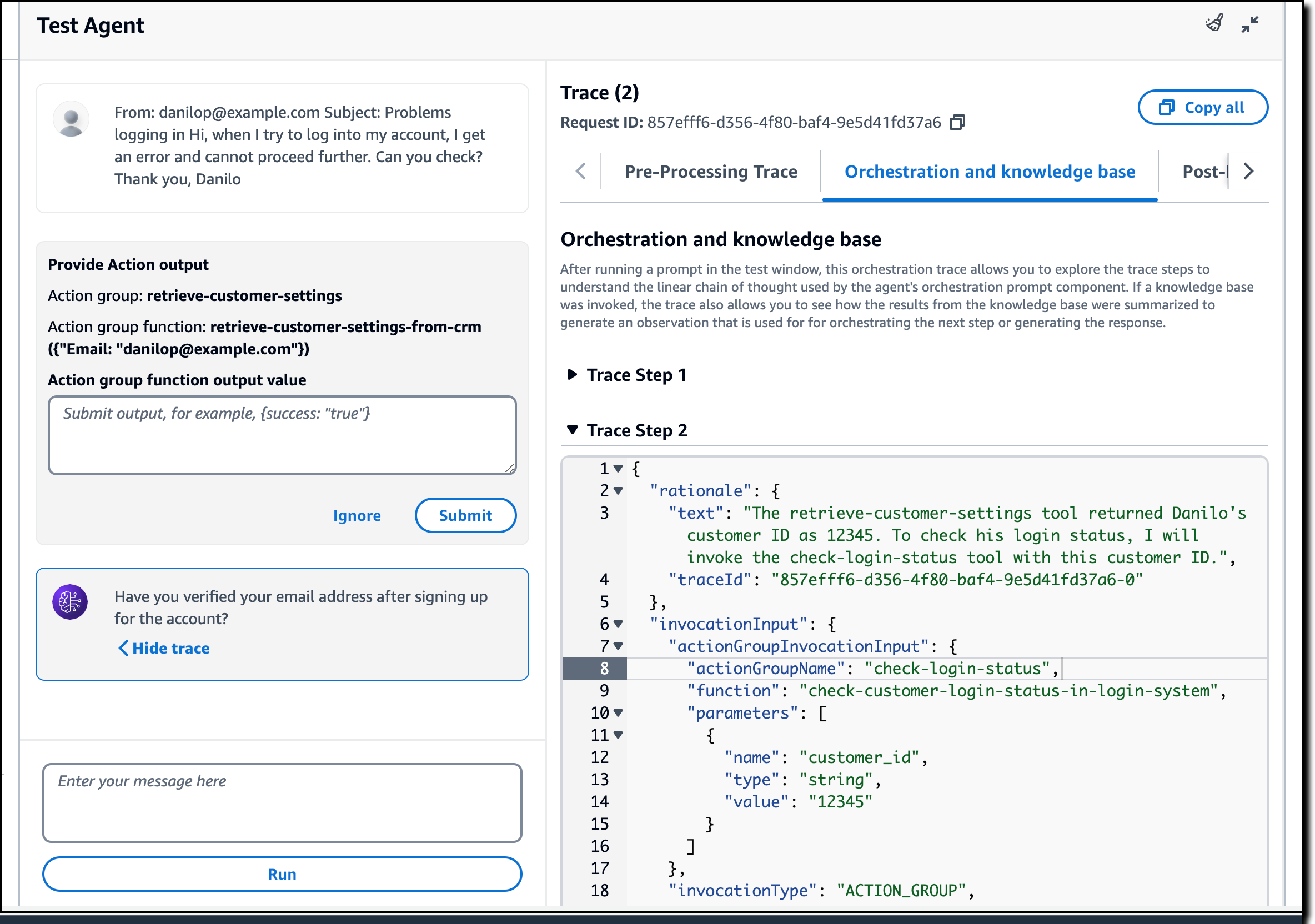

In the next step, the agent has the information required to use the second action group (check-login-status) and function (check-customer-login-status-in-login-system) to call the Lambda function. In return, the Lambda function provides this JSON payload:

    {
        "status": "not verified",
        "reason": "the email address has not been verified",
        "solution": "please verify your email address"
    }

Using this content, the agent can complete its task and suggest the correct solution for this customer.

I am satisfied with the result, but I want to know more about what happened under the hood. I choose Show trace where I can see the details of each step of the agent orchestration. This helps me understand the agent decisions and correct the configurations of the action groups if they are not used as I expect.

Things to know

You can use the new simplified experience to create and manage Agents for Amazon Bedrock in the US East (N. Virginia) and US West (Oregon) AWS Regions.

You can now create an agent without having to specify an API schema or provide a Lambda function for the action groups. You just need to list the parameters that the action group needs. When invoking the agent, you can choose to return control with the details of the operation to perform so that you can handle the operation in your existing applications or if the duration is longer than the maximum Lambda function timeout.

CloudFormation support for Agents for Amazon Bedrock has been released recently and is now being updated to support the new simplified syntax.

To learn more:

- See the Agents for Amazon Bedrock section of the User Guide.

- Visit our community.aws site to find deep-dive technical content and discover how others are using Amazon Bedrock in their solutions.

— Danilo

Import custom models in Amazon Bedrock (preview)

With Amazon Bedrock, you have access to a choice of high-performing foundation models (FMs) from leading artificial intelligence (AI) companies that make it easier to build and scale generative AI applications. Some of these models provide publicly available weights that can be fine-tuned and customized for specific use cases. However, deploying customized FMs in a secure and scalable way is not an easy task.

Starting today, Amazon Bedrock adds in preview the capability to import custom weights for supported model architectures (such as Meta Llama 2, Llama 3, and Mistral) and serve the custom model using On-Demand mode. You can import models with weights in Hugging Face safetensors format from Amazon SageMaker and Amazon Simple Storage Service (Amazon S3).

In this way, you can use Amazon Bedrock with existing customized models such as Code Llama, a code-specialized version of Llama 2 that was created by further training Llama 2 on code-specific datasets, or use your data to fine-tune models for your own unique business case and import the resulting model in Amazon Bedrock.

Let’s see how this works in practice.

Bringing a custom model to Amazon Bedrock

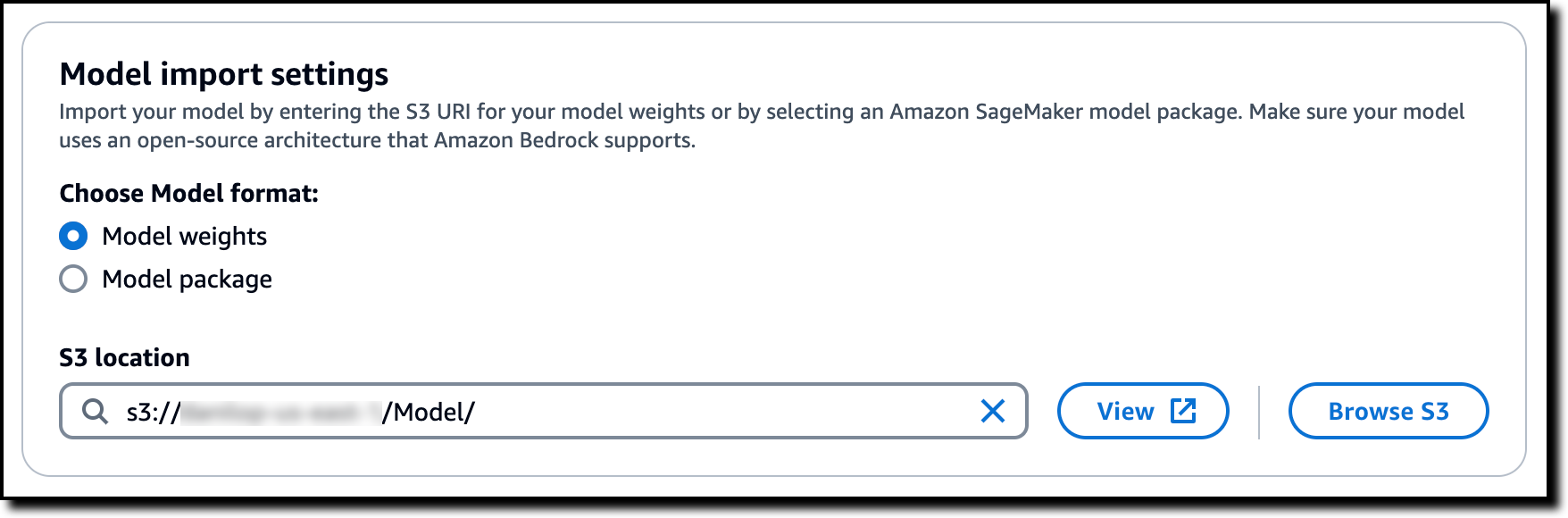

In the Amazon Bedrock console, I choose Imported models from the Foundation models section of the navigation pane. Now, I can create a custom model by importing model weights from an Amazon Simple Storage Service (Amazon S3) bucket or from an Amazon SageMaker model.

I choose to import model weights from an S3 bucket. In another browser tab, I download the MistralLite model from the Hugging Face website using this pull request (PR) that provides weights in safetensors format. The pull request is currently Ready to merge, so it might be part of the main branch when you read this. MistralLite is a fine-tuned Mistral-7B-v0.1 language model with enhanced capabilities of processing long context up to 32K tokens.

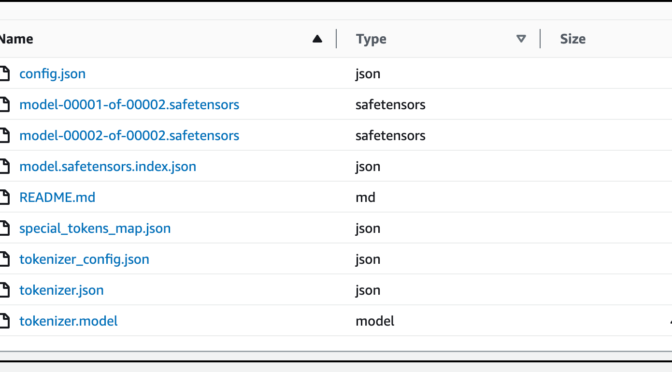

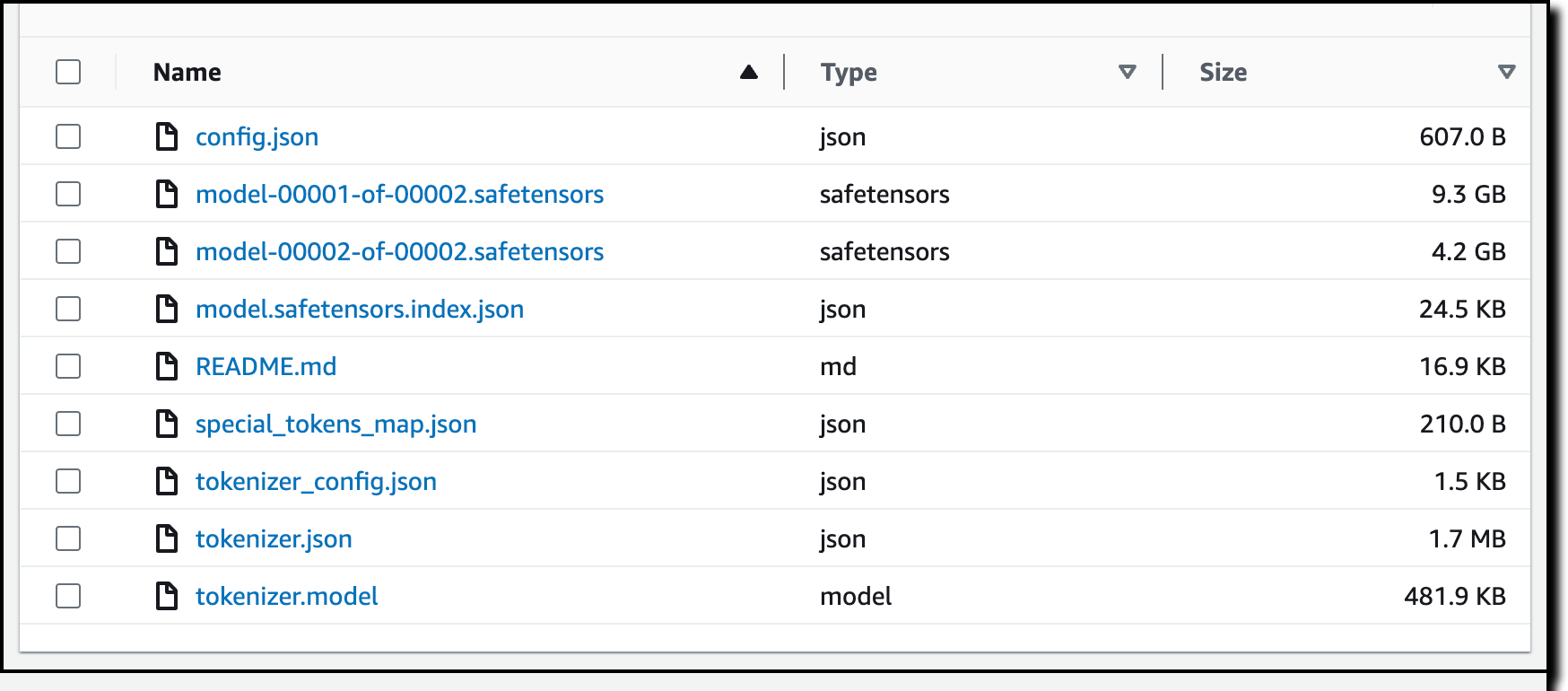

When the download is complete, I upload the files to an S3 bucket in the same AWS Region where I will import the model. Here are the MistralLite model files in the Amazon S3 console:

Back at the Amazon Bedrock console, I enter a name for the model and keep the proposed import job name.

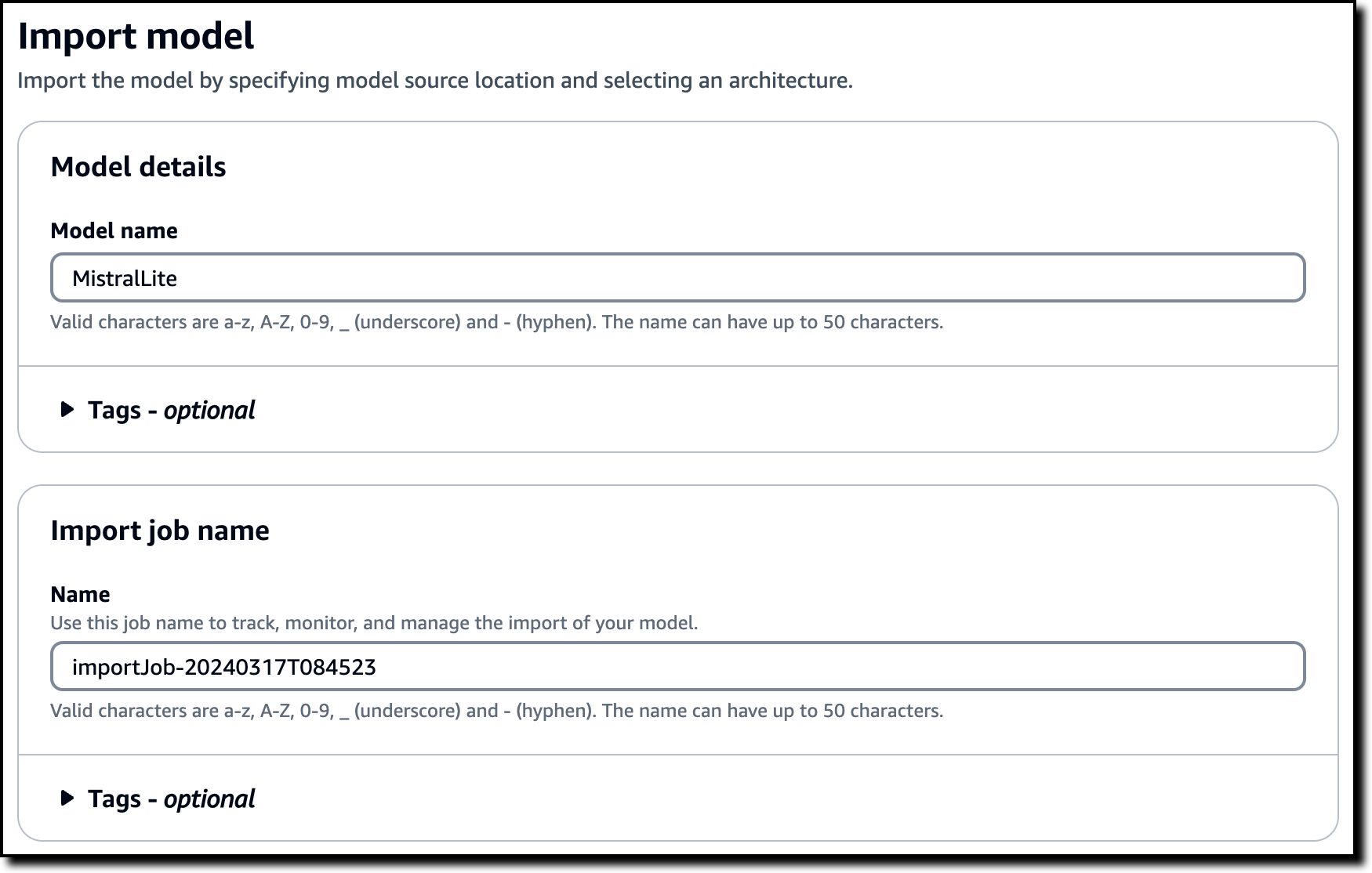

I select Model weights in the Model import settings and browse S3 to choose the location where I uploaded the model weights.

To authorize Amazon Bedrock to access the files on the S3 bucket, I select the option to create and use a new AWS Identity and Access Management (IAM) service role. I use the View permissions details link to check what will be in the role. Then, I submit the job.

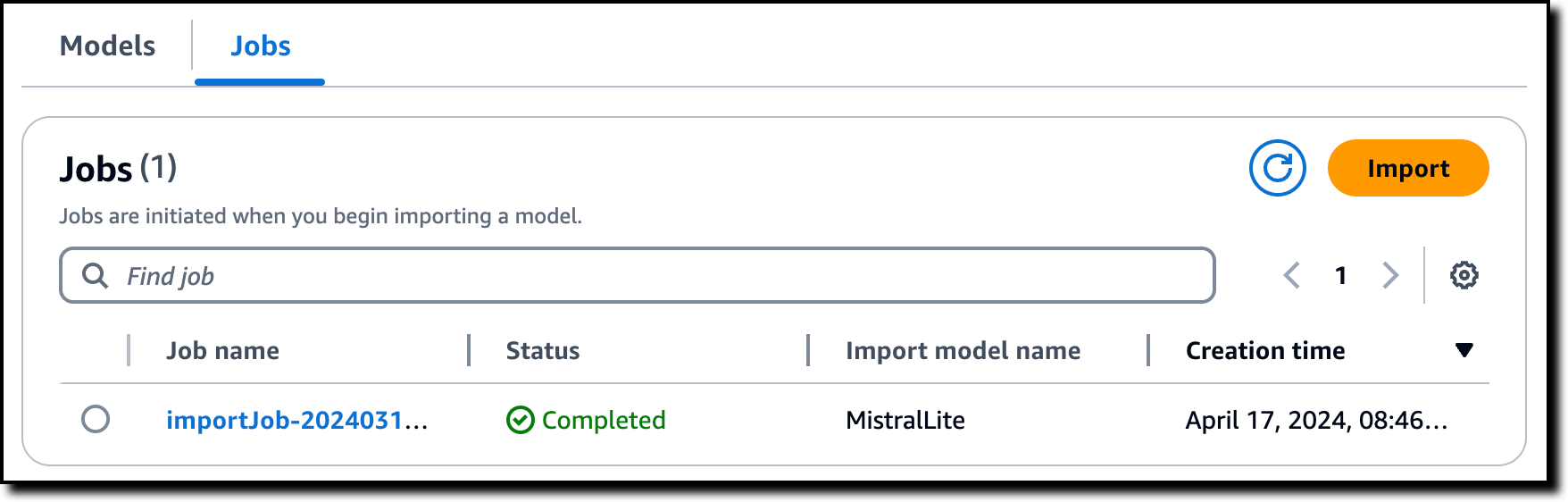

About ten minutes later, the import job is completed.

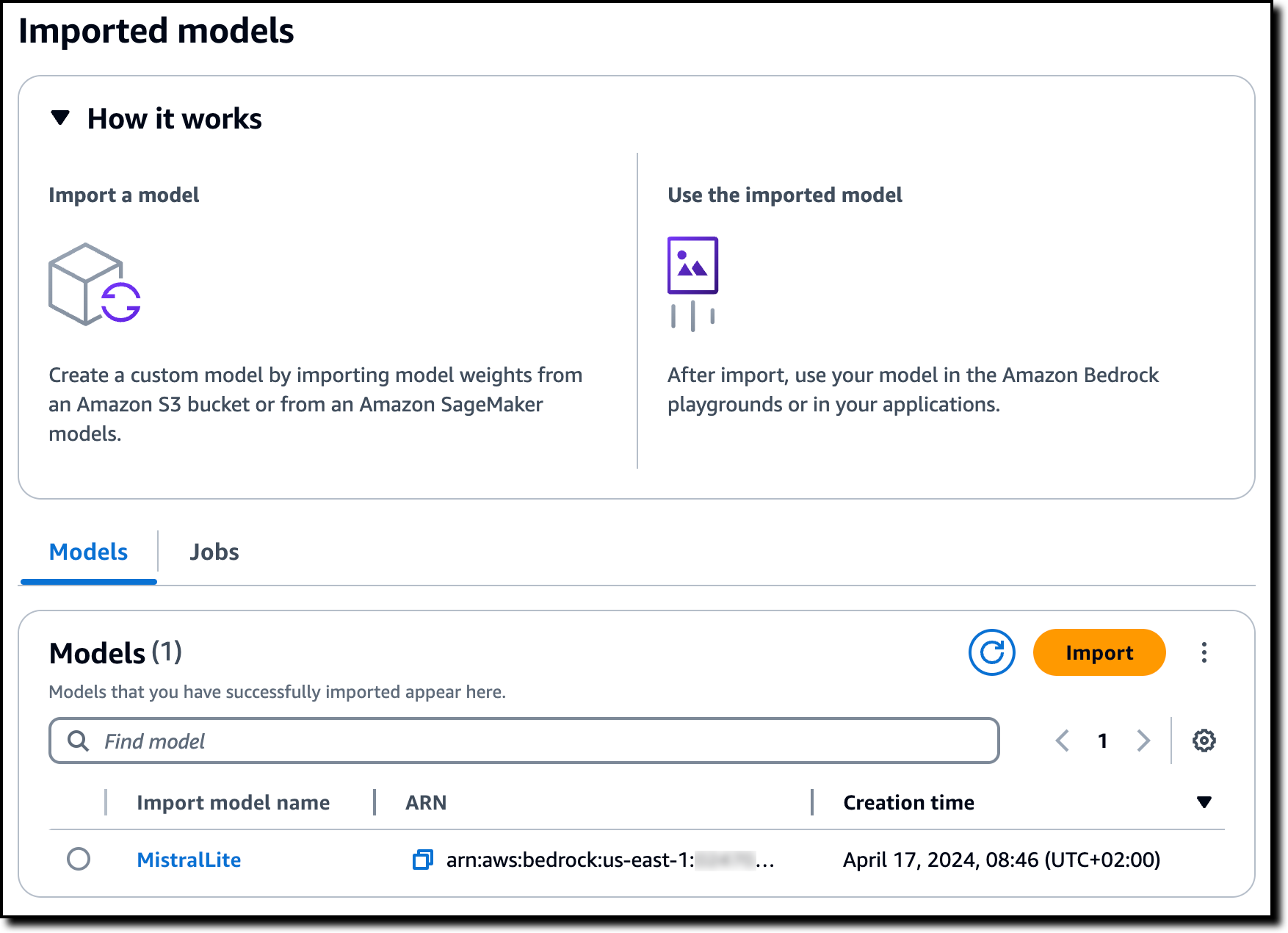

Now, I see the imported model in the console. The list also shows the model Amazon Resource Name (ARN) and the creation date.

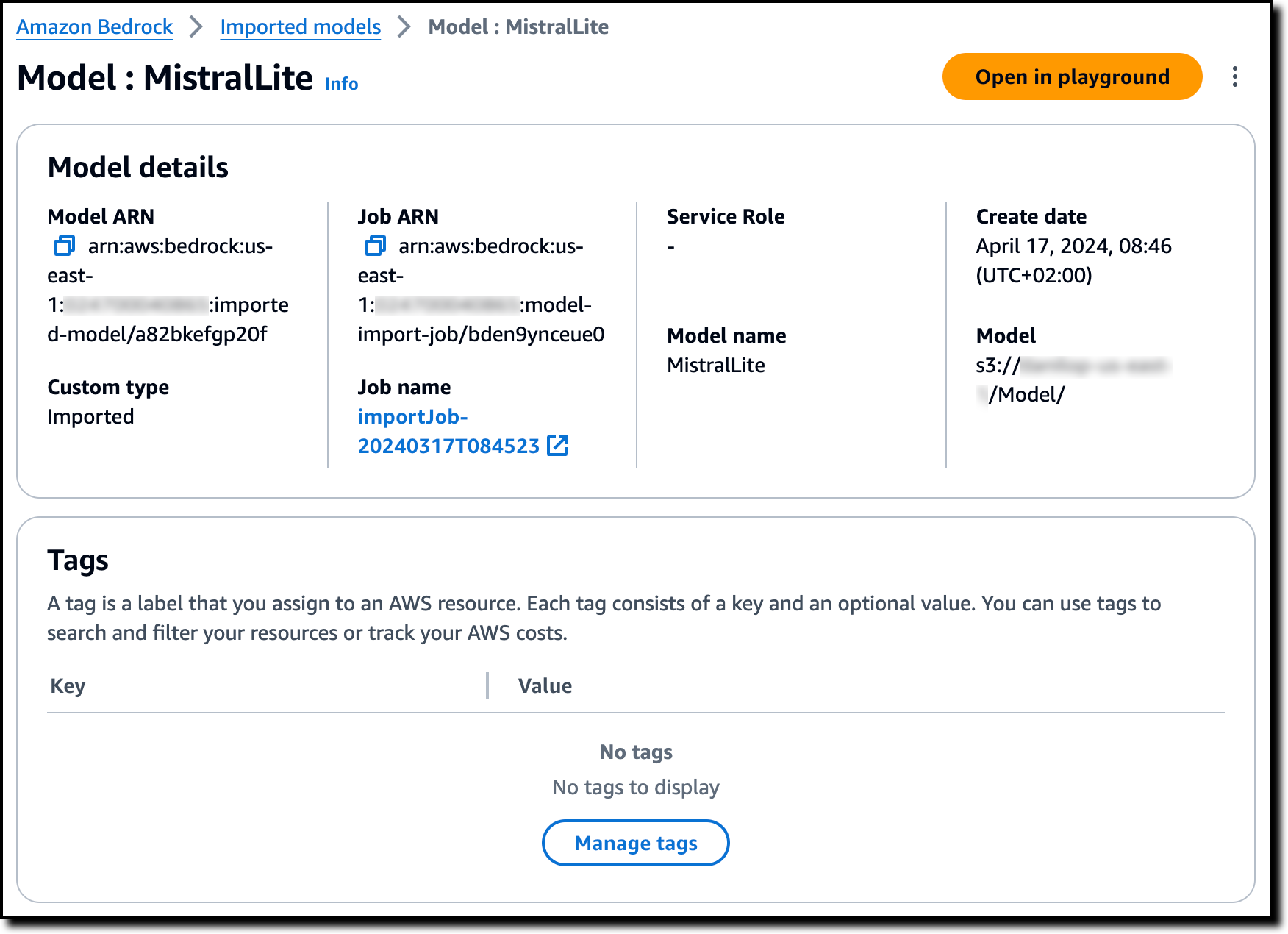

I choose the model to get more information, such as the S3 location of the model files.

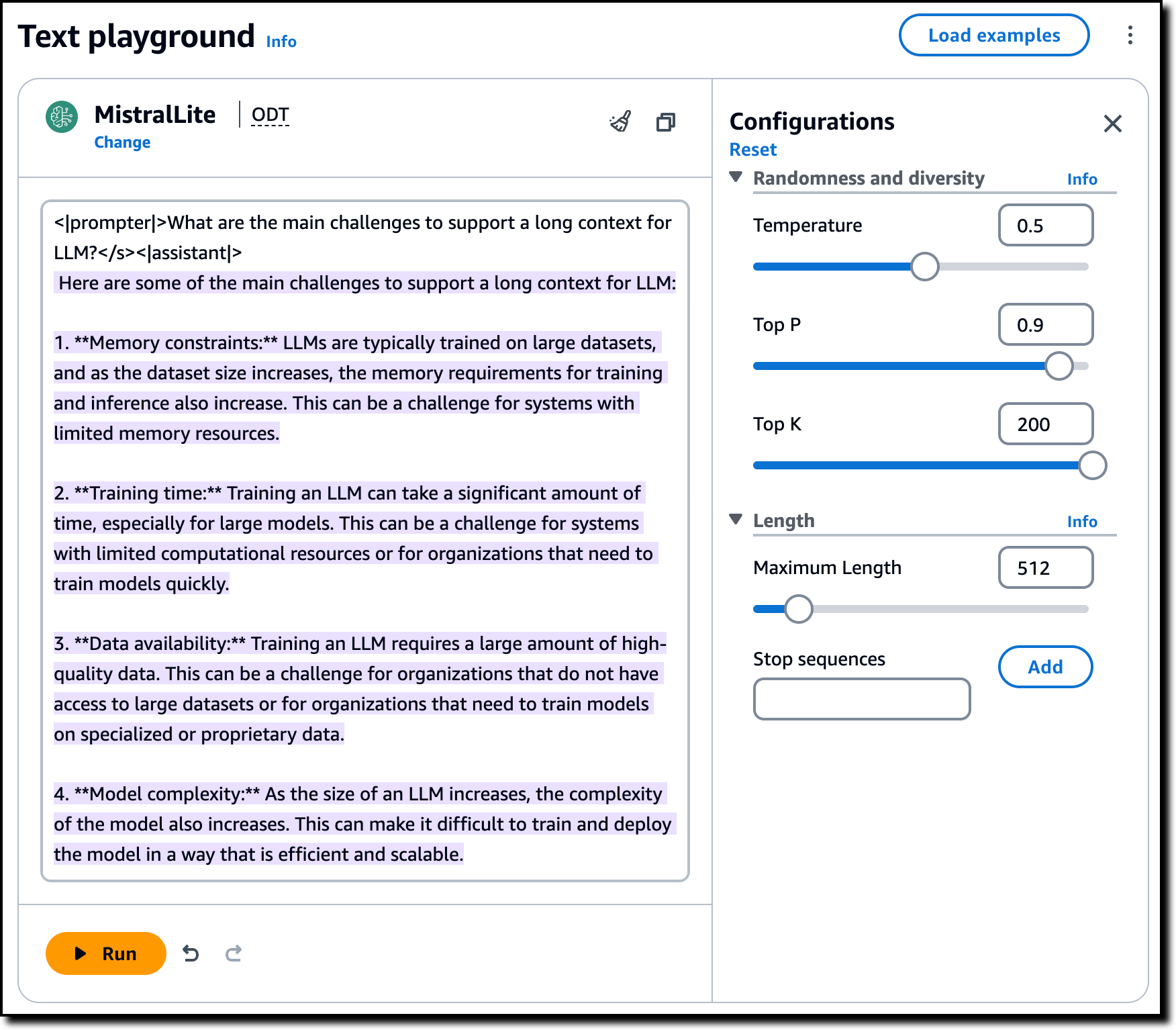

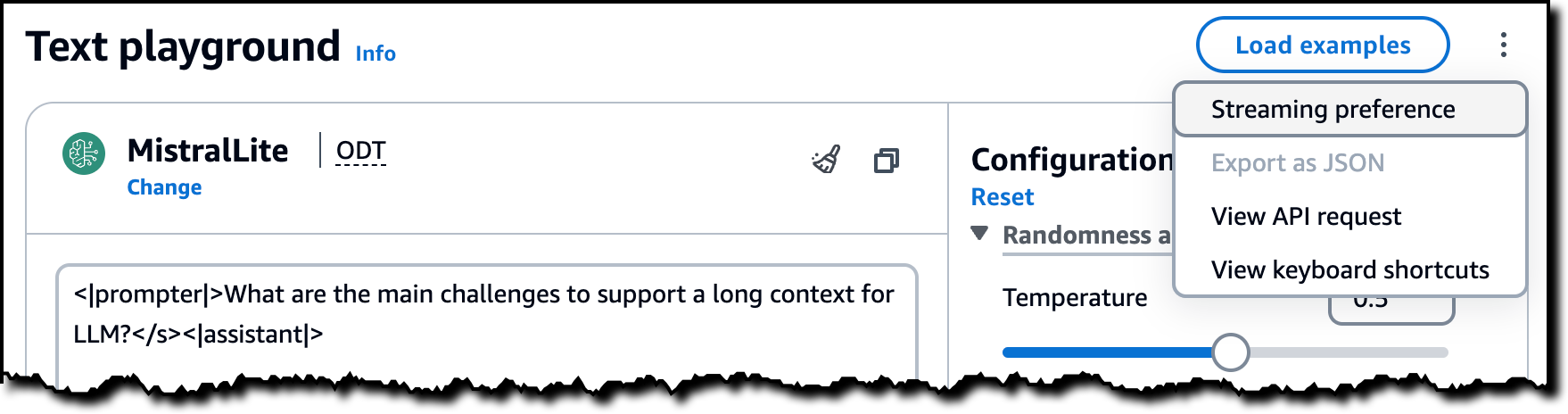

In the model detail page, I choose Open in playground to test the model in the console. In the text playground, I type a question using the prompt template of the model:

<|prompter|>What are the main challenges to support a long context for LLM?</s><|assistant|>

The MistralLite imported model is quick to reply and describe some of those challenges.

In the playground, I can tune responses for my use case using configurations such as temperature and maximum length or add stop sequences specific to the imported model.

To see the syntax of the API request, I choose the three small vertical dots at the top right of the playground.

I choose View API syntax and run the command using the AWS Command Line Interface (AWS CLI):

The output is similar to what I got in the playground. As you can see, for imported models, the model ID is the ARN of the imported model. I can use the model ID to invoke the imported model with the AWS CLI and AWS SDKs.
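With an AWS SDK, the same invocation could look roughly like the following Python sketch. It only builds the request and does not call AWS. The body fields (`prompt`, `max_tokens`, `temperature`) are assumptions about what the imported model accepts, and the ARN is a placeholder rather than a real model.

```python
import json

# Prompt template of the MistralLite model, as used in the playground.
PROMPT_TEMPLATE = "<|prompter|>{question}</s><|assistant|>"


def build_invoke_request(model_arn, question, max_tokens=512, temperature=0.5):
    """Build keyword arguments for a bedrock-runtime invoke_model call.

    The body field names are assumptions; check the model's documentation.
    """
    body = {
        "prompt": PROMPT_TEMPLATE.format(question=question),
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return {
        "modelId": model_arn,  # for imported models, the model ID is the ARN
        "body": json.dumps(body),
        "contentType": "application/json",
        "accept": "application/json",
    }


request = build_invoke_request(
    "arn:aws:bedrock:us-east-1:111122223333:imported-model/EXAMPLE",  # placeholder
    "What are the main challenges to support a long context for LLM?",
)
print(request["body"])
# With boto3 installed and credentials configured, the call would be:
# boto3.client("bedrock-runtime").invoke_model(**request)
```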

Things to know

You can bring your own weights for supported model architectures to Amazon Bedrock in the US East (N. Virginia) AWS Region. The model import capability is currently available in preview.

When using custom weights, Amazon Bedrock serves the model with On-Demand mode, and you only pay for what you use with no time-based term commitments. For detailed information, see Amazon Bedrock pricing.

The ability to import models is managed using AWS Identity and Access Management (IAM), and you can allow this capability only to the roles in your organization that need to have it.

With this launch, it’s now easier to build and scale generative AI applications using custom models with security and privacy built in.

To learn more:

- See the Amazon Bedrock User Guide.

- Visit our community.aws site to find deep-dive technical content and discover how others are using Amazon Bedrock in their solutions.

— Danilo

Unify DNS management using Amazon Route 53 Profiles with multiple VPCs and AWS accounts

If you are managing lots of accounts and Amazon Virtual Private Cloud (Amazon VPC) resources, sharing and then associating many DNS resources to each VPC can present a significant burden. You often hit limits around sharing and association, and you may have gone as far as building your own orchestration layers to propagate DNS configuration across your accounts and VPCs.

Today, I’m happy to announce Amazon Route 53 Profiles, which provide the ability to unify management of DNS across all of your organization’s accounts and VPCs. Route 53 Profiles let you define a standard DNS configuration, including Route 53 private hosted zone (PHZ) associations, Resolver forwarding rules, and Route 53 Resolver DNS Firewall rule groups, and apply that configuration to multiple VPCs in the same AWS Region. With Profiles, you have an easy way to ensure all of your VPCs have the same DNS configuration without the complexity of handling separate Route 53 resources. Managing DNS across many VPCs is now as simple as managing those same settings for a single VPC.

Profiles are natively integrated with AWS Resource Access Manager (RAM) allowing you to share your Profiles across accounts or with your AWS Organizations account. Profiles integrates seamlessly with Route 53 private hosted zones by allowing you to create and add existing private hosted zones to your Profile so that your organizations have access to these same settings when the Profile is shared across accounts. AWS CloudFormation allows you to use Profiles to set DNS settings consistently for VPCs as accounts are newly provisioned. With today’s release, you can better govern DNS settings for your multi-account environments.

How Route 53 Profiles works

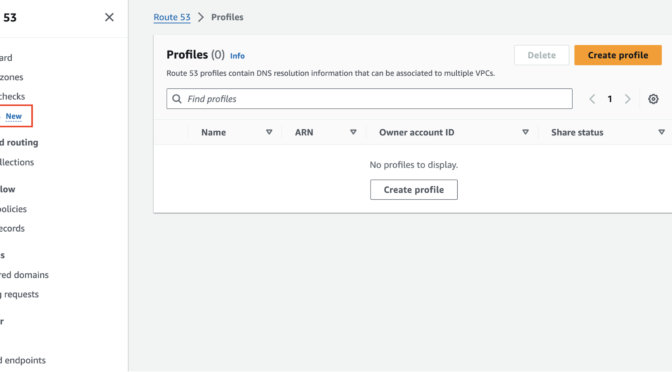

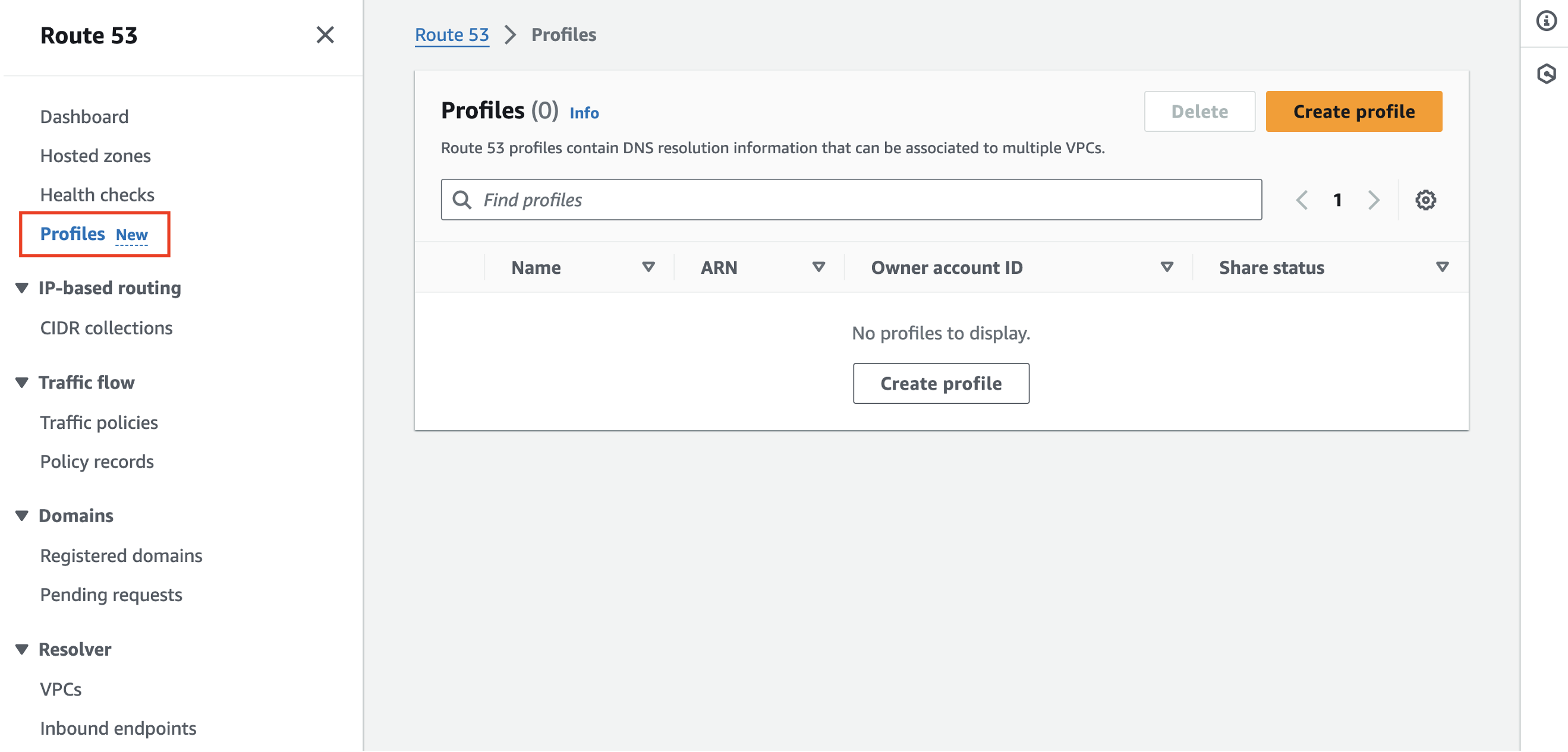

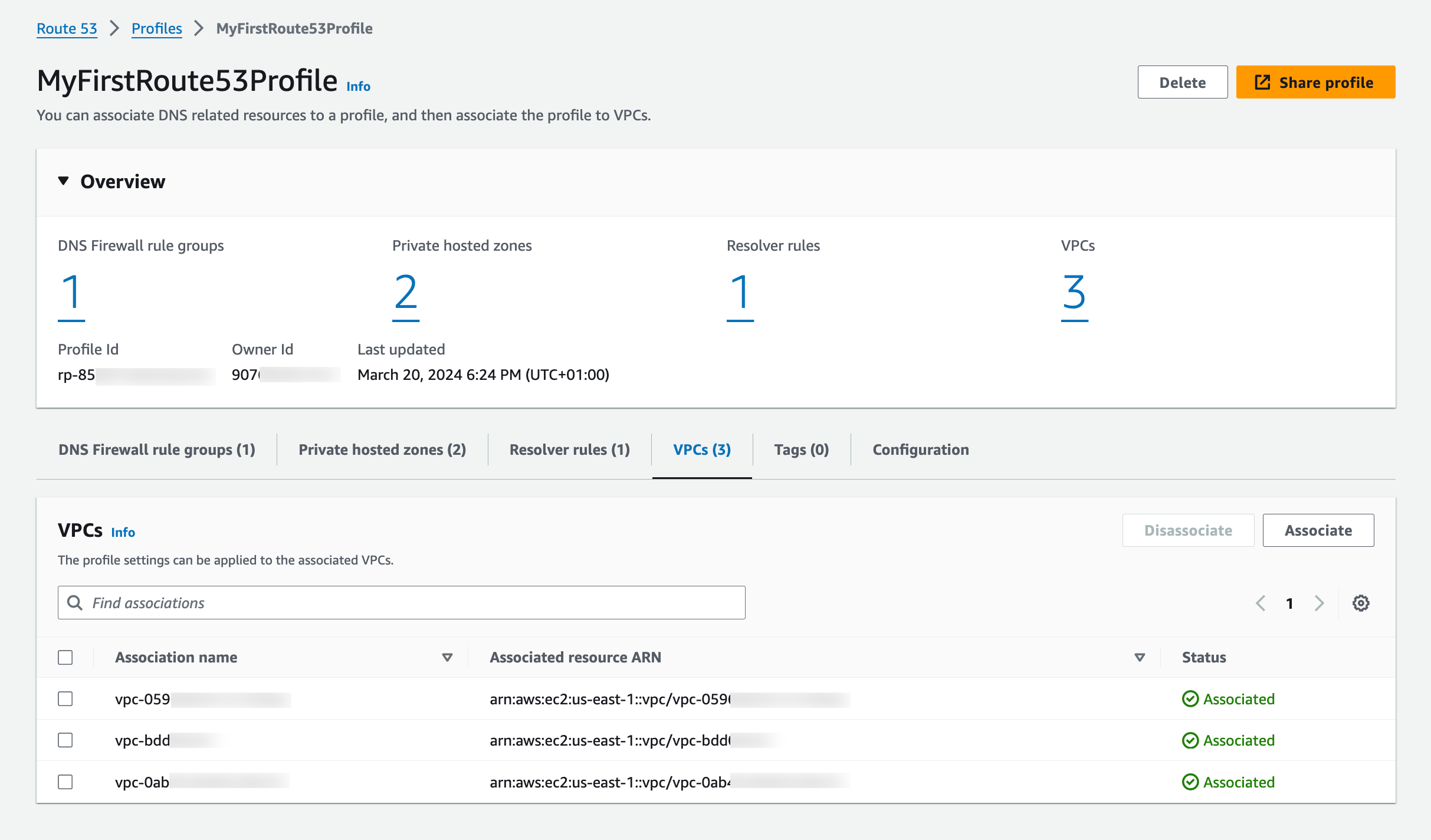

To start using Route 53 Profiles, I go to the AWS Management Console for Route 53, where I can create Profiles, add resources to them, and associate them to my VPCs. Then, I share the Profile I created with another account using AWS RAM.

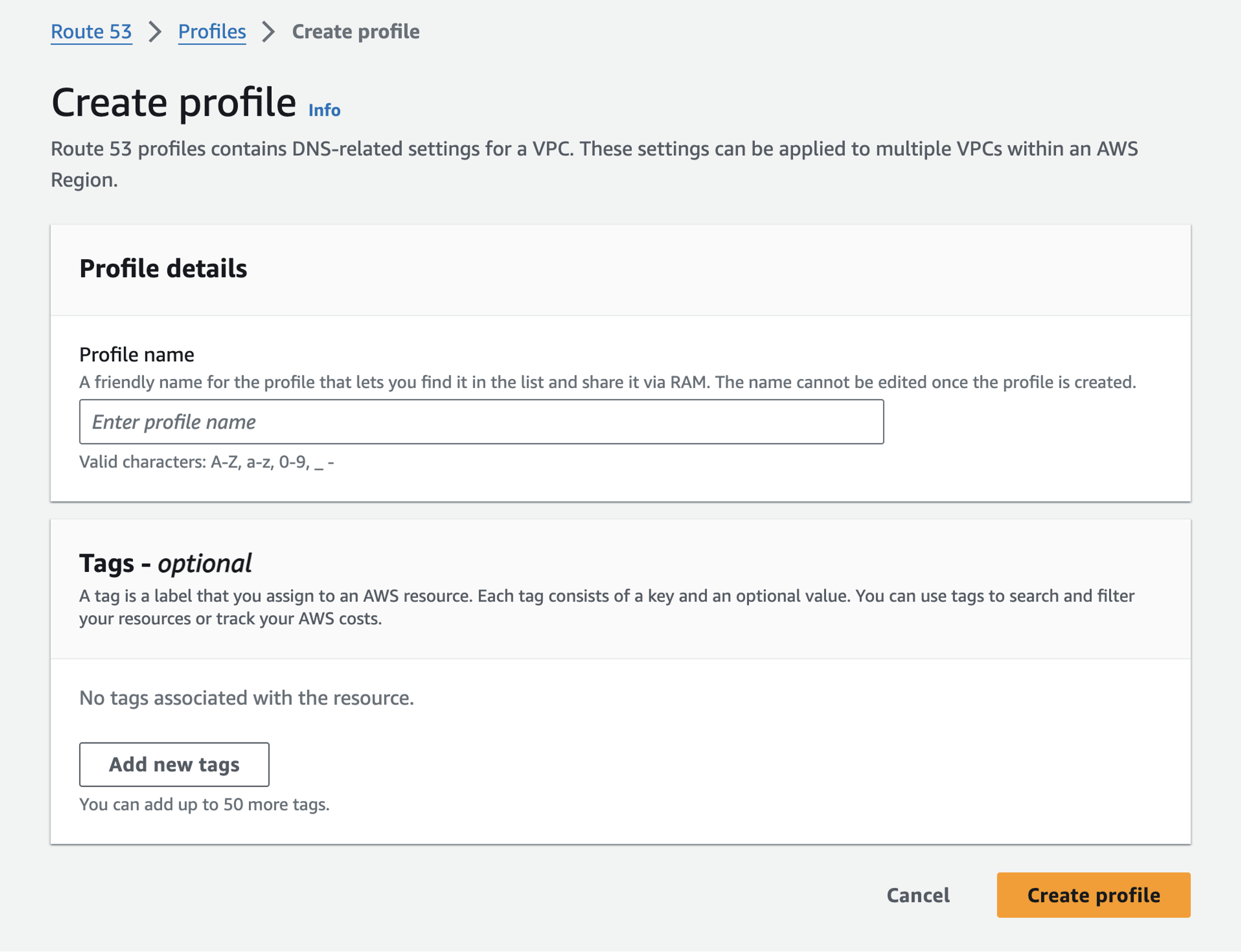

In the navigation pane in the Route 53 console, I choose Profiles and then I choose Create profile to set up my Profile.

I give my Profile configuration a friendly name such as MyFirstRoute53Profile and optionally add tags.

I can configure settings for DNS Firewall rule groups, private hosted zones and Resolver rules or add existing ones within my account all within the Profile console page.

I choose VPCs to associate my VPCs to the Profile. I can add tags and configure recursive DNSSEC validation and the failure mode for the DNS Firewalls associated to my VPCs. I can also control the order of DNS evaluation: first VPC DNS then Profile DNS, or first Profile DNS then VPC DNS.

I can associate one Profile per VPC and can associate up to 5,000 VPCs to a single Profile.

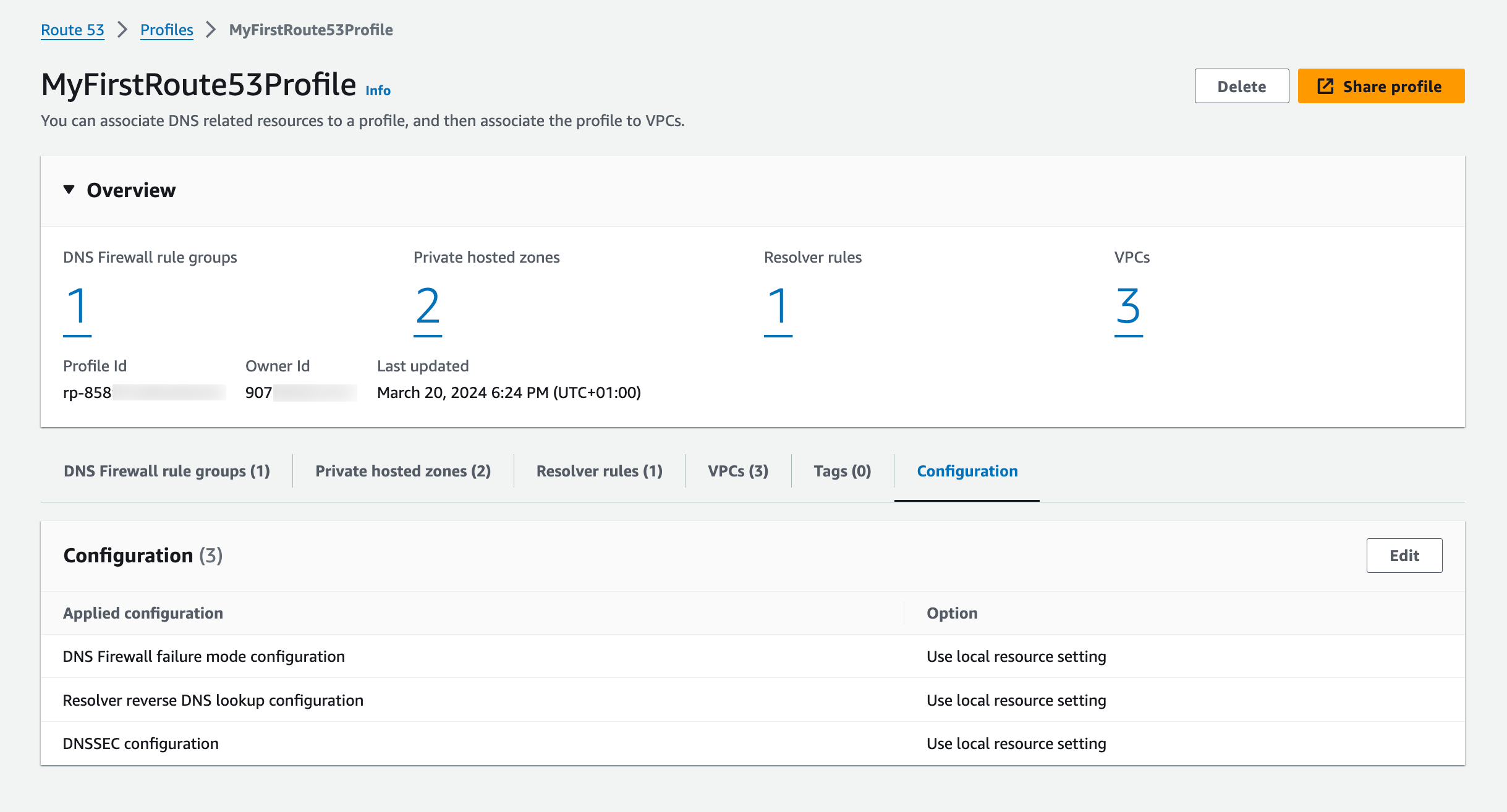

Profiles gives me the ability to manage settings for VPCs across accounts in my organization. I am able to disable reverse DNS rules for each of the VPCs the Profile is associated with rather than configuring these on a per-VPC basis. The Route 53 Resolver automatically creates rules for reverse DNS lookups for me so that different services can easily resolve hostnames from IP addresses. If I use DNS Firewall, I am able to select the failure mode for my firewall via settings, to fail open or fail closed. I am also able to specify if I wish for the VPCs associated to the Profile to have recursive DNSSEC validation enabled without having to use DNSSEC signing in Route 53 (or any other provider).

Let’s say I associate a Profile to a VPC. What happens when a query exactly matches both a resolver rule or PHZ associated directly to the VPC and a resolver rule or PHZ associated to the VPC’s Profile? Which DNS settings take precedence, the Profile’s or the local VPC’s? For example, if the VPC is associated to a PHZ for example.com and the Profile contains a PHZ for example.com, that VPC’s local DNS settings will take precedence over the Profile. When a query is made for a name that falls under overlapping domain names of different specificity (for example, the Profile contains a PHZ for infra.example.com and the VPC is associated to a PHZ that has the name account1.infra.example.com), the most specific name wins.
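The precedence rules described above can be modeled in a few lines of Python. This is a simplified sketch for reasoning about PHZ names only, not the actual Route 53 Resolver implementation; the function names are hypothetical. It picks the most specific matching zone and, when both sides have the same zone name, prefers the one associated directly to the VPC.

```python
def is_within(name, zone):
    """True if the queried name equals the zone or is a subdomain of it."""
    name, zone = name.rstrip(".").lower(), zone.rstrip(".").lower()
    return name == zone or name.endswith("." + zone)


def pick_zone(query, vpc_zones, profile_zones):
    """Return (source, zone) for the zone that would answer the query.

    The most specific zone (most labels) wins; on an exact tie the zone
    associated directly to the VPC takes precedence over the Profile.
    """
    candidates = []
    for source, zones in (("profile", profile_zones), ("vpc", vpc_zones)):
        for zone in zones:
            if is_within(query, zone):
                labels = len(zone.rstrip(".").split("."))
                candidates.append((labels, source == "vpc", source, zone))
    if not candidates:
        return None
    _, _, source, zone = max(candidates)
    return source, zone


# Same zone on both sides: the local VPC association wins.
print(pick_zone("www.example.com", ["example.com"], ["example.com"]))
# Different specificity: the most specific name wins.
print(pick_zone("db.account1.infra.example.com",
                ["account1.infra.example.com"], ["infra.example.com"]))
```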

Sharing Route 53 Profiles across accounts using AWS RAM

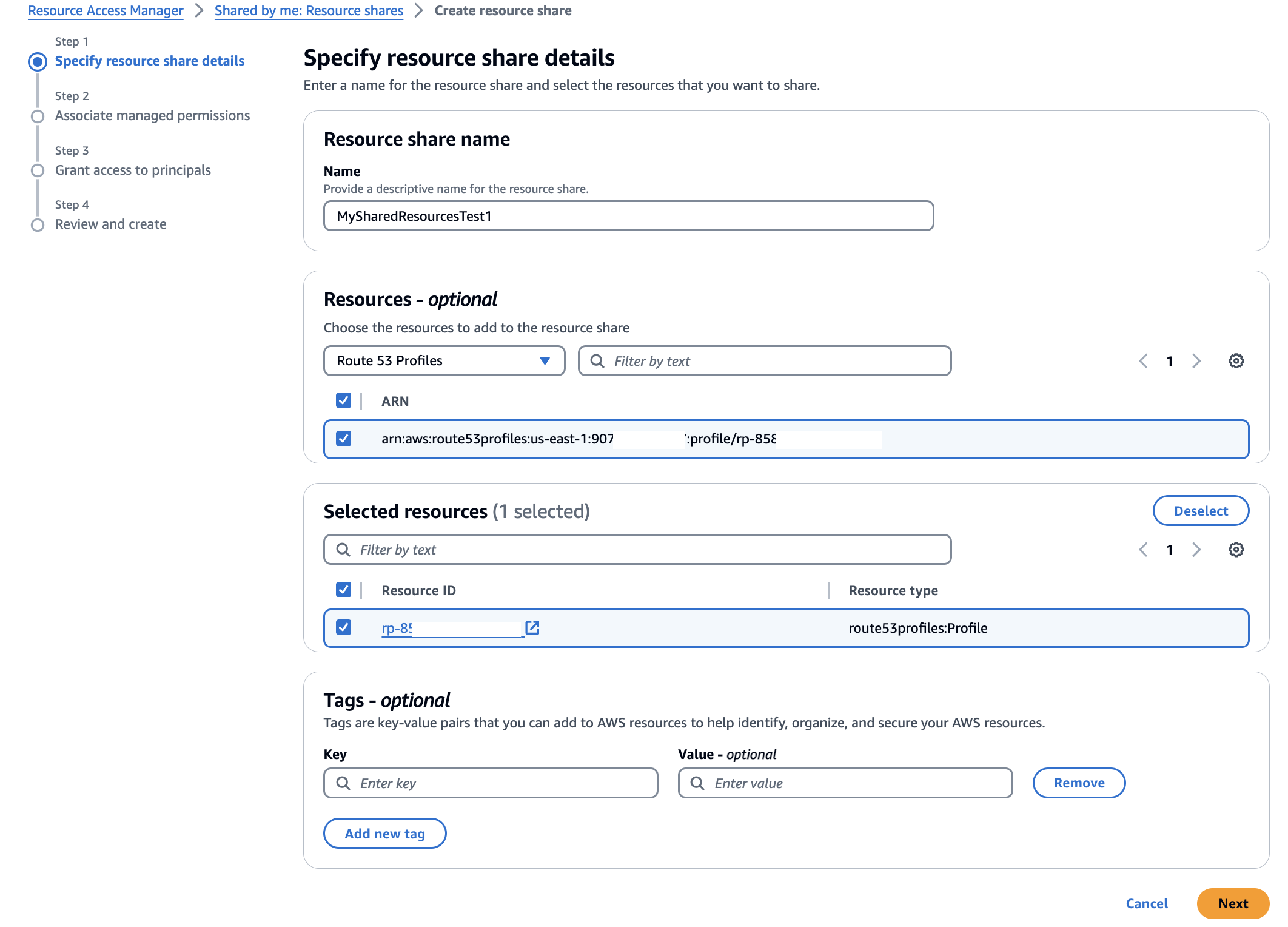

I use AWS Resource Access Manager (RAM) to share the Profile I created in the previous section with my other account.

I choose the Share profile option in the Profiles detail page or I can go to the AWS RAM console page and choose Create resource share.

I provide a name for my resource share and then I search for the ‘Route 53 Profiles’ in the Resources section. I select the Profile in Selected resources. I can choose to add tags. Then, I choose Next.

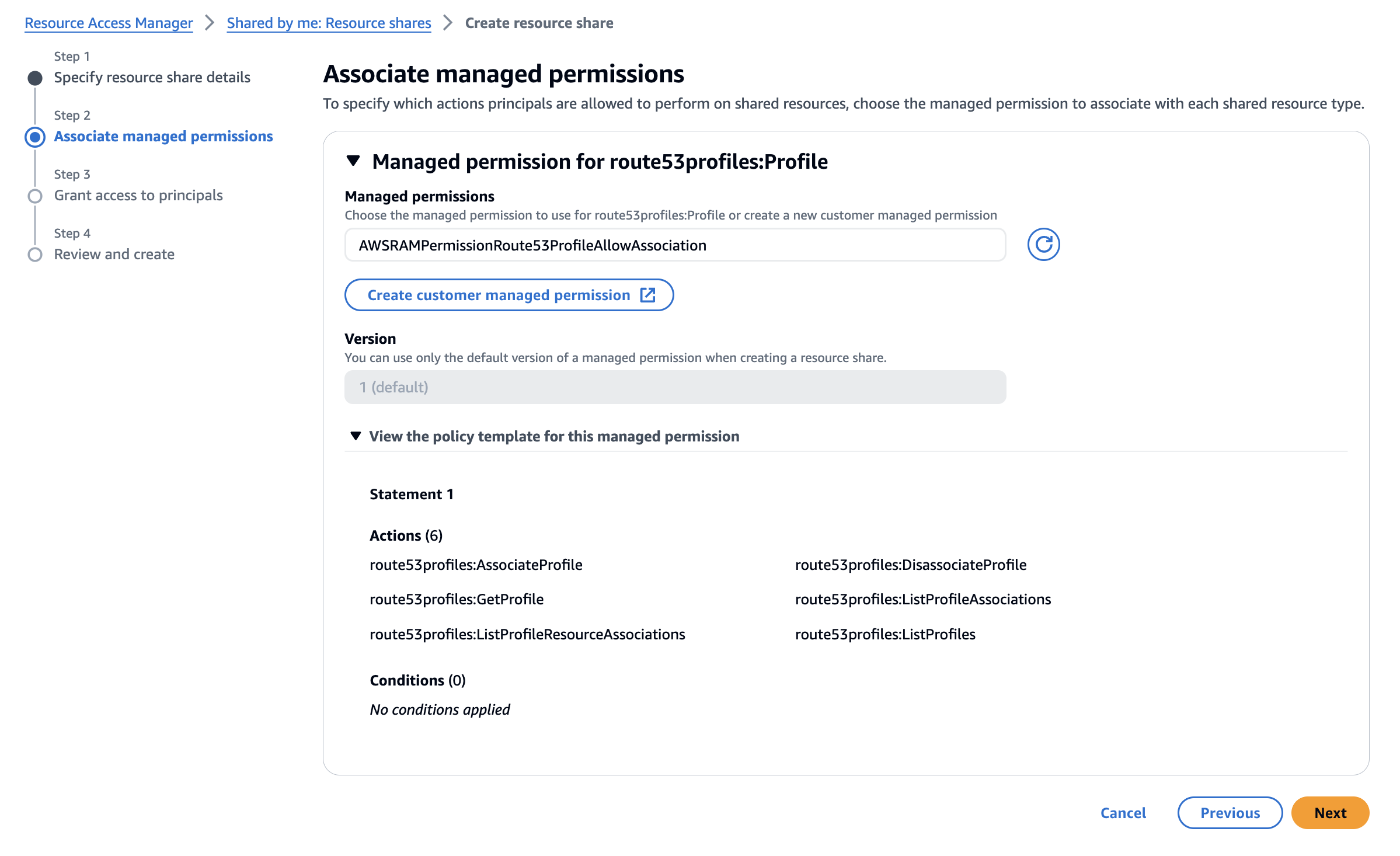

Profiles utilize RAM managed permissions, which allow me to attach different permissions to each resource type. By default, only the owner (the network admin) of the Profile will be able to modify the resources within the Profile. Recipients of the Profile (the VPC owners) will only be able to view the contents of the Profile (the ReadOnly mode). To allow a recipient of the Profile to add PHZs or other resources to it, the Profile’s owner will have to attach the necessary permissions to the resource. Recipients will not be able to edit or delete any resources added by the Profile owner to the shared resource.

I leave the default selections and choose Next to grant access to my other account.

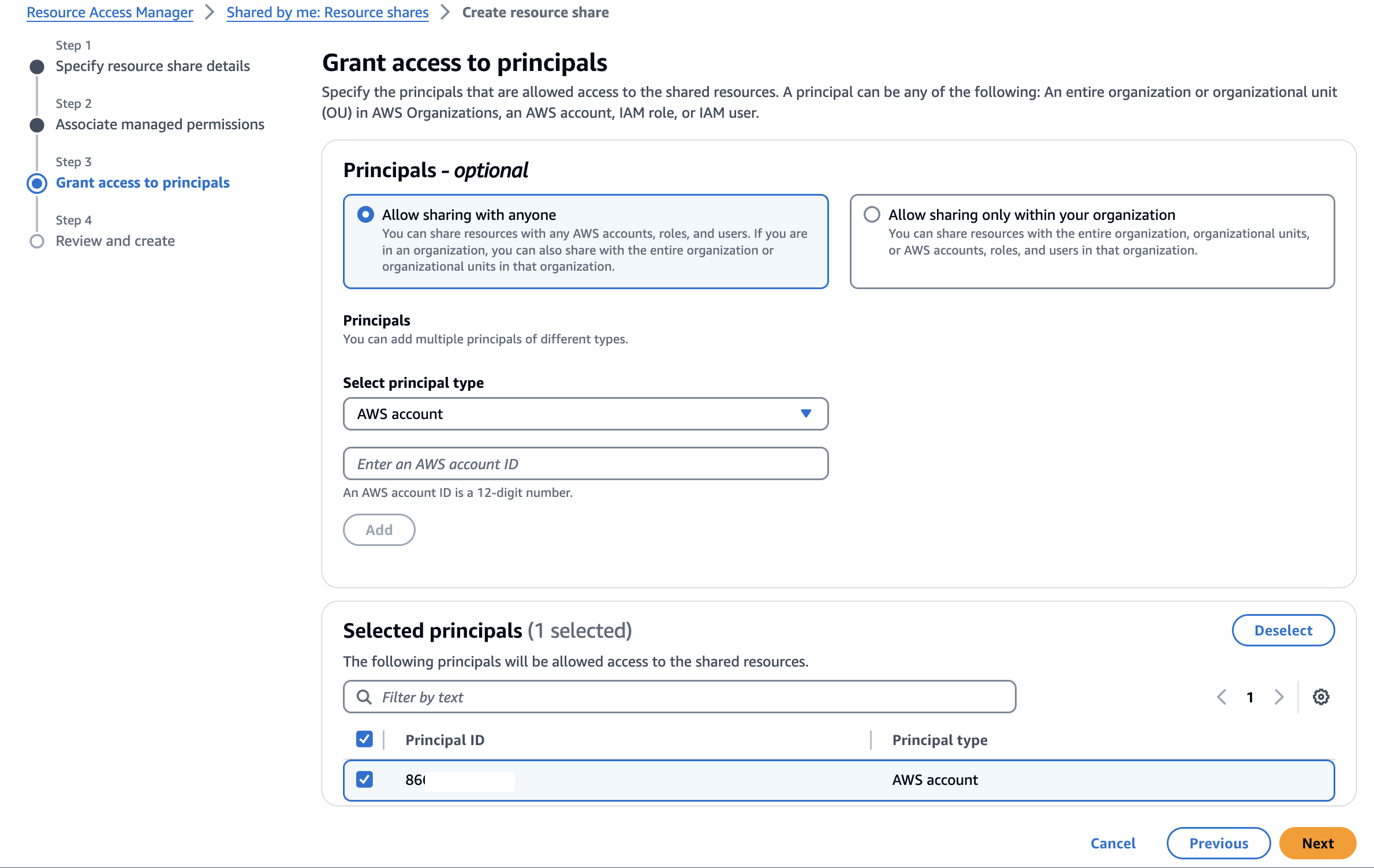

On the next page, I choose Allow sharing with anyone, enter my other account’s ID and then choose Add. After that, I choose that account ID in the Selected principals section and choose Next.

In the Review and create page, I choose Create resource share. The resource share is successfully created.

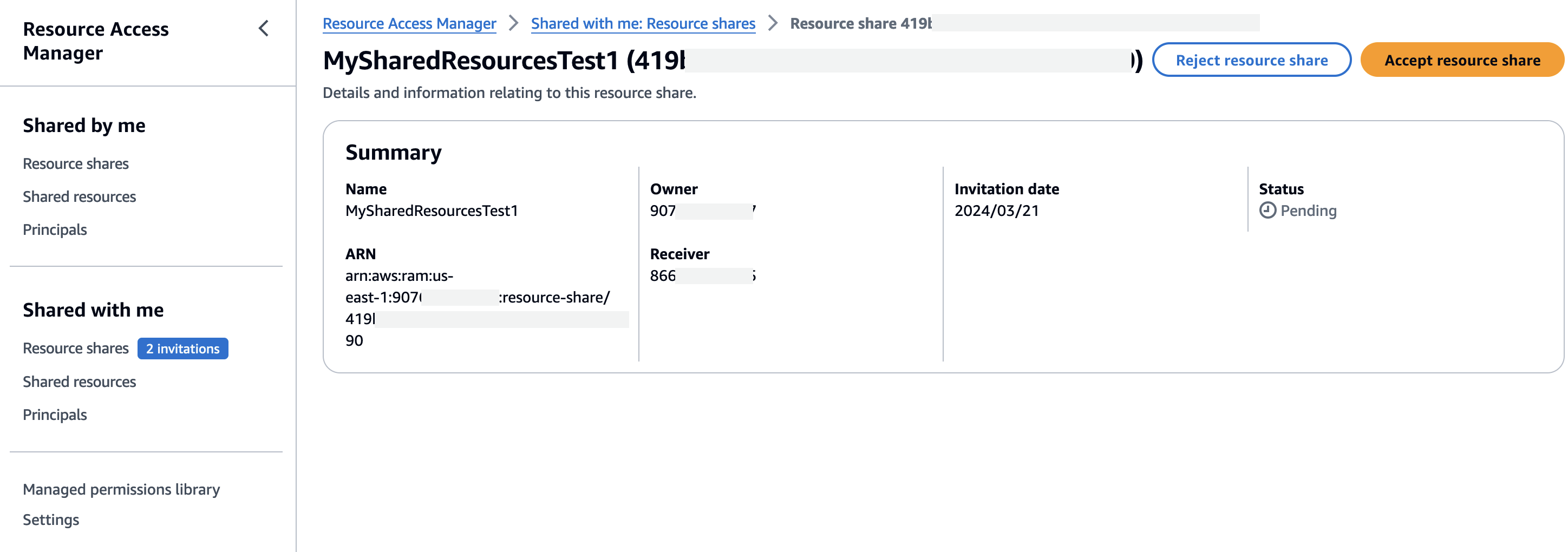

Now, I switch to my other account that I share my Profile with and go to the RAM console. In the navigation menu, I go to the Resource shares and choose the resource name I created in the first account. I choose Accept resource share to accept the invitation.

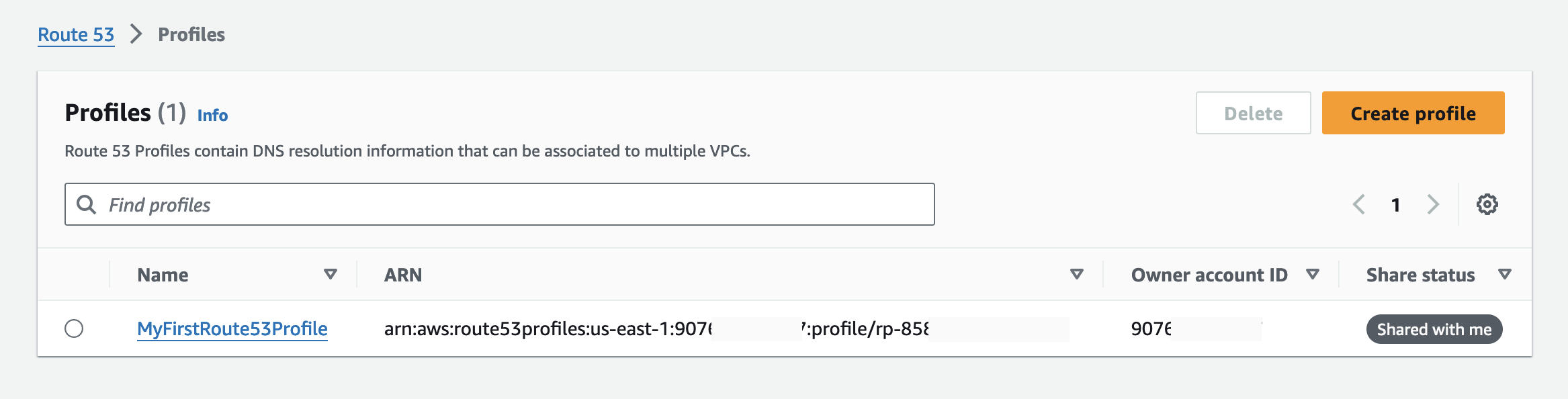

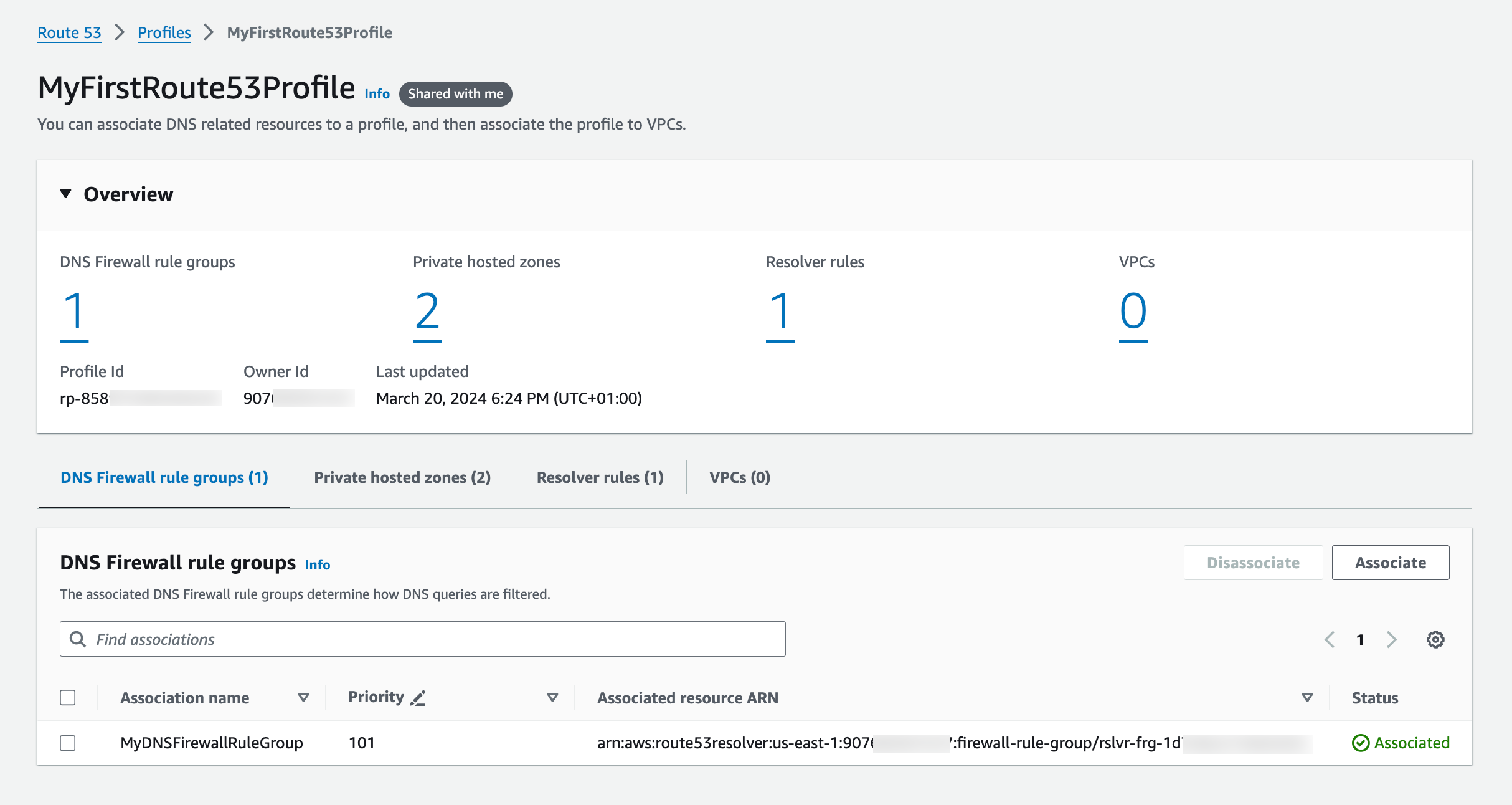

That’s it! Now, I go to my Route 53 Profiles page and I choose the Profile shared with me.

I have access to the shared Profile’s DNS Firewall rule groups, private hosted zones, and Resolver rules. I can associate this account’s VPCs to this Profile. I am not able to edit or delete any resources. Profiles are Regional resources and cannot be shared across Regions.

Available now

You can easily get started with Route 53 Profiles using the AWS Management Console, Route 53 API, AWS Command Line Interface (AWS CLI), AWS CloudFormation, and AWS SDKs.

Route 53 Profiles will be available in all AWS Regions, except in Canada West (Calgary), the AWS GovCloud (US) Regions and the Amazon Web Services China Regions.

For more details about the pricing, visit the Route 53 pricing page.

Get started with Profiles today and please let us know your feedback either through your usual AWS Support contacts or the AWS re:Post for Amazon Route 53.

It appears that the number of industrial devices accessible from the internet has risen by 30 thousand over the past three years, (Mon, Apr 22nd)

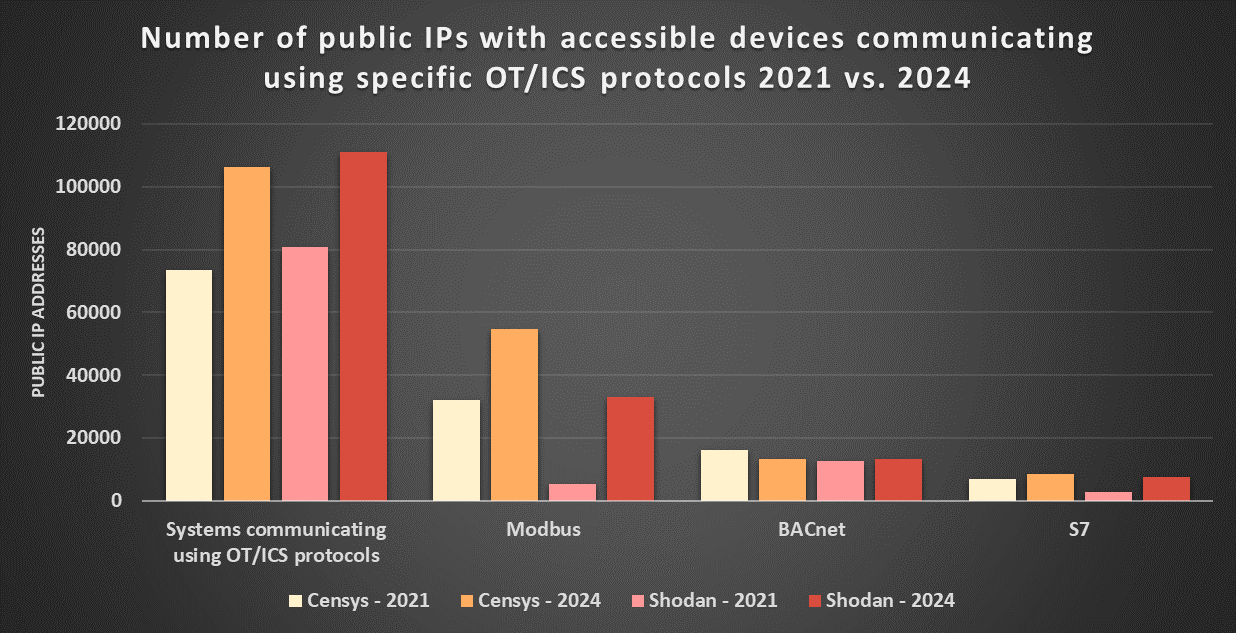

It has been nearly three years since we last looked at the number of industrial devices (or, rather, devices that communicate with common OT protocols, such as Modbus/TCP, BACnet, etc.) that are accessible from the internet[1]. Back in May of 2021, I wrote a slightly optimistic diary mentioning that there were probably somewhere between 74.2 thousand (according to Censys) and 80.8 thousand (according to Shodan) such systems, and that based on long-term data from Shodan, it appeared as though there was a downward trend in the number of these systems.

Given that a few months ago a series of incidents related to internet-exposed PLCs with default passwords was reported[2], and CISA has been releasing more ICS-related advisories than any other kind for a while now[3], I thought it might be a good time to go over the current numbers and see how the situation has changed over the past 35 months.

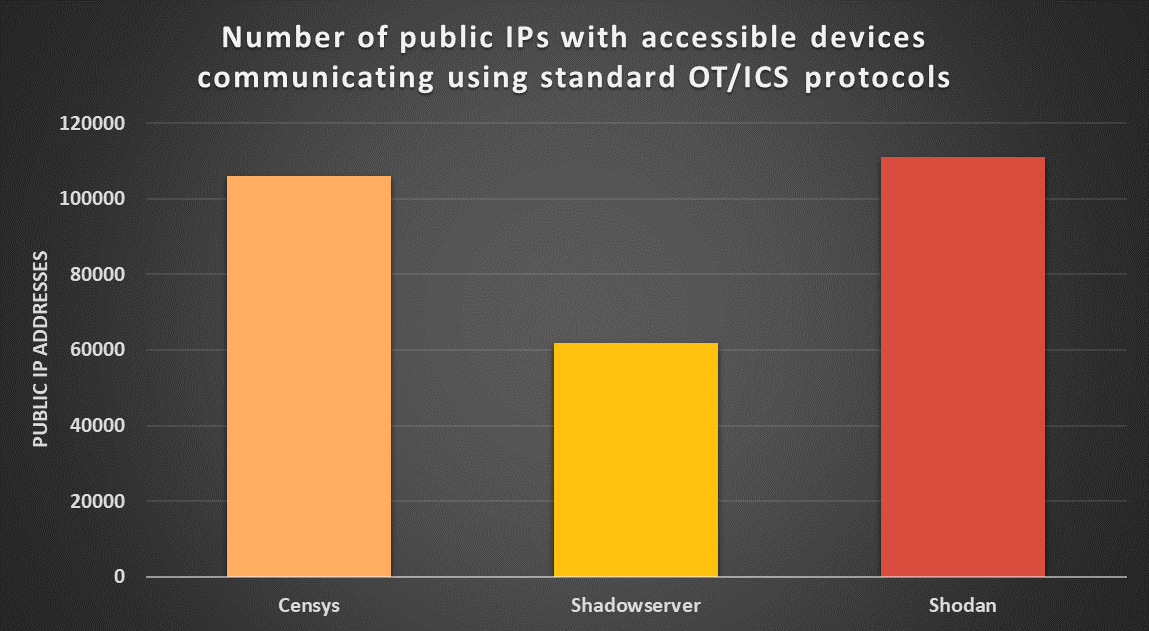

At first glance, the current number of ICS-like devices accessible from the internet would seem to be somewhere between 61.7 thousand (the number of “ICS” devices detected by Shadowserver[4]) and 237.2 thousand (the number of “ICS” devices detected by Censys[5]), with Shodan reporting an in-between number of 111.1 thousand[6]. It should be noted, though, that while none of these services necessarily detects all OT devices correctly, the number reported by Censys seems to be significantly inflated because the service uses a fairly wide definition of what constitutes an “ICS system” and classifies as such even devices that do not communicate using any of the common industrial protocols. If we do a search limited only to devices that use one of the most common protocols that Censys can detect (e.g., Modbus, Fox, EtherNet/IP, BACnet, etc.), we get a much more believable and comparable number of 106.2 thousand[7].
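For readers who want to reproduce or adjust the protocol-limited Censys search, here is a small sketch that rebuilds the query behind reference [7] from a list of service names. The label and service names are taken from that reference URL; the function names `build_ics_query` and `search_url` are hypothetical helpers introduced for the example.

```python
from urllib.parse import quote_plus

# Service names as they appear in the query string of reference [7].
PROTOCOLS = ["MODBUS", "FOX", "BACNET", "EIP", "S7", "IEC60870_5_104"]


def build_ics_query(service_names):
    """Build a Censys search query: ICS label AND any of the given services."""
    by_service = " or ".join(
        f"services.service_name=`{name}`" for name in service_names
    )
    return f"(labels=`ics`) and ({by_service})"


def search_url(query):
    """Return a Censys Search URL using the same parameters as reference [7]."""
    base = (
        "https://search.censys.io/search?resource=hosts&sort=RELEVANCE"
        "&per_page=25&virtual_hosts=EXCLUDE&q="
    )
    return base + quote_plus(query)
```

Changing the `PROTOCOLS` list narrows or widens the search, which makes it easier to see how much a single protocol contributes to the total.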

It is therefore probable that the real number of internet-connected ICS systems is currently somewhere between 61 and 111 thousand, with data from Shodan and Censys both showing an increase of approximately 30 thousand from May 2021. It should be noted, though, that some of the detected devices are certain to be honeypots – of the 106.2 thousand systems reported by Censys, 307 devices were explicitly classified as such[8]. However, it is likely that the real number of honeypots among detected systems is significantly higher.
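The "approximately 30 thousand" figure can be checked with simple arithmetic on the numbers quoted in this diary. The sketch below assumes the May 2021 and current figures for each service are directly comparable, and it uses the protocol-limited Censys count of 106.2 thousand; the variable and function names are mine.

```python
# Device counts quoted in the text, in whole devices.
MAY_2021 = {"Shodan": 80_800, "Censys": 74_200}
APRIL_2024 = {"Shodan": 111_100, "Censys": 106_200}  # Censys: protocol-limited search


def increase(service):
    """Return (absolute increase, percentage increase) since May 2021."""
    before, after = MAY_2021[service], APRIL_2024[service]
    delta = after - before
    return delta, 100 * delta / before


for service in MAY_2021:
    delta, pct = increase(service)
    print(f"{service}: +{delta:,} devices ({pct:.1f}% over May 2021)")
```

This gives an increase of 30,300 devices for Shodan and 32,000 for Censys, consistent with the rounded figure above.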

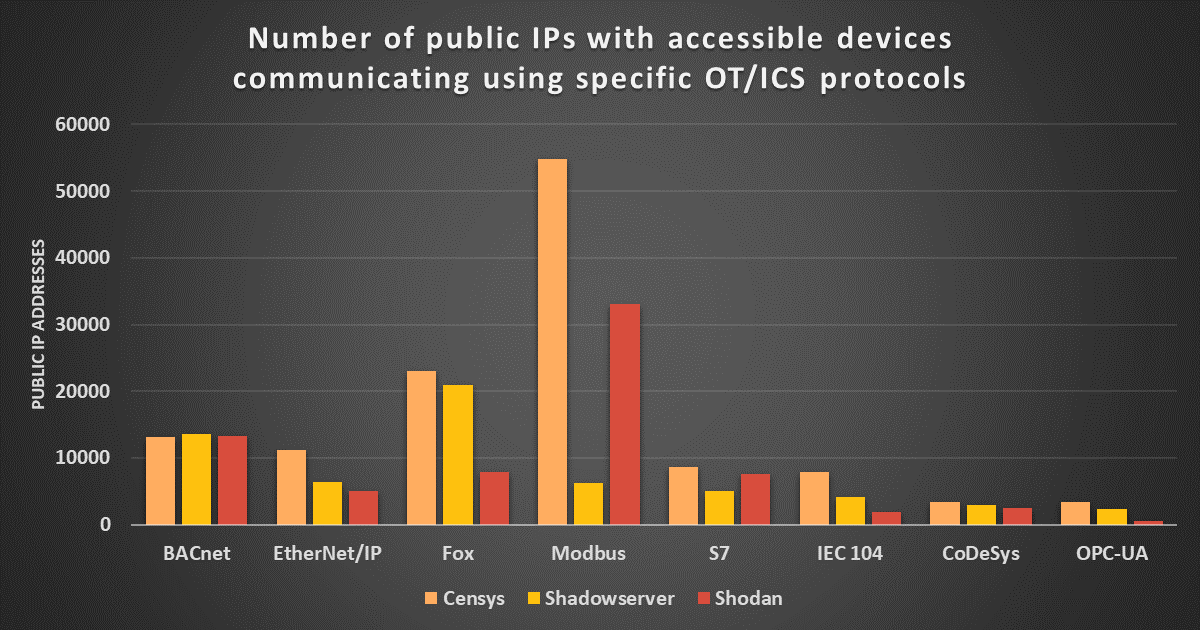

If we take a closer look at some of the most often detected industrial protocols, it quickly becomes obvious that – with the exception of BACnet – the data reported by all three services varies significantly between different protocols.

It also becomes apparent that the large difference between the number of ICS systems detected by Shodan and Censys (> 100k) and the much smaller number detected by Shadowserver (61k) is caused mainly by the significantly lower number of Modbus devices detected by Shadowserver. For comparison, at the time of writing, Censys detects 54,770 internet-exposed devices using this protocol, Shodan detects 33,036 such devices, and Shadowserver detects only about 6,300.
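A rough back-of-the-envelope check of how much of the gap Modbus explains, using only the figures quoted in this diary. It is a sketch under loose assumptions: the totals are rounded, the Shadowserver Modbus count is approximate, and the three services do not define "ICS device" identically. The names `TOTALS`, `MODBUS`, and `modbus_share_of_gap` are introduced here for illustration.

```python
# Totals and Modbus counts quoted in the text (Shadowserver Modbus is approximate).
TOTALS = {"Shodan": 111_100, "Censys": 106_200, "Shadowserver": 61_700}
MODBUS = {"Shodan": 33_036, "Censys": 54_770, "Shadowserver": 6_300}


def modbus_share_of_gap(service):
    """Compare a service with Shadowserver: total gap, Modbus gap, and their ratio."""
    total_gap = TOTALS[service] - TOTALS["Shadowserver"]
    modbus_gap = MODBUS[service] - MODBUS["Shadowserver"]
    return total_gap, modbus_gap, modbus_gap / total_gap


for service in ("Shodan", "Censys"):
    total_gap, modbus_gap, ratio = modbus_share_of_gap(service)
    print(f"{service}: total gap {total_gap:,}, Modbus gap {modbus_gap:,} ({ratio:.0%})")
```

On these numbers, Modbus accounts for a little over half of the Shodan gap and for slightly more than the whole Censys gap, which fits the observation that Modbus detection is the main source of the difference.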

While on the topic of Modbus, it is worth noting that the number of ICS-like devices that support this protocol detected by both Shodan and Censys has increased significantly since 2021, and this increase seems to account for most of the overall rise in the number of detected ICS devices.

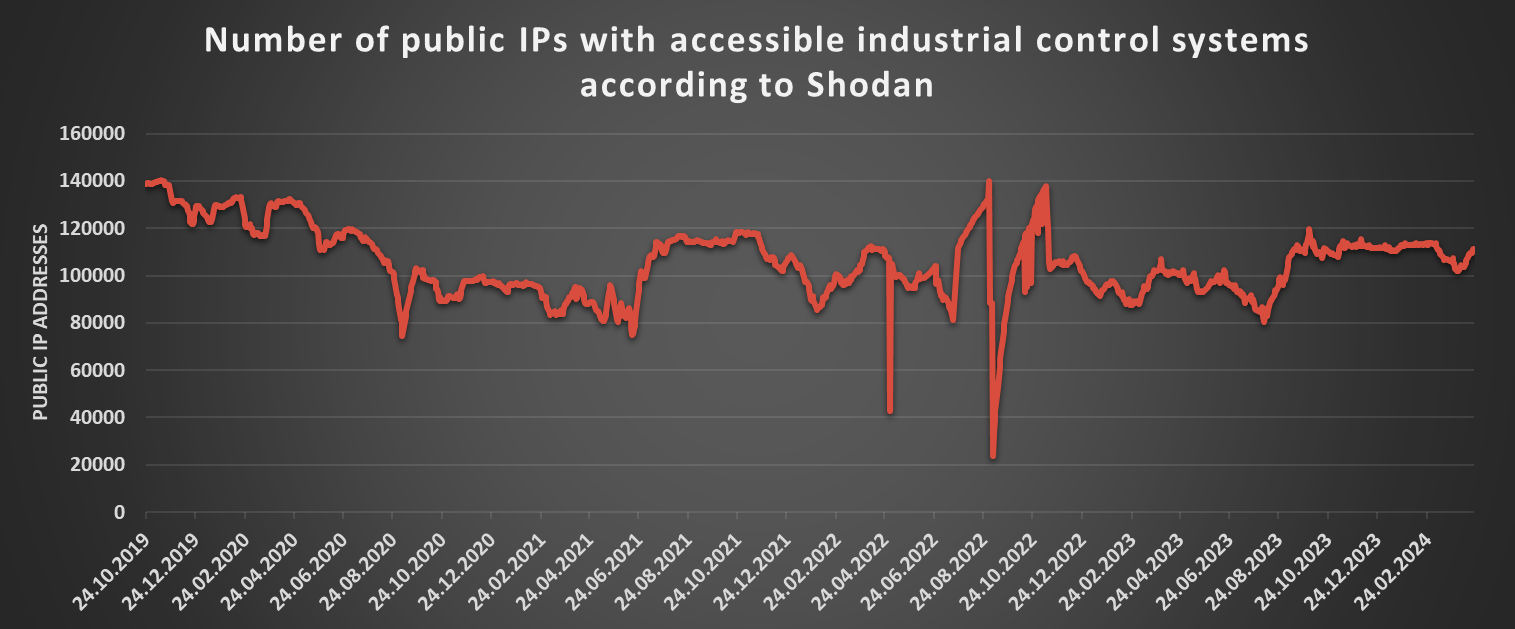

In fact, if we take a closer look at the changes over time, it is clear that the downward trend, which I mentioned in the 2021 diary, didn’t last too long. Looking at data gathered from Shodan using my TriOp tool[9], it seems that shortly after I published the diary, the number of detected ICS devices rose significantly, and it has oscillated around approximately 100 thousand ever since.

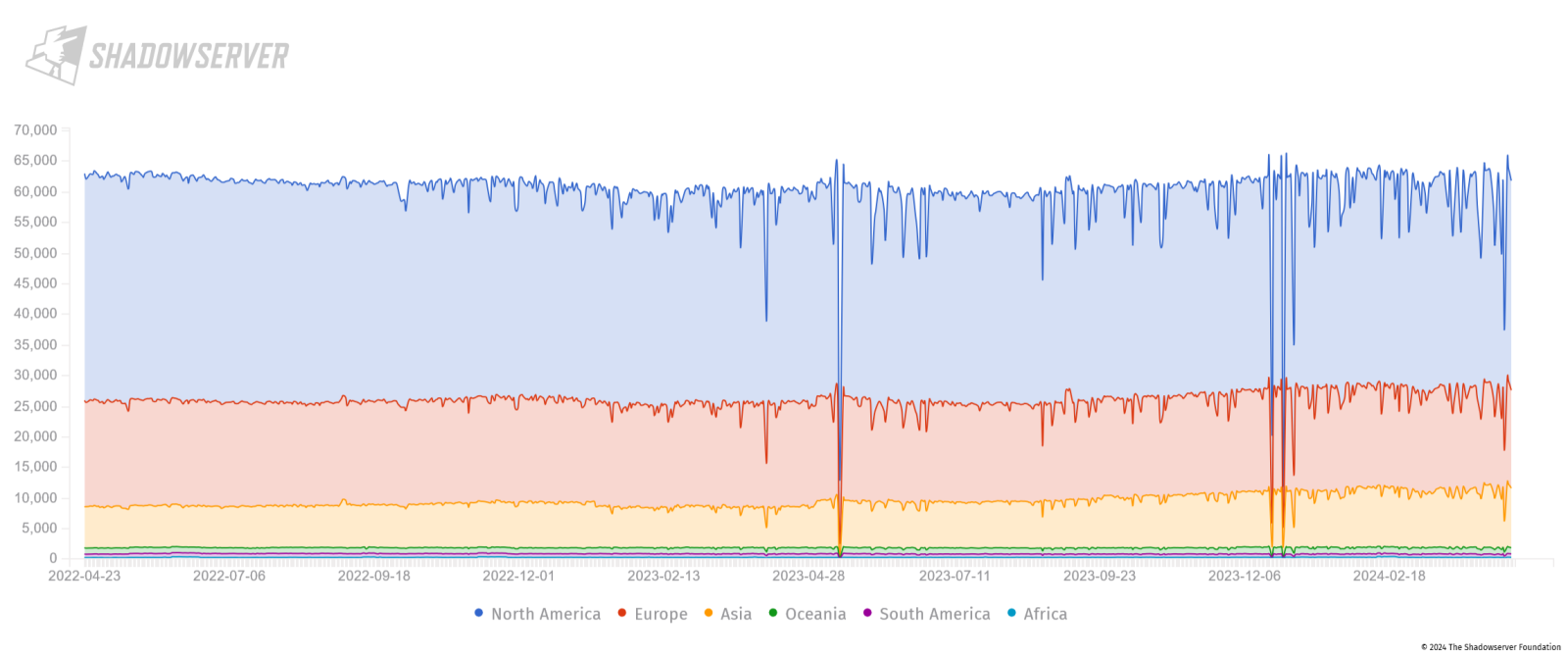

Although data provided by Shodan are hardly exact, the hypothesis that the number of internet-connected ICS devices has not fallen appreciably for at least the last two years seems to be supported by data from Shadowserver, as you may see in the following chart.

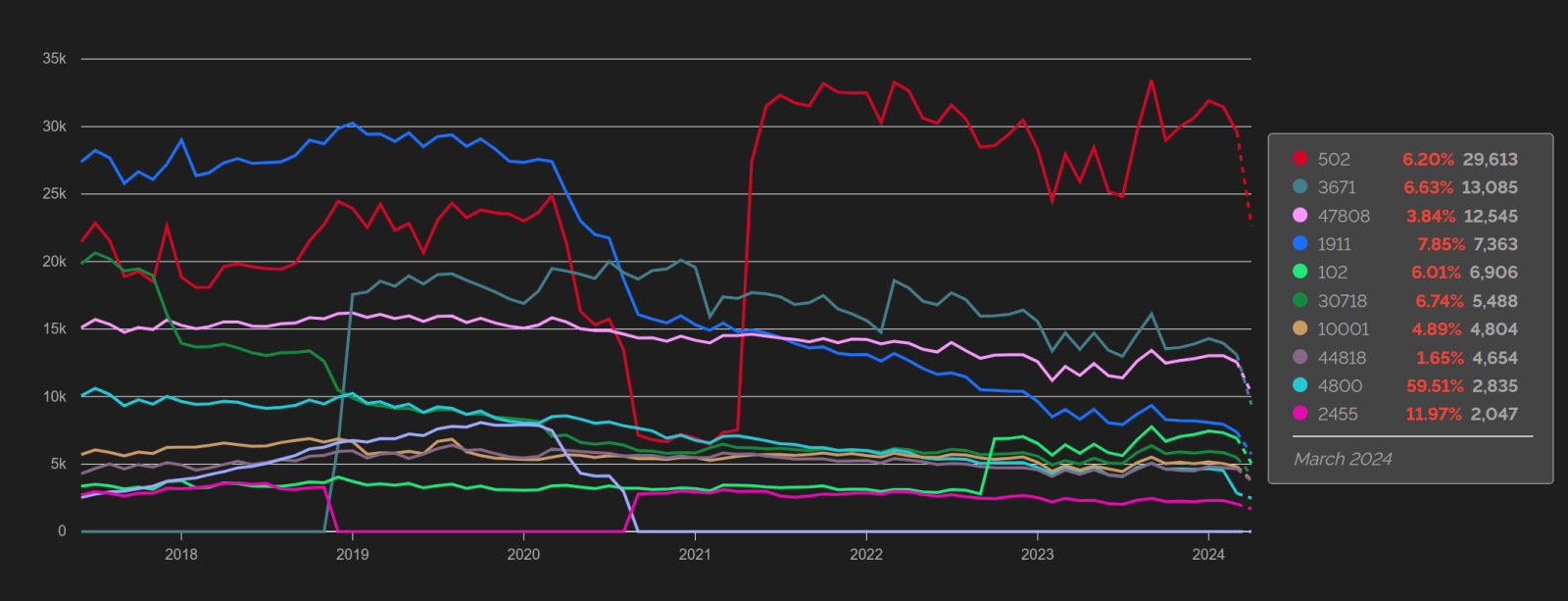

Nevertheless, even though there has probably not been any notable decrease in the number of internet-connected ICS devices, this doesn’t mean that there have been no changes in the types of devices and the protocols they use. As the following chart from Shodan shows, these aspects have changed quite a lot over time.

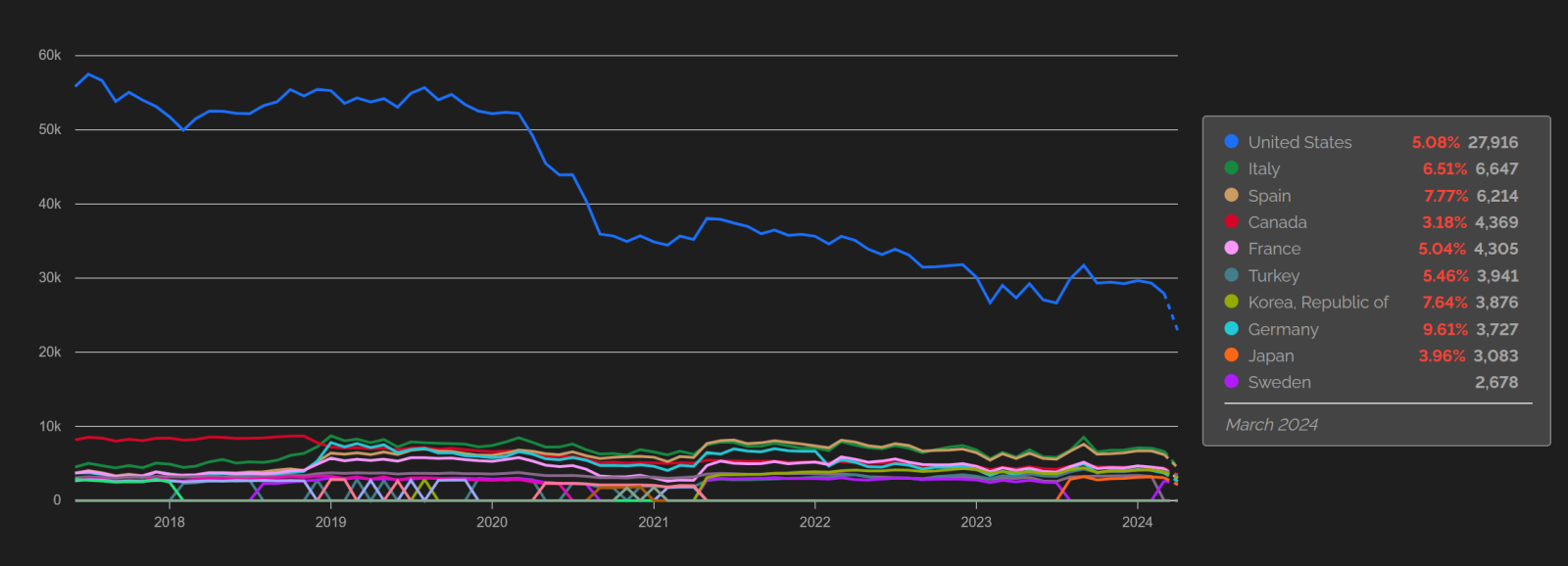

It is also worth mentioning that these charts and the aforementioned numbers describe the overall situation on the global internet, and therefore say nothing about the state of affairs in different countries around the world. This is important since although there may not have been any appreciable decrease in the number of internet-exposed ICS devices globally, it seems that the number of these devices has decreased significantly in multiple countries in the past few years – most notably in the United States, as you may see in the following chart from Shodan (though it is worth noting that Shadowserver saw a much smaller decrease for the US – from approximately 32 thousand ICS devices to the current 29.5 thousand devices[10]).

Unfortunately, the fact that in some countries, the number of internet-connected ICS devices has fallen also means that there are (most likely) multiple countries where it has just as significantly risen, given that we have not seen an overall, global decrease… In fact, there must be countries where it rose much more significantly, given the aforementioned overall increase of approximately 30 thousand ICS systems detected by both Shodan and Censys globally.

So, while I ended the 2021 diary by stating a hope that the situation might improve in time and that the number of industrial/OT systems accessible from the internet would decrease, I will end this one on a slightly more pessimistic note – hoping that the situation doesn’t worsen just as significantly in the next three years as it did in the past three…

[1] https://isc.sans.edu/diary/Number+of+industrial+control+systems+on+the+internet+is+lower+then+in+2020but+still+far+from+zero/27412

[2] https://therecord.media/cisa-water-utilities-unitronics-plc-vulnerability

[3] https://www.cisa.gov/news-events/cybersecurity-advisories?page=0

[4] https://dashboard.shadowserver.org/statistics/combined/time-series/?date_range=30&source=ics&style=stacked

[5] https://search.censys.io/search?resource=hosts&sort=RELEVANCE&per_page=25&virtual_hosts=EXCLUDE&q=labels%3D%60ics%60

[6] https://www.shodan.io/search?query=tag%3Aics

[7] https://search.censys.io/search?resource=hosts&sort=RELEVANCE&per_page=25&virtual_hosts=EXCLUDE&q=%28labels%3D%60ics%60%29+and+%28services.service_name%3D%60MODBUS%60+or+services.service_name%3D%60FOX%60+or+services.service_name%3D%60BACNET%60+or+services.service_name%3D%60EIP%60+or+services.service_name%3D%60S7%60+or+services.service_name%3D%60IEC60870_5_104%60%29

[8] https://search.censys.io/search?resource=hosts&virtual_hosts=EXCLUDE&q=%28%28labels%3D%60ics%60%29+and+%28services.service_name%3D%60MODBUS%60+or+services.service_name%3D%60FOX%60+or+services.service_name%3D%60BACNET%60+or+services.service_name%3D%60EIP%60+or+services.service_name%3D%60S7%60+or+services.service_name%3D%60IEC60870_5_104%60%29%29+and+labels%3D%60honeypot%60

[9] https://github.com/NettleSec/TriOp

[10] https://dashboard.shadowserver.org/statistics/combined/time-series/?date_range=other&d1=2022-04-23&d2=2024-04-22&source=ics&geo=US&style=stacked

———–

Jan Kopriva

@jk0pr | LinkedIn

Nettles Consulting

(c) SANS Internet Storm Center. https://isc.sans.edu Creative Commons Attribution-Noncommercial 3.0 United States License.

The CVE's They are A-Changing!, (Wed, Apr 17th)

The downloadable format of CVEs from MITRE will be changing in June 2024, so if you are using CVE downloads to populate your scanner, SIEM or to feed a SOC process, now would be a good time to look at that. If you are a vendor and use these downloads to populate your own feeds or product database, and you're not using the new format already, you might be behind the eight ball!

The old format (CVE JSON 4.0) is being replaced by CVE JSON 5.0, full details can be found here:

https://www.cve.org/Media/News/item/blog/2023/03/29/CVE-Downloads-in-JSON-5-Format

You can play with the actual files here: https://github.com/CVEProject/cvelistV5
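If your tooling still reads the old format, a small compatibility shim can ease the migration. The sketch below pulls the CVE ID and English description out of either a JSON 4.0 or a JSON 5.x record, based on my understanding of the two schemas (`CVE_data_meta` and `description.description_data` in 4.0; `cveMetadata` and `containers.cna.descriptions` in 5.x). The function name `summarize_cve` is hypothetical, and real records carry many more fields than shown here.

```python
from typing import Any, Dict


def summarize_cve(record: Dict[str, Any]) -> Dict[str, str]:
    """Extract the ID and English description from a CVE JSON 4.0 or 5.x record."""
    if "cveMetadata" in record:  # CVE JSON 5.x layout
        cve_id = record["cveMetadata"]["cveId"]
        descriptions = record.get("containers", {}).get("cna", {}).get("descriptions", [])
        fmt = "5.x"
    elif "CVE_data_meta" in record:  # CVE JSON 4.0 layout
        cve_id = record["CVE_data_meta"]["ID"]
        descriptions = record.get("description", {}).get("description_data", [])
        fmt = "4.0"
    else:
        raise ValueError("unrecognized CVE record layout")

    # 4.0 records typically use "eng" and 5.x records "en" as the language tag.
    text = next(
        (d.get("value", "") for d in descriptions
         if d.get("lang", "").lower().startswith("en")),
        "",
    )
    return {"id": cve_id, "format": fmt, "description": text}
```

Load a file from the cvelistV5 repository with `json.load` and pass the resulting dictionary to `summarize_cve`; the same call works on an archived 4.0 record.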

(ps the earworm is free!)

===============

Rob VandenBrink

rob@coherentsecurity.com


A Vuln is a Vuln, unless the CVE for it is after Feb 12, 2024, (Wed, Apr 17th)

The NVD (National Vulnerability Database) announcement page (https://nvd.nist.gov/general/news/nvd-program-transition-announcement) indicates a growing backlog of vulnerabilities that are causing delays in their process.

CVEs are issued by CNAs (CVE Numbering Authorities), and the "one version of the truth" for CVEs is at Mitre.org (the V5 list is here https://github.com/CVEProject/cvelistV5). There are roughly 100 (and growing) CNAs that have blocks of numbers and can issue CVEs on their own recognizance, along with MITRE, which is the "root CNA". The CVE process seems to be alive and well (thanks for that MITRE!)

In the past, NVD typically researched each CVE as it came in, and the CVE would become a posted vulnerability, enriched with additional fields and information (i.e., metadata), within hours(ish). This additional metadata makes for a MUCH more useful reference – the vuln now contains the original CVE, vendor links, possibly mitigations and workarounds, links to other references (CWEs for instance), sometimes PoCs. The vulnerability entry also contains the CPE information, which makes for a great index if you use this data in a scanner, IPS or SIEM (or anything else for that matter). For instance, compare the recent Palo Alto issue's CVE and NVD entries:

- https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2024-3400

- https://nvd.nist.gov/vuln/detail/CVE-2024-3400

This enrichment process has slowed significantly starting on Feb 12 – depending on the CVE, this process may be effectively stopped entirely. This means that if your scanner, SIEM or SOC process needs that additional metadata, a good chunk of the last 2 months' worth of vulnerabilities essentially have not yet happened as far as the metadata goes. You can see how this is a problem for lots of vendors that produce scanners, firewalls, Intrusion Prevention Systems and SIEMs – along with all of their customers (which is essentially all of us).
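If your pipeline depends on that metadata, it may help to detect un-enriched entries explicitly rather than silently ingesting them. The sketch below inspects an already-parsed response from the NVD CVE API 2.0 and reports whether CPE configuration data is present. The field names (`vulnerabilities`, `cve`, `vulnStatus`, `configurations`) reflect my understanding of that API's response format, and treating "has CPE data" as a proxy for "enriched" is an assumption; the function name `enrichment_summary` is hypothetical.

```python
from typing import Any, Dict


def enrichment_summary(nvd_response: Dict[str, Any]) -> Dict[str, Any]:
    """Summarize whether the first CVE in an NVD API 2.0 response has CPE data."""
    vulnerabilities = nvd_response.get("vulnerabilities", [])
    if not vulnerabilities:
        return {"id": None, "status": "not found", "has_cpe": False}

    cve = vulnerabilities[0].get("cve", {})
    return {
        "id": cve.get("id"),
        # e.g. "Analyzed" or "Awaiting Analysis"
        "status": cve.get("vulnStatus", "unknown"),
        # CPE match data is added during NVD analysis, so its absence
        # suggests the entry has not been enriched yet (assumption).
        "has_cpe": bool(cve.get("configurations")),
    }
```

A scanner feed could then route entries with `has_cpe` set to false to a fallback source, such as the CNA-supplied data in the CVE record itself, instead of dropping them.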

Feb 12 coincidentally is just ahead of the new FedRAMP requirements (Rev 5) being released (https://www.fedramp.gov/blog/2023-05-30-rev-5-baselines-have-been-approved-and-released/). Does this match-up mean that NIST perhaps had some advance notice, and they maybe have outsourcers that don't (yet) meet these FedRAMP requirements? Or is NIST itself not yet in compliance with those regulations? The timing doesn't match for devs running behind on the CVE format change – that's not until June. Lots of maybes, but nobody seems to know for sure what's going on here and why – if you have real information on this, please post in our comment form! Enquiring minds (really) need to know!

===============

Rob VandenBrink

rob@coherentsecurity.com
